Insights at Cloud Speed

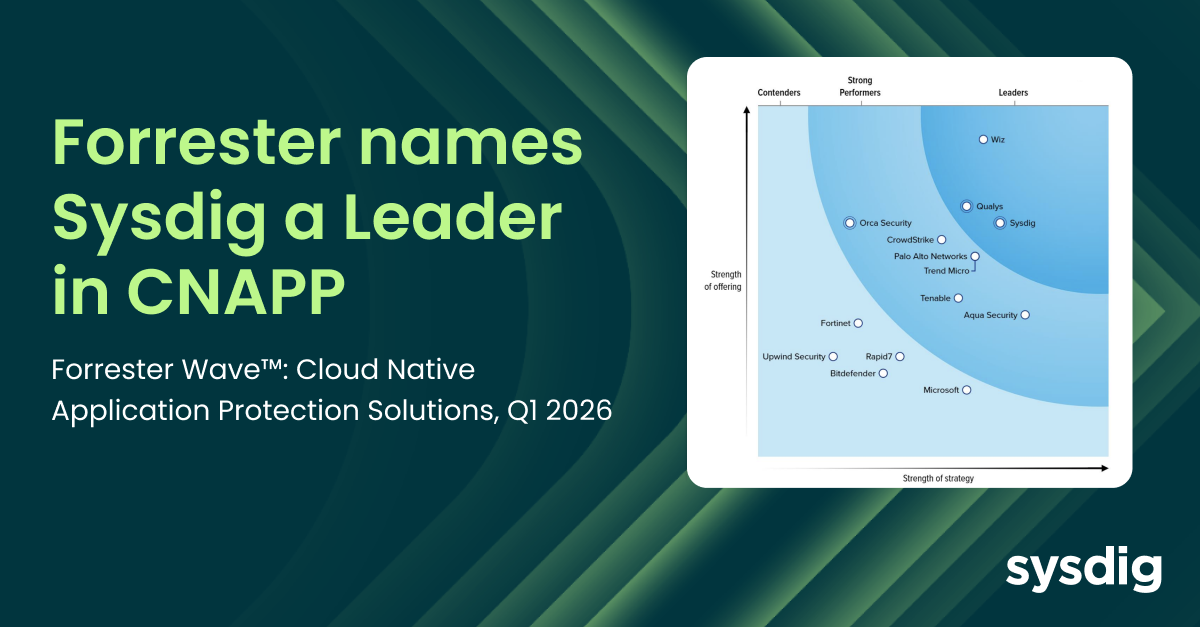

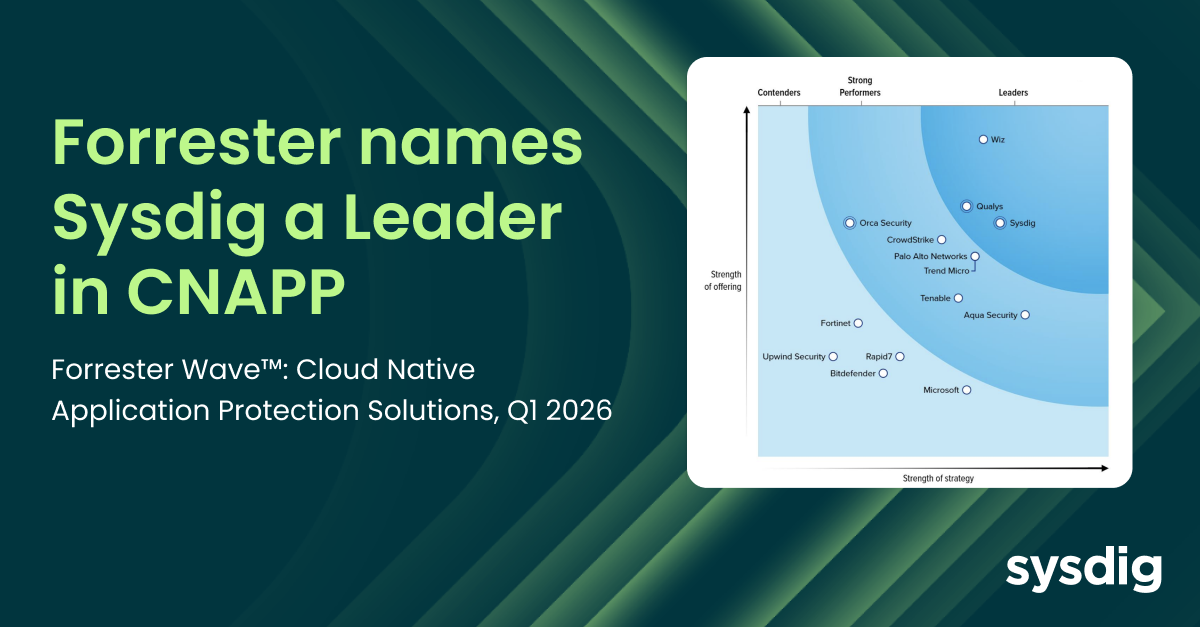

Sysdig named a Leader in the Forrester Wave™: Cloud Native Application Protection Solutions, Q1 2026

Matt Kim | February 17, 2026

AI-assisted cloud intrusion achieves admin access in 8 minutes

Alessandro Brucato | February 3, 2026

Securing GPU-accelerated AI workloads in Oracle Kubernetes Engine

Manuel Boira | February 2, 2026

Our customers have spoken: Sysdig rated a Strong Performer in Gartner® Voice of the Customer for Cloud-Native Application Protection Platforms

Marla Rosner | January 22, 2026

Security briefing: February 2026

March 4, 2026

Crystal Morin

Leveling up Kubernetes Posture: From baselines to risk-aware admission

February 26, 2026

Matt Brown

Cloud Security

Compliance

Eliminating runtime blind spots: How CleanStart and Sysdig build continuous trust across the container lifecycle

February 25, 2026

Taradutt Pant

Cloud Security

LLMjacking: From Emerging Threat to Black Market Reality

February 24, 2026

Crystal Morin

Threat Research

Security for AI

Real risks live at runtime: Why CISOs must care about deep telemetry in 2026

February 18, 2026

Matt Stamper

Cloud Security

Sysdig named a Leader in the Forrester Wave™: Cloud Native Application Protection Solutions, Q1 2026

February 17, 2026

Matt Kim

Sysdig Features