In all versions of Kubernetes, the API server allows an attacker who can create a ClusterIP service and set its spec.externalIPs field to intercept traffic to that IP address. Although the flaw was disclosed back in 2020, no fix is planned for the foreseeable future, so CVE-2020-8554 is just as relevant today as it was two years ago.

This is an update to the blog post "Detect CVE-2020-8554 using Falco", originally published December 23, 2020.

We have added two new sections: one on preventing Man-in-the-Middle eavesdropping with NetworkPolicy recommendations, and one on restricting the creation of external IPs on ClusterIP services with admission controllers.

Similarly, an attacker who can patch the status of a LoadBalancer service (a privileged operation that should not typically be granted to users) can set status.loadBalancer.ingress.ip to intercept traffic to the LoadBalancer IP address. The issue therefore affects LoadBalancer services as well as ClusterIP services.

This type of incident is known as a Man-in-the-Middle (MitM) attack. A MitM attack is a general term for when a perpetrator positions themselves in a conversation between a user and an application, either to eavesdrop or to impersonate one of the parties, making it appear as if a normal exchange of information is underway.

What makes CVE-2020-8554 concerning is that no patch is available, and no version of Kubernetes is protected from this interception technique by default. Multi-tenant clusters are the most exposed, especially those that grant tenants the ability to create and update services and pods.

If a potential attacker can already create or edit services and pods, they may be able to intercept traffic from other pods (or nodes) in the cluster. Unfortunately, this is a design flaw that cannot be fixed without major changes to the Kubernetes project, so we must look at ways to mitigate the risk posed by this MitM vulnerability (CVE-2020-8554).

One way to mitigate risk is by making sure that you are able to detect and prevent threats trying to exploit this vulnerability. This is where a cloud-native solution like Sysdig Secure helps out. Sysdig is capable of detecting elevated or excessive permissions in users, as well as restricting user access to unnecessary Kubernetes components or resources. By implementing behavioral detection at runtime, as well as shift-left security to your CI/CD pipeline, we should be able to prevent excessive exposure to our clusters.

Again, there is no patch for this CVE-2020-8554, and it can currently only be mitigated by restricting access to vulnerable features. Because a fix to Kubernetes could potentially break other components, the Kubernetes maintainers have opened a conversation about a longer-term fix or a built-in mitigation feature.

In this blog post, we discuss various approaches to detecting and preventing CVE-2020-8554, an unpatched MitM vulnerability, using Sysdig's cloud-native security solution.

Detecting CVE-2020-8554

External IP services are not widely used, so we recommend manually auditing any external IP usage. Detecting exploitation attempts of this vulnerability is critical to preventing or stopping an attack.
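As a starting point for that manual audit, the following Python sketch lists every Service that sets spec.externalIPs. It assumes the input has the shape of the JSON produced by kubectl get services --all-namespaces -o json (a List of Service objects); the function name and the sample services are hypothetical.

```python
def services_with_external_ips(service_list):
    """Return (namespace, name, type, externalIPs) for each Service that sets spec.externalIPs."""
    findings = []
    for svc in service_list.get("items", []):
        spec = svc.get("spec", {})
        external_ips = spec.get("externalIPs") or []
        if external_ips:
            meta = svc.get("metadata", {})
            findings.append((meta.get("namespace"), meta.get("name"), spec.get("type"), external_ips))
    return findings


# Hypothetical sample with the same shape as `kubectl get services -A -o json` output.
SAMPLE = {
    "kind": "List",
    "items": [
        {"metadata": {"name": "web", "namespace": "default"},
         "spec": {"type": "ClusterIP", "clusterIP": "10.96.0.10"}},
        {"metadata": {"name": "shady", "namespace": "tenant-a"},
         "spec": {"type": "ClusterIP", "externalIPs": ["8.8.8.8"]}},
    ],
}

if __name__ == "__main__":
    for ns, name, svc_type, ips in services_with_external_ips(SAMPLE):
        print(f"{ns}/{name} ({svc_type}) externalIPs={ips}")
```

To run it against a real cluster, replace SAMPLE with json.load(sys.stdin) and pipe the kubectl output into the script.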

We can do this in two ways through Sysdig Secure.

- We can use Falco Policies to detect/audit any patches to services.

- We can use the Network Topology map to see unusual ingress/egress traffic.

Falco

Whether using standalone, open source Falco, or Falco within Sysdig Secure, users can detect malicious activity at the host and the container level. Falco is the CNCF incubation project for runtime threat detection for containers and Kubernetes. Falco rules are built in YAML format, and can be customized for specific use-case scenarios. The following Falco rule detects the creation or modification of ClusterIP services with an external IP.

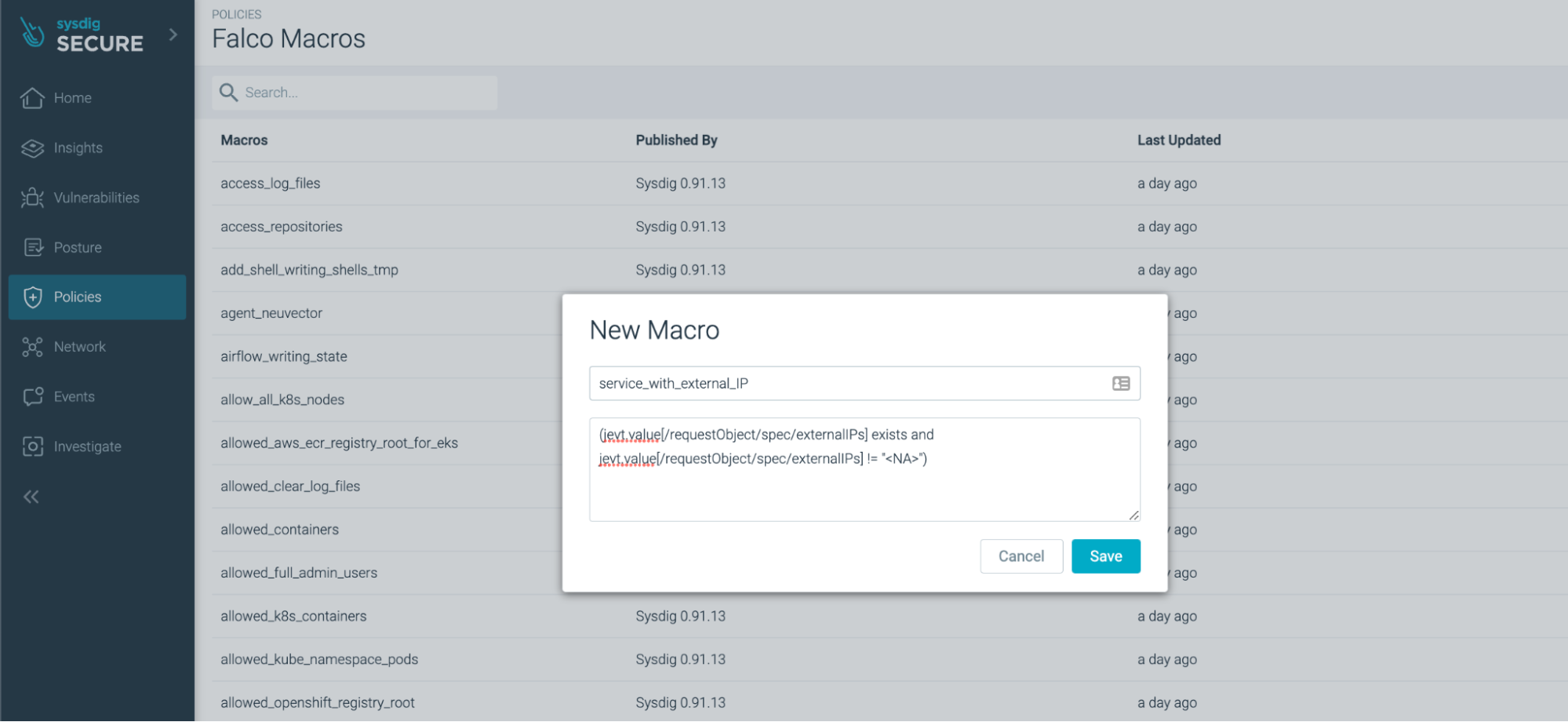

```yaml
- macro: service_with_external_IP
  condition: (jevt.value[/requestObject/spec/externalIPs] exists and jevt.value[/requestObject/spec/externalIPs] != "<NA>")
```

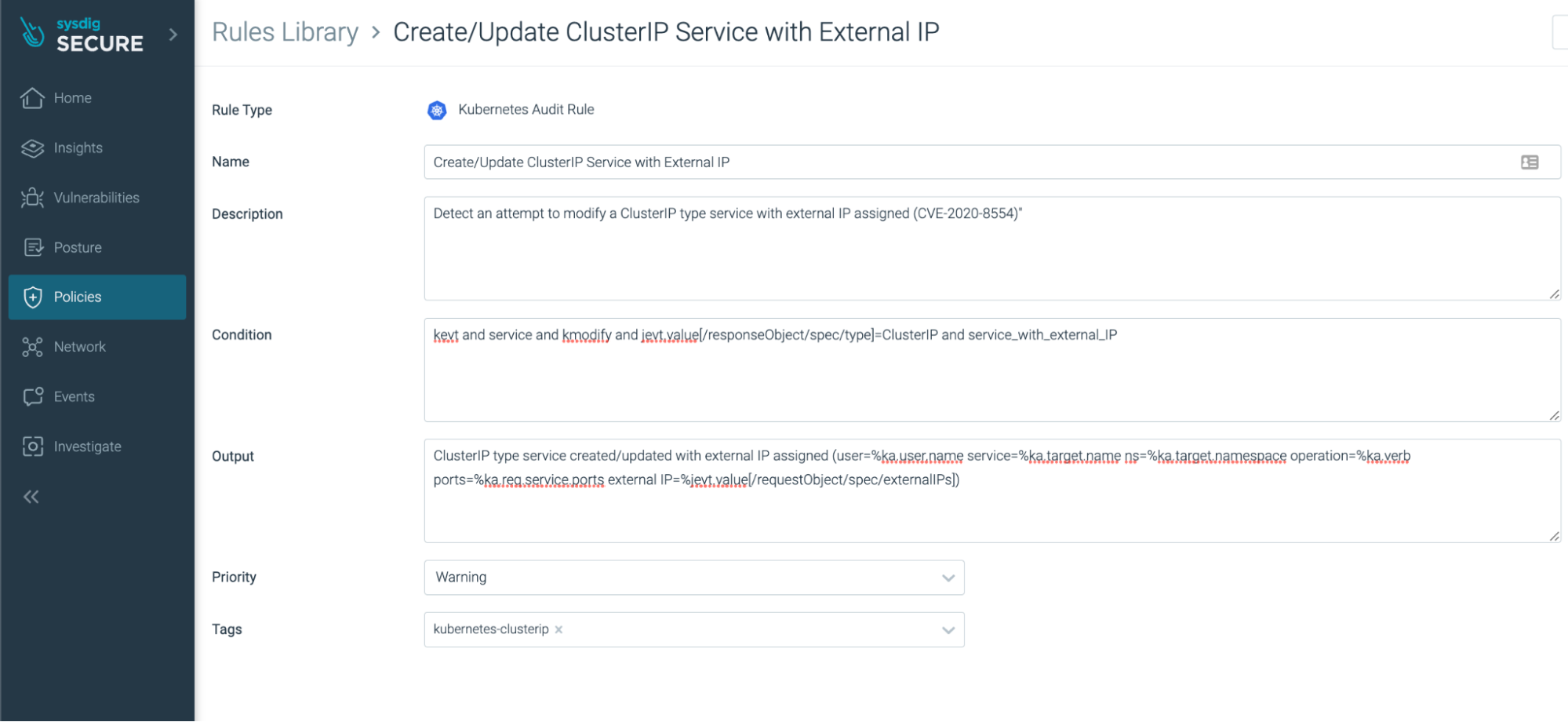

```yaml
- rule: Create/Update ClusterIP Service with External IP
  desc: Detect an attempt to modify a ClusterIP type service with external IP assigned (CVE-2020-8554)
  condition: kevt and service and kmodify and jevt.value[/responseObject/spec/type]=ClusterIP and service_with_external_IP
  output: ClusterIP type service created/updated with external IP assigned (user=%ka.user.name service=%ka.target.name ns=%ka.target.namespace operation=%ka.verb ports=%ka.req.service.ports external IP=%jevt.value[/requestObject/spec/externalIPs])
  priority: WARNING
  source: k8s_audit
```

Note: Falco streams data from the Kubernetes audit logs, as indicated by source: k8s_audit. Whenever a service, pod, namespace, or any other Kubernetes-native object is created, updated, or deleted, an entry is written to the Kubernetes audit logs. By plugging into this log source, we can see when a service with an external IP is created, in near real time.
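To see what the macro and rule actually test, here is a rough Python approximation of the condition applied to a single audit event. It is not how Falco evaluates rules: the helper names and sample event are hypothetical, the kevt, service, and kmodify macros are approximated as "stage is ResponseComplete", "resource is services", and "verb is create, update, or patch", and it assumes the audit policy logs services at the RequestResponse level so that requestObject and responseObject are present.

```python
def json_pointer(doc, pointer):
    """Resolve a simple JSON pointer like '/requestObject/spec/externalIPs' (no ~0/~1 escapes)."""
    node = doc
    for part in pointer.lstrip("/").split("/"):
        if isinstance(node, dict) and part in node:
            node = node[part]
        else:
            return None  # Falco would render a missing value as <NA>
    return node


def matches_external_ip_rule(event):
    """Approximate the rule's condition for one Kubernetes audit event (a dict)."""
    kevt = event.get("stage") == "ResponseComplete"
    service = event.get("objectRef", {}).get("resource") == "services"
    kmodify = event.get("verb") in ("create", "update", "patch")
    cluster_ip = json_pointer(event, "/responseObject/spec/type") == "ClusterIP"
    # service_with_external_IP macro: the field exists and is not empty or <NA>
    external_ips = json_pointer(event, "/requestObject/spec/externalIPs")
    service_with_external_ip = external_ips not in (None, [], "<NA>")
    return kevt and service and kmodify and cluster_ip and service_with_external_ip


# Hypothetical audit event for a tenant creating a ClusterIP service with an external IP.
EVENT = {
    "kind": "Event",
    "stage": "ResponseComplete",
    "verb": "create",
    "user": {"username": "tenant-a-user"},
    "objectRef": {"resource": "services", "namespace": "tenant-a", "name": "shady"},
    "requestObject": {"spec": {"type": "ClusterIP", "externalIPs": ["8.8.8.8"]}},
    "responseObject": {"spec": {"type": "ClusterIP", "externalIPs": ["8.8.8.8"]}},
}

if __name__ == "__main__":
    print(matches_external_ip_rule(EVENT))  # True
```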

Because this rule does not exist in Sysdig Secure by default, we first create a macro that checks whether a request sets external IPs.

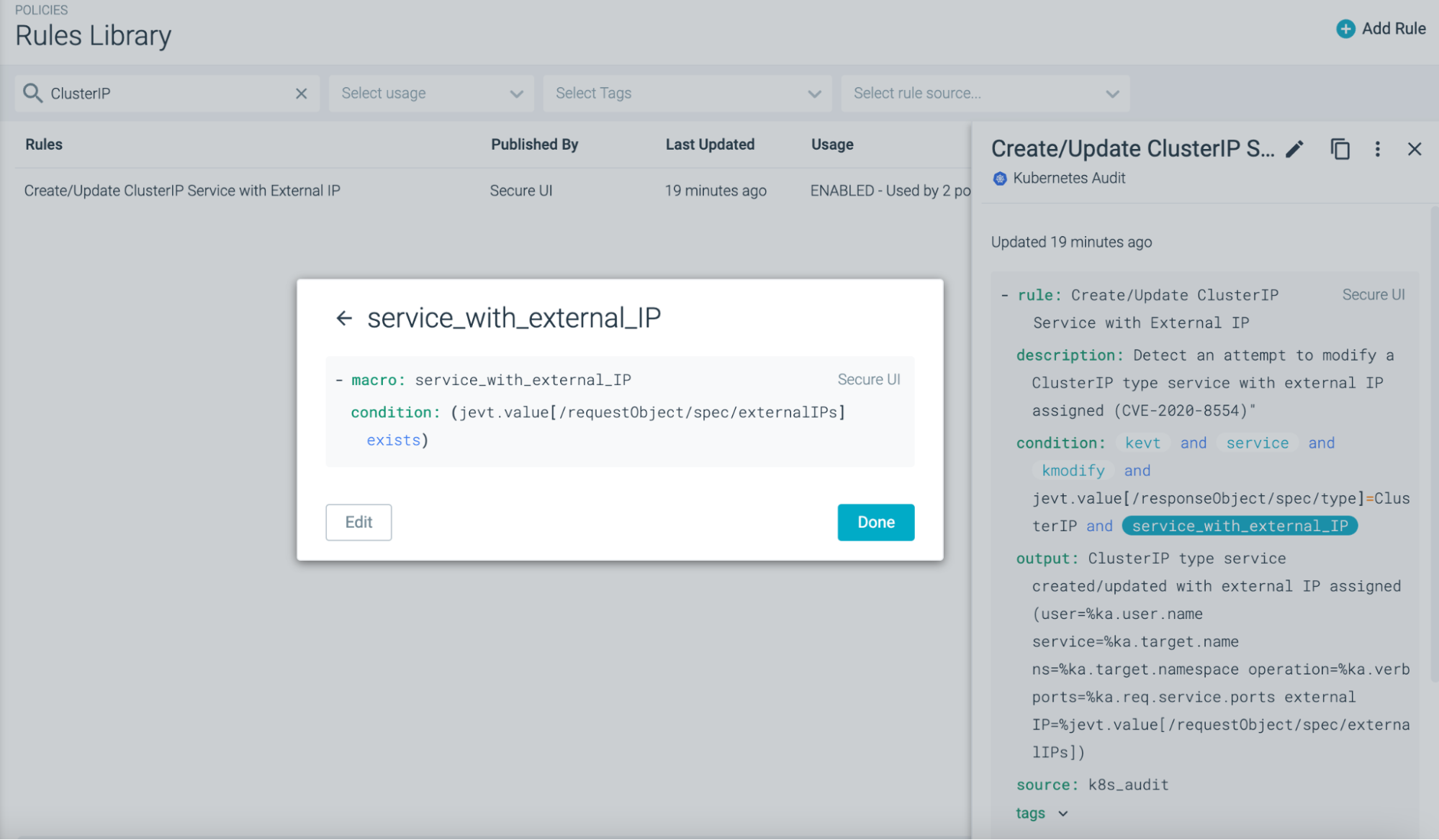

Once the macro is created, we can build a Falco rule whose condition combines multiple macros.

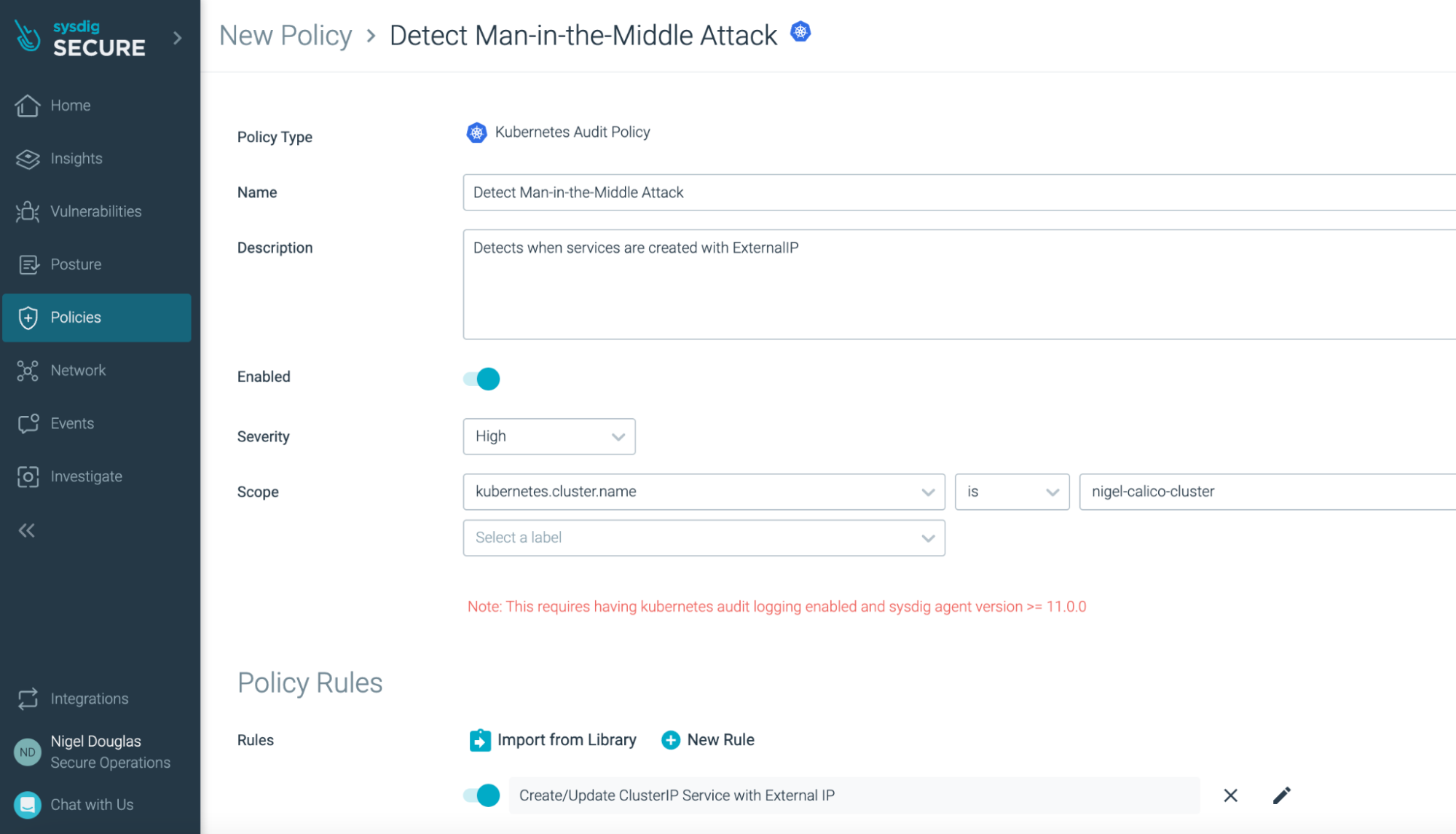

Creating the rule does not, by itself, apply it to any activity in Kubernetes: under 'Usage,' the rule is shown as 'NOT USED.' We need to create a Falco policy that defines what to do with the rule and where alerts are sent.

Creating a policy is straightforward, as you can see in the following screenshot. In our case, we will just add the one ClusterIP modification rule to our new policy.

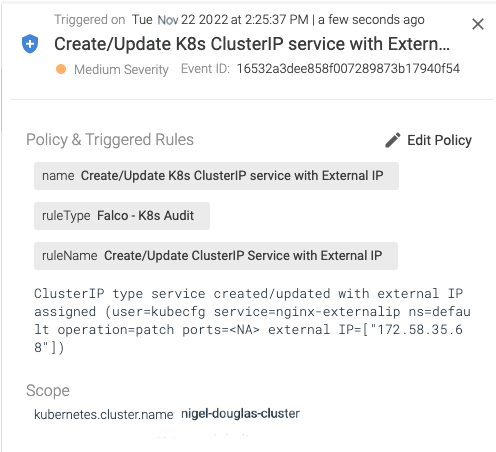

Regular users should not patch service status, so audit events for status patch requests made by an authenticated user (rather than a system component) may be suspicious. When someone creates or patches a service with external IP addresses, the security event output looks like this in Sysdig Secure:
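A rough Python sketch of that heuristic over parsed audit events is shown here. It flags writes to the services/status subresource (patch, plus update for completeness) whose username does not start with system:. Treating every system: identity as expected is an assumption you should tune, for example to the service account your cloud controller actually uses; the function name is hypothetical.

```python
EXPECTED_PREFIX = "system:"  # assumption: controllers and nodes authenticate as system:* identities


def is_suspicious_status_write(event):
    """Flag a write to a Service's status subresource made by a non-system identity."""
    ref = event.get("objectRef", {})
    username = event.get("user", {}).get("username", "")
    return (
        event.get("verb") in ("patch", "update")
        and ref.get("resource") == "services"
        and ref.get("subresource") == "status"
        and not username.startswith(EXPECTED_PREFIX)
    )


if __name__ == "__main__":
    events = [
        {"verb": "patch", "user": {"username": "alice"},
         "objectRef": {"resource": "services", "subresource": "status", "name": "lb"}},
        {"verb": "update", "user": {"username": "system:serviceaccount:kube-system:service-controller"},
         "objectRef": {"resource": "services", "subresource": "status", "name": "lb"}},
    ]
    for e in events:
        print(e["user"]["username"], is_suspicious_status_write(e))
```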

Sysdig Secure leverages the Falco security project under the hood to continuously detect configuration changes, threats, and anomalous behavior across containers, Kubernetes, and cloud. Since this flaw applies to multi-tenant environments, it makes sense to have these alerts centralized within a managed CDR solution like Sysdig Secure.

However, if you are using the above rule in standalone, open source Falco, it works the same way!

For more information on Kubernetes Audit Logs, check out the official Falco documentation.

Mitigations to CVE-2020-8554

Aside from detecting the MitM attack, we also need to prevent exploitation of CVE-2020-8554.

We will discuss admission controllers and the value they add to the mitigation strategy.

Validating webhooks

To restrict the use of external IPs, you can deploy a validating admission webhook. One such webhook, 'externalip-webhook', is provided by the Kubernetes security community and can prevent services from using arbitrary external IPs.

Cluster administrators can specify the CIDRs allowed to be used as external IPs with the allowed-external-ip-cidrs parameter. The webhook then only allows the creation of services that either do not request external IPs or whose external IPs fall within the ranges specified by the administrator.
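The allow-list check can be pictured with Python's ipaddress module: a service is admitted when it has no external IPs, or when every external IP falls inside one of the configured CIDRs. This is a sketch of the idea, not the webhook's actual code; the function name and the example ranges are hypothetical.

```python
import ipaddress


def external_ips_allowed(external_ips, allowed_cidrs):
    """Return True if every external IP lies inside at least one allowed CIDR."""
    networks = [ipaddress.ip_network(cidr) for cidr in allowed_cidrs]
    for ip in external_ips:
        addr = ipaddress.ip_address(ip)
        # An IPv4 address is never "in" an IPv6 network (and vice versa), so mixed lists are safe.
        if not any(addr in net for net in networks):
            return False
    return True  # also True for services without external IPs


if __name__ == "__main__":
    allowed = ["192.0.2.0/24", "198.51.100.0/24"]  # hypothetical allowed-external-ip-cidrs value
    print(external_ips_allowed(["192.0.2.10"], allowed))  # True
    print(external_ips_allowed(["8.8.8.8"], allowed))     # False
```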

The externalip-webhook can also restrict which users and groups may assign external IPs from the allowed ranges to services, using the allowed-usernames and allowed-groups parameters.
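How the username and group restriction combines with the CIDR check is an assumption here, so consult the externalip-webhook documentation for the exact semantics. The sketch below admits a request that sets external IPs only when no identity restriction is configured, or when the requesting user or one of its groups is on the allowed lists. The function name and identities are hypothetical.

```python
def identity_may_set_external_ips(username, groups, external_ips,
                                  allowed_usernames=(), allowed_groups=()):
    """Gate external IP assignment on the requester's identity (illustrative semantics)."""
    if not external_ips:
        return True  # services without external IPs are unaffected
    if not allowed_usernames and not allowed_groups:
        return True  # no identity restriction configured
    return username in allowed_usernames or bool(set(groups) & set(allowed_groups))


if __name__ == "__main__":
    print(identity_may_set_external_ips("alice", ["dev"], ["192.0.2.10"],
                                        allowed_groups=["net-admins"]))  # False
    print(identity_may_set_external_ips("bob", ["net-admins"], ["192.0.2.10"],
                                        allowed_groups=["net-admins"]))  # True
```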

Open Policy Agent

Sysdig Secure's CDR solution provides a managed offering of Open Policy Agent (OPA). Sysdig Secure leverages OPA to enforce consistent policies across IaC (Terraform, Helm, Kustomize) and Kubernetes environments, using a policy-as-code framework. External IPs can be restricted using OPA Gatekeeper.

The OPA Gatekeeper sample library includes a ConstraintTemplate and a Constraint for this vulnerability:

github.com/open-policy-agent/gatekeeper-library/blob/master/library/general/externalip/template.yaml

The ConstraintTemplate's Rego treats any change to a Service whose external IPs are not all on the allowedIPs list as a violation.

```yaml
apiVersion: templates.gatekeeper.sh/v1
kind: ConstraintTemplate
metadata:
  name: k8sexternalips
  annotations:
    metadata.gatekeeper.sh/title: "External IPs"
    metadata.gatekeeper.sh/version: 1.0.0
    description: >-
      Restricts Service externalIPs to an allowed list of IP addresses.
spec:
  crd:
    spec:
      names:
        kind: K8sExternalIPs
      validation:
        # Schema for the constraint's parameters field
        openAPIV3Schema:
          type: object
          properties:
            allowedIPs:
              type: array
              description: "An allow-list of external IP addresses."
              items:
                type: string
  targets:
    - target: admission.k8s.gatekeeper.sh
      rego: |
        package k8sexternalips

        violation[{"msg": msg}] {
          input.review.kind.kind == "Service"
          input.review.kind.group == ""
          allowedIPs := {ip | ip := input.parameters.allowedIPs[_]}
          externalIPs := {ip | ip := input.review.object.spec.externalIPs[_]}
          forbiddenIPs := externalIPs - allowedIPs
          count(forbiddenIPs) > 0
          msg := sprintf("service has forbidden external IPs: %v", [forbiddenIPs])
        }
```

Sysdig Secure also lets DevOps teams shift security further left by scanning infrastructure-as-code source files before deployment. It detects runtime drift, prioritizes findings based on application context, and fixes issues directly at the source with a pull request.
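To make the violation rule in the ConstraintTemplate above concrete, here is a Python mirror of its logic, assuming the input shape the Rego uses (input.review.kind, input.review.object, input.parameters). It only illustrates the set difference; Gatekeeper evaluates the Rego itself, and the message formatting here differs slightly from how Rego renders a set.

```python
def violations(review, parameters):
    """Mirror the Rego: flag core-group Services whose externalIPs are not all allow-listed."""
    kind = review.get("kind", {})
    if kind.get("kind") != "Service" or kind.get("group") != "":
        return []
    allowed_ips = set(parameters.get("allowedIPs", []) or [])
    external_ips = set(review.get("object", {}).get("spec", {}).get("externalIPs", []) or [])
    forbidden_ips = external_ips - allowed_ips  # forbiddenIPs := externalIPs - allowedIPs
    if len(forbidden_ips) > 0:                  # count(forbiddenIPs) > 0
        return [{"msg": f"service has forbidden external IPs: {sorted(forbidden_ips)}"}]
    return []


if __name__ == "__main__":
    review = {
        "kind": {"kind": "Service", "group": ""},
        "object": {"spec": {"type": "ClusterIP", "externalIPs": ["203.0.113.0", "8.8.8.8"]}},
    }
    print(violations(review, {"allowedIPs": ["203.0.113.0"]}))
```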

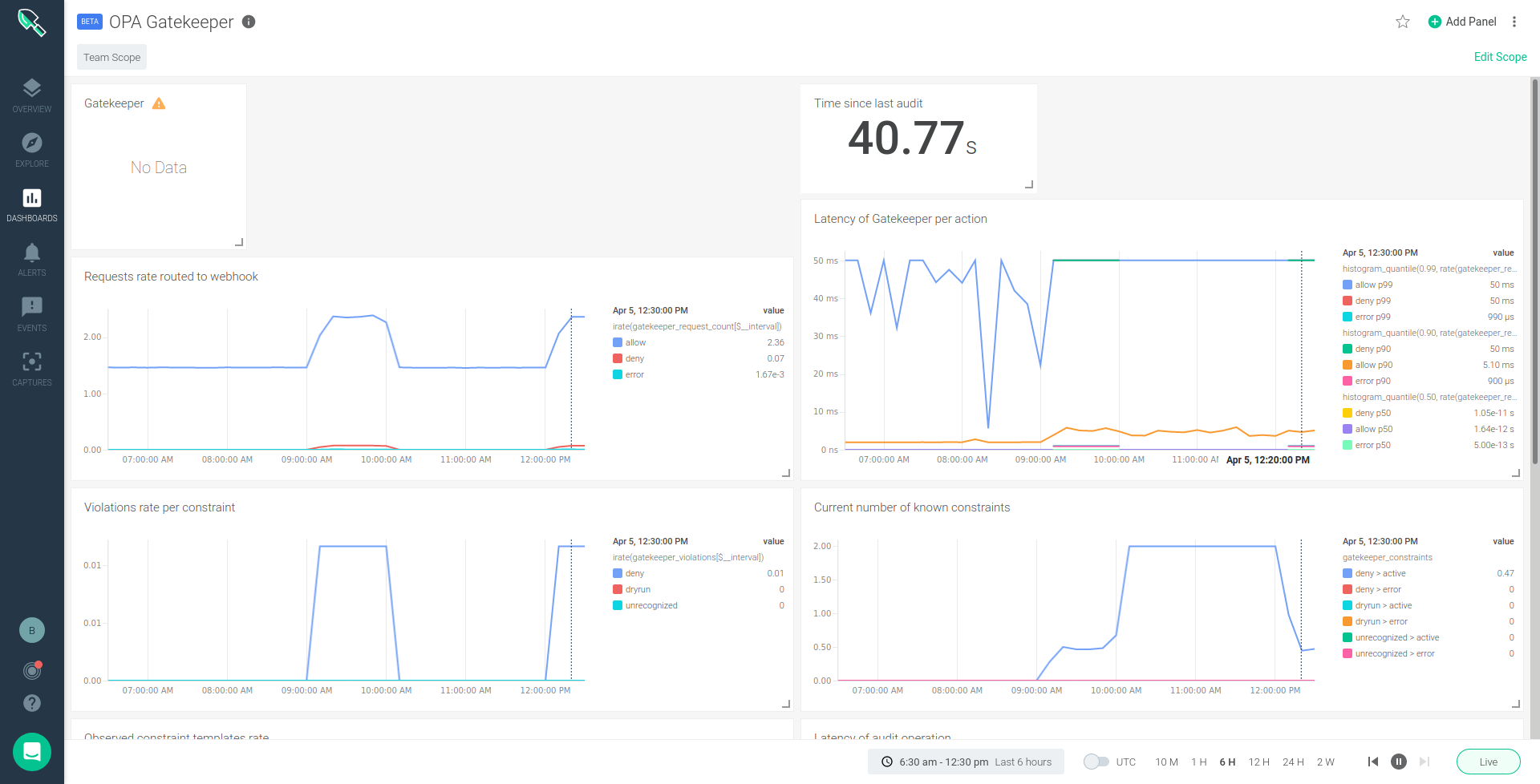

If you'd like to monitor OPA Gatekeeper to ensure it is working properly, Sysdig Monitor offers a managed version of Prometheus that scrapes metrics from OPA Gatekeeper as well as other third-party, open source tools. The OPA Gatekeeper dashboard shows the request rate, latency, violation rate per constraint, and more.

CVE-2020-8554 Runtime defense

As good as Falco and OPA are, both rely on custom configurations, and there is a realistic risk of false positives whenever a DevOps engineer legitimately creates a service with an external IP. Security teams need to audit the Falco alerts to confirm that each service with an external IP was created for a legitimate reason. That's why we should also consider runtime controls. Since every cluster needs a CNI (Container Network Interface) plugin to function, we can use the CNI plugin's NetworkPolicy implementation to mitigate this type of MitM vulnerability at runtime.

It's important to note that if you create a service with an arbitrary external IP address, then traffic to that external IP from within the cluster will be routed to that service. This lets an attacker, with permission to create a service with an external IP, intercept traffic to any target IP.

The interception happens at the IP layer. Even if a NetworkPolicy implementation supports DNS-based policies, traffic is still intercepted once DNS resolves to the target IP. That's why the following runtime policies allow only the expected IP address connections and drop everything else, following a least-privilege design.
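The following Python toy model illustrates why DNS does not help: the lookup is keyed on the destination IP after name resolution, so any in-cluster service that claims that IP as an external IP captures the connection. It is a simplification of what kube-proxy programs into iptables or IPVS, and all service names, IPs, and the resolver table are hypothetical.

```python
# Hypothetical in-cluster state: an attacker-created ClusterIP service claims 203.0.113.50.
SERVICES = {
    "tenant-a/evil": {"externalIPs": ["203.0.113.50"], "endpoints": ["10.244.1.7"]},
    "default/web": {"externalIPs": [], "endpoints": ["10.244.2.3"]},
}
# Hypothetical DNS answers, e.g. from an upstream resolver.
DNS = {"api.partner.example": "203.0.113.50", "mirror.example": "198.51.100.1"}


def next_hop(dst_ip, services):
    """Return where a packet from a pod to dst_ip ends up, in this simplified model."""
    for name, svc in services.items():
        if dst_ip in svc["externalIPs"]:
            # kube-proxy DNATs the packet to a backend of the matching service
            # (real kube-proxy load-balances; taking the first endpoint keeps this simple).
            return ("service", name, svc["endpoints"][0])
    return ("direct", None, dst_ip)


if __name__ == "__main__":
    for host, ip in DNS.items():
        print(host, "->", next_hop(ip, SERVICES))
```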

CNI

Container Network Interface (CNI) plugins provide networking controls for a Kubernetes cluster. Project Calico is a widely used CNI plugin for Kubernetes. In addition to networking, Project Calico provides a NetworkPolicy implementation that can control the flow of traffic both before and after Destination Network Address Translation (DNAT). If traffic is hijacked by a MitM, pods can still only communicate over their approved ports and protocols.

Network policies for 'host endpoints' (applied to nodes) can be marked as pre-DNAT. A pre-DNAT policy may only have ingress rules, not egress rules. This suits our MitM use case, where the adversary is trying to hijack workload traffic from outside the cluster. Only if incoming traffic is allowed by the pre-DNAT ingress rules does standard connection tracking allow the return traffic.
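The pre-DNAT behavior described above can be modeled in a few lines of Python: an inbound packet on a host endpoint is checked against the allowed source networks before any destination translation, and only flows admitted on ingress get a connection-tracking entry that lets their replies back out. This is a conceptual sketch rather than Calico's dataplane; the class name is hypothetical, and the ranges are this section's example node and pod CIDRs written as network addresses (1.2.0.0/16 and 100.100.0.0/16).

```python
import ipaddress


class HostEndpointModel:
    """Toy model of a pre-DNAT ingress allow-list plus connection tracking."""

    def __init__(self, allowed_nets):
        self.allowed = [ipaddress.ip_network(net) for net in allowed_nets]
        self.conntrack = set()  # (src, dst) pairs admitted on ingress

    def ingress(self, src, dst):
        """Evaluate an inbound packet before DNAT; dst is still the original destination."""
        if any(ipaddress.ip_address(src) in net for net in self.allowed):
            self.conntrack.add((src, dst))
            return "allow"
        return "deny"  # everything else is denied, like a trailing Deny rule

    def reply(self, src, dst):
        """Return traffic is allowed only for flows that were admitted on ingress."""
        return "allow" if (dst, src) in self.conntrack else "deny"


if __name__ == "__main__":
    node = HostEndpointModel(["1.2.0.0/16", "100.100.0.0/16"])
    print(node.ingress("100.100.5.9", "1.2.0.10"), node.reply("1.2.0.10", "100.100.5.9"))
    print(node.ingress("203.0.113.9", "1.2.0.10"), node.reply("1.2.0.10", "203.0.113.9"))
```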

The following YAML manifest is for a GlobalNetworkPolicy that allows cluster ingress traffic from the nodes' IP CIDR range (1.2.0.0/16) and from the pod IP range assigned by Kubernetes (100.100.0.0/16), and denies all other ingress. It targets host endpoints that carry the k8s-node label (selector: has(k8s-node)). Setting preDNAT: true makes Calico evaluate the policy before DNAT, and Calico requires applyOnForward: true on pre-DNAT policies.

```yaml
apiVersion: projectcalico.org/v3
kind: GlobalNetworkPolicy
metadata:
  name: allow-cluster-internal-ingress-only
spec:
  order: 20
  preDNAT: true
  applyOnForward: true
  ingress:
    - action: Allow
      source:
        nets: [1.2.0.0/16, 100.100.0.0/16]
    - action: Deny
  selector: has(k8s-node)
```

The policy above prevents hijacking by an outside entity. Within the cluster, we can isolate connections further at runtime with namespace-scoped network policies. If the external IP service is created in the 'engineering' namespace, allow the port connections our services need, and then apply a default-deny policy at the end of the namespace's policy order to deny any other unexpected connections at runtime:

```yaml
apiVersion: projectcalico.org/v3
kind: NetworkPolicy
metadata:
  name: default-deny
  namespace: engineering
spec:
  order: 200
  selector: all()
  types:
    - Ingress
    - Egress
```

The default-deny policy above applies to every workload in the engineering namespace (selector: all()) at order: 200. Because Calico evaluates policies with lower order values first, allow-list policies with orders from 0 to 199 take precedence, and default-deny drops any traffic they do not explicitly allow. It makes sense to include default-deny policies in every namespace when adopting a zero trust network security architecture.
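A simplified Python model of how Calico orders policies within a tier shows why the allow-list must sit below order 200: policies are evaluated in ascending order, the first matching rule decides, and traffic that no rule matches is dropped at the end of the tier. Port-only matching, the policy names, and port 443 are simplifying assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Rule:
    action: str                       # "Allow" or "Deny"
    ports: frozenset = frozenset()    # empty set means "any port" (simplification)

    def matches(self, port):
        return not self.ports or port in self.ports


@dataclass
class Policy:
    name: str
    order: int
    rules: list = field(default_factory=list)


def evaluate(policies, port):
    """Return (action, deciding policy) for ingress traffic on the given port."""
    for policy in sorted(policies, key=lambda p: p.order):  # lower order is evaluated first
        for rule in policy.rules:
            if rule.matches(port):
                return rule.action, policy.name
    # default-deny has no rules, but because it selects every workload (all()),
    # unmatched traffic falls through to Calico's end-of-tier drop.
    return "Deny", "end-of-tier"


if __name__ == "__main__":
    engineering = [
        Policy("default-deny", 200),
        Policy("allow-https", 100, [Rule("Allow", frozenset({443}))]),  # hypothetical allow-list
    ]
    print(evaluate(engineering, 443))
    print(evaluate(engineering, 22))
```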

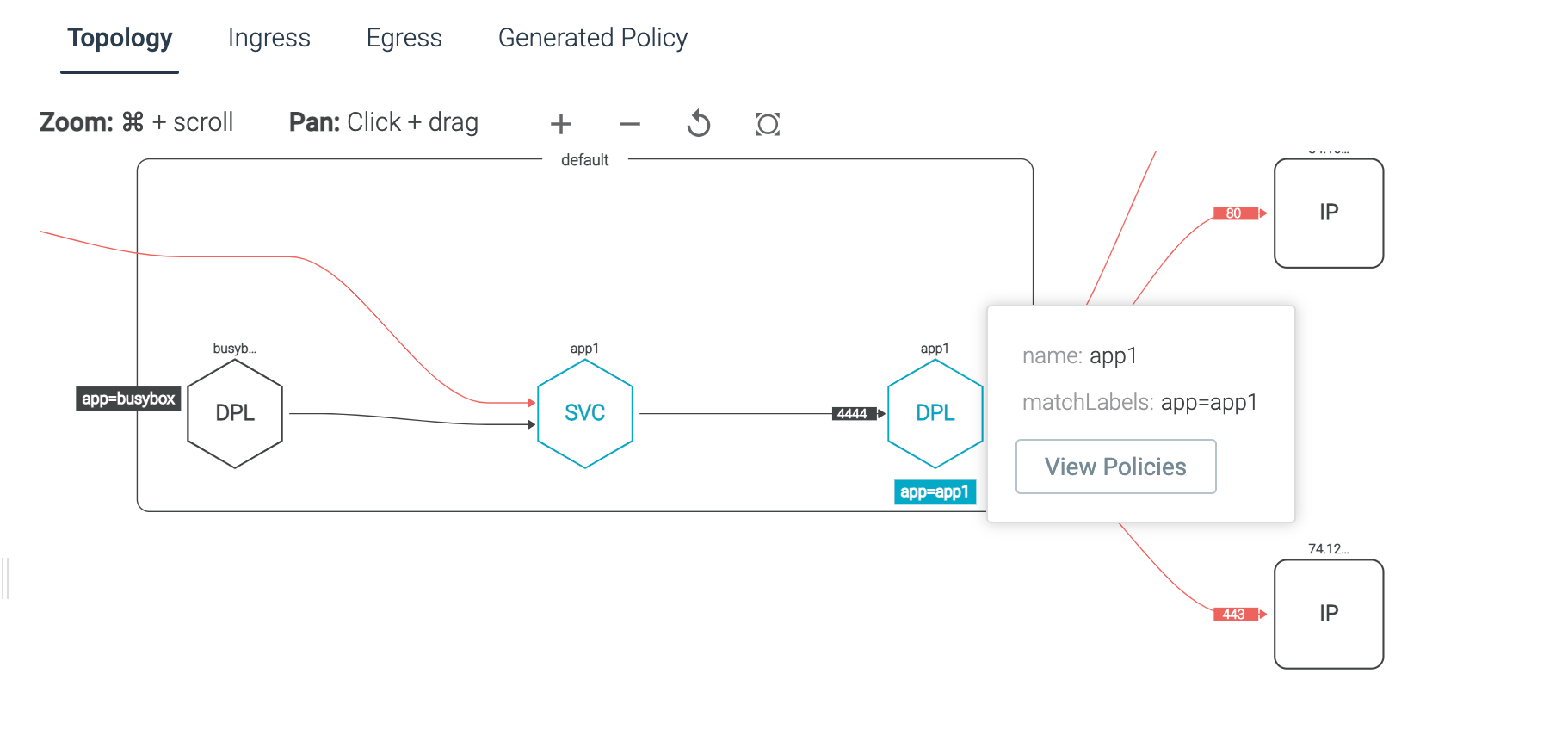

With Sysdig Secure's Network Topology view, users can visually validate if the recommended Network policy is desirable, or if something should be changed. The topology view is a high-level Kubernetes metadata view: pod owners, listening ports, services, and labels.

Communications that will not be allowed if you decide to apply this policy are color-coded red.

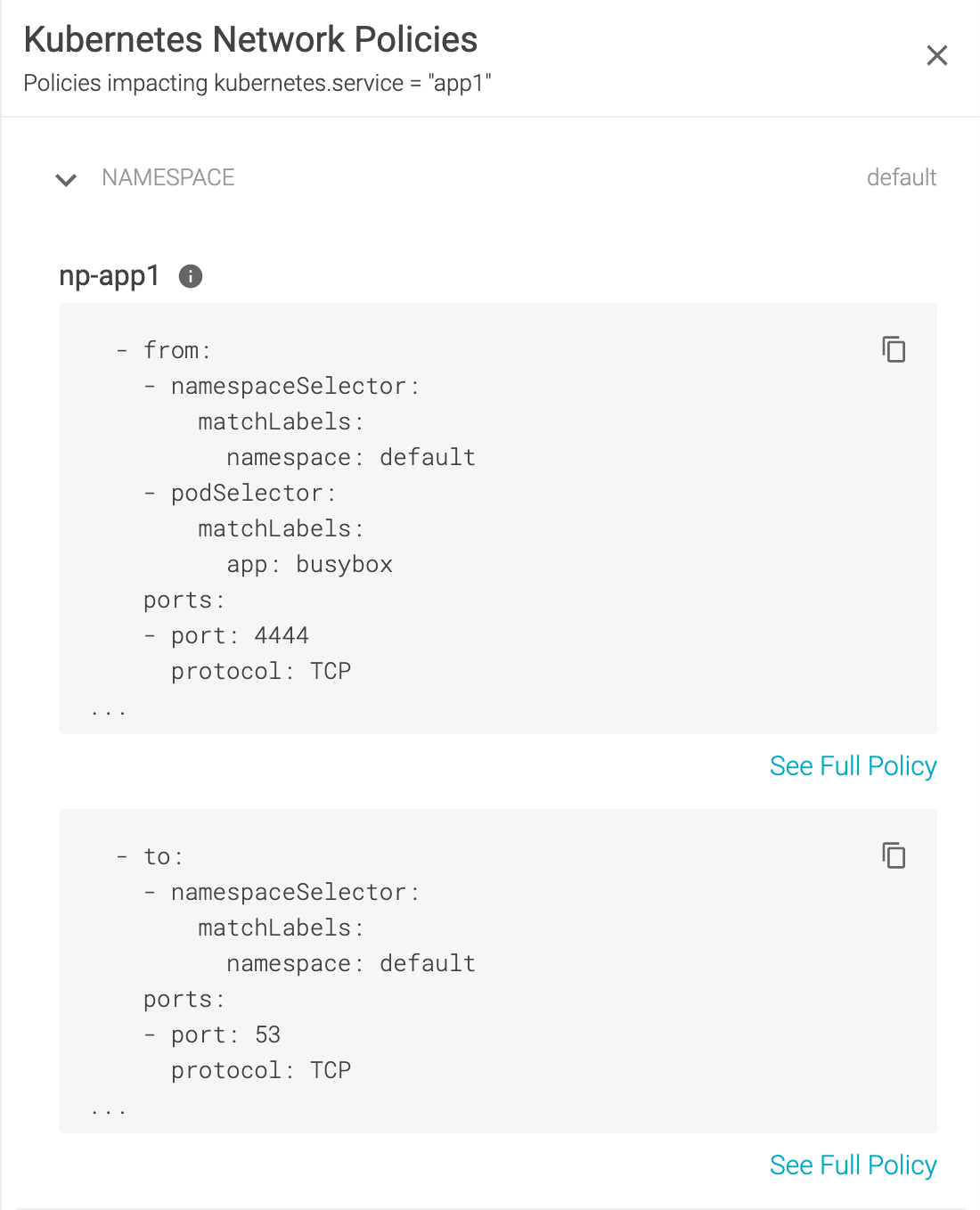

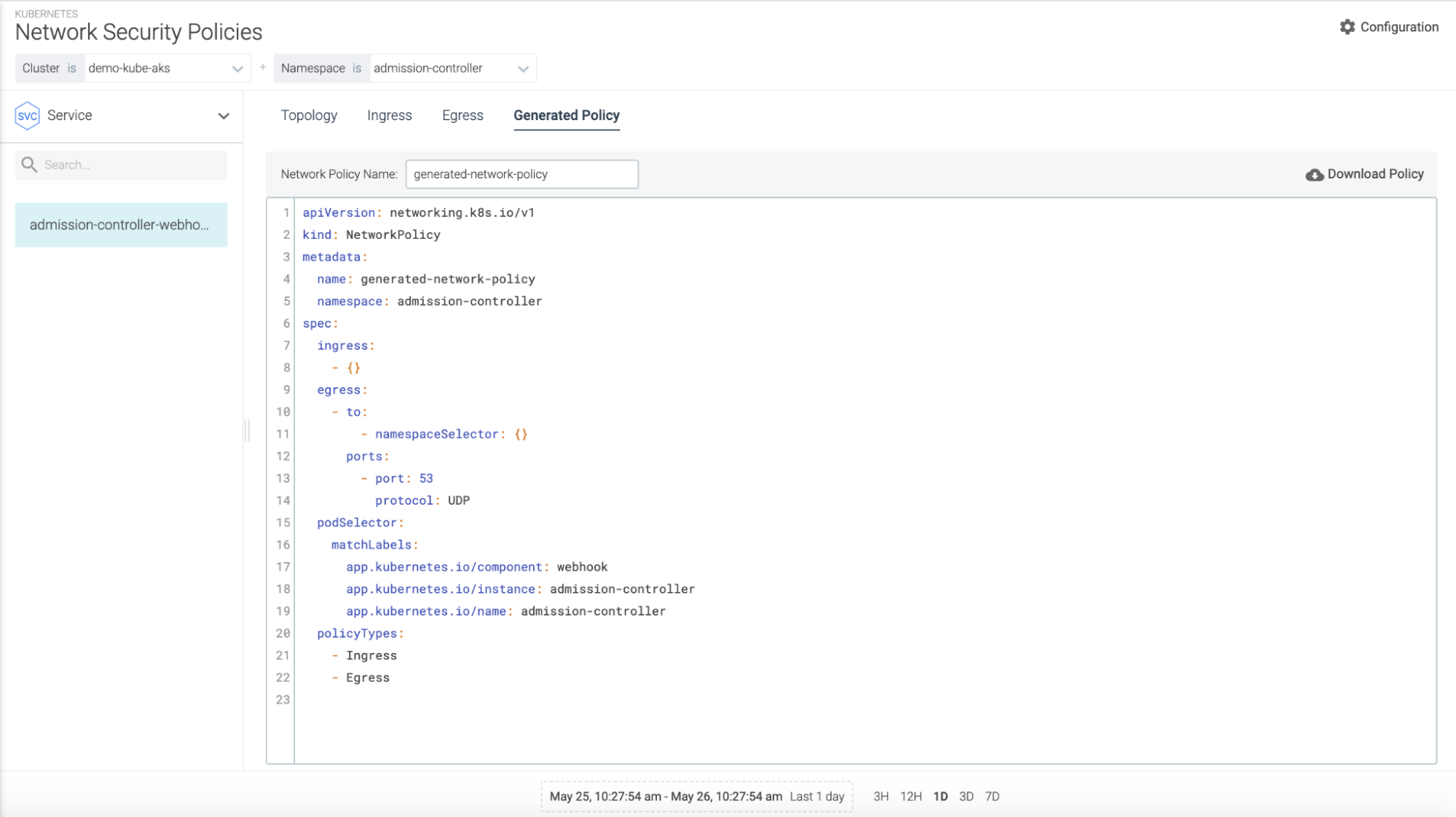

Once a network policy is generated via the Sysdig Secure UI, you can view the network policies applied to a cluster for particular workloads. We recommend reviewing the policies that apply to the pod-to-pod communication in the current view. Once reviewed, click 'View Policy' to see the raw YAML of the network policy applied to that workload.

Unlike the Calico network policies mentioned earlier, the generated policy uses the standard Kubernetes NetworkPolicy resource. This way, regardless of which CNI provider you choose, users can simply click the 'Generate Policy' tab to get a file that works in any Kubernetes cluster whose CNI plugin enforces NetworkPolicy. This is ideal for multi-cloud, multi-cluster strategies where multiple CNI plugins are used.

Final words about CVE-2020-8554

Traditional EDR (Endpoint Detection & Response) solutions lack the visibility required to detect and prevent Kubernetes-specific threats like CVE-2020-8554. By not being built for Kubernetes workloads but rather adapted to them, they lack visibility of control plane activities, understanding of the ephemeral nature of containers, and have limited actionability through Admission Controllers.

Falco, OPA, and Calico are all cloud-native tools designed specifically to secure containers and Kubernetes. As a CDR platform, Sysdig Secure provides the detection engine, admission controller, and network policy recommendations required to detect, prevent, and block CVE-2020-8554. External IPs can be restricted using a Kubernetes-native admission controller like OPA Gatekeeper, and network connections can be blocked on a per-pod basis via NetworkPolicy implementations like Project Calico.

Hopefully, you now have a better understanding of the unpatched Kubernetes vulnerability CVE-2020-8554. Mitigating the problem is fairly straightforward, but auditing Kubernetes configurations across multi-cluster and multi-cloud environments can be tedious. A cloud-native CDR solution like Sysdig Secure can reduce the mean time to respond (MTTR) for misconfigurations on hosts, containers, and cloud tenants.