Cloud Security Posture Management (CSPM) aims to automate the identification and remediation of risks across your entire cloud infrastructure. A core requirement of the CSPM framework is the need to enforce a principle of least privilege.

There are certain overlaps with Cloud Infrastructure Entitlement Management (CIEM) solutions. CIEM is a newer categorization that came after CSPM. It was built to address the supposed security gaps in traditional CSPM tools that focus exclusively on misconfigurations. CIEM tools address the inadequate control over identities and privileges that has become prevalent in public cloud deployments.

More recently, both CSPM and CIEM have been folded into a broader category: the Cloud Native Application Protection Platform (CNAPP). A CNAPP provides in-depth coverage across your environment, from proactive validation of workloads to auditing the policies on the public cloud platform you are running on.

Unlike traditional on-prem environments, infrastructure and applications tend to be provisioned far more quickly in the cloud. This lets cloud users be more relaxed about spinning up and tearing down virtual machines and infrastructure, and the headaches of procuring compute are largely alleviated. We can create short-lived projects for testing and then delete them with a single CLI command. All of these test configurations have associated cloud identities, each with its own permissions. Unfortunately, companies tend to forget that their cloud operates under the shared responsibility model: although the cloud provider does its utmost to secure the cloud platform itself, the businesses using the platform must implement their own posture requirements for their apps and infrastructure.

In this blog post, we will discuss CSPM and compare the designs of these various platforms by implementing the principle of least privilege.

What is the least privilege principle?

According to CISA (Cybersecurity & Infrastructure Security Agency), the Principle of Least Privilege states that a subject should be given only the privileges needed for it to complete its task. If a subject does not need an access right, we should not give it access as part of the zero trust architecture design.

Weak or improperly applied identity policies and their particular permissions are a vulnerable target for attackers in the cloud. Continuously scanning for permissions changes, misconfigurations in build templates, and ultimately preventing drift from our original safe template will allow us to confidently automate code deployments without fear of a breach down the line.
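To make the idea of drift concrete, here is a minimal Python sketch that compares a resource's current settings against the safe baseline it was deployed from. The setting names (`encryption`, `public_access`, and so on) are hypothetical and chosen only for illustration; a real tool would pull both sides from your IaC template and the cloud provider's API.

```python
from typing import Any


def find_drift(baseline: dict[str, Any], current: dict[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Return every key whose value differs between baseline and current.

    Each entry maps a key to (baseline_value, current_value); a key that is
    missing on one side is reported with None in that position.
    """
    drift = {}
    for key in sorted(baseline.keys() | current.keys()):
        expected = baseline.get(key)
        actual = current.get(key)
        if expected != actual:
            drift[key] = (expected, actual)
    return drift


# Hypothetical settings for a storage bucket; the key names are illustrative.
baseline = {"encryption": "aws:kms", "public_access": False, "versioning": True}
current = {"encryption": "aws:kms", "public_access": True, "versioning": True, "acl": "public-read"}

for key, (expected, actual) in find_drift(baseline, current).items():
    print(f"DRIFT {key}: expected {expected!r}, found {actual!r}")
```

Running this kind of comparison continuously, rather than once at deploy time, is what turns a safe template into an enforceable posture.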

It’s also important to recognize that identity doesn’t just imply user accounts. Machine identities, by virtue of automation and containerization, are just as, if not more, prominent.

Cloud audit and threat detection based on activity logs

To perform threat detection, the first thing we need is visibility into the cloud.

We can use Falco, an open source runtime security threat detection and response engine, to detect threats at runtime by observing the behavior of your applications and containers running on cloud environments. Falco extends support for threat detection across multiple cloud environments via Falco Plugins. By streaming logs from the cloud provider to Falco, instead of installing the Falco agent in the cloud environment, we have the deep visibility required to perform posture management.

The Falco CloudTrail plugin, for example, can read AWS CloudTrail logs and emit events for each CloudTrail log entry. This plugin also includes out-of-the-box rules that can be used to identify interesting, suspicious, or notable events in CloudTrail logs, including:

- Console logins that do not use multi-factor authentication

- Disabling multi-factor authentication for users

- Disabling encryption for S3 buckets

Since Falco is open source, users can build their own Falco plugins, as outlined in this community session with HashiCorp. Custom plugins can surface insights not already provided in the Falco rule library, making it easier to tell whether least privilege has been implemented incorrectly. With these deeper insights, users can improve their implementation of the least privilege principle. It’s important to state that do-it-yourself configurations do not work for every organization. That’s why Sysdig Secure builds upon the open source Falco rules engine to provide a simplified, managed approach to policy enforcement.

Enforcing MFA in the cloud

Whenever cloud security is mentioned, the first thing that comes to mind is secure access with MFA. MFA is a must for all users (not just administrators).

Detecting authentication without MFA is a necessary part of enforcing least privilege within CSPM solutions. As a general best practice for all cloud providers, we should be enabling MFA for all users.

Tools such as Falco, which alert us when an incident occurs, help reduce risk for your organization.

In Falco, you can configure a simple YAML manifest to detect interactive logins to AWS without MFA.

You can find the example with Falco and CloudTrail on Falco’s blog.
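To show what such a detection looks at, here is a rough Python sketch of the same check applied to a single CloudTrail record. It relies on the `ConsoleLogin` event's `responseElements.ConsoleLogin` and `additionalEventData.MFAUsed` fields; the sample event and the function name are made up for illustration, and this is not the Falco rule itself.

```python
def is_console_login_without_mfa(event: dict) -> bool:
    """True for a successful AWS console login where MFA was not used."""
    if event.get("eventName") != "ConsoleLogin":
        return False
    # These sections can be null in real records, so fall back to {}.
    response = event.get("responseElements") or {}
    extra = event.get("additionalEventData") or {}
    succeeded = response.get("ConsoleLogin") == "Success"
    mfa_used = extra.get("MFAUsed") == "Yes"
    return succeeded and not mfa_used


# Hypothetical, heavily trimmed CloudTrail record.
sample_event = {
    "eventName": "ConsoleLogin",
    "userIdentity": {"type": "IAMUser", "userName": "nigel"},
    "responseElements": {"ConsoleLogin": "Success"},
    "additionalEventData": {"MFAUsed": "No"},
}

if is_console_login_without_mfa(sample_event):
    print("ALERT: console login without MFA by", sample_event["userIdentity"]["userName"])
```

A production rule would likely also exclude federated or assumed-role logins, where MFA may be enforced by an external identity provider rather than by AWS.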

Finally, attackers may also try to gain initial access through MFA fatigue (also known as MFA spamming), but it is possible to detect and alert when this occurs.
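One way to picture MFA fatigue detection is as a sliding-window counter over denied MFA prompts. The sketch below assumes a simplified event shape of `(user, timestamp, outcome)` tuples and arbitrary thresholds; neither comes from any particular cloud provider's log format.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def detect_mfa_fatigue(events, threshold=5, window=timedelta(minutes=10)):
    """Return users with at least `threshold` denied MFA prompts inside `window`.

    `events` is an iterable of (user, timestamp, outcome) tuples sorted by time.
    """
    recent = defaultdict(deque)  # user -> timestamps of recent denied prompts
    flagged = set()
    for user, ts, outcome in events:
        if outcome != "denied":
            continue
        prompts = recent[user]
        prompts.append(ts)
        # Drop prompts that have fallen out of the sliding window.
        while ts - prompts[0] > window:
            prompts.popleft()
        if len(prompts) >= threshold:
            flagged.add(user)
    return flagged


# Hypothetical events: nigel is spammed with prompts, pietro is not.
start = datetime(2023, 1, 1, 9, 0)
events = [("nigel", start + timedelta(minutes=i), "denied") for i in range(5)]
events += [("pietro", start, "denied"), ("pietro", start + timedelta(minutes=30), "denied")]
events.sort(key=lambda e: e[1])

print(detect_mfa_fatigue(events))
```

The threshold and window are tuning knobs: too tight and legitimate mistyped codes trigger alerts, too loose and a patient attacker slips through.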

We have provided hyperlinks detailing the list of supported MFA tools for AWS, Azure, and Google Cloud.

Cloud host scanning

Some practitioners refer to this as “agentless” scanning since, in many cases, CSPM providers avoid agents in favor of APIs when possible. This differs from the traditional EDR model, where vendors install agents on workstations manually or push them out via Group Policy Objects (GPO).

Since cloud-hosted VMs and EC2 instances are regularly spun up and deleted, it’s a better approach to collect logs at runtime directly from the cloud tenant. Falco plugs in to each cloud provider’s audit logging service to enable near real-time detection and increase the security of your cloud environment.

Audit logs help you answer “who did what, where, and when?” within your cloud resources with the same level of transparency you would expect in on-premises environments. Enabling audit logs helps your security, auditing, and compliance entities monitor cloud data and systems for possible vulnerabilities or external data misuse.
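As a small illustration of the “who did what, where, and when?” question, the following Python sketch pulls those four answers out of a CloudTrail-style record. The field names (`userIdentity`, `eventName`, `awsRegion`, `sourceIPAddress`, `eventTime`) are standard CloudTrail fields, while the sample record and the `summarize` helper are hypothetical.

```python
def summarize(record: dict) -> dict:
    """Reduce an audit record to who / what / where / when."""
    identity = record.get("userIdentity") or {}
    region = record.get("awsRegion", "unknown")
    source_ip = record.get("sourceIPAddress", "unknown")
    return {
        "who": identity.get("arn") or identity.get("type", "unknown"),
        "what": record.get("eventName", "unknown"),
        "where": f"{region} from {source_ip}",
        "when": record.get("eventTime", "unknown"),
    }


# Hypothetical, trimmed CloudTrail record.
record = {
    "eventTime": "2023-01-01T09:00:00Z",
    "eventName": "DeleteBucketEncryption",
    "awsRegion": "us-east-2",
    "sourceIPAddress": "203.0.113.10",
    "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::123456789012:user/nigel"},
}

print(summarize(record))
```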

Examples of cloud audit services we plug into include AWS CloudTrail, Google Cloud Audit Logs, and Azure platform logs.

Once connected, we should have deep visibility into changes made to infrastructure, who made them, and which permissions were given to the user making those changes. By plugging into the audit logs of popular cloud providers, we should be able to track all relevant user changes. Later, we will discuss preventative measures to stop unauthorized host connections in the cloud. In the meantime, it’s important to use Falco as a detection tool to identify those connections.

    - rule: Outbound or Inbound Traffic not to Authorized Host Process and Port
      desc: Detect traffic that is not to an authorized VM process and port.
      condition: >
        allowed_port and inbound_outbound and container and
        container.image.repository in (allowed_image)
      output: >
        Network connection outside authorized port and binary
        (proc.cmdline=%proc.cmdline connection=%fd.name user.name=%user.name
        user.loginuid=%user.loginuid container.id=%container.id evt.type=%evt.type
        evt.res=%evt.res proc.pid=%proc.pid proc.cwd=%proc.cwd proc.ppid=%proc.ppid
        proc.pcmdline=%proc.pcmdline proc.sid=%proc.sid proc.exepath=%proc.exepath
        user.uid=%user.uid user.loginname=%user.loginname group.gid=%group.gid
        group.name=%group.name container.name=%container.name
        image=%container.image.repository)
      priority: warning
      source: syscall
      append: false
      exceptions:
        - name: proc_names
          comps: in
          fields: proc.name
          values:
            - authorized_server_binaries

In this blog post, we will demonstrate how open source tools such as Anchore and the CNCF project Open Policy Agent (OPA) can also help us enforce least privilege for cloud hosts and workloads.

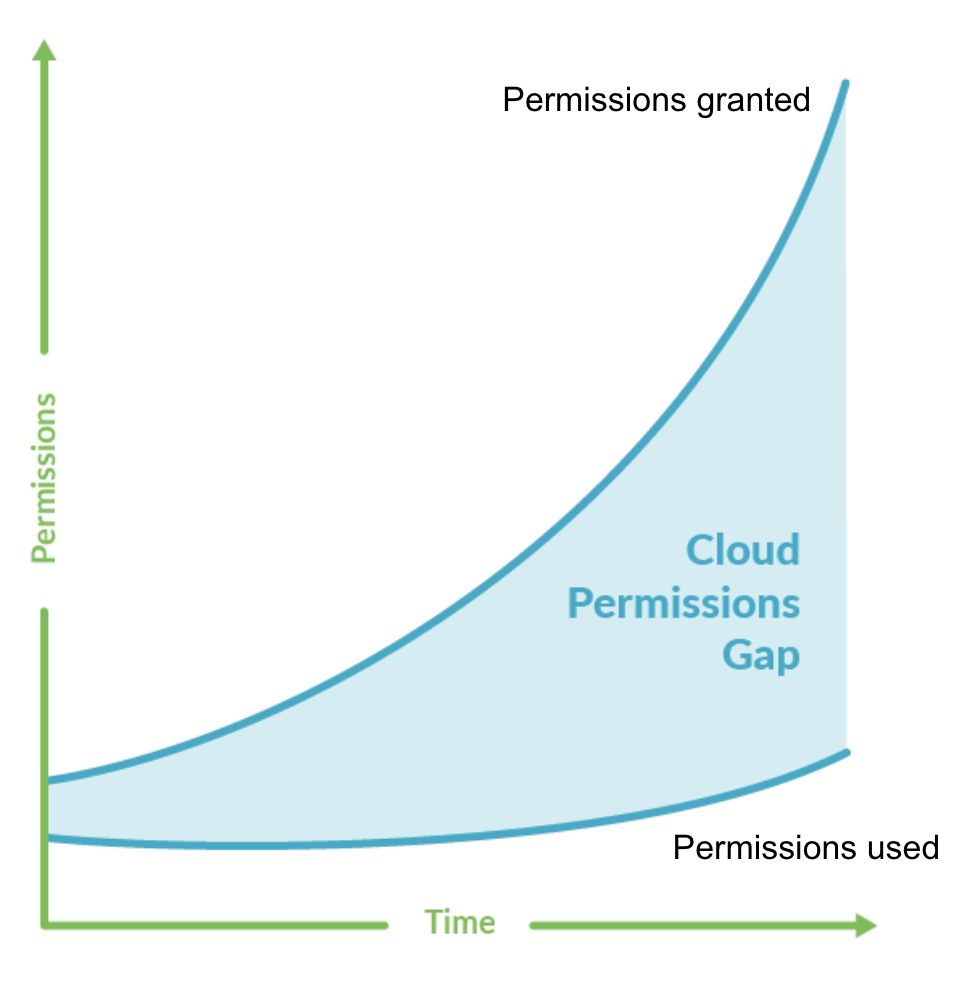

Cloud entitlement

Businesses need an Identity and Access Management (IAM) view that aggregates all associated cloud activities, which can then be filtered on a per-user basis. If certain permissions that we give to a cloud user are not utilized, we should consider removing them from the user. Similarly, if a user is inactive, or the roles associated with the user are unused, we should consider removing these as part of the principle of least privilege.

Taken together, these steps help keep the user lifecycle secure and audited without affecting day-to-day operations.
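At its core, this entitlement review is a set difference: permissions granted minus permissions actually used. The Python sketch below illustrates that with hypothetical data; in practice the `granted` set would come from the attached policies and the `used` set from audit logs over a review period.

```python
def unused_permissions(granted: set[str], used: set[str]) -> set[str]:
    """Permissions that were granted but never exercised."""
    return granted - used


# Hypothetical data for a user who only manages Kubernetes clusters.
granted = {
    "eks:CreateCluster",
    "eks:DescribeCluster",
    "lambda:InvokeFunction",
    "lambda:UpdateFunctionCode",
}
used = {"eks:CreateCluster", "eks:DescribeCluster"}

candidates = unused_permissions(granted, used)
print(f"{len(candidates)} of {len(granted)} permissions unused:", sorted(candidates))
```

The output is a list of removal candidates, not an automatic revocation list: some permissions are used rarely but legitimately, so the length of the review period matters.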

Restricting user permissions

We strongly recommend creating user-specific policies based on the end users’ requirements and behaviors. By restricting the number of permissions given to a specific identity, we can limit the blast radius if that user account is breached.

If the user in this case is only using the cloud tenant to spin up managed Kubernetes clusters, there is little need for them to make changes to serverless functions in Lambda, for example. As a result, we should remove the three unused AWSLambdaBasicExecutionRole permissions from the attached policy.

While tools like Falco are ideal for detecting patterns and behaviors associated with a potential breach, IAM solutions are necessary to securely authenticate, authorize, and audit non-human access across tools and applications. For example, CyberArk Conjur is an open source project that provides an interface for IAM controls across the entire cloud stack, covering the open source tools that are installed, the containerized applications that are running, and the cloud environments themselves, via a robust secrets management offering.

In Kubernetes and Cloud, secrets are objects created specifically for holding sensitive credentials such as account credentials, API keys, and access tokens. If secrets were to be leaked or accessed by anyone outside of the cloud environment, they could potentially access other services via shared credentials.

In Conjur, we can address this through roles. The root admin role has the following characteristics, which loosely resemble Active Directory’s GPO tree structure:

- It is the owner of root policy. Therefore, its members can load policy under root.

- It owns all policy unless an explicit owner role is named for a branch of policy off of root.

The following statements declare two users, create a root admin group role called sysdig-root-admins, add the users to that group, and give the group only read privileges on the root policy.

    # add the following to your root policy file
    # declare two users and a group
    - !user nigel
    - !user pietro
    - !group sysdig-root-admins

    # add members to the group
    - !grant
      role: !group sysdig-root-admins
      members:
        - !user nigel
        - !user pietro

    # give the group privileges on some resources
    - !permit
      role: !group sysdig-root-admins
      privileges:
        - read
      resources:
        - !policy root

In many cases, over 95 percent of the permissions granted to users are simply unused.

By removing these permissions from the user account, we limit the possible blast radius of an account takeover, which ultimately improves our security posture.

If the account of the user ‘nigel’ were hijacked, the attacker would only have read access to resources that nigel does not own. Group-based policies like this are also a more manageable way to assign and revoke permissions for cloud users: DevOps teams cannot be expected to manually check whether each of 219 permissions should be granted to a user. In multi-cloud environments in particular, many businesses end up giving users excessive permissions that shouldn’t be granted in the first place.

Cloud asset inventory

The definition of what an “asset” is has dramatically changed in the cloud. Traditionally, asset inventory was listed as servers, endpoints, and network devices. Or, to put it more simply, an asset was anything with an IP address. Because modern cloud applications are no longer just virtualized compute resources, but a superset of cloud services on which businesses depend, an asset can now be considered as credentials in an automation template. Assets may also be too ephemeral, or they’re not IP addressable depending on how they are bootstrapped within infrastructure.

As such, controlling the security of your cloud account credentials is essential. Errors or misconfigurations can expose an organization to risks that could bring resources down, infiltrate workloads, exfiltrate secrets, create unseen assets, or otherwise compromise the business or reputation.

At the most fundamental level, your cloud provider will usually provide Cloud Asset Inventory, as identified in Google Cloud, Amazon AWS, and Microsoft Azure. However, as you scale your cloud environments, CloudOps often becomes too complex with hundreds of different technologies and tools that are not entirely running within the cloud provider, or where the cloud provider was not designed to give that additional insight.

That’s why it’s important to consider tools that can report on cloud asset inventory so businesses can easily maintain their regulatory compliance in line with CSPM requirements.

Avoid exposing credentials

As the number of cloud services and configurations available grows exponentially, we need to keep an inventory check of our cloud assets: VMs, workloads and services.

One place to start is by preventing the exposure of private credentials within our cloud configurations.

We mentioned earlier that secrets contain sensitive data and should therefore be kept secure. The same care applies to the ConfigMap resource within Kubernetes. ConfigMaps allow you to decouple environment-specific configuration from your container images so that your applications are easily portable. ConfigMaps are intentionally designed to hold non-confidential data in key-value pairs. Unfortunately, many developers mistakenly leave private credentials in their ConfigMaps, where they could be accessed by an adversary if the Kubernetes cluster is compromised.

The following Falco rule should detect when a ConfigMap contains private credentials. If found, please remove these from the configuration file.

    - rule: Create/Modify Configmap With Private Credentials
      desc: >
        Detect creating/modifying a configmap containing a private credential (aws
        key, password, etc.)
      condition: kevt and configmap and kmodify and contains_private_credentials
      output: >-
        K8s configmap with private credential (user=%ka.user.name verb=%ka.verb
        configmap=%ka.req.configmap.name namespace=%ka.target.namespace)
      priority: warning
      source: k8s_audit
      append: false
      exceptions:
        - name: configmaps
          fields:
            - ka.target.namespace
            - ka.req.configmap.name
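For a sense of what “contains private credentials” can mean in practice, here is a rough Python sketch that scans the key-value data of a ConfigMap against a few patterns. The patterns and the sample data are illustrative assumptions only, not the contents of Falco’s `contains_private_credentials` macro, and a real scanner would use a far larger rule set.

```python
import re

# Illustrative patterns only; real scanners use much larger rule sets.
CREDENTIAL_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "password_assignment": re.compile(r"(?i)(?:password|passwd|secret)\s*[:=]\s*\S+"),
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
}


def find_credentials(configmap_data: dict[str, str]) -> list[tuple[str, str]]:
    """Return (key, pattern_name) for every entry that looks like a credential."""
    findings = []
    for key, value in configmap_data.items():
        for name, pattern in CREDENTIAL_PATTERNS.items():
            # Search key and value together so names like DB_PASSWORD also match.
            if pattern.search(f"{key}={value}"):
                findings.append((key, name))
    return findings


# Hypothetical ConfigMap data; the AWS key is the well-known documentation example.
data = {
    "LOG_LEVEL": "debug",
    "AWS_KEY": "AKIAIOSFODNN7EXAMPLE",
    "db.conf": "user=app\npassword=hunter2",
}

for key, kind in find_credentials(data):
    print(f"ConfigMap key {key!r} looks like it contains a {kind}")
```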

Finding cloud credentials

The first step to consider in the least privileged workflow is how to implement image scanning in your Infrastructure-as-Code (IaC) templates to prevent infrastructure from being created with misconfigurations and mis-permissioning. You often hear the term “shift left” being thrown around. But what is shift left?

Essentially, shift left approaches ensure we integrate security in the earliest points of design and development, often focused on CI/CD pipelines, long before any runtime controls are needed. This is one of the core considerations of CSPM.

Note: If you want to know more about image scanning, see:

- Image scanning for GitLab CI/CD

- Image scanning with GitHub Actions

However, assuming you’re reading this blog and you have not implemented scanning, or the scanning did not detect the misconfiguration, it’s important that we can easily detect find or grep commands searching for credentials that have made their way into production and could otherwise compromise our compliance and, more importantly, our security posture.

The following Falco rule should detect attempts to search for AWS credentials in production:

    - rule: Find AWS Credentials
      desc: Find or grep AWS credentials
      condition: >
        spawned_process and ((grep_commands and private_aws_credentials) or
        (proc.name = "find" and proc.args endswith ".aws/credentials"))
      output: >-
        Search AWS credentials activities found (user.name=%user.name
        user.loginuid=%user.loginuid proc.cmdline=%proc.cmdline
        container.id=%container.id container_name=%container.name evt.type=%evt.type
        evt.res=%evt.res proc.pid=%proc.pid proc.cwd=%proc.cwd proc.ppid=%proc.ppid
        proc.pcmdline=%proc.pcmdline proc.sid=%proc.sid proc.exepath=%proc.exepath
        user.uid=%user.uid user.loginname=%user.loginname group.gid=%group.gid
        group.name=%group.name container.name=%container.name
        image=%container.image.repository:%container.image.tag)
      priority: notice
      source: syscall
      append: false
      exceptions:
        - name: proc_pname
          fields:
            - proc.name
            - proc.pname
      # …

IaC scanning

As stated earlier, we should scan IaC templates to find vulnerabilities and misconfigurations at the earliest possible point, proactively addressing these security concerns before they become an issue. The Git repositories that host these templates, such as GitHub, BitBucket, and GitLab, are a natural place to integrate scanning. Git-integrated scanning can be performed on many types of files and folders; YAML, Kustomize, Helm, and Terraform are common examples.

Scanning is not limited to the code you write. In many cases, you could be using common images that you never built (e.g., Nginx or CentOS). Image scanning can be performed on the registries you use, so you are warned when an image is vulnerable and can take action to avoid deploying it. If you are hosting your own registry using Harbor, you can even build the scanning into the registry itself.

Harbor is an open source CNCF graduated project that provides a container image registry and secures images with Role-Based Access Control (RBAC) for hybrid and multi-cloud environments.

To ensure we meet those least privilege requirements, it makes sense to restrict who can and cannot pull images from the private registry. Similarly, Harbor delivers compliance for multi-cloud instances by securely managing images across cloud native compute platforms, which ticks the CSPM requirement.

The team at Anchore created a Harbor Scanner Adapter for its open source software supply chain security offering. This service translates the Harbor scanning API into Anchore commands, and allows Harbor to use Anchore for vulnerability reporting on images stored in Harbor registries as part of its vulnerability scan feature. This would provide full reporting capabilities for our cloud application templates.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: harbor-scanner-anchore
      labels:
        app: harbor-scanner-anchore
    spec:
      # The selector and pod template labels are assumed here; a Deployment
      # requires them, and they must match the app label used by the Service.
      selector:
        matchLabels:
          app: harbor-scanner-anchore
      template:
        metadata:
          labels:
            app: harbor-scanner-anchore
        spec:
          containers:
            - name: adapter
              image: anchore/harbor-scanner-adapter:1.0.1
              imagePullPolicy: IfNotPresent
              env:
                - name: SCANNER_ADAPTER_LISTEN_ADDR
                  value: ":8080"
                - name: ANCHORE_ENDPOINT
                  value: "http://anchore-anchore-engine-api:8228"
                - name: ANCHORE_USERNAME
                  valueFrom:
                    secretKeyRef:
                      name: anchore-creds
                      key: username
                - name: ANCHORE_PASSWORD
                  valueFrom:
                    secretKeyRef:
                      name: anchore-creds
                      key: password
                # …

We can then expose the Harbor scanner adapter inside the K8s cluster with a Service of type ‘ClusterIP’.

As specified in the deployment manifest, the scanner adapter listens on port 8080.

    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: harbor-scanner-anchore
    spec:
      selector:
        app: harbor-scanner-anchore
      type: ClusterIP
      ports:
        - protocol: TCP
          port: 8080
          targetPort: 8080

Cloud configurations

Assuming an image was scanned in your IaC template and a known misconfiguration or vulnerability was detected in the build process, it’s important to prevent this kind of flaw from reaching runtime by shielding your Kubernetes cluster.

This is where the admission controller comes into play. Whether the insecure template is approved or not, we should also consider configuring our cloud to apply least privileges to network connections permitted by a user or workload before they reach the runtime state.

Open Policy Agent

Open Policy Agent (OPA) is a CNCF-graduated project that provides policy-based admission control for cloud native environments. Admission controllers enforce semantic validation of objects during create, update, and delete operations. Under the principle of least privilege, insecure templates should not be permitted in production environments. By using OPA in the following scenario, we prevent workloads that expose TCP port 443 from being created in our production environment.

    match[{"msg": msg}] {
        input.request.operation == "CREATE"
        input.request.kind.kind == "Pod"
        input.request.resource.resource == "pods"
        input.request.object.spec.containers[_].ports[_].containerPort == 443
        msg := "Preventing 443 (TCP) in pod config"
    }
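If Rego is unfamiliar, the same decision logic can be sketched in plain Python. This is only an illustration of what the policy above evaluates against an admission request; the `deny_reasons` function and the sample request are hypothetical, and OPA itself does not run Python.

```python
def deny_reasons(admission_review: dict, blocked_port: int = 443) -> list[str]:
    """Mirror the Rego rule: reject Pod CREATE requests exposing blocked_port."""
    request = admission_review.get("request", {})
    if (
        request.get("operation") != "CREATE"
        or request.get("kind", {}).get("kind") != "Pod"
        or request.get("resource", {}).get("resource") != "pods"
    ):
        return []
    reasons = []
    containers = request.get("object", {}).get("spec", {}).get("containers", [])
    for container in containers:
        for port in container.get("ports", []):
            if port.get("containerPort") == blocked_port:
                name = container.get("name")
                reasons.append(f"Preventing {blocked_port} (TCP) in pod config: container {name}")
    return reasons


# Hypothetical admission request with one offending container.
review = {
    "request": {
        "operation": "CREATE",
        "kind": {"kind": "Pod"},
        "resource": {"resource": "pods"},
        "object": {"spec": {"containers": [
            {"name": "web", "ports": [{"containerPort": 443}]},
            {"name": "sidecar", "ports": [{"containerPort": 9090}]},
        ]}},
    }
}

print(deny_reasons(review))
```

An empty list means the request is admitted; any entry is a message the admission controller would return alongside the rejection.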

It’s important to set these parameters at the earliest possible point in our cloud configurations. It’s not just compromised workloads that will try to access port numbers not explicitly required by our production-grade environment. Some users might also be looking to directly communicate with external C2 servers as part of a data exfiltration test. That’s why it’s important to prevent unwanted connections from all users in your cloud environment.

Identity-based policy

You can attach an identity-based policy to a user group so that all of the users in the group receive the policy’s permissions. On AWS, least privilege in Identity & Access Management (IAM) is typically achieved by having a user group called Admins and giving only that user group elevated administrator permissions.

Any user who is moved to a non-Admin user group should automatically have those Admins group permissions revoked.

Let’s say we have a forensics team that needs to see the network activity and configuration from tools like Network Manager. They need access to all of these AWS resources to do their job, but they don’t need to configure anything; their sole purpose is to monitor activity. For these users, we would recommend creating an identity-based policy that grants read-only access to the Amazon EC2, Network Manager, AWS Direct Connect, CloudWatch, and CloudWatch Events APIs.

This enables users to use the Network Manager console to view and monitor global networks and their associated resources, and view metrics and events for the resources. However, users cannot create or modify any resources!

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "ec2:Get*",
            "ec2:Describe*"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "networkmanager:Get*",
            "networkmanager:Describe*"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "cloudwatch:List*",
            "cloudwatch:Get*",
            "cloudwatch:Describe*"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "logs:Describe*",
            "logs:Get*",
            "logs:List*",
            "logs:StartQuery",
            "logs:StopQuery",
            "logs:TestMetricFilter",
            "logs:FilterLogEvents"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "events:List*",
            "events:TestEventPattern",
            "events:Describe*"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": "directconnect:Describe*",
          "Resource": "*"
        }
      ]
    }
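A quick way to sanity-check that a policy like the one above really is read-only is to list every allowed action that does not start with a read-style verb. The Python sketch below does that under a simplifying assumption: it treats only the `Get`, `Describe`, and `List` prefixes as read-only and assumes `Statement` is a list. The function name and the sample policy are hypothetical.

```python
READ_ONLY_PREFIXES = ("Get", "Describe", "List")


def non_read_only_actions(policy: dict) -> list[str]:
    """Return allowed actions whose operation name lacks a read-only prefix."""
    flagged = []
    for statement in policy.get("Statement", []):
        if statement.get("Effect") != "Allow":
            continue
        actions = statement.get("Action", [])
        if isinstance(actions, str):  # IAM allows a single string here
            actions = [actions]
        for action in actions:
            operation = action.split(":", 1)[-1]
            if not operation.startswith(READ_ONLY_PREFIXES):
                flagged.append(action)
    return flagged


# Hypothetical policy mixing read-only and mutating actions.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": ["ec2:Describe*", "ec2:TerminateInstances"], "Resource": "*"},
        {"Effect": "Allow", "Action": "logs:StartQuery", "Resource": "*"},
    ],
}

print(non_read_only_actions(policy))
```

Flagged actions are candidates for manual review rather than automatic findings: an action such as `logs:StartQuery` does not modify resources even though it lacks a read-style prefix.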

As you can see in the above policy, the IAM identity associated with this user has access to all of these resources, as denoted by the asterisk (*). The ‘Effect’ is set to ‘Allow’; however, the actions are limited to ‘List,’ ‘Describe,’ and ‘Get’ for the most part. It’s important to note that the above example was for AWS. Microsoft Azure implements IAM in a slightly different way: it uses Azure role-based access control (Azure RBAC), where roles are assigned at a scope such as a management group, subscription, resource group, or individual resource.

That said, Microsoft Azure works just fine, as seen here. Google Cloud, on the other hand, provides a similar workflow to Amazon AWS, as seen in this simple example where the allow policy binds a principal to the IAM role:

    {
      "bindings": [
        {
          "members": [
            "user:[email protected]"
          ],
          "role": "roles/owner"
        }
      ],
      "etag": "BwUjMHhHNvY=",
      "version": 1
    }

In the example above, [email protected] is granted the ‘Owner’ basic role without any conditions. This role gives the user ‘nigel’ almost unlimited access. Unless there is a very clear business justification for this user having full permissions, we should create rules like the ones we saw in AWS to limit this user’s access.
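Bindings like this are easy to find programmatically. The following Python sketch walks a Google Cloud allow policy and reports every member bound to a basic role (Owner, Editor, or Viewer). The member addresses and the `basic_role_bindings` helper are hypothetical, and the policy is a trimmed example rather than real output.

```python
BASIC_ROLES = {"roles/owner", "roles/editor", "roles/viewer"}


def basic_role_bindings(policy: dict) -> list[tuple[str, str]]:
    """Return (member, role) for every binding that uses a basic role."""
    findings = []
    for binding in policy.get("bindings", []):
        if binding.get("role") in BASIC_ROLES:
            for member in binding.get("members", []):
                findings.append((member, binding["role"]))
    return findings


# Hypothetical allow policy; the email addresses are placeholders.
allow_policy = {
    "bindings": [
        {"members": ["user:nigel@example.com"], "role": "roles/owner"},
        {"members": ["user:pietro@example.com"], "role": "roles/compute.viewer"},
    ],
    "version": 1,
}

for member, role in basic_role_bindings(allow_policy):
    print(f"{member} holds basic role {role}; consider a narrower predefined role")
```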

It’s important to note that you cannot identify a user group as a Principal in an AWS policy (such as a resource-based policy), because groups relate to permissions, not authentication, and principals are authenticated IAM entities.

Resource-based policy

Resource-based policies are attached to a resource. For example, you can attach resource-based policies to Amazon S3 buckets, Amazon SQS queues, VPC endpoints, and AWS Key Management Service (KMS) keys. Resource-based policies grant permissions to the principal that is specified in the policy.

This type of policy helps organizations specify who/what can invoke an API from a resource to which the policy is attached. This way, we can prevent a service principal on the infrastructure-level from invoking or managing serverless functions in Lambda.

The following manifest should give you an idea of how to implement the least privilege principle on cloud resources.

    {
      "Version": "2012-10-17",
      "Id": "default",
      "Statement": [
        {
          "Sid": "lambda-allow-s3-nigel-function",
          "Effect": "Allow",
          "Principal": {
            "Service": "s3.amazonaws.com"
          },
          "Action": "lambda:InvokeFunction",
          "Resource": "arn:aws:lambda:us-east-2:123456789012:function:my-function",
          "Condition": {
            "StringEquals": {
              "AWS:SourceAccount": "123456789000"
            },
            "ArnLike": {
              "AWS:SourceArn": "arn:aws:s3:::nigel-bucket"
            }
          }
        }
      ]
    }
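To see how the `Condition` block narrows who can invoke the function, here is a simplified Python sketch of the two operators used above. It is an approximation under stated assumptions: real IAM evaluation treats condition keys case-insensitively and matches `ArnLike` per ARN component, whereas this sketch uses exact key names and glob-style matching from Python’s `fnmatch` module. The `conditions_match` helper and the request contexts are hypothetical.

```python
from fnmatch import fnmatchcase


def conditions_match(condition: dict, context: dict) -> bool:
    """Approximate StringEquals and ArnLike checks against a request context."""
    for key, expected in condition.get("StringEquals", {}).items():
        if context.get(key) != expected:
            return False
    for key, pattern in condition.get("ArnLike", {}).items():
        # fnmatchcase gives case-sensitive wildcard matching for * and ?.
        if not fnmatchcase(context.get(key, ""), pattern):
            return False
    return True


condition = {
    "StringEquals": {"AWS:SourceAccount": "123456789000"},
    "ArnLike": {"AWS:SourceArn": "arn:aws:s3:::nigel-bucket"},
}

# Hypothetical request contexts: one from the expected bucket, one not.
expected_caller = {"AWS:SourceAccount": "123456789000", "AWS:SourceArn": "arn:aws:s3:::nigel-bucket"}
other_bucket = {"AWS:SourceAccount": "123456789000", "AWS:SourceArn": "arn:aws:s3:::other-bucket"}

print(conditions_match(condition, expected_caller))
print(conditions_match(condition, other_bucket))
```

Both conditions must hold, so S3 can only invoke the function on behalf of the named bucket in the named account.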

Observing networking restrictions

There are different ways to implement network security controls.

Broadly, we can create Security Groups (SG) in Amazon AWS, VPC firewall rules in Google Cloud, and Network Security Groups (NSG) in Microsoft Azure. Whether you are managing everything on-prem behind your own firewall or implementing a virtual firewall in the form of cloud security groups, you need to limit communications between infrastructure and the public internet.

If certain ports, protocols, or destination IPs do not explicitly need to be open, let’s shut them down.

CSPM encourages organizations to apply networking controls at the infrastructure level. By ingesting AWS CloudTrail metadata into Falco, we can alert on VPC-specific networking activity. A good example of observing a least privilege design is to alert on the creation of Network Access Control List (ACL) entries where ingress traffic is opened to the public internet. If there’s no specific reason for opening up cloud traffic to the open internet, we should restrict these ACL conditions. Here’s the Falco template for this rule:

    - rule: Create a Network ACL Entry Allowing Ingress Open to the World
      desc: >-
        Detect creation of access control list entry allowing ingress open to the world.
      condition: |
        aws.eventName="CreateNetworkAclEntry" and not aws.errorCode exists and (
          not (
            jevt.value[/requestParameters/portRange/from]=80 and
            jevt.value[/requestParameters/portRange/to]=80
          ) and
          not (
            jevt.value[/requestParameters/portRange/from]=443 and
            jevt.value[/requestParameters/portRange/to]=443
          ) and
          (
            jevt.value[/requestParameters/cidrBlock]="0.0.0.0/0" or
            jevt.value[/requestParameters/ipv6CidrBlock]="::/0"
          ) and
          jevt.value[/requestParameters/egress]="false" and
          jevt.value[/requestParameters/ruleAction]="allow"
        )
      output: >-
        Detected creation of ACL entry allowing ingress open to the world
        (requesting user=%aws.user, requesting IP=%aws.sourceIP, AWS
        region=%aws.region, arn=%jevt.value[/userIdentity/arn], network acl
        id=%jevt.value[/requestParameters/networkAclId], rule
        number=%jevt.value[/requestParameters/ruleNumber], from
        port=%jevt.value[/requestParameters/portRange/from], to
        port=%jevt.value[/requestParameters/portRange/to])
      priority: error
      source: aws_cloudtrail
      append: false
      exceptions: []
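The rule’s condition can be read as a small decision function. The Python sketch below restates it over a CloudTrail-style record, taking the field names from the JSON pointers in the rule; the `is_world_open_ingress` helper and the sample event are hypothetical, and the exact value types in real records are an assumption.

```python
def is_world_open_ingress(event: dict) -> bool:
    """True for a successful NACL entry that allows ingress from anywhere,
    unless it is limited to exactly port 80 or exactly port 443."""
    if event.get("eventName") != "CreateNetworkAclEntry" or "errorCode" in event:
        return False
    params = event.get("requestParameters") or {}
    port_range = params.get("portRange") or {}
    ports = (port_range.get("from"), port_range.get("to"))
    web_only = ports in ((80, 80), (443, 443))
    world = params.get("cidrBlock") == "0.0.0.0/0" or params.get("ipv6CidrBlock") == "::/0"
    # Accept either a JSON boolean or the string form used in the rule.
    ingress = params.get("egress") in (False, "false")
    allowed = params.get("ruleAction") == "allow"
    return world and ingress and allowed and not web_only


# Hypothetical event opening SSH to the whole internet.
ssh_open = {
    "eventName": "CreateNetworkAclEntry",
    "requestParameters": {
        "networkAclId": "acl-0123456789abcdef0",
        "cidrBlock": "0.0.0.0/0",
        "egress": False,
        "ruleAction": "allow",
        "portRange": {"from": 22, "to": 22},
    },
}

print(is_world_open_ingress(ssh_open))
```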

CSPM Conclusion

According to Gartner’s definition of CSPM, the platform should concern itself with the cloud control plane, and not cloud workloads that run on that control plane. By applying the principle of least privilege to the cloud control plane, we can more easily report on potential malicious configurations or possible unintentional misconfigurations that could make it easier for attackers to breach environments or access sensitive data.

Applying that same least privilege concept to networking, image scanning, user permissions, and user authentication should help detect and, more importantly, stop an attacker before they infiltrate a cloud environment.

CSPM is focused on this shift-left methodology. Strong posture management requires looking at zero-trust security holistically. Once those guardrails are configured, we can rely on monitoring and observability tools to notify us of potential drift from the intended security posture.

If your goal is to focus solely on identification and detection of live threats, it makes more sense to implement a Security Information & Event Management (SIEM) or a Security Orchestration, Automation, and Response (SOAR) platform. If, on the other hand, the goal is to prevent live threats in production, you could implement an Endpoint Protection Platform (EPP) or an Intrusion Prevention System (IPS).

Finally, CSPM shouldn’t be confused with Cloud Workload Protection Platforms (CWPP). CSPM is not intended to address security risks at the application level. It won’t scan your source code or container images to detect vulnerabilities, for instance. That’s where source code analysis, image scanners, and similar tools come into play.

As seen above, we can use a set of disparate open source tools as the foundation for a CSPM platform. When applying CSPM’s least privilege principle, we limit or prevent user breaches via updated user credentials and strong networking rules, as well as by alerting on potentially exposed credentials and misconfigurations in your cloud environments. By detecting insecure conditions in your cloud audit logs, admins can take proactive steps to improve the security posture of their cloud accounts.

However, CSPM only addresses part of the problem. By combining holistic posture management assessments from CSPM with granular workload-level least privilege policies and detection, we can offer an end-to-end Cloud Detection & Response (CDR) solution.

For a 101 on all things cloud security, check out our Sysdig Secure whitepaper today.