
The term LLMjacking refers to attackers using stolen cloud credentials to gain unauthorized access to cloud-based large language models (LLMs), such as OpenAI’s GPT or Anthropic’s Claude. This blog shows how to strengthen your LLMs with Sysdig.

In an LLMjacking attack, criminals exploit stolen credentials or cloud misconfigurations to reach expensive artificial intelligence (AI) models hosted in the cloud, then run those models at the victim’s expense. According to the Sysdig 2024 Global Threat Report, these attacks are financially damaging, with LLMjacking costs reaching as high as $100,000 per day for the targeted organization.

Challenges and risks of Large Language Models (LLMs)

Large language models (LLMs) present unique challenges and risks:

- Lack of separation of control and data planes: When the control and data planes cannot be separated, threat actors can exploit the gap to manipulate inputs, access sensitive data, or trigger unintended system actions. Common tactics include prompt injection, cross-system data leakage, unintended code execution, and training data poisoning. These attacks compromise the confidentiality and integrity of data, as well as the instructions fed to downstream systems.

- Increased attack surface: LLM use expands organizational vulnerability to adversaries. Cyber criminals who train their own AI systems on malware and other malicious software can significantly level up their attacks by:

  - Crafting new and advanced variants of malware that evade detection

  - Creating tailored phishing schemes (similar to the example here) and deep fakes for social engineering

  - Developing innovative hacking techniques

Strategies for resilience and threat mitigation

To protect against LLMjacking, organizations should adopt robust security measures to safeguard their AI resources while maintaining operational resilience. Key strategies include enforcing strict access controls, using secrets management to secure credentials, and enabling detailed logging to monitor API interactions and billing for anomalies.
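As a rough illustration of what monitoring billing for anomalies can look like, the sketch below flags days whose spend is far above the typical level, using a median-based (modified z-score) test. The function name, the threshold, and the sample figures are all hypothetical; a real deployment would read spend from the cloud provider's billing export and tune the threshold to its own traffic.

```python
from statistics import median


def flag_spend_anomalies(daily_costs, threshold=3.5):
    """Return indices of days whose spend is an upward outlier.

    Uses the median and the median absolute deviation (MAD), which are
    distorted less by the spike itself than the mean and standard deviation.
    """
    if len(daily_costs) < 3:
        return []  # too little history to judge
    med = median(daily_costs)
    mad = median(abs(c - med) for c in daily_costs)
    if mad == 0:
        # Typical days are identical, so any day above the median stands out.
        return [i for i, c in enumerate(daily_costs) if c > med]
    return [
        i
        for i, c in enumerate(daily_costs)
        if 0.6745 * (c - med) / mad > threshold
    ]


# Hypothetical daily LLM API spend in USD; the last day simulates abuse.
costs = [120.0, 135.5, 110.0, 128.0, 131.0, 125.0, 9800.0]
print(flag_spend_anomalies(costs))  # → [6]
```

Only upward deviations are flagged here, since LLMjacking shows up as unexpected extra usage rather than a drop.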

Configuration management practices, such as restricting model access and monitoring for unauthorized changes, are essential. Additionally, organizations should stay informed about evolving threats, develop incident response plans, and implement measures like adversarial training and input validation to enhance model resilience against exploitation. These steps collectively reduce the risk of unauthorized access and misuse.
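Input validation can take many forms. The following is a minimal sketch, assuming a text prompt arrives as a Python string before it is forwarded to a model. The length limit and the deny patterns are illustrative placeholders, not a vetted rule set, and pattern matching alone is easy to bypass, so treat this as one layer among several.

```python
import re
import unicodedata

MAX_PROMPT_CHARS = 4000  # hypothetical limit; tune to your application

# Illustrative deny patterns only; real prompt-injection attempts vary widely.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your |the )?system prompt", re.IGNORECASE),
]


def validate_prompt(text):
    """Return (ok, reason) for a user-supplied prompt."""
    if len(text) > MAX_PROMPT_CHARS:
        return False, "too long"
    for ch in text:
        # Reject control characters other than common whitespace.
        if unicodedata.category(ch) == "Cc" and ch not in "\n\r\t":
            return False, "control character"
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(text):
            return False, "suspicious phrase"
    return True, "ok"


print(validate_prompt("Summarize this incident report."))  # → (True, 'ok')
```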

Strengthen your LLMs with Sysdig

Sysdig offers customers several strategies to reduce their attack surface against LLMjacking and strengthen their LLMs.

Strengthen your AI workload security

- Start by using Sysdig’s AI workload security tools to keep your AI systems safe

- Make it a priority to identify any vulnerabilities in your AI infrastructure

- Regularly scan your systems to find and fix potential entry points for LLMjacking

Sysdig’s unified risk findings feature brings everything together, giving you a clear and streamlined view of correlated risks and events. For AI users, this makes it easier to prioritize, investigate, and address AI-related risks efficiently.

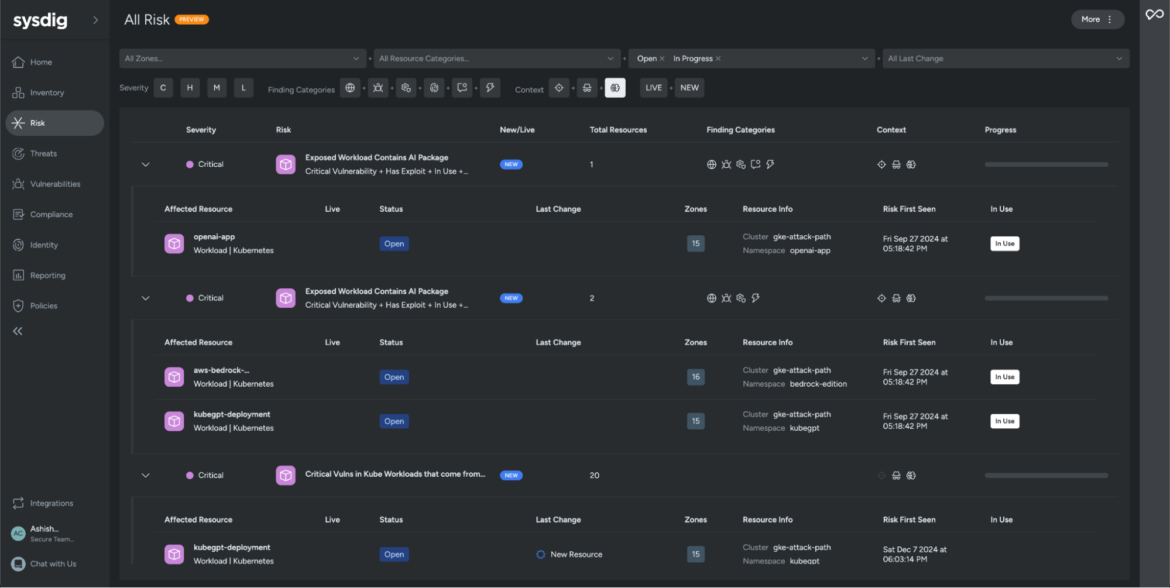

In the example below, we used Sysdig Secure to filter all risks where vulnerable AI packages were detected.

The Sysdig agent uses kernel-level instrumentation (via a kernel module or an eBPF application) to monitor everything happening under the hood, such as running processes, opened files, and network connections. This allows the agent to identify which processes are actively running in your container and which libraries are being used.
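Knowing which libraries are loaded at runtime lets you rank vulnerable packages that are actually in use above those that merely sit in the image. The sketch below shows that idea with plain set logic. It is not Sysdig's implementation, and the package names and finding IDs are invented for illustration.

```python
def split_by_runtime_use(vulnerable_packages, loaded_packages):
    """Split vulnerable package names into (in_use, idle) sorted lists.

    vulnerable_packages: dict mapping package name -> list of finding IDs
    loaded_packages: iterable of package names observed at runtime
    """
    loaded = set(loaded_packages)
    in_use = sorted(name for name in vulnerable_packages if name in loaded)
    idle = sorted(name for name in vulnerable_packages if name not in loaded)
    return in_use, idle


# Hypothetical scan results and runtime observations.
vulnerable = {
    "example-llm-client": ["VULN-A"],
    "example-image-lib": ["VULN-B"],
    "example-yaml-lib": ["VULN-C"],
}
loaded = ["example-llm-client", "example-http-lib"]

in_use, idle = split_by_runtime_use(vulnerable, loaded)
print("fix first:", in_use)   # → fix first: ['example-llm-client']
print("fix later:", idle)     # → fix later: ['example-image-lib', 'example-yaml-lib']
```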

Gain clear visibility into your cloud environment

- Set up real-time monitoring for your cloud and hybrid environments

- Create alerts for any suspicious configuration changes so you can act quickly

- Establish proactive detection methods to catch security issues before they escalate

Sysdig combines risk prioritization, attack path analysis, and inventory, providing a detailed and comprehensive overview of a risk and helping you strengthen your LLMs.

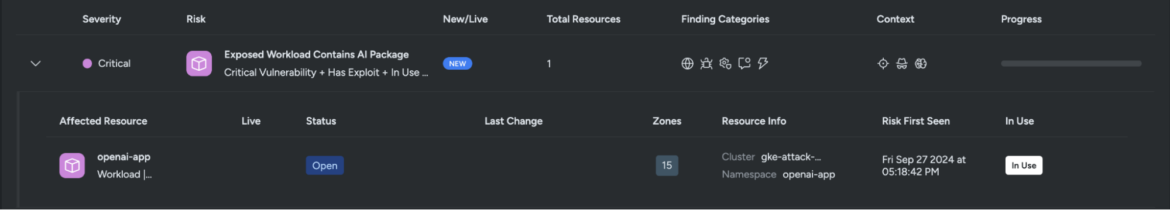

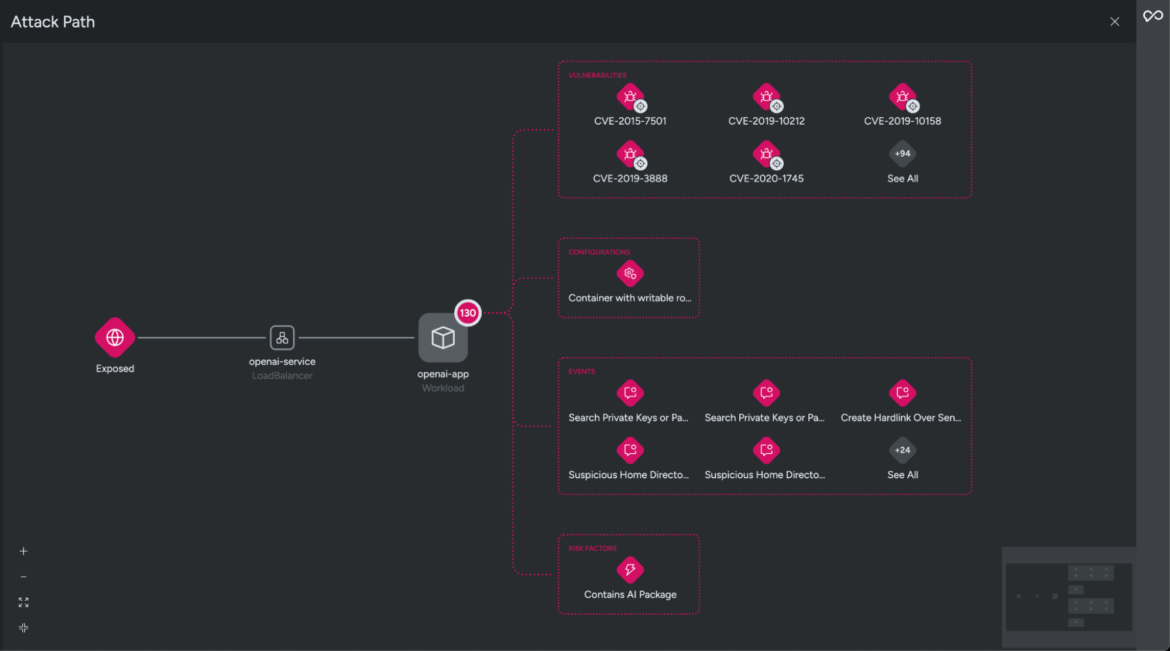

These risks are classified as critical because the AI packages with vulnerabilities are being executed at runtime. To better understand why Sysdig flagged this workload as a risk, we’ll investigate one of the affected resources tagged as openai-app.

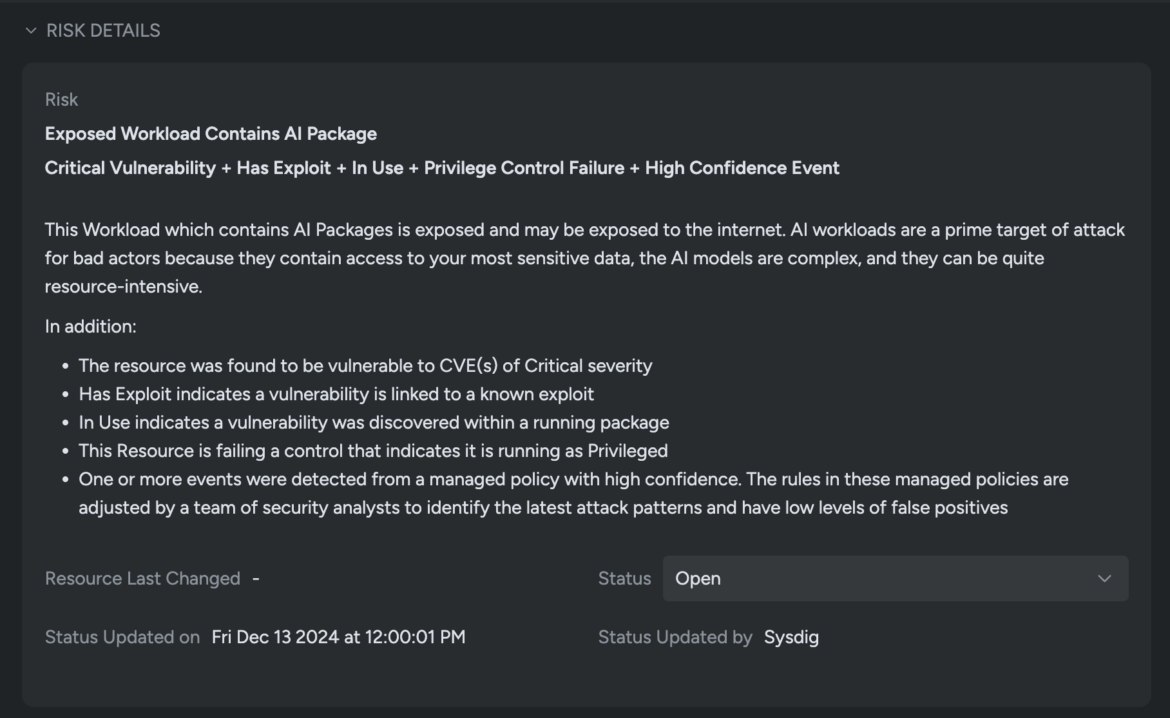

The affected workload running the vulnerable AI package is exposed to the internet and could give threat actors access to sensitive information.

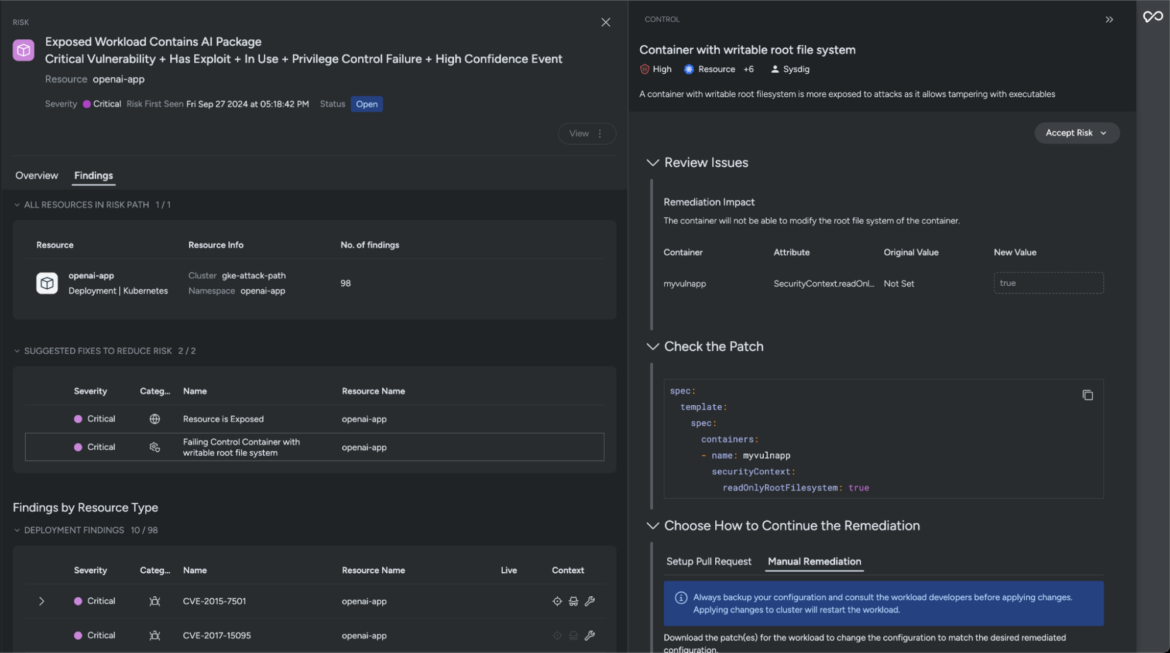

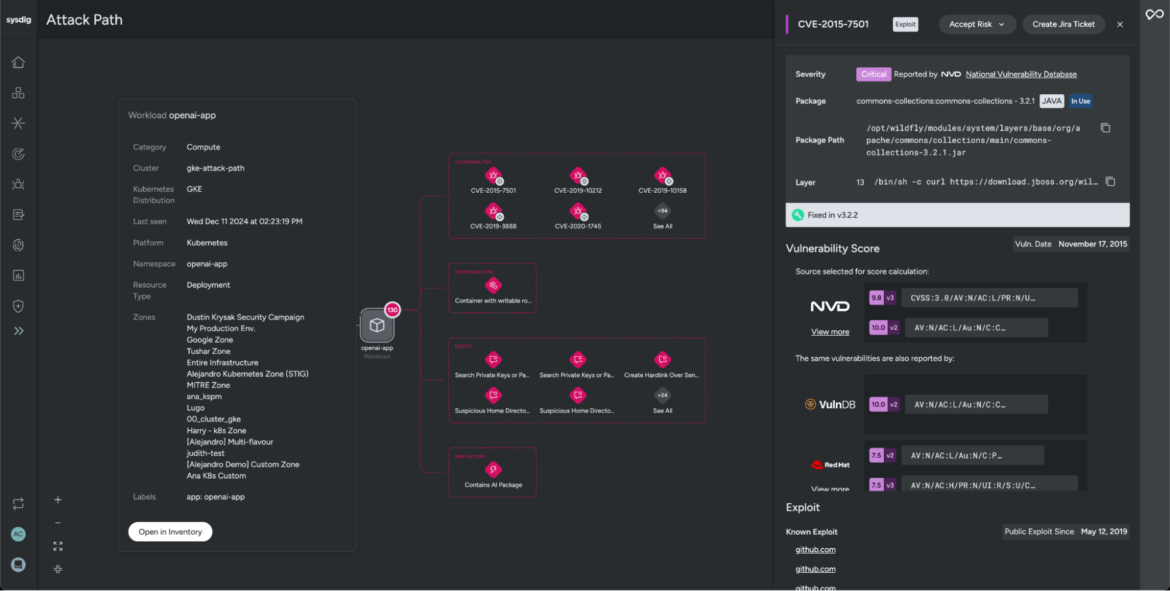

Sysdig Secure also highlights additional risk factors, such as the resource being vulnerable to critical CVEs with a known exploit, running in privileged mode, and triggering one or more runtime events detected by the managed policy. Together, these factors raise the risk level to critical.

Besides providing in-depth context, Sysdig Secure also accelerates your resolution timelines. The suggested fixes are precise and help remediate the identified risk factor. For example, one way to mitigate this critical risk is by applying a patch that prevents adversaries from modifying the container’s root file system.

Sysdig logs everything on the attack path — including where the risk is occurring, related vulnerabilities, and any detected active threats at runtime. These insights help you customize and enrich your detection methods to prevent any further compromise.

Utilize smart threat detection tools

- Activate tools like Falco and Sysdig Secure to keep an eye on potential threats

- Track authentication attempts and watch for any unusual patterns or unauthorized access

- Stay alert for reconnaissance activities that could indicate a looming attack
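To make tracking authentication attempts concrete, here is a small sketch that flags principals with a burst of failed logins inside a sliding time window. The event tuple layout, the limits, and the principal names are assumptions for illustration; in practice the events would come from your cloud audit logs.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def find_failed_login_bursts(events, max_failures=5, window=timedelta(minutes=10)):
    """Return the set of principals exceeding max_failures within any window.

    events: iterable of (timestamp, principal, success) sorted by timestamp.
    """
    recent = defaultdict(deque)  # principal -> timestamps of recent failures
    flagged = set()
    for ts, principal, success in events:
        if success:
            continue
        failures = recent[principal]
        failures.append(ts)
        # Drop failures that have fallen out of the window.
        while ts - failures[0] > window:
            failures.popleft()
        if len(failures) > max_failures:
            flagged.add(principal)
    return flagged


# Hypothetical audit events: "svc-a" fails six times in six minutes,
# "svc-b" fails six times spread over half an hour.
start = datetime(2024, 1, 1, 0, 0)
sample_events = sorted(
    [(start + timedelta(minutes=i), "svc-a", False) for i in range(6)]
    + [(start + timedelta(minutes=5 * i), "svc-b", False) for i in range(6)]
)
print(find_failed_login_bursts(sample_events))  # → {'svc-a'}
```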

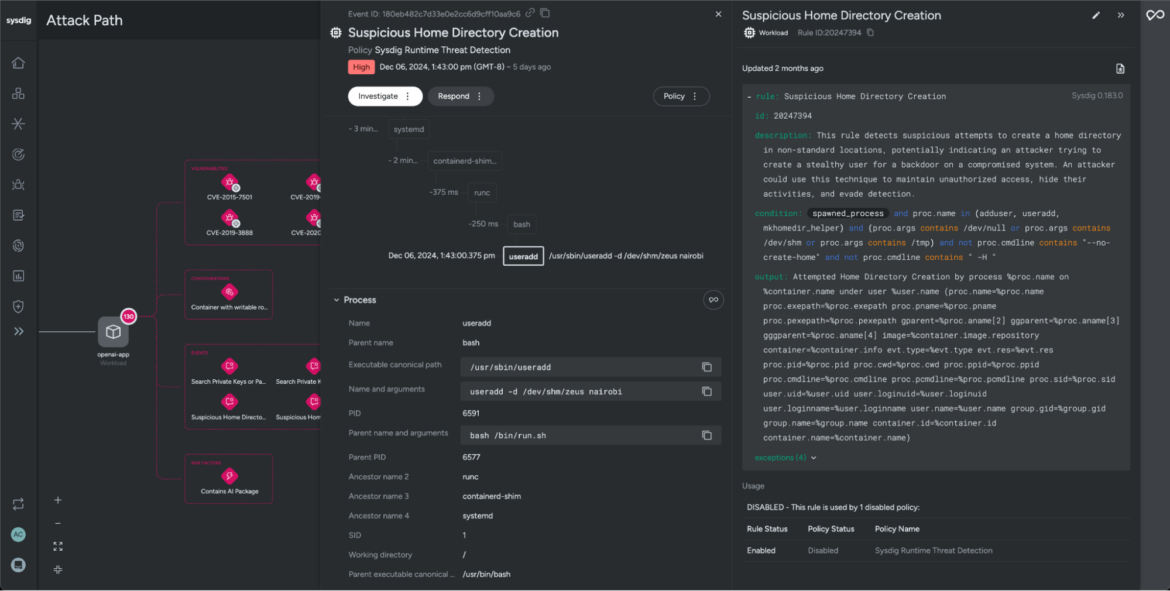

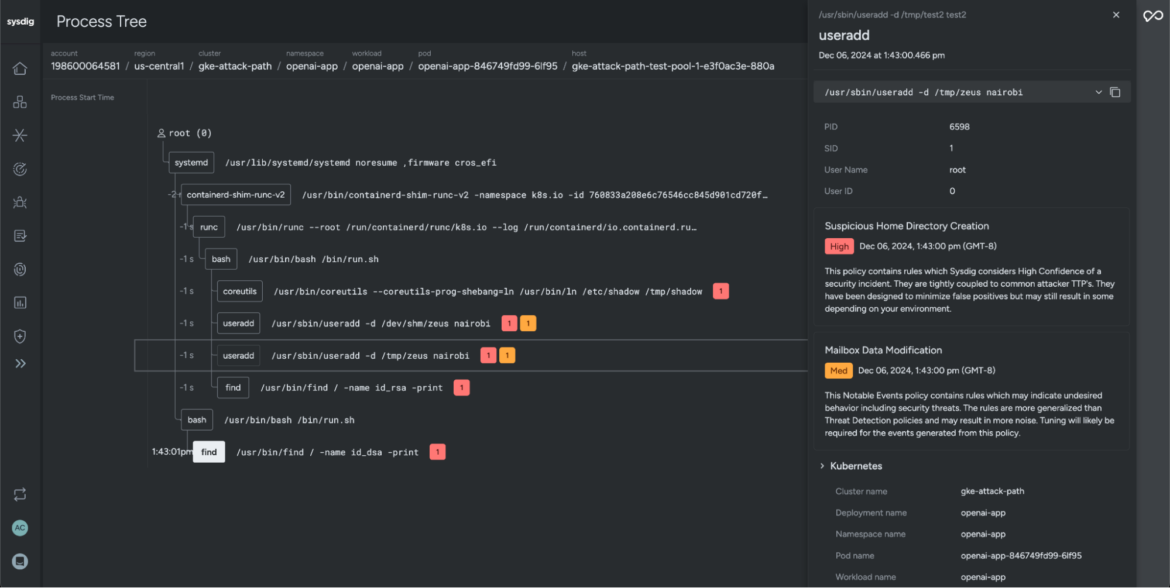

In this example, while reviewing risk factors on the attack path, you can see that Sysdig detected a suspicious home directory created in an unusual location. This could indicate that the attacker attempted to set up a backdoor user. By doing this, the attacker might aim to maintain unauthorized access, cover their tracks, and avoid being detected.

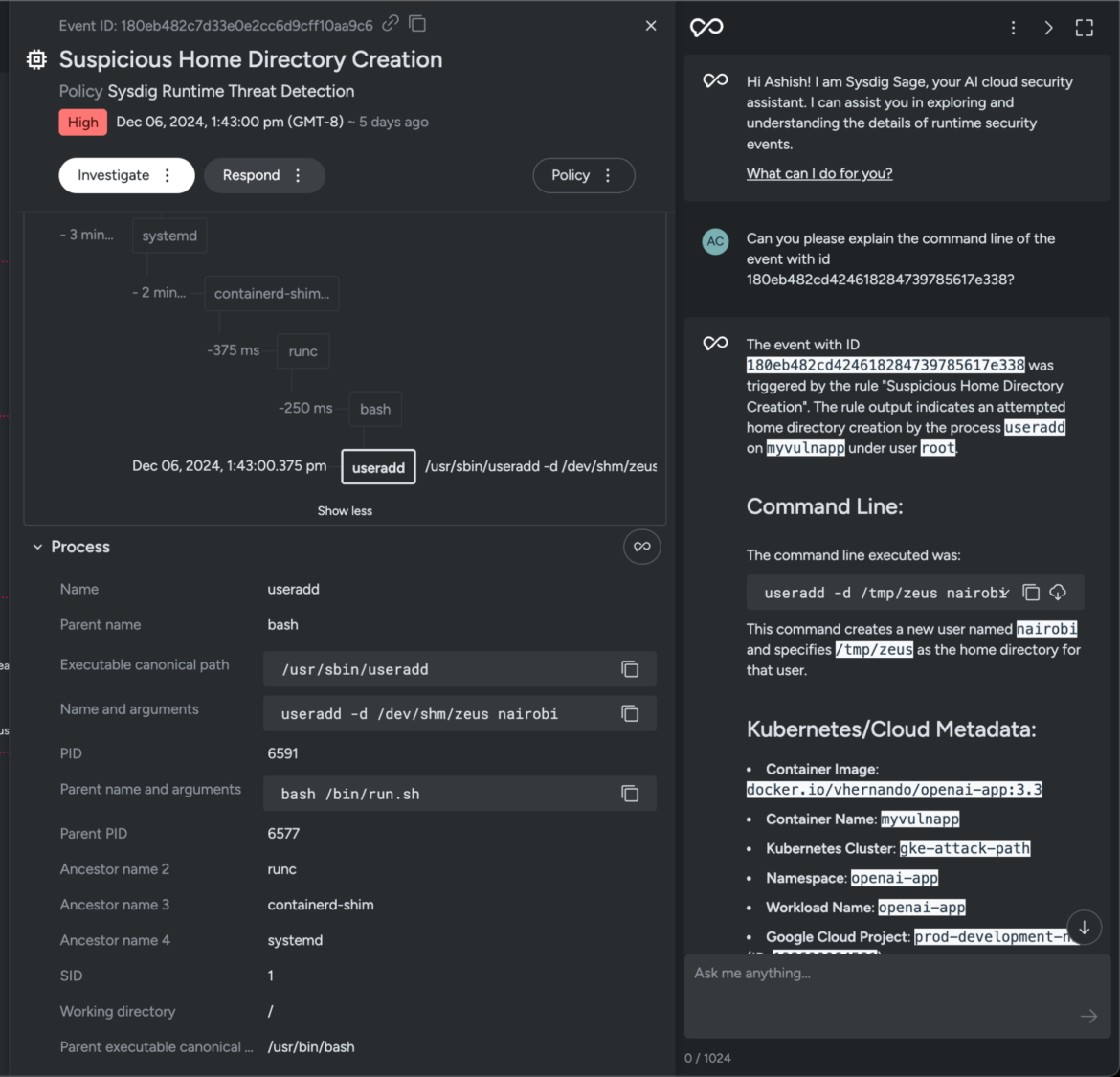

Security teams can even turn to Sysdig Sage to dig deeper and uncover the attacker’s motives. In this case, we used Sysdig Sage to analyze the command executed by the threat actor. It revealed the creation of a new user named “nairobi,” with the home directory set to /dev/shm/zeus, a path inside the memory-backed temporary file system at /dev/shm. A legitimate account would rarely keep its home directory there, so this choice raises red flags and hints at possible malicious activity.
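The same check can be approximated in a few lines. The sketch below parses a command line and reports a home directory that sits under a temporary location. It is not the Falco or Sysdig rule behind the detection, the list of suspicious prefixes is illustrative, and the sample command is a hypothetical reconstruction, since the exact command is not reproduced here.

```python
import shlex
from pathlib import PurePosixPath

# Illustrative list of locations where a home directory would be unusual.
SUSPICIOUS_HOME_PREFIXES = ("/dev/shm", "/tmp", "/var/tmp")


def suspicious_useradd_home(command):
    """Return the home directory if a useradd/adduser command places it
    under a suspicious prefix, otherwise None."""
    try:
        argv = shlex.split(command)
    except ValueError:
        return None  # unbalanced quotes; not handled in this sketch
    if not argv or PurePosixPath(argv[0]).name not in ("useradd", "adduser"):
        return None
    home = None
    for i, arg in enumerate(argv):
        # useradd accepts -d/--home-dir; Debian's adduser accepts --home.
        if arg in ("-d", "--home-dir", "--home") and i + 1 < len(argv):
            home = argv[i + 1]
        elif arg.startswith("--home-dir="):
            home = arg.split("=", 1)[1]
    if home is None:
        return None
    for prefix in SUSPICIOUS_HOME_PREFIXES:
        if home == prefix or home.startswith(prefix + "/"):
            return home
    return None


# Hypothetical command line resembling the event described above.
print(suspicious_useradd_home("useradd -m -d /dev/shm/zeus nairobi"))  # → /dev/shm/zeus
```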

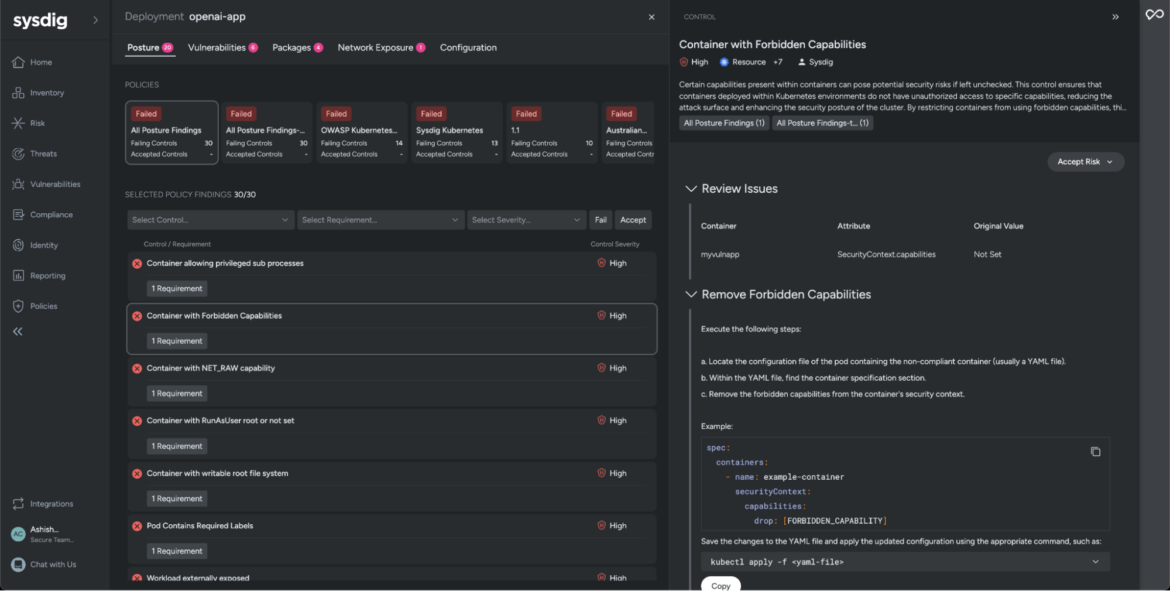

Let’s head back and take a closer look at the workload, reviewing posture misconfigurations that may have enabled the attacker to access and compromise the environment.

Control access

- Implement cloud security posture management (CSPM) to keep your cloud environment secure

- Use cloud infrastructure entitlement management (CIEM) to ensure that permissions are kept to a minimum

- Follow the principle of least privilege, giving users only the level of access they truly need
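As one way to check for least privilege, the sketch below scans a policy document for Allow statements that pair a wildcard action with a wildcard resource. It assumes the AWS IAM JSON policy layout (Statement, Effect, Action, Resource) purely as an example. The statement IDs and the placeholder ARN are hypothetical, and other cloud providers use different formats.

```python
def _as_list(value):
    """IAM allows a single value or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def overly_broad_statements(policy):
    """Return the Sid of each Allow statement that grants a wildcard
    action on every resource."""
    findings = []
    for stmt in _as_list(policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action", []))
        resources = _as_list(stmt.get("Resource", []))
        has_wildcard_action = any(a == "*" or a.endswith(":*") for a in actions)
        if has_wildcard_action and "*" in resources:
            findings.append(stmt.get("Sid", "<no Sid>"))
    return findings


# Hypothetical policy: the first statement is far broader than needed.
example_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "TooBroad", "Effect": "Allow", "Action": "bedrock:*", "Resource": "*"},
        {
            "Sid": "Scoped",
            "Effect": "Allow",
            "Action": ["bedrock:InvokeModel"],
            # Placeholder ARN; substitute a real region and model ID.
            "Resource": "arn:aws:bedrock:REGION::foundation-model/MODEL_ID",
        },
    ],
}
print(overly_broad_statements(example_policy))  # → ['TooBroad']
```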

Enhance your logging and monitoring practices

- Turn on detailed logging for your cloud and system activities

- Keep thorough audit trails to help you track any suspicious behavior

- Set up automated systems to detect anomalies and create quick response plans for incidents

Our investigation uncovers an extensive list of security misconfigurations that likely allowed the creation of the “nairobi” user account and triggered the earlier event we observed. For example, Sysdig highlights that the container has forbidden capabilities that pose security risks if left unchecked.
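A simplified version of this kind of posture check can be written against a Kubernetes container spec. The sketch below inspects the standard securityContext fields (privileged, readOnlyRootFilesystem, capabilities.add). The set of capabilities treated as risky is an illustrative assumption, not Sysdig's policy, and the sample container is hypothetical.

```python
# Illustrative set only; your policy decides which capabilities are forbidden.
RISKY_CAPABILITIES = {"SYS_ADMIN", "NET_ADMIN", "SYS_PTRACE"}


def audit_container(container):
    """Return a list of posture findings for one container spec (a dict)."""
    sc = container.get("securityContext") or {}
    findings = []
    if sc.get("privileged"):
        findings.append("privileged mode")
    if not sc.get("readOnlyRootFilesystem"):
        findings.append("writable root filesystem")
    added = set((sc.get("capabilities") or {}).get("add") or [])
    for cap in sorted(added & RISKY_CAPABILITIES):
        findings.append(f"risky capability {cap}")
    return findings


# Hypothetical container spec with several misconfigurations.
example_container = {
    "name": "openai-app",
    "securityContext": {
        "privileged": True,
        "capabilities": {"add": ["SYS_ADMIN", "CHOWN"]},
    },
}
print(audit_container(example_container))
# → ['privileged mode', 'writable root filesystem', 'risky capability SYS_ADMIN']
```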

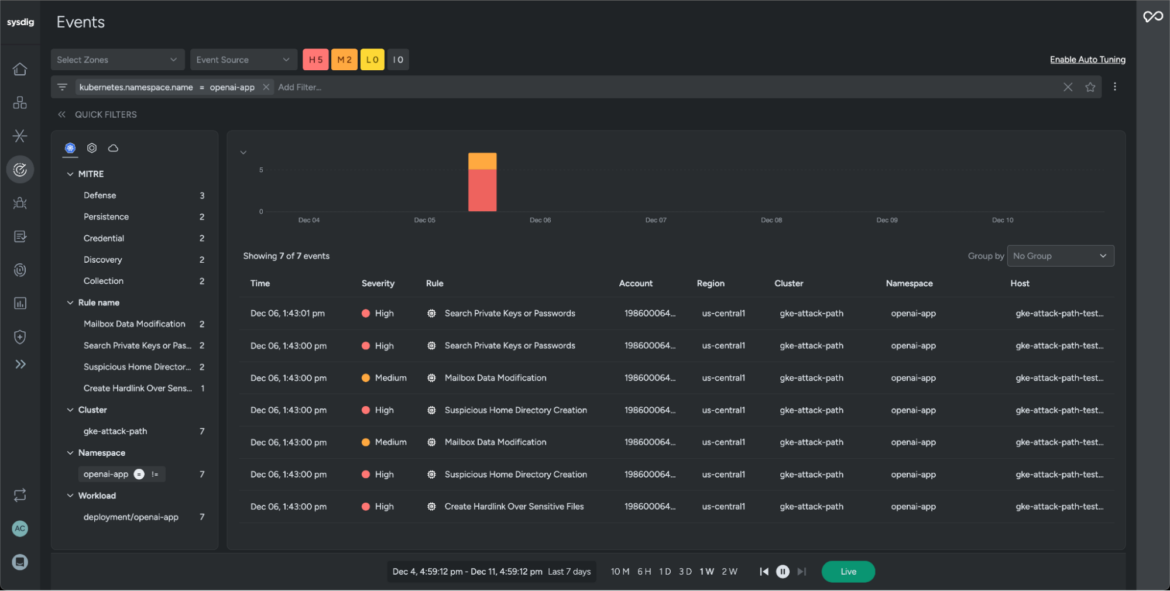

Besides uncovering misconfigurations, Sysdig monitors workload activity and logs a timeline of events leading up to the actual attack. In our scenario, we’ll filter events from the workload openai-app for the past seven days and investigate the process tree.

With Sysdig Secure, security teams can easily analyze the lineage of events, visualize process behavior, understand how processes relate to one another, and uncover suspicious or unexpected actions. This helps to isolate the root cause, group high-impact events, and quickly address blind spots.
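Process lineage boils down to walking parent links. The sketch below rebuilds the chain from a suspicious process back to its root ancestor from a list of process records. The record layout (pid, ppid, cmd) and the sample data are hypothetical and are not Sysdig's event format.

```python
def lineage(processes, pid):
    """Return the command chain from the root ancestor down to pid."""
    by_pid = {p["pid"]: p for p in processes}
    chain = []
    seen = set()  # guards against malformed data with parent cycles
    while pid in by_pid and pid not in seen:
        seen.add(pid)
        proc = by_pid[pid]
        chain.append(proc["cmd"])
        pid = proc["ppid"]
    chain.reverse()
    return chain


# Hypothetical process records captured from a workload.
records = [
    {"pid": 1, "ppid": 0, "cmd": "python app.py"},
    {"pid": 42, "ppid": 1, "cmd": "sh -c <payload>"},
    {"pid": 43, "ppid": 42, "cmd": "useradd -m -d /dev/shm/zeus nairobi"},
    {"pid": 50, "ppid": 1, "cmd": "python worker.py"},
]
for depth, cmd in enumerate(lineage(records, 43)):
    print("  " * depth + cmd)
```

Reading the chain from the top shows which parent spawned the suspicious command, which is usually the quickest route to the root cause.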

Actions:

- Conduct a quick security assessment of your current setup

- Update your AI infrastructure security configurations as needed

- Train your security team on how to spot LLMjacking attempts

- Regularly review and refresh your security protocols

By following these tips, you can help safeguard your organization against LLMjacking, strengthen your LLMs, and keep your AI resources secure.

For more insights, be sure to explore our Sysdig blogs. And if you’d like to connect with our experts, don’t hesitate to reach out — we’d love to hear from you!