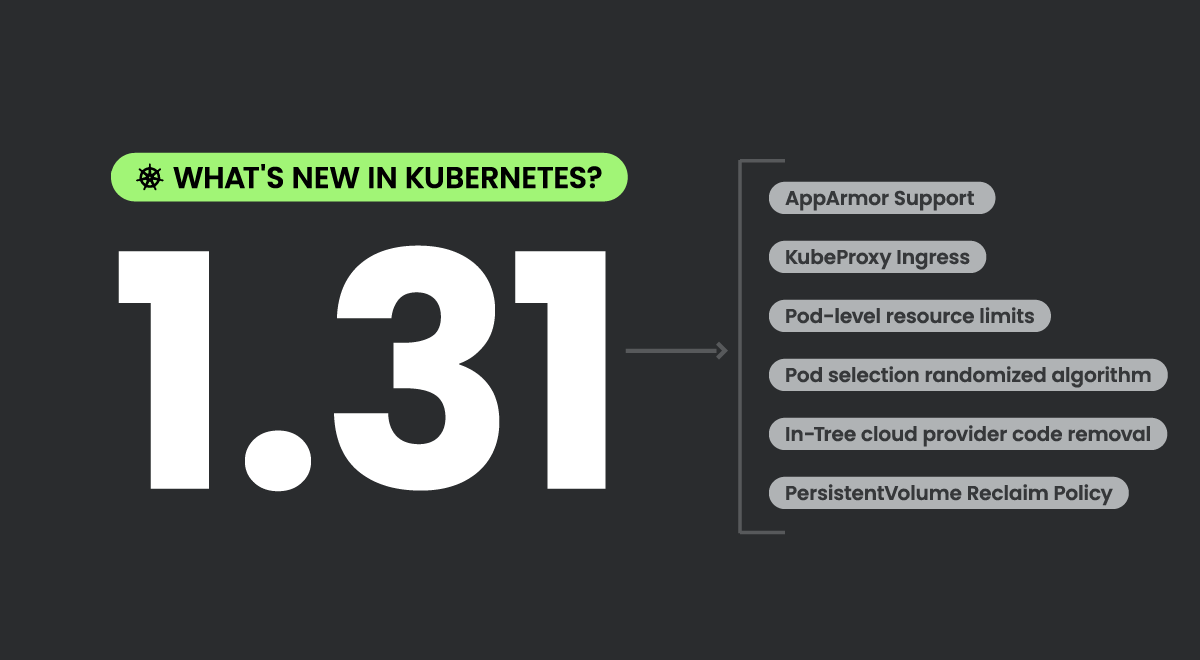

Kubernetes 1.31 is nearly here, and it’s full of exciting major changes to the project! So, what’s new in this upcoming release?

Kubernetes 1.31 brings a plethora of enhancements, including 37 line items tracked as ‘Graduating’ in this release. Of these, 11 enhancements are graduating to stable, including the highly anticipated AppArmor support for Kubernetes, which lets you specify an AppArmor profile for a container or pod in the API and have that profile applied by the container runtime.

34 new alpha features are also making their debut, with a lot of eyes on the initial design to support pod-level resource limits. Security teams will be particularly interested in tracking the progress on this one.

Watch out for major changes such as the improved ingress connectivity reliability for kube-proxy, which now offers better connection draining on terminating Nodes for load balancers that support it.

Further enhancing security, we see Pod-level resource limits moving along from Net New to Alpha. This extends the existing container-level resource constraints so that limits can be declared for a Pod as a whole, balancing operational efficiency with robust security.

There are also numerous quality-of-life improvements that continue the trend of making Kubernetes more user-friendly and efficient, such as a randomized algorithm for Pod selection when downscaling ReplicaSets.

We are buzzing with excitement for this release! There’s plenty to unpack here, so let’s dive deeper into what Kubernetes 1.31 has to offer.

Editor’s pick:

These are some of the changes that look most exciting to us in this release:

#2395 Removing In-Tree Cloud Provider Code

Probably the most exciting advancement in v1.31 is the removal of all in-tree integrations with cloud providers. Since v1.26 there has been a large push to help Kubernetes truly become a vendor-neutral platform. This externalization process removes all cloud-provider-specific code from the k8s.io/kubernetes repository with minimal disruption to end users and developers.

Nigel Douglas – Sr. Open Source Security Researcher

#2644 Always Honor PersistentVolume Reclaim Policy

I like this enhancement a lot as it finally ensures that the PersistentVolume reclaim policy is honored, using a deletion protection finalizer. HonorPVReclaimPolicy is now enabled by default. Finalizers are added to a PersistentVolume to ensure that PersistentVolumes with the Delete reclaim policy are deleted only after the backing storage is deleted.

The newly introduced finalizers kubernetes.io/pv-controller and external-provisioner.volume.kubernetes.io/finalizer are only added to dynamically provisioned volumes within your environment.

Pietro Piutti – Sr. Technical Marketing Manager

#4292 Custom profile in kubectl debug

I’m delighted to see that they have finally introduced a new custom profile option for the kubectl debug command. This feature addresses a challenge teams regularly face when debugging applications built on shell-less base images. By allowing data volumes and other resources to be mounted within the debug container, this enhancement provides a significant security benefit for most organizations, encouraging the adoption of more secure, shell-less base images without sacrificing debugging capabilities.

Thomas Labarussias – Sr. Developer Advocate & CNCF Ambassador

Apps in Kubernetes 1.31

#3017 PodHealthyPolicy for PodDisruptionBudget

Stage: Graduating to Stable

Feature group: sig-apps

Kubernetes 1.31 graduates the PodHealthyPolicy for PodDisruptionBudget (PDB) to stable. PDBs currently serve two purposes: ensuring a minimum number of pods remain available during disruptions and preventing data loss by blocking pod evictions until data is replicated.

The current implementation has issues. Pods that are Running but not Healthy (Ready) may not be evicted even if their number exceeds the PDB threshold, hindering tools like cluster-autoscaler. Additionally, using PDBs to prevent data loss is considered unsafe and not their intended use.

Despite these issues, many users rely on PDBs for both purposes. Therefore, changing the PDB behavior without supporting both use-cases is not viable, especially since Kubernetes lacks alternative solutions for preventing data loss.
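To make the two behaviors concrete, here is a minimal sketch of the eviction decision. It assumes the policy is selected through the PDB's `unhealthyPodEvictionPolicy` field with the values `IfHealthyBudget` and `AlwaysAllow`; the `can_evict` helper and its parameters are hypothetical and only model the decision, not the real eviction API.

```python
def can_evict(pod_ready: bool, current_healthy: int, desired_healthy: int,
              policy: str = "IfHealthyBudget") -> bool:
    """Decide whether evicting one Running pod is allowed under a PDB (illustrative)."""
    if pod_ready:
        # Healthy pods always consume the disruption budget.
        return current_healthy > desired_healthy
    if policy == "AlwaysAllow":
        # Unhealthy pods may be evicted even when the budget is exhausted.
        return True
    # IfHealthyBudget: unhealthy pods go only if the application is not already disrupted.
    return current_healthy >= desired_healthy


# A 3-replica app that needs 2 healthy pods, with only one pod currently Ready:
print(can_evict(False, current_healthy=1, desired_healthy=2))
print(can_evict(False, current_healthy=1, desired_healthy=2, policy="AlwaysAllow"))
```

With `AlwaysAllow`, a stuck, never-Ready pod no longer blocks a node drain, which is the case that tends to trip up tools like cluster-autoscaler.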

#3335 Allow StatefulSet to control start replica ordinal numbering

Stage: Graduating to Stable

Feature group: sig-apps

The goal of this feature is to enable the migration of a StatefulSet across namespaces, clusters, or in segments without disrupting the application. Traditional methods like backup and restore cause downtime, while pod-level migration requires manual rescheduling. Migrating a StatefulSet in slices allows for a gradual and less disruptive migration process by moving only a subset of replicas at a time.
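A slice-by-slice migration can be pictured with a few lines of Python. This is a rough sketch, assuming pods are named `<statefulset>-<ordinal>` and the first ordinal is set through `.spec.ordinals.start`; the `pod_names` helper is hypothetical.

```python
def pod_names(name: str, replicas: int, start: int = 0) -> list:
    """Return the pod names a StatefulSet would own for a given start ordinal."""
    return [f"{name}-{i}" for i in range(start, start + replicas)]


# Migrating a 5-replica StatefulSet "db" in two slices:
# the source keeps ordinals 0-2 while the destination takes over 3-4.
source = pod_names("db", replicas=3, start=0)
destination = pod_names("db", replicas=2, start=3)
print(source)       # ['db-0', 'db-1', 'db-2']
print(destination)  # ['db-3', 'db-4']
```

Because the two sets of names never overlap, each slice can be moved and verified before the next one is touched.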

#3998 Job Success/completion policy

Stage: Graduating to Beta

Feature group: sig-apps

We are excited about the improvement to the Job API, which now allows setting conditions under which an Indexed Job can be declared successful. This is particularly useful for batch workloads like MPI and PyTorch that need to consider only leader indexes for job success. Previously, an indexed job was marked as completed only if all indexes succeeded. Some third-party frameworks, like Kubeflow Training Operator and Flux Operator, have implemented similar success policies. This improvement will enable users to mark jobs as successful based on a declared policy, terminating lingering pods once the job meets the success criteria.
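The sketch below shows how a single success-policy rule might be evaluated. It assumes rules are expressed with `succeededIndexes` (a list such as `0,2-4`) and `succeededCount`, as in the Job API's `successPolicy`; the helper functions are hypothetical and do not reproduce the job controller's code.

```python
from typing import Optional


def parse_indexes(spec: str) -> set:
    """Expand an index list such as "0,2-4" into {0, 2, 3, 4}."""
    indexes = set()
    for part in spec.split(","):
        if "-" in part:
            lo, hi = part.split("-")
            indexes.update(range(int(lo), int(hi) + 1))
        else:
            indexes.add(int(part))
    return indexes


def rule_met(succeeded: set, succeeded_indexes: Optional[str] = None,
             succeeded_count: Optional[int] = None) -> bool:
    """Evaluate one rule against the set of indexes that have succeeded so far."""
    if succeeded_indexes is None and succeeded_count is None:
        raise ValueError("a rule needs succeeded_indexes, succeeded_count, or both")
    if succeeded_indexes is None:
        return len(succeeded) >= succeeded_count
    wanted = parse_indexes(succeeded_indexes)
    if succeeded_count is None:
        return wanted <= succeeded          # every listed index must have succeeded
    return len(wanted & succeeded) >= succeeded_count


# Leader/worker job: only index 0 (the leader) decides success.
print(rule_met({0}, succeeded_indexes="0"))      # True
print(rule_met({1, 2}, succeeded_indexes="0"))   # False
```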

CLI in Kubernetes 1.31

#4006 Transition from SPDY to WebSockets

Stage: Graduating to Beta

Feature group: sig-cli

This enhancement proposes adding a WebSocketExecutor to the kubectl CLI tool, using a new subprotocol version (v5.channel.k8s.io), and creating a FallbackExecutor to handle client/server version discrepancies. The FallbackExecutor first attempts to connect using the WebSocketExecutor, then falls back to the legacy SPDYExecutor if unsuccessful, potentially requiring two request/response trips. Despite the extra roundtrip, this approach is justified because modifying the low-level SPDY and WebSocket libraries for a single handshake would be overly complex, and the additional IO load is minimal in the context of streaming operations. Additionally, as releases progress, the likelihood of a WebSocket-enabled kubectl interacting with an older, non-WebSocket API Server decreases.
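The fallback pattern itself is simple. Here is a minimal sketch in Python (kubectl is written in Go, so this is an illustration of the idea rather than the actual implementation); `UpgradeError` and the two executor functions are hypothetical stand-ins.

```python
from typing import Callable


class UpgradeError(Exception):
    """Raised when the server refuses the protocol upgrade (hypothetical)."""


def fallback_stream(primary: Callable[[], str], secondary: Callable[[], str]) -> str:
    """Try the primary executor; on an upgrade failure, retry with the secondary."""
    try:
        return primary()
    except UpgradeError:
        # Second request/response trip: only taken against older servers.
        return secondary()


def websocket_executor() -> str:
    # Simulates an older API server that does not know the new subprotocol.
    raise UpgradeError("server does not speak v5.channel.k8s.io")


def spdy_executor() -> str:
    return "streaming over SPDY"


print(fallback_stream(websocket_executor, spdy_executor))  # streaming over SPDY
```

Only a failed upgrade triggers the fallback in this sketch; any other error propagates, so real failures are not hidden behind a second attempt.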

#4706 Deprecate and remove kustomize from kubectl

Stage: Net New to Alpha

Feature group: sig-cli

The update was deferred from the Kubernetes 1.31 release. Kustomize was initially integrated into kubectl to enhance declarative support for Kubernetes objects. However, with the development of various customization and templating tools over the years, kubectl maintainers now believe that promoting one tool over others is not appropriate. Decoupling Kustomize from kubectl will allow each project to evolve at its own pace, avoiding issues with mismatched release cycles that can lead to kubectl users working with outdated versions of Kustomize. Additionally, removing Kustomize will reduce the dependency graph and the size of the kubectl binary, addressing some dependency issues that have affected the core Kubernetes project.

#3104 Separate kubectl user preferences from cluster configs

Stage: Net New to Alpha

Feature group: sig-cli

Kubectl, one of the earliest components of the Kubernetes project, upholds a strong commitment to backward compatibility. We aim to let users opt into new features (like delete confirmation), which might otherwise disrupt existing CI jobs and scripts. Although kubeconfig has an underutilized field for preferences, it isn’t ideal for this purpose. New clusters usually generate a new kubeconfig file with credentials and host details, and while these files can be merged or specified by path, we believe server configuration and user preferences should be distinctly separated.

To address these needs, the Kubernetes maintainers proposed introducing a kuberc file for client preferences. This file will be versioned and structured to easily incorporate new behaviors and settings for users. It will also allow users to define kubectl command aliases and default flags. With this change, we plan to deprecate the kubeconfig Preferences field. This separation ensures users can manage their preferences consistently, regardless of the --kubeconfig flag or $KUBECONFIG environment variable.

Kubernetes 1.31 instrumentation

#2305 Metric cardinality enforcement

Stage: Graduating to Stable

Feature group: sig-instrumentation

Metrics turning into memory leaks pose significant issues, especially when they require re-releasing the entire Kubernetes binary to fix. Historically, we’ve tackled these issues inconsistently. For instance, coding mistakes sometimes cause unintended IDs to be used as metric label values.

In other cases, we’ve had to delete metrics entirely due to their incorrect use. More recently, we’ve either removed metric labels or retroactively defined acceptable values for them. Fixing these issues is a manual, labor-intensive, and time-consuming process without a standardized solution.

This stable update should address these problems by enabling metric dimensions to be bound to known sets of values independently of Kubernetes code releases.
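The idea of binding a label to a known set of values can be sketched as follows. The allow-list contents, the metric name, and the `"unexpected"` placeholder are assumptions made for this illustration, and `constrain` is a hypothetical helper rather than part of the Kubernetes metrics library.

```python
from collections import Counter

# Hypothetical allow-list: metric name -> label name -> permitted values.
ALLOWED = {"http_requests_total": {"code": {"200", "404", "500"}}}


def constrain(metric: str, labels: dict) -> dict:
    """Replace label values outside the allow-list with a fixed placeholder."""
    allowed = ALLOWED.get(metric, {})
    return {
        k: (v if (k not in allowed or v in allowed[k]) else "unexpected")
        for k, v in labels.items()
    }


# A coding mistake leaks request IDs into the "code" label.
counts = Counter()
for code in ["200", "404", "req-8f3a", "req-91bc", "200"]:
    labels = constrain("http_requests_total", {"code": code})
    counts[labels["code"]] += 1

print(dict(counts))  # {'200': 2, '404': 1, 'unexpected': 2}
```

However many distinct IDs leak in, the number of time series stays bounded by the allow-list plus one placeholder, and the list can be changed through configuration instead of a new binary.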

Network in Kubernetes 1.31

#3836 Ingress Connectivity Reliability Improvement for Kube-Proxy

Stage: Graduating to Stable

Feature group: sig-network

This enhancement finally introduces a more reliable mechanism for handling ingress connectivity for endpoints on terminating nodes and nodes with unhealthy Kube-proxies, focusing on Services that use externalTrafficPolicy: Cluster (eTP:Cluster). Currently, Kube-proxy’s response is based on its healthz state for eTP:Cluster services and the presence of a Ready endpoint for eTP:Local services. This KEP addresses the former.

The proposed changes are:

- Connection Draining for Terminating Nodes:

Kube-proxy will use the ToBeDeletedByClusterAutoscaler taint to identify terminating nodes and fail its healthz check to signal load balancers for connection draining. Other signals like .spec.unschedulable were considered but deemed less direct.

- Addition of /livez Path:

Kube-proxy will add a /livez endpoint to its health check server to reflect the old healthz semantics, indicating whether data-plane programming is stale.

- Cloud Provider Health Checks:

While not aligning cloud provider health checks for eTP:Cluster services, the KEP suggests creating a document on Kubernetes’ official site to guide and share knowledge with cloud providers for better health checking practices.
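The split between the two health endpoints described above can be sketched like this. It is a simplified model, assuming HTTP 200 for healthy and 503 for unhealthy; `ProxyState` and both functions are hypothetical and ignore details such as how staleness is measured.

```python
from dataclasses import dataclass, field

DELETION_TAINT = "ToBeDeletedByClusterAutoscaler"


@dataclass
class ProxyState:
    dataplane_stale: bool = False                     # proxy rules failed to sync recently
    node_taints: set = field(default_factory=set)     # taint keys present on the Node


def livez(state: ProxyState) -> int:
    """Old healthz semantics: only reports whether data-plane programming is stale."""
    return 503 if state.dataplane_stale else 200


def healthz(state: ProxyState) -> int:
    """New semantics: also fail on a terminating node so load balancers drain it."""
    if DELETION_TAINT in state.node_taints:
        return 503
    return livez(state)


terminating = ProxyState(node_taints={DELETION_TAINT})
print(healthz(terminating), livez(terminating))  # 503 200
```

A terminating node therefore stops receiving new connections from the load balancer while kube-proxy itself is still reported as alive.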

#4444 Traffic Distribution to Services

Stage: Graduating to Beta

Feature group: sig-network

To enhance traffic routing in Kubernetes, this KEP proposes adding a new field, trafficDistribution, to the Service specification. This field allows users to specify routing preferences, offering more control and flexibility than the earlier topologyKeys mechanism. trafficDistribution will provide a hint for the underlying implementation to consider in routing decisions without offering strict guarantees.

The new field will support values like PreferClose, indicating a preference for routing traffic to topologically proximate endpoints. The absence of a value indicates no specific routing preference, leaving the decision to the implementation. This change aims to provide enhanced user control, standard routing preferences, flexibility, and extensibility for innovative routing strategies.
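One way an implementation might interpret the hint is sketched below. It assumes "topologically proximate" means "same zone" and that traffic falls back to all endpoints when the client's zone has none; `pick_endpoints` and the endpoint dictionaries are hypothetical.

```python
from typing import Optional


def pick_endpoints(endpoints: list, client_zone: str,
                   traffic_distribution: Optional[str] = None) -> list:
    """Return the candidate endpoints for a client located in `client_zone`."""
    if traffic_distribution == "PreferClose":
        same_zone = [e for e in endpoints if e["zone"] == client_zone]
        if same_zone:
            return same_zone
    # No preference, or nothing nearby: every endpoint is a candidate.
    return endpoints


endpoints = [
    {"ip": "10.1.0.4", "zone": "zone-a"},
    {"ip": "10.1.1.7", "zone": "zone-b"},
]
print(pick_endpoints(endpoints, "zone-a", "PreferClose"))  # [{'ip': '10.1.0.4', 'zone': 'zone-a'}]
print(len(pick_endpoints(endpoints, "zone-c", "PreferClose")))  # 2
```

The fallback branch is what makes this a preference and not a guarantee: traffic is never dropped just because no nearby endpoint exists.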

#1880 Multiple Service CIDRs

Stage: Graduating to Beta

Feature group: sig-network

This proposal introduces a new allocator logic using two new API objects: ServiceCIDR and IPAddress, allowing users to dynamically increase available Service IPs by creating new ServiceCIDRs. The allocator will automatically consume IPs from any available ServiceCIDR, similar to adding more disks to a storage system to increase capacity.

To maintain simplicity, backward compatibility, and avoid conflicts with other APIs like Gateway APIs, several constraints are added:

- ServiceCIDR is immutable after creation.

- ServiceCIDR can only be deleted if no Service IPs are associated with it.

- Overlapping ServiceCIDRs are allowed.

- The API server ensures a default ServiceCIDR exists to cover service CIDR flags and the “kubernetes.default” Service.

- All IPAddresses must belong to a defined ServiceCIDR.

- Every Service with a ClusterIP must have an associated IPAddress object.

- A ServiceCIDR being deleted cannot allocate new IPs.

This creates a one-to-one relationship between Service and IPAddress, and a one-to-many relationship between ServiceCIDR and IPAddress. Overlapping ServiceCIDRs are merged in memory, with IPAddresses coming from any ServiceCIDR that includes that IP. The new allocator logic can also be used by other APIs, such as the Gateway API, enabling future administrative and cluster-wide operations on Service ranges.
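The "add more disks" analogy can be illustrated with a toy allocator built on Python's `ipaddress` module. This is a deliberately simplified sketch: the `Allocator` class is hypothetical, it uses tiny /30 ranges, and it applies the deletion rule from the list above without the extra handling needed for overlapping ranges.

```python
import ipaddress


class Allocator:
    """Toy Service IP allocator that draws from any registered ServiceCIDR."""

    def __init__(self):
        self.cidrs = {}       # ServiceCIDR name -> network
        self.allocated = {}   # Service name -> IP (one-to-one)

    def add_cidr(self, name: str, cidr: str) -> None:
        self.cidrs[name] = ipaddress.ip_network(cidr)

    def allocate(self, service: str):
        for net in self.cidrs.values():
            for ip in net.hosts():
                if ip not in self.allocated.values():
                    self.allocated[service] = ip
                    return ip
        raise RuntimeError("no free Service IPs in any ServiceCIDR")

    def delete_cidr(self, name: str) -> None:
        net = self.cidrs[name]
        if any(ip in net for ip in self.allocated.values()):
            raise RuntimeError(f"ServiceCIDR {name} still has Service IPs")
        del self.cidrs[name]


alloc = Allocator()
alloc.add_cidr("default", "10.0.0.0/30")   # only two usable addresses
first = alloc.allocate("svc-a")
second = alloc.allocate("svc-b")
alloc.add_cidr("extra", "10.0.1.0/30")     # grow capacity without touching "default"
third = alloc.allocate("svc-c")
print(first, second, third)  # 10.0.0.1 10.0.0.2 10.0.1.1
```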

Kubernetes 1.31 nodes

#2400 Node Memory Swap Support

Stage: Graduating to Stable

Feature group: sig-node

This enhancement integrates swap memory support into Kubernetes, addressing two key user groups: node administrators who want swap for performance tuning and app developers whose applications require it.

The focus was to facilitate controlled swap use on a node level, with the kubelet enabling Kubernetes workloads to utilize swap space under specific configurations. The ultimate goal is to enhance Linux node operation with swap, allowing administrators to determine swap usage for workloads, initially not permitting individual workloads to set their own swap limits.

#4569 Move cgroup v1 support into maintenance mode

Stage: Net New to Stable

Feature group: sig-node

The proposal aims to transition Kubernetes’ cgroup v1 support into maintenance mode while encouraging users to adopt cgroup v2. Although cgroup v1 support won’t be removed immediately, its deprecation and eventual removal will be addressed in a future KEP. The Linux kernel community and major distributions are focusing on cgroup v2 due to its enhanced functionality, consistent interface, and improved scalability. Consequently, Kubernetes must align with this shift to stay compatible and benefit from cgroup v2’s advancements.

To support this transition, the proposal includes several goals. First, cgroup v1 will receive no new features, marking its functionality as complete and stable. End-to-end testing will be maintained to ensure the continued validation of existing features. The Kubernetes community may provide security fixes for critical CVEs related to cgroup v1 as long as the release is supported. Major bugs will be evaluated and fixed if feasible, although some issues may remain unresolved due to dependency constraints.

Migration support will be offered to help users transition from cgroup v1 to v2. Additionally, efforts will be made to enhance cgroup v2 support by addressing all known bugs, ensuring it is reliable and functional enough to encourage users to switch. This proposal reflects the broader ecosystem’s movement towards cgroup v2, highlighting the necessity for Kubernetes to adapt accordingly.

#24 AppArmor Support

Stage: Graduating to Stable

Feature group: sig-node

Adding AppArmor support to Kubernetes marks a significant enhancement in the security posture of containerized workloads. AppArmor is a Linux kernel security module that allows system admins to restrict certain capabilities of a program using profiles attached to specific applications or containers. By integrating AppArmor into Kubernetes, developers can now define security policies directly within an app config.

The initial implementation of this feature would allow for specifying an AppArmor profile within the Kubernetes API for individual containers or entire pods. This profile, once defined, would be enforced by the container runtime, ensuring that the container’s actions are restricted according to the rules defined in the profile. This capability is crucial for running secure and confined applications in a multi-tenant environment, where a compromised container could potentially affect other workloads or the underlying host.
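As a rough illustration, the manifest below is written as a Python dictionary (Kubernetes also accepts JSON manifests). It assumes the profile is set through the `appArmorProfile` field of `securityContext`, with a `type` of `RuntimeDefault`, `Localhost`, or `Unconfined`, and that a container-level setting takes precedence over the pod-level one. The image names, the `k8s-agent` profile name, and the `effective_apparmor` helper are hypothetical.

```python
import json
from typing import Optional

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "confined"},
    "spec": {
        # Pod-level default applied to every container.
        "securityContext": {"appArmorProfile": {"type": "RuntimeDefault"}},
        "containers": [
            {"name": "app", "image": "registry.example/app:1.0"},
            {
                "name": "agent",
                "image": "registry.example/agent:1.0",
                # Container-level override: a profile already loaded on the node.
                "securityContext": {
                    "appArmorProfile": {"type": "Localhost", "localhostProfile": "k8s-agent"}
                },
            },
        ],
    },
}


def effective_apparmor(pod: dict, container_name: str) -> Optional[dict]:
    """Return the profile that applies to a container (container overrides pod)."""
    spec = pod["spec"]
    for container in spec["containers"]:
        if container["name"] == container_name:
            own = container.get("securityContext", {}).get("appArmorProfile")
            return own or spec.get("securityContext", {}).get("appArmorProfile")
    raise KeyError(container_name)


print(json.dumps(effective_apparmor(pod, "app")))    # {"type": "RuntimeDefault"}
print(json.dumps(effective_apparmor(pod, "agent")))
```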

Scheduling in Kubernetes

#3633 Introduce MatchLabelKeys and MismatchLabelKeys to PodAffinity and PodAntiAffinity

Stage: Graduating to Beta

Feature group: sig-scheduling

This was tracked for Code Freeze as of July 23rd. This enhancement finally introduces MatchLabelKeys and MismatchLabelKeys for PodAffinityTerm to refine PodAffinity and PodAntiAffinity, enabling more precise control over Pod placements during scenarios like rolling upgrades.

By allowing users to specify the scope for evaluating Pod co-existence, it addresses scheduling challenges that arise when new and old Pod versions are present simultaneously, particularly in saturated or idle clusters. This enhancement aims to improve scheduling effectiveness and cluster resource utilization.
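The rolling-upgrade case can be sketched as follows. The sketch assumes each key listed in `matchLabelKeys` is combined with the incoming pod's own label value as an `In` requirement (and `mismatchLabelKeys` as `NotIn`), and that keys missing from the pod's labels are ignored. Both helpers are hypothetical and handle only the `In` and `NotIn` operators.

```python
def expand_selector(term: dict, incoming_labels: dict) -> list:
    """Return the selector requirements after applying match/mismatch label keys."""
    reqs = list(term.get("labelSelector", {}).get("matchExpressions", []))
    for field_name, operator in (("matchLabelKeys", "In"), ("mismatchLabelKeys", "NotIn")):
        for key in term.get(field_name, []):
            if key in incoming_labels:  # keys missing from the pod's labels are ignored
                reqs.append({"key": key, "operator": operator,
                             "values": [incoming_labels[key]]})
    return reqs


def matches(reqs: list, labels: dict) -> bool:
    """Check an existing pod's labels against In/NotIn requirements."""
    for req in reqs:
        present = labels.get(req["key"]) in req["values"]
        if (req["operator"] == "In") != present:
            return False
    return True


term = {
    "labelSelector": {"matchExpressions": [{"key": "app", "operator": "In", "values": ["web"]}]},
    "matchLabelKeys": ["pod-template-hash"],
}
new_pod = {"app": "web", "pod-template-hash": "v2"}
reqs = expand_selector(term, new_pod)

print(matches(reqs, {"app": "web", "pod-template-hash": "v1"}))  # False: old revision ignored
print(matches(reqs, {"app": "web", "pod-template-hash": "v2"}))  # True: same revision counts
```

Pods from the previous revision no longer count toward the term, so an anti-affinity rule does not block new Pods just because old ones are still running.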

Kubernetes storage

#3762 PersistentVolume last phase transition time

Stage: Graduating to Stable

Feature group: sig-storage

The Kubernetes maintainers plan to update the API server to support a new timestamp field for PersistentVolumes, which will record when a volume transitions to a different phase. This field will be set to the current time for all newly created volumes and those changing phases. While this timestamp is intended solely as a convenience for cluster administrators, it will enable them to list and sort PersistentVolumes based on the transition times, aiding in manual cleanup and management.

This change addresses issues experienced by users with the Delete reclaim policy, which led to data loss, prompting many to revert to the safer Retain policy. With the Retain policy, unclaimed volumes are marked as Released, and over time, these volumes accumulate. The timestamp field will help admins identify when volumes last transitioned to the Released phase, facilitating easier cleanup.

Moreover, the generic recording of timestamps for all phase transitions will provide valuable metrics and insights, such as measuring the time between Pending and Bound phases. The goals are to introduce this timestamp field and update it with every phase transition, without implementing any volume health monitoring or additional actions based on the timestamps.
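A cleanup helper built on this timestamp might look like the sketch below. It assumes the field is exposed as `status.lastPhaseTransitionTime` in RFC 3339 format; the volume data is made up and `stale_released` is a hypothetical helper that works on already-fetched objects.

```python
from datetime import datetime, timedelta, timezone


def transition_time(pv: dict) -> datetime:
    """Parse the last phase transition timestamp of a PersistentVolume."""
    raw = pv["status"]["lastPhaseTransitionTime"]
    return datetime.fromisoformat(raw.replace("Z", "+00:00"))


def stale_released(pvs: list, now: datetime, older_than: timedelta) -> list:
    """Names of Released volumes past the cutoff, oldest transition first."""
    stale = [pv for pv in pvs
             if pv["status"]["phase"] == "Released"
             and now - transition_time(pv) > older_than]
    return [pv["metadata"]["name"] for pv in sorted(stale, key=transition_time)]


pvs = [
    {"metadata": {"name": "pv-a"},
     "status": {"phase": "Released", "lastPhaseTransitionTime": "2024-05-01T10:00:00Z"}},
    {"metadata": {"name": "pv-b"},
     "status": {"phase": "Bound", "lastPhaseTransitionTime": "2024-01-15T08:30:00Z"}},
    {"metadata": {"name": "pv-c"},
     "status": {"phase": "Released", "lastPhaseTransitionTime": "2024-03-20T12:00:00Z"}},
    {"metadata": {"name": "pv-d"},
     "status": {"phase": "Released", "lastPhaseTransitionTime": "2024-07-30T09:00:00Z"}},
]
now = datetime(2024, 8, 1, tzinfo=timezone.utc)
print(stale_released(pvs, now, timedelta(days=30)))  # ['pv-c', 'pv-a']
```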

#3751 Kubernetes VolumeAttributesClass ModifyVolume

Stage: Graduating to Beta

Feature group: sig-storage

The proposal introduces a new Kubernetes API resource, VolumeAttributesClass, along with an admission controller and a volume attributes protection controller. This resource will allow users to manage volume attributes, such as IOPS and throughput, independently from capacity. The current immutability of StorageClass.parameters necessitates this new resource, as it permits updates to volume attributes without directly using cloud provider APIs, simplifying storage resource management.

VolumeAttributesClass will enable specifying and modifying volume attributes both at creation and for existing volumes, ensuring changes are non-disruptive to workloads. Conflicts between StorageClass.parameters and VolumeAttributesClass.parameters will result in errors from the driver.

The primary goals include providing a cloud-provider-independent specification for volume attributes, enforcing these attributes through the storage, and allowing workload developers to modify them non-disruptively. The proposal does not address OS-level IO attributes, inter-pod volume attributes, or scheduling based on node-specific volume attributes limits, though these may be considered for future extensions.

#3314 CSI Differential Snapshot for Block Volumes

Stage: Net New to Alpha

Feature group: sig-storage

This enhancement was removed from the Kubernetes 1.31 milestone. It aims at enhancing the CSI specification by introducing a new optional CSI SnapshotMetadata gRPC service. This service allows Kubernetes to retrieve metadata on allocated blocks of a single snapshot or the changed blocks between snapshots of the same block volume. Implemented by the community-provided external-snapshot-metadata sidecar, this service must be deployed by a CSI driver. Kubernetes backup applications can access snapshot metadata through a secure TLS gRPC connection, which minimizes load on the Kubernetes API server.

The external-snapshot-metadata sidecar communicates with the CSI driver’s SnapshotMetadata service over a private UNIX domain socket. The sidecar handles tasks such as validating the Kubernetes authentication token, authorizing the backup application, validating RPC parameters, and fetching necessary provisioner secrets. The CSI driver advertises the existence of the SnapshotMetadata service to backup applications via a SnapshotMetadataService CR, containing the service’s TCP endpoint, CA certificate, and audience string for token authentication.

Backup applications must obtain an authentication token using the Kubernetes TokenRequest API with the service’s audience string before accessing the SnapshotMetadata service. They should establish trust with the specified CA and use the token in gRPC calls to the service’s TCP endpoint. This setup ensures secure, efficient metadata retrieval without overloading the Kubernetes API server.

The goals of this enhancement are to provide a secure CSI API for identifying allocated and changed blocks in volume snapshots, and to efficiently relay large amounts of snapshot metadata from the storage provider. This API is an optional component of the CSI framework.

Other enhancements in Kubernetes 1.31

#4193 Bound service account token improvements

Stage: Graduating to Beta

Feature group: sig-auth

The proposal aims to enhance Kubernetes security by embedding the bound Node information in tokens and extending token functionalities. The kube-apiserver will be updated to automatically include the name and UID of the Node associated with a Pod in the generated tokens during a TokenRequest. This requires adding a Getter for Node objects to fetch the Node’s UID, similar to existing processes for Pod and Secret objects.

Additionally, the TokenRequest API will be extended to allow tokens to be bound directly to Node objects, ensuring that when a Node is deleted, the associated token is invalidated. The SA authenticator will be modified to verify tokens bound to Node objects by checking the existence of the Node and validating the UID in the token. This maintains the current behavior for Pod-bound tokens while enforcing new validation checks for Node-bound tokens from the start.

Furthermore, each issued JWT will include a UUID (JTI) to trace the requests made to the apiserver using that token, recorded in audit logs. This involves generating the UUID during token issuance and extending audit log entries to capture this identifier, enhancing traceability and security auditing.
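The Node check can be sketched with the standard library alone. The example assumes the Node name and UID sit under a `node` entry of the token's `kubernetes.io` claim and that the identifier is carried in `jti`. The token is an unsigned fake built for the demo, signatures are not verified, and all helper names and values are hypothetical.

```python
import base64
import json


def fake_token(claims: dict) -> str:
    """Build an UNSIGNED token-shaped string for demonstration only."""
    segment = base64.urlsafe_b64encode(json.dumps(claims).encode()).rstrip(b"=").decode()
    return f"header.{segment}.signature"


def jwt_claims(token: str) -> dict:
    """Decode the payload segment of a JWT WITHOUT verifying its signature."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)   # restore stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(payload))


def node_binding_valid(claims: dict, nodes: dict) -> bool:
    """Check that the Node named in the token still exists with the same UID."""
    node = claims.get("kubernetes.io", {}).get("node")
    if node is None:
        return True                        # token carries no Node information
    return nodes.get(node["name"]) == node["uid"]


token = fake_token({
    "jti": "7a1f0c9e-demo",
    "kubernetes.io": {
        "namespace": "default",
        "node": {"name": "node-1", "uid": "uid-123"},
        "pod": {"name": "web-0", "uid": "uid-456"},
    },
})
claims = jwt_claims(token)
print(claims["jti"])                                         # 7a1f0c9e-demo
print(node_binding_valid(claims, {"node-1": "uid-123"}))     # True
print(node_binding_valid(claims, {"node-1": "uid-999"}))     # False: Node was recreated
```

Comparing the UID as well as the name is what invalidates the token when a Node is deleted and a new one later reuses the same name.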

#3962 Mutating Admission Policies

Stage: Net New to Alpha

Feature group: sig-api-machinery

Continuing the work started in KEP-3488, the project maintainers have proposed adding mutating admission policies using CEL expressions as an alternative to mutating admission webhooks. This builds on the API for validating admission policies established in KEP-3488. The approach leverages CEL’s object instantiation and Server Side Apply’s merge algorithms to perform mutations.

The motivation for this enhancement stems from the simplicity needed for common mutating operations, such as setting labels or adding sidecar containers, which can be efficiently expressed in CEL. This reduces the complexity and operational overhead of managing webhooks. Additionally, CEL-based mutations offer advantages such as allowing the kube-apiserver to introspect mutations and optimize the order of policy applications, minimizing reinvocation needs. In-process mutation is also faster compared to webhooks, making it feasible to re-run mutations to ensure consistency after all operations are applied.

The goals include providing a viable alternative to mutating webhooks for most use cases, enabling policy frameworks without webhooks, offering an out-of-tree implementation for compatibility with older Kubernetes versions, and providing core functionality as a library for use in GitOps, CI/CD pipelines, and auditing scenarios.

#3715 Elastic Indexed Jobs

Stage: Graduating to Stable

Feature group: sig-apps

Also graduating to Stable, this feature allows spec.completions to be mutated on Indexed Jobs, provided it is updated together with spec.parallelism and the two values are equal. The success and failure semantics remain unchanged for jobs that do not alter spec.completions. For jobs that do, failures always count against the job’s backoffLimit, even if spec.completions is scaled down and the failed pods fall outside the new range. The status.Failed count will not decrease, but status.Succeeded will update to reflect successful indexes within the new range. If a previously successful index is out of range due to scaling down and then brought back into range by scaling up, the index will restart.
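The update rule and the way the succeeded count follows the new range can be sketched as below. This is a simplified model, assuming a change to `spec.completions` is accepted only when the new value equals `spec.parallelism`; both helpers are hypothetical and not the API server's validation code.

```python
def validate_completions_update(old_spec: dict, new_spec: dict) -> None:
    """Reject a completions change unless it equals the new parallelism."""
    if new_spec["completions"] == old_spec["completions"]:
        return
    if new_spec["completions"] != new_spec["parallelism"]:
        raise ValueError("spec.completions may only change together with, "
                         "and equal to, spec.parallelism")


def succeeded_in_range(succeeded_indexes: set, completions: int) -> int:
    """Count succeeded indexes that still fall inside [0, completions)."""
    return sum(1 for index in succeeded_indexes if index < completions)


old = {"completions": 4, "parallelism": 4}
validate_completions_update(old, {"completions": 2, "parallelism": 2})  # accepted

# Indexes 0, 1 and 3 had succeeded; after scaling down to 2, index 3 is out of range.
print(succeeded_in_range({0, 1, 3}, completions=4))  # 3
print(succeeded_in_range({0, 1, 3}, completions=2))  # 2
```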

If you liked this, you might want to check out our previous ‘What’s new in Kubernetes’ editions:

- Kubernetes 1.30 – What’s new?

- Kubernetes 1.27 – What’s new?

- Kubernetes 1.26 – What’s new?

- Kubernetes 1.25 – What’s new?

- Kubernetes 1.24 – What’s new?

- Kubernetes 1.23 – What’s new?

- Kubernetes 1.22 – What’s new?

- Kubernetes 1.21 – What’s new?

- Kubernetes 1.20 – What’s new?

- Kubernetes 1.19 – What’s new?

- Kubernetes 1.18 – What’s new?

- Kubernetes 1.17 – What’s new?

- Kubernetes 1.16 – What’s new?

- Kubernetes 1.15 – What’s new?

- Kubernetes 1.14 – What’s new?

- Kubernetes 1.13 – What’s new?

- Kubernetes 1.12 – What’s new?

Get involved with the Kubernetes project:

- Visit the project homepage

- Check out the Kubernetes project on GitHub

- Get involved with the Kubernetes community

- Meet the maintainers on the Kubernetes Slack

- Follow @KubernetesIO on Twitter