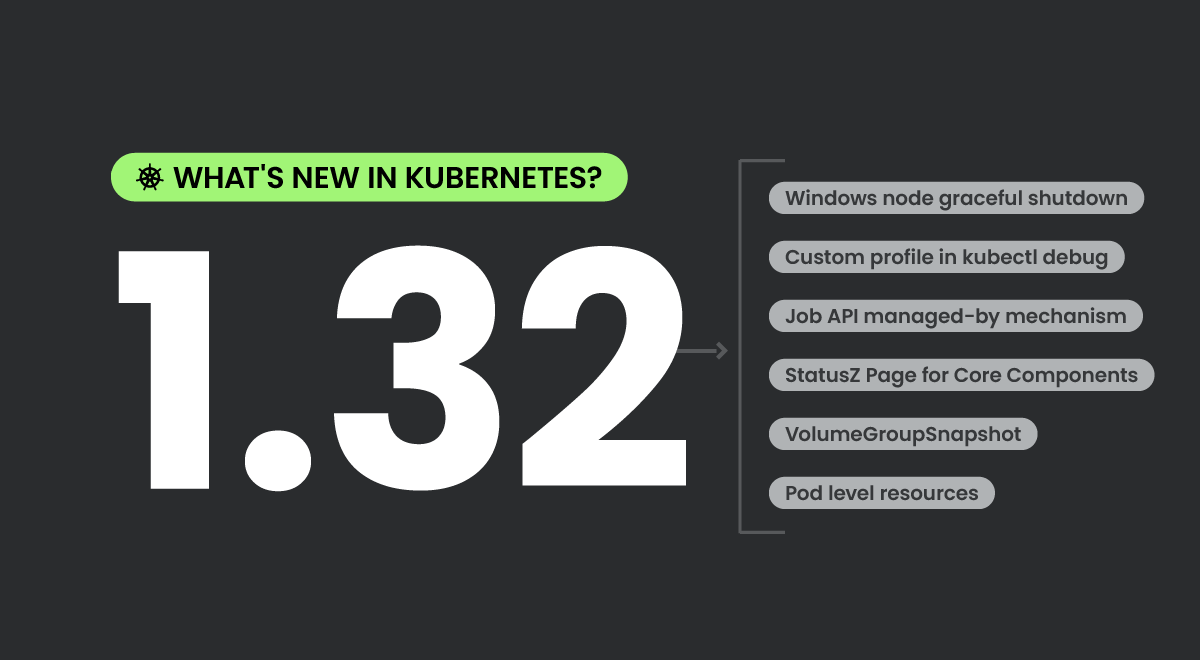

Kubernetes 1.32 is right around the corner, and there are quite a lot of changes ready for the Holiday Season! So, what's new in 1.32?

Kubernetes 1.32 brings a whole bunch of useful enhancements, including 39 changes tracked as 'Graduating' in this release. Of these, 20 enhancements are graduating to stable, including the ability to automatically delete the PVCs created by a StatefulSet once their volumes are no longer in use, which eases the management of StatefulSets that don't live indefinitely.

37 new alpha features are also making their debut, with a fair bit of conversation around the ability to fine-tune CrashLoopBackOff. Among other benefits, platform engineers will now be able to tune their pod restart backoff logic to better match the actual load it creates. This could help meet emerging use cases, such as quantifying the risks to node stability that often result from decreased backoff configurations.

We're thrilled about this release and all it brings! Let's take a closer look at the exciting features Kubernetes 1.32 has in store.

Kubernetes 1.32 – Editor's pick:

These are some of the changes that look most exciting to us in this release:

#4802 Windows node gracefully shutdown

One significant enhancement in Kubernetes v1.32 that I'm looking forward to is the improvement to pod termination during Windows node shutdowns, addressing a long-standing gap in the pod lifecycle. Previously, when a node shut down, pods were often terminated abruptly, bypassing critical lifecycle events like pre-stop hooks. This could lead to issues for workloads relying on graceful termination to clean up resources or save state. With this update, the kubelet on Windows nodes will become aware of underlying node shutdown events and initiate a proper shutdown sequence for pods, ensuring they are terminated as intended by their authors. This change not only improves reliability and workload consistency but does so in a cloud-provider agnostic way.

Nigel Douglas – OSS Security Researcher

#2644 Always Honor PersistentVolume Reclaim Policy

My personal favourite enhancement in K8s v1.32 addresses a critical gap in storage management. This improvement ensures that the reclaim policies associated with Persistent Volumes (PVs) are consistently honored. Previously, reclaim policies could be bypassed when PVs were deleted before their associated Persistent Volume Claims (PVCs), resulting in leaked storage resources in external infrastructure. This behavior created inefficiencies and required manual intervention to clean up unused storage.

By introducing finalizers for PVs, like kubernetes.io/pv-controller or external-provisioner.volume.kubernetes.io/finalizer, this enhancement ensures that the PV's lifecycle is managed more reliably. Whether using CSI drivers or in-tree plugins, the reclaim workflow is now robust against deletion timing issues, preventing resource leaks and aligning with user expectations. This improvement not only simplifies storage management but also enhances reliability and trust in Kubernetes' handling of stateful workloads, benefiting administrators and developers alike. Introducing the change behind a feature gate ensures a smooth transition, minimizing risks while phasing out the older, inconsistent behavior.

Alex Boylan – Senior Escalation Management

#4292 Custom profile in kubectl debug

As a software engineer, this enhancement to the kubectl debug command with custom profiling support significantly streamlines my debugging workflows by allowing tailored configurations. Instead of relying on predefined profiles or manually patching pod specifications, I can now define and reuse custom JSON profiles to include environment variables, volume mounts, security contexts, and other settings specific to my debugging needs. This flexibility saves time, reduces repetitive tasks, and eliminates the clutter of additional command flags. Ultimately, it makes troubleshooting Kubernetes applications more efficient and aligns the debugging process with the unique requirements of my workloads.

Shane Dempsey – Staff Software Engineer
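To make the idea of a custom profile concrete, here is a minimal sketch of what one could contain. A custom profile is a partial container spec; the environment variable, the added capability, and the file name `debug-profile.json` are made-up values for illustration, not anything prescribed by the KEP.

```python
import json

# Hypothetical custom profile: a partial container spec that is applied on top
# of the debug container. Every name and value here is illustrative.
custom_profile = {
    "env": [{"name": "LOG_LEVEL", "value": "debug"}],
    "securityContext": {
        "capabilities": {"add": ["NET_ADMIN"]},
        "runAsNonRoot": False,
    },
}

profile_json = json.dumps(custom_profile, indent=2)
print(profile_json)  # save this output as debug-profile.json
```

Saved to a file, the profile can be passed with the `--custom` flag, for example `kubectl debug -it my-pod --image=busybox --custom=debug-profile.json` (the pod and image names are placeholders).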

Apps in Kubernetes 1.32

#1847 Auto remove PVCs created by StatefulSet

Stage: Graduating to Stable

Feature group: sig-apps

The Kubernetes 1.32 release promotes to stable an opt-in feature for automatically deleting PVCs created by StatefulSets, offering a significant improvement in resource management. Previously, PVCs associated with StatefulSets were not deleted when the StatefulSet itself was removed, leading to manual cleanup and potential resource wastage. This enhancement allows users to automatically clean up PVCs when volumes are no longer in use, simplifying workflows for StatefulSets that don't require persistent data. Importantly, the feature is designed with safeguards to ensure application state remains intact during routine maintenance tasks like rolling updates or node drains, making it both convenient and reliable for StatefulSet users.
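As a rough illustration, the opt-in lives in the StatefulSet's `persistentVolumeClaimRetentionPolicy` block. The sketch below builds a manifest as a Python dict and prints it as JSON, which `kubectl apply -f -` accepts. The StatefulSet name, labels, image, and storage size are placeholders chosen for this example.

```python
import json

# Hypothetical StatefulSet named "web"; only the retention policy block is the
# point of this sketch, the rest is a minimal surrounding spec.
statefulset = {
    "apiVersion": "apps/v1",
    "kind": "StatefulSet",
    "metadata": {"name": "web"},
    "spec": {
        "serviceName": "web",
        "replicas": 3,
        "selector": {"matchLabels": {"app": "web"}},
        # Both fields default to "Retain", which keeps the older behaviour.
        "persistentVolumeClaimRetentionPolicy": {
            "whenDeleted": "Delete",  # remove PVCs when the StatefulSet is deleted
            "whenScaled": "Retain",   # keep PVCs of pods removed by a scale-down
        },
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {
                "containers": [{
                    "name": "app",
                    "image": "registry.example.com/app:1.0",  # placeholder image
                    "volumeMounts": [{"name": "data", "mountPath": "/data"}],
                }]
            },
        },
        "volumeClaimTemplates": [{
            "metadata": {"name": "data"},
            "spec": {
                "accessModes": ["ReadWriteOnce"],
                "resources": {"requests": {"storage": "1Gi"}},
            },
        }],
    },
}

manifest = json.dumps(statefulset, indent=2)
print(manifest)
```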

#4026 Add job creation timestamp to job annotations

Stage: Graduating to Stable

Feature group: sig-apps

The Kubernetes CronJob experience is heavily improved through the introduction of an annotation that stores the original scheduled timestamp for jobs. By setting the timestamp as metadata in the job, users and tools can easily access and track when a job was originally expected to run. This improves transparency and debugging capabilities for CronJob workloads, especially in scenarios involving delays or rescheduling. Importantly, this addition is non-disruptive to existing workloads, making it a seamless improvement that benefits both developers and operators across the Kubernetes community.
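One thing this annotation makes easy is measuring how late a job started. The sketch below assumes the annotation key is `batch.kubernetes.io/cronjob-scheduled-timestamp` and that its value is an RFC 3339 timestamp; the job object is a hand-written stand-in for what the API would return.

```python
from datetime import datetime

SCHEDULED_ANNOTATION = "batch.kubernetes.io/cronjob-scheduled-timestamp"

def parse_rfc3339(value: str) -> datetime:
    # datetime.fromisoformat() only accepts a trailing "Z" from Python 3.11,
    # so normalise it to an explicit UTC offset first.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))

def start_delay_seconds(job: dict) -> float:
    """Seconds between the scheduled time and the moment the Job was created."""
    meta = job["metadata"]
    scheduled = parse_rfc3339(meta["annotations"][SCHEDULED_ANNOTATION])
    created = parse_rfc3339(meta["creationTimestamp"])
    return (created - scheduled).total_seconds()

# Hand-written sample Job metadata (illustrative values only).
job = {
    "metadata": {
        "name": "report-28901234",
        "creationTimestamp": "2024-12-11T03:00:42Z",
        "annotations": {SCHEDULED_ANNOTATION: "2024-12-11T03:00:00Z"},
    }
}

print(start_delay_seconds(job))
```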

#4368 Job API managed-by mechanism

Stage: Graduating to Beta

Feature group: sig-apps

A new managedBy field in the Job spec is a really nice improvement for Kubernetes, specifically for multi-cluster job orchestration efforts like MultiKueue. This feature allows users to delegate job synchronization to an external controller, such as the Kueue controller, enabling seamless management of jobs across multiple clusters. By clearly designating the responsible controller, the enhancement simplifies workflows, avoids conflicting updates, and ensures more reliable status synchronisation.

We're confident that this will benefit the Kubernetes community through robust multi-cluster job management capabilities while advancing the scalability and flexibility of batch workloads in distributed environments.
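The delegation rule can be pictured as a simple check on `spec.managedBy`. This is a simplified sketch of the idea rather than the job controller's actual code; it assumes that an unset field or the reserved value `kubernetes.io/job-controller` means the built-in controller stays in charge, and uses `kueue.x-k8s.io/multikueue` as the example external manager.

```python
JOB_CONTROLLER = "kubernetes.io/job-controller"  # reserved value for the built-in controller
MULTIKUEUE = "kueue.x-k8s.io/multikueue"         # example external controller name

def built_in_controller_should_reconcile(job: dict) -> bool:
    """True when the built-in Job controller is responsible for this Job."""
    managed_by = job.get("spec", {}).get("managedBy")
    return managed_by is None or managed_by == JOB_CONTROLLER

local_job = {"spec": {}}
delegated_job = {"spec": {"managedBy": MULTIKUEUE}}

print(built_in_controller_should_reconcile(local_job))
print(built_in_controller_should_reconcile(delegated_job))
```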

CLI in Kubernetes 1.32

#3104 Separate kubectl user preferences from cluster configs

Stage: Net New to Alpha

Feature group: sig-cli

The inclusion of a kuberc file (~/.kube/kuberc) in the Kubernetes CLI improves usability by cleanly separating cluster credentials and server configurations from user-specific preferences. This enhancement allows users to manage their personal settings—such as command aliases, default flags, and new behavior preferences—independently of cluster-specific kubeconfig files. It simplifies workflows, especially for users managing multiple kubeconfig files, by applying a single set of preferences regardless of the active configuration.

This approach future-proofs the CLI by enabling easier implementation of new user-centric features while maintaining backward compatibility and reducing complexity in the kubeconfig structure.

Kubernetes 1.32 Instrumentation

#4827 StatusZ Page for all Core Kubernetes Components

Stage: Net New to Alpha

Feature group: sig-instrumentation

The introduction of z-pages in Kubernetes, through the statusz enhancement, offers significant benefits for real-time observability and debugging of distributed systems. By providing an integrated interface directly within servers, z-pages consolidate critical information like build version, Go version, and compatibility details in a precise, accessible format. This eliminates the need for external tools, logs, or complex setups, reducing troubleshooting time and minimising potential latency issues.

Furthermore, statusz ensures consistency in presenting essential component data, setting a standard for streamlined monitoring and debugging across the Kubernetes ecosystem. This enhancement empowers developers and operators with immediate insights, improving efficiency and reliability in managing complex systems.

#4828 Flagz Page for all Core Kubernetes Components

Stage: Net New to Alpha

Feature group: sig-instrumentation

Similar to the StatusZ page, the flagz endpoint enhancement also aims to improve the introspection and debugging capabilities of all the core Kubernetes components by providing real-time visibility into their active command-line flags. This feature aids users in diagnosing configuration issues and verifying flag changes, ensuring system stability and reducing the time spent troubleshooting unexpected behaviors.

Unlike metrics, logs, or traces, which provide performance and operational insights, flagz focuses solely on the runtime configuration of components, offering a unique and complementary perspective. Importantly, this enhancement is not intended to replace existing monitoring tools or provide visibility into components inaccessible due to network restrictions, ensuring it remains focused on dynamic flag inspection for core Kubernetes components.

Network in Kubernetes 1.32

#2681 Field status.hostIPs added for Pod

Stage: Graduating to Stable

Feature group: sig-network

This enhancement improves Kubernetes networking by making it easier for pods to retrieve the node's address, particularly in dual-stack environments where both IPv4 and IPv6 addresses are used. By introducing the status.hostIPs field in Pod status and enabling Downward API support for it, applications can dynamically access both IPv4 and IPv6 addresses of the hosting node without external workarounds.

This is especially beneficial for applications transitioning from IPv4 to IPv6, as it simplifies compatibility and ensures seamless communication during the dual-stack migration phase. It gives developers more robust and flexible networking capabilities, which are crucial in highly scalable, cloud-native deployments.
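Here is a small sketch of how a workload might consume the field through the Downward API. The pod name, image, and the `HOST_IPS` variable name are placeholders, and the helper assumes the injected value arrives as a comma-separated list of addresses.

```python
import ipaddress
import json

# Hypothetical Pod that exposes the node's addresses as an environment variable.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "hostips-demo"},
    "spec": {
        "containers": [{
            "name": "app",
            "image": "registry.example.com/app:1.0",  # placeholder image
            "env": [{
                "name": "HOST_IPS",
                "valueFrom": {"fieldRef": {"fieldPath": "status.hostIPs"}},
            }],
        }]
    },
}

def split_host_ips(value: str) -> dict:
    """Group a comma-separated HOST_IPS value by IP family."""
    families = {"ipv4": [], "ipv6": []}
    for raw in value.split(","):
        addr = ipaddress.ip_address(raw.strip())
        families["ipv4" if addr.version == 4 else "ipv6"].append(str(addr))
    return families

print(json.dumps(pod, indent=2))
print(split_host_ips("10.0.0.5,fd00::5"))
```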

#1860 Make Kubernetes aware of the LoadBalancer behaviour

Stage: Graduating to Stable

Feature group: sig-network

Services are getting a configurable ipMode field in their loadBalancer status, allowing cloud providers to align kube-proxy behavior with their load balancer implementations. Currently, kube-proxy binds external IPs to nodes by default, which can cause issues such as failing health checks or bypassing load balancer features like TLS termination and PROXY protocol. The new ipMode setting enables providers to opt for Proxy mode, bypassing direct IP binding and resolving these challenges, while retaining the existing VIP mode as the default.

The goal is to let cloud providers configure how kube-proxy handles LoadBalancer external IPs through the Cloud Controller Manager, and to provide a clean, configurable solution that replaces existing workarounds. The intention was never to change or deprecate kube-proxy's existing default behaviour.
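The decision described above boils down to a check on each ingress entry in the Service status. The following is a simplified sketch, not kube-proxy's implementation; the IP addresses are documentation-range examples.

```python
def kube_proxy_binds_ip(ingress: dict) -> bool:
    """True when kube-proxy should handle this load balancer IP on the node itself.

    An unset ipMode is treated as "VIP", the existing default behaviour.
    """
    return ingress.get("ipMode", "VIP") == "VIP"

# Hand-written Service status as a cloud provider might populate it.
service_status = {
    "loadBalancer": {
        "ingress": [
            {"ip": "203.0.113.10"},                     # legacy entry, no ipMode
            {"ip": "203.0.113.20", "ipMode": "Proxy"},  # traffic must go through the LB
        ]
    }
}

for entry in service_status["loadBalancer"]["ingress"]:
    print(entry["ip"], kube_proxy_binds_ip(entry))
```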

#4427 Relaxed DNS search string validation

Stage: Net New to Alpha

Feature group: sig-network

This proposal aims to relax DNS search string validation in Kubernetes' dnsConfig.searches field to support workloads with legacy naming conventions or specialised use cases. Currently, Kubernetes enforces strict validation based on RFC-1123, disallowing underscores ( _ ) and single-dot ( . ) search strings. This restriction creates issues for workloads needing to resolve short DNS names, such as SRV records (_sip._tcp.abc_d.example.com) or avoiding unnecessary internal DNS lookups. By introducing a RelaxedDNSSearchValidation feature gate, the proposal allows underscores and single-dot search strings, enabling better compatibility with legacy systems and more flexible DNS configurations.

The intention was to support workloads resolving DNS names containing underscores, and to enable the use of a single-dot search string to prioritize external DNS lookups, whilst ensuring smooth upgrades and downgrades when the feature is toggled. Modifying other aspects of DNS name validation beyond the searches field was never a goal, nor was allowing underscores within names managed by Kubernetes itself.
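The difference between the two validation modes can be approximated with a pair of regular expressions. This is only a sketch of the rule described above, written for illustration; it is not the validation code Kubernetes ships, and the `relaxed` parameter simply stands in for the feature gate.

```python
import re

# Strict labels follow the RFC 1123 style: lowercase alphanumerics and hyphens.
_STRICT_LABEL = r"[a-z0-9]([-a-z0-9]*[a-z0-9])?"
# Relaxed labels additionally allow underscores anywhere in the label.
_RELAXED_LABEL = r"[a-z0-9_]([-_a-z0-9]*[a-z0-9_])?"

STRICT = re.compile(rf"{_STRICT_LABEL}(\.{_STRICT_LABEL})*")
RELAXED = re.compile(rf"{_RELAXED_LABEL}(\.{_RELAXED_LABEL})*")

def is_valid_search(domain: str, relaxed: bool = False) -> bool:
    """Approximate check for one entry of dnsConfig.searches."""
    if relaxed and domain == ".":
        return True  # a single dot is accepted only in relaxed mode
    pattern = RELAXED if relaxed else STRICT
    return len(domain) <= 253 and pattern.fullmatch(domain) is not None

for candidate in ["svc.cluster.local", "_sip._tcp.abc_d.example.com", "."]:
    print(candidate, is_valid_search(candidate), is_valid_search(candidate, relaxed=True))
```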

Kubernetes 1.32 Nodes

#1967 Support to size memory backed volumes

Stage: Graduating to Stable

Feature group: sig-node

This stable update enhances the portability and usability of memory-backed emptyDir volumes in Kubernetes by aligning their size with the pod's allocatable memory or an optional user-defined limit. Currently, such volumes default to 50% of a node's total memory, making pod definitions less portable across varying node configurations. By introducing a SizeMemoryBackedVolumes feature gate, this proposal ensures that memory-backed volumes are sized consistently with pod-level memory limits, improving predictability for workloads like AI/ML that rely on memory-backed storage.

Additionally, it allows users to explicitly define smaller volume sizes for better resource control, while maintaining compatibility with existing memory accounting mechanisms. This change increases reliability, portability, and flexibility for workloads leveraging memory-backed storage.
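For illustration, this is roughly what a pod with an explicitly sized memory-backed volume looks like. The pod name, image, mount path, and sizes are placeholders; the relevant part is the `emptyDir` entry with `medium: Memory` and a `sizeLimit` smaller than the container's memory limit.

```python
import json

# Hypothetical Pod with a 256Mi tmpfs scratch volume inside a 1Gi memory limit.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "scratch-demo"},
    "spec": {
        "containers": [{
            "name": "worker",
            "image": "registry.example.com/worker:1.0",  # placeholder image
            "resources": {"limits": {"memory": "1Gi"}},
            "volumeMounts": [{"name": "scratch", "mountPath": "/scratch"}],
        }],
        "volumes": [{
            "name": "scratch",
            # Without sizeLimit, the volume would be sized from the pod's memory
            # rather than from half of the node's total memory.
            "emptyDir": {"medium": "Memory", "sizeLimit": "256Mi"},
        }],
    },
}

print(json.dumps(pod, indent=2))
```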

#3983 Add support for a drop-in kubelet configuration directory

Stage: Graduating to Beta

Feature group: sig-node

This proposal improves kubelet configuration management by introducing a drop-in configuration directory, specified via a new --config-dir flag. During the beta phase, the default value for this flag will be set to /etc/kubernetes/kubelet.conf.d, allowing users to define multiple configuration files that are processed in alphanumeric order. This approach aligns with standard Linux practices, improving flexibility and avoiding conflicts when multiple entities manage kubelet settings.

Initially tested via the KUBELET_CONFIG_DROPIN_DIR_ALPHA environment variable, this feature replaces it for a more streamlined process. By enabling users to override the primary configuration file (/etc/kubernetes/kubelet.conf), this enhancement simplifies handling dynamic updates and avoids issues such as race conditions. Additionally, it provides tools to view the effective kubelet configuration, enhancing transparency and maintainability while supporting best practices for configuration management in line with the OWASP Top 10 for Kubernetes.
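The override order is easiest to see with a toy model. The sketch below merges in-memory fragments in sorted file-name order, with later files winning; the file names and settings are invented, and the kubelet's real merge rules (for lists in particular) may differ from this simplified recursive merge.

```python
def merge(base: dict, override: dict) -> dict:
    """Recursively overlay `override` on top of `base` without mutating either."""
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge(merged[key], value)
        else:
            merged[key] = value
    return merged

def effective_config(main: dict, drop_ins: dict) -> dict:
    """Apply drop-in fragments to the main config in sorted file-name order."""
    config = main
    for name in sorted(drop_ins):
        config = merge(config, drop_ins[name])
    return config

# Invented example: two teams each ship their own drop-in file.
main = {"maxPods": 110, "evictionHard": {"memory.available": "100Mi"}}
drop_ins = {
    "20-team-b.conf": {"maxPods": 150},
    "10-team-a.conf": {"maxPods": 200, "evictionHard": {"nodefs.available": "10%"}},
}

print(effective_config(main, drop_ins))
```

Because `20-team-b.conf` sorts after `10-team-a.conf`, its `maxPods` value wins, while the eviction threshold added by the first file is preserved.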

#4680 Add Resource Health Status to the Pod Status for Device Plugin and DRA

Stage: Graduating to Alpha

Feature group: sig-node

Now you can expose device health information in the Pod Status, specifically within the PodStatus.AllocatedResourcesStatus field. This allows for better Pod troubleshooting when devices have failed or are temporarily unhealthy. Currently, when a device fails, workloads often crash repeatedly without clear visibility into the root cause. By integrating device health details from Device Plugins or Dynamic Resource Allocation (DRA) into the AllocatedResourcesStatus, users can easily identify issues related to unhealthy devices. This feature improves failure handling by providing visibility into the status of allocated resources, such as GPUs, and helps in reallocating workloads to healthy devices. Even for Pods marked as failed, device status will remain accessible as long as the kubelet tracks the Pod, ensuring comprehensive debugging information to address device-related Pod placement issues.
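A troubleshooting script could walk this status to list problem devices. The sketch below assumes the alpha API shape in which each entry under `status.containerStatuses` carries an `allocatedResourcesStatus` list of resources with a `resourceID` and a `health` value; treat that layout as an assumption that may differ between versions. The pod data is hand-written.

```python
def unhealthy_devices(pod: dict) -> list:
    """Return (container, resource name, device ID) for devices not reported Healthy."""
    findings = []
    for container in pod.get("status", {}).get("containerStatuses", []):
        for resource in container.get("allocatedResourcesStatus", []):
            for device in resource.get("resources", []):
                # A missing health value is treated as Unknown, hence reported.
                if device.get("health", "Unknown") != "Healthy":
                    findings.append(
                        (container["name"], resource["name"], device["resourceID"])
                    )
    return findings

# Hand-written status for a pod holding two GPUs, one of which has failed.
pod = {
    "status": {
        "containerStatuses": [{
            "name": "trainer",
            "allocatedResourcesStatus": [{
                "name": "example.com/gpu",  # placeholder resource name
                "resources": [
                    {"resourceID": "gpu-0", "health": "Healthy"},
                    {"resourceID": "gpu-1", "health": "Unhealthy"},
                ],
            }],
        }]
    }
}

print(unhealthy_devices(pod))
```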

Scheduling in Kubernetes 1.32

#4247 Per-plugin callback functions for accurate requeueing in kube-scheduler

Stage: Graduating to Beta

Feature group: sig-scheduling

SIG Scheduling is introducing a new feature, QueueingHint, to improve Kubernetes' scheduling performance by fine-tuning the requeueing of Pods. By providing a mechanism for plugins to suggest when Pods should be retried, the scheduler can avoid unnecessary retries, which helps reduce scheduling overhead and improve throughput. Specifically, it allows plugins like NodeAffinity to requeue Pods only when the likelihood of successful scheduling is high, such as when a node update makes it eligible.

Additionally, the proposal enables plugins like DynamicResourceAllocation (DRA) to skip backoff periods, streamlining the scheduling of Pods that rely on dynamic resources, thus reducing the delay caused by waiting for updates from device drivers. This optimisation improves the overall scheduling efficiency, especially for workloads that require multiple cycles for completion.
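To show the shape of such a callback, here is a conceptual sketch in Python. The real callbacks are Go functions inside scheduler plugins; the data model below (a dict of required labels and nodes as dicts) is invented purely to illustrate the "queue only when the event can help" decision.

```python
from enum import Enum

class QueueingHint(Enum):
    QUEUE_SKIP = "QueueSkip"  # the event cannot help this pod; do not retry
    QUEUE = "Queue"           # the event may make the pod schedulable; retry

def matches(required: dict, node) -> bool:
    """True when the node exists and carries every required label."""
    return node is not None and all(
        node["labels"].get(key) == value for key, value in required.items()
    )

def node_affinity_hint(required: dict, old_node, new_node) -> QueueingHint:
    """Decide whether a node add/update event is worth a retry for this pod."""
    if matches(required, new_node) and not matches(required, old_node):
        return QueueingHint.QUEUE
    return QueueingHint.QUEUE_SKIP

required = {"zone": "a"}
relabelled = node_affinity_hint(
    required, {"labels": {"zone": "b"}}, {"labels": {"zone": "a"}}
)
unrelated = node_affinity_hint(required, None, {"labels": {"zone": "b"}})
print(relabelled, unrelated)
```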

#3902 Decouple TaintManager from NodeLifecycleController

Stage: Graduating to Stable

Feature group: sig-scheduling

This enhancement proposed separating the NodeLifecycleController and TaintManager in Kubernetes, creating two independent controllers: NodeLifecycleController for adding taints to unhealthy nodes, and TaintEvictionController for handling pod eviction based on those taints.

This separation improves code organization and maintainability by decoupling the responsibilities of tainting nodes and evicting pods. It also facilitates easier future enhancements to either component and allows for custom implementations of taint-based eviction, ultimately streamlining the management of node health and pod lifecycle.

#4832 Asynchronous preemption in the scheduler

Stage: Net New to Alpha

Feature group: sig-scheduling

This improvement decouples preemption API calls from the main scheduling cycle, specifically after the PostFilter extension point, to improve scheduling throughput in failure scenarios. Currently, preemption tasks are performed synchronously during the PostFilter phase, which can block the scheduling cycle due to necessary API calls.

By making these API calls asynchronous, the scheduler can continue processing other Pods without being delayed by the preemption process. This change enhances scheduling efficiency by allowing the scheduler to handle preemptions in parallel with other tasks, ultimately speeding up the overall scheduling throughput.
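The effect can be mimicked with a toy loop that hands slow calls to a worker pool. This is a conceptual sketch only: the pod names, the `fits` predicate, and the `slow_evict` function (which sleeps to stand in for API round-trips) are all invented, and the real scheduler is considerably more involved.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def run_scheduling_cycles(pods, fits, evict_victims):
    """Process pods in order; slow eviction API calls run in the background."""
    log = []
    with ThreadPoolExecutor(max_workers=2) as pool:
        in_flight = []
        for pod in pods:
            if fits(pod):
                log.append(f"bound {pod}")
            else:
                # Hand the API calls to a worker and move on to the next pod.
                in_flight.append(pool.submit(evict_victims, pod))
                log.append(f"preemption started for {pod}")
        # Collect results only after every pod has had its scheduling cycle.
        log.extend(future.result() for future in in_flight)
    return log

def slow_evict(pod):
    time.sleep(0.05)  # stands in for the API calls that delete victim pods
    return f"victims evicted for {pod}"

log = run_scheduling_cycles(
    ["critical", "web-1", "web-2"],
    fits=lambda pod: pod != "critical",
    evict_victims=slow_evict,
)
print(log)
```

In the resulting log, both `web` pods are bound before the eviction for `critical` is reported, which is the point: the loop never waits on the slow calls.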

Kubernetes 1.32 Storage

#1790 Support recovery from volume expansion failure

Stage: Graduating to Beta

Feature group: sig-storage

The sig-storage team has been working to let users retry expanding a PVC after a failed expansion by relaxing API validation so that the requested size can be reduced. This helps users correct expansion attempts that the underlying storage provider cannot satisfy, such as when attempting to expand beyond the provider's capacity. A new field, pvc.Status.AllocatedResources, is introduced to accurately track resource quotas if the PVC size is reduced. Additionally, the system ensures that the requested size can only be reduced to a value greater than pvc.Status.Capacity, preventing any unwanted shrinking.

To protect the quota system, quota calculations use the maximum of pvc.Spec.Resources and pvc.Status.AllocatedResources, and any reduction in allocated resources is only allowed after a terminal expansion failure. This proposal simplifies recovery from expansion failures while safeguarding the quota system.
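The two rules above can be written down as a pair of small functions. This is a simplified sketch of the described behaviour, with sizes expressed as plain GiB integers and invented function names; it is not the API server's validation code.

```python
from typing import Optional

def resize_allowed(new_request: int, current_request: int, status_capacity: int) -> bool:
    """Check a change to the size requested in the PVC spec (all values in GiB)."""
    if new_request >= current_request:
        return True  # growing, or leaving the request unchanged, is always fine here
    # Shrinking the request is only allowed down to a value above the actual
    # capacity, so the volume itself is never asked to shrink.
    return new_request > status_capacity

def quota_usage(spec_request: int, allocated: Optional[int]) -> int:
    """Charge quota for the larger of the requested and the allocated size."""
    return max(spec_request, allocated or 0)

# A 10 GiB volume whose expansion to 100 GiB failed; the user retries with 20 GiB.
print(resize_allowed(20, 100, 10))
print(resize_allowed(10, 100, 10))
print(quota_usage(20, 100))
```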

#3476 VolumeGroupSnapshot

Stage: Graduating to Beta

Feature group: sig-storage

This improvement introduces a Kubernetes API for taking crash-consistent snapshots of multiple volumes simultaneously using a label selector to group PVCs. It defines new CRDs—VolumeGroupSnapshot, VolumeGroupSnapshotContent, and VolumeGroupSnapshotClass—which enable users to create snapshots of multiple volumes at the same point in time, ensuring write order consistency across all volumes in the group.

This functionality helps avoid the need for application quiescing and the time-consuming process of taking individual snapshots sequentially. By providing the ability to capture consistent group snapshots without affecting application performance, it is particularly beneficial for use cases such as nightly backups or disaster recovery, and complements other proposals for application snapshotting and backup.

The API is designed to work with CSI Volume Drivers, leveraging the CREATE_DELETE_GET_VOLUME_GROUP_SNAPSHOT capability.
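As an illustration of the label-selector grouping, the sketch below builds a VolumeGroupSnapshot object and shows which PVCs a selector would pick up. It assumes the beta API group `groupsnapshot.storage.k8s.io/v1beta1`; the object names, namespace, snapshot class, and labels are placeholders.

```python
import json

# Hypothetical group snapshot covering every PVC labelled as part of one database.
group_snapshot = {
    "apiVersion": "groupsnapshot.storage.k8s.io/v1beta1",
    "kind": "VolumeGroupSnapshot",
    "metadata": {"name": "nightly-db-backup", "namespace": "demo"},
    "spec": {
        "volumeGroupSnapshotClassName": "csi-group-snapclass",  # placeholder class
        "source": {"selector": {"matchLabels": {"app": "postgresql"}}},
    },
}

def selected_pvcs(pvcs: list, match_labels: dict) -> list:
    """Names of the PVCs whose labels satisfy every matchLabels entry."""
    return [
        pvc["metadata"]["name"]
        for pvc in pvcs
        if all(
            pvc["metadata"].get("labels", {}).get(key) == value
            for key, value in match_labels.items()
        )
    ]

# Hand-written PVC list standing in for what the namespace contains.
pvcs = [
    {"metadata": {"name": "pg-data", "labels": {"app": "postgresql"}}},
    {"metadata": {"name": "pg-wal", "labels": {"app": "postgresql"}}},
    {"metadata": {"name": "cache", "labels": {"app": "redis"}}},
]

selector = group_snapshot["spec"]["source"]["selector"]["matchLabels"]
print(json.dumps(group_snapshot, indent=2))
print(selected_pvcs(pvcs, selector))
```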

#1710 Speed up recursive SELinux label change

Stage: Graduating to Beta

Feature group: sig-storage

The aim here was to speed up the process of making volumes available to Pods on systems with SELinux in enforcing mode by eliminating the need for recursive relabeling of files. Currently, the container runtime must recursively relabel all files on a volume before a container can start, which is slow for volumes with many files. The proposed solution uses the -o context=XYZ mount option to set the SELinux context for all files on a volume without the need for a recursive walk. This change will be rolled out in phases, starting with ReadWriteOncePod volumes and eventually extending to all volumes by default, with options for users to opt out in certain cases. This approach significantly improves performance while maintaining flexibility for different use cases.

Other enhancements in Kubernetes 1.32

#2831 Kubelet OpenTelemetry Tracing

Stage: Graduating to Stable

Feature group: sig-node

Status: Deferred

This KEP, deferred to v1.32, aims to enhance the kubelet by adding support for tracing gRPC and HTTP API requests, using OpenTelemetry libraries to export trace data in the OpenTelemetry format. By leveraging the kubelet's optional TracingConfiguration specified within kubernetes/component-base, cluster operators, administrators, and cloud vendors can configure the kubelet to collect trace data from interactions between the kubelet, container runtimes, and the API server.

This feature will generate spans for various kubelet actions, such as pod creation and interactions with the CRI, CNI, and CSI interfaces, providing valuable insights for troubleshooting and monitoring. The addition of distributed tracing will enable easier diagnosis of latency and node issues, enhancing overall Kubernetes observability and management.

#4017 Add Pod Index Label for StatefulSets and Indexed Jobs

Stage: Net New to Alpha

Feature group: sig-apps

Status: Tracked for code freeze

Reading through this KEP, we can see that the improvement proposal would simplify the process of identifying the index of StatefulSet pods by adding it as both an annotation and a label. Currently, the index is extracted by parsing the pod name, which is not ideal. By setting the index as an annotation, it can easily be accessed as an environment variable via the Downward API.

Additionally, adding the index as a label enables easier filtering and selection of pods based on their index. The proposal also suggests using labels to target traffic to specific instances of StatefulSet pods, improving flexibility for use cases like filtering or directing traffic to the first pod in a set.
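The contrast between the two approaches is small but telling. This sketch assumes the StatefulSet label key is `apps.kubernetes.io/pod-index`; the pod object is hand-written for the example.

```python
POD_INDEX_LABEL = "apps.kubernetes.io/pod-index"

def index_from_name(pod_name: str) -> int:
    """Older approach: parse the ordinal out of a name such as 'web-2'."""
    return int(pod_name.rsplit("-", 1)[1])

def index_from_label(pod: dict) -> int:
    """Newer approach: read the index straight from the pod's labels."""
    return int(pod["metadata"]["labels"][POD_INDEX_LABEL])

# Hand-written pod metadata for the third replica of a StatefulSet named "web".
pod = {"metadata": {"name": "web-2", "labels": {"app": "web", POD_INDEX_LABEL: "2"}}}

# A label selector that a Service or kubectl query could use for the first pod.
first_pod_selector = f"{POD_INDEX_LABEL}=0"

print(index_from_name(pod["metadata"]["name"]), index_from_label(pod))
print(first_pod_selector)
```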

#2837 Pod level resources

Stage: Net New to Alpha

Feature group: sig-node

Status: Tracked for code freeze

This enhancement proposes extending the Pod API to allow specifying resource requests and limits at the pod level, in addition to the existing container-level settings. Currently, resource allocation is container-centric, which can be cumbersome for managing multi-container pods with varying resource demands.

By enabling pod-level resource constraints, this proposal simplifies resource management, making it easier to control the overall resource consumption of a pod without having to configure each container individually. This approach will improve resource utilisation, particularly for tightly coupled applications or bursty workloads, while remaining compatible with existing container-level configurations and Kubernetes features.
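A pod using this alpha feature might look something like the sketch below, where a single `resources` block under `spec` sets the overall budget and the containers carry no limits of their own. The pod name, images, and quantities are placeholders.

```python
import json

# Hypothetical two-container Pod sharing one pod-level resource budget.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "pod-level-demo"},
    "spec": {
        # Pod-level requests and limits, shared by all containers below.
        "resources": {
            "requests": {"cpu": "500m", "memory": "256Mi"},
            "limits": {"cpu": "1", "memory": "512Mi"},
        },
        "containers": [
            {"name": "app", "image": "registry.example.com/app:1.0"},
            {"name": "sidecar", "image": "registry.example.com/sidecar:1.0"},
        ],
    },
}

print(json.dumps(pod, indent=2))
```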

If you liked this, you might want to check out our previous 'What's new in Kubernetes' editions:

- Kubernetes 1.31 – What's new?

- Kubernetes 1.30 – What's new?

- Kubernetes 1.27 – What's new?

- Kubernetes 1.26 – What's new?

- Kubernetes 1.25 – What's new?

- Kubernetes 1.24 – What's new?

- Kubernetes 1.23 – What's new?

- Kubernetes 1.22 – What's new?

- Kubernetes 1.21 – What's new?

- Kubernetes 1.20 – What's new?

- Kubernetes 1.19 – What's new?

- Kubernetes 1.18 – What's new?

- Kubernetes 1.17 – What's new?

- Kubernetes 1.16 – What's new?

- Kubernetes 1.15 – What's new?

- Kubernetes 1.14 – What's new?

- Kubernetes 1.13 – What's new?

- Kubernetes 1.12 – What's new?

Get involved with the Kubernetes project:

- Visit the project homepage.

- Check out the Kubernetes project on GitHub.

- Get involved with the Kubernetes community.

- Meet the maintainers on the Kubernetes Slack.

- Follow @KubernetesIO on Twitter.

And if you enjoy keeping up to date with the Kubernetes ecosystem, subscribe to our container newsletter, a monthly email with the coolest stuff happening in the cloud-native ecosystem.