
Kubernetes 1.25 is about to be released, and it comes packed with novelties! Where do we begin?

This release brings 40 enhancements, on par with the 46 in Kubernetes 1.24 and 45 in Kubernetes 1.23. Of those 40 enhancements, 13 are graduating to Stable, 10 are existing features that keep improving, 15 are completely new, and two are deprecated features.

The highlight of this release is the final removal of PodSecurityPolicies, which are replaced by PodSecurity admission, finally graduating to Stable. Watch out for all the deprecations and removals in this version!

There are several new security features that are really welcome additions, like support for user namespaces, checkpoints for forensic analysis, improvements in SELinux when mounting volumes, NodeExpansion secrets, and an auto-refreshing official CVE feed. Other features will make life easier for cluster administrators, like non-retriable Pod failures, KMS v2 improvements, cleaner IPTables chain ownership, and better handling of StorageClass defaults on PVCs.

Finally, the icing on the cake of this release is all the great features that are reaching the GA state. Big shout out to the CSI migration, a process that has been ongoing for more than three years and is now in its final stages. Port ranges for NetworkPolicies, ephemeral volumes, and support for cgroups v2 are also reaching GA. We are really hyped about this release!

There is plenty to talk about, so let's get started with what's new in Kubernetes 1.25.

Kubernetes 1.25 – Editor's pick:

These are the features that look most exciting to us in this release (ymmv):

#127 Add support for user namespaces

This enhancement was first requested for Kubernetes almost six years ago. It was targeting alpha in Kubernetes 1.5. The necessary technology to implement this feature has been around for a while. Finally, it's here, at least in its alpha version.

User namespaces in Kubernetes can help to relax many of the constraints imposed on workloads, allowing the use of features otherwise considered highly insecure. Hopefully, this will also make the life of attackers much more difficult.

Miguel Hernández – Security Content Engineer at Sysdig

#2008 Forensic Container Checkpointing

Container checkpointing allows creating a snapshot of a specific container so analysis and investigation can be carried out on the copy, without alerting potential attackers. This will be a great tool for forensic analysis of containers, provided you manage to capture the container before it gets destroyed.

This is going to change forever not only Kubernetes forensics, but also every tool that offers security and incident response for Kubernetes.

Javier Martínez – DevOps Content Engineer at Sysdig

#3329 Retriable and non-retriable Pod failures for Jobs

This is a much-needed improvement to Kubernetes Jobs. Currently, if a Job fails and its restartPolicy is set to OnFailure, Kubernetes will try to run it again, up to a maximum backoff limit. However, this doesn't make sense when the failure is caused by an application error that won't fix itself.

By being able to set policies for different causes of failure, this enhancement will make Kubernetes more efficient, without wasting time executing something that is doomed to fail. It will also make Jobs more resilient to Pod evictions, which will drastically reduce the number of failed Jobs administrators have to investigate on resource-constrained clusters.

Daniel Simionato – Security Content Engineer at Sysdig

#625 CSI migration – core

Not a new feature at all, but the storage SIG deserves big kudos for this migration. They've been tirelessly moving in-tree storage plugins out of the Kubernetes core and into CSI drivers for more than three years, and we've been following their updates release after release. Now the migration is finally close to the end.

In the long term, this will mean an easier-to-maintain Kubernetes codebase and cooler storage features. Can't wait to see what comes next!

Víctor Jiménez Cerrada – Content Manager Engineer at Sysdig

#3299 KMS v2 improvements

With this enhancement, managing encryption keys will be easier and will require fewer manual steps. At the same time, operations with said keys will be faster. This is the kind of security improvement we like to see: better security, improved performance, and an easier life for admins.

Devid Dokash – DevOps Content Engineer at Sysdig

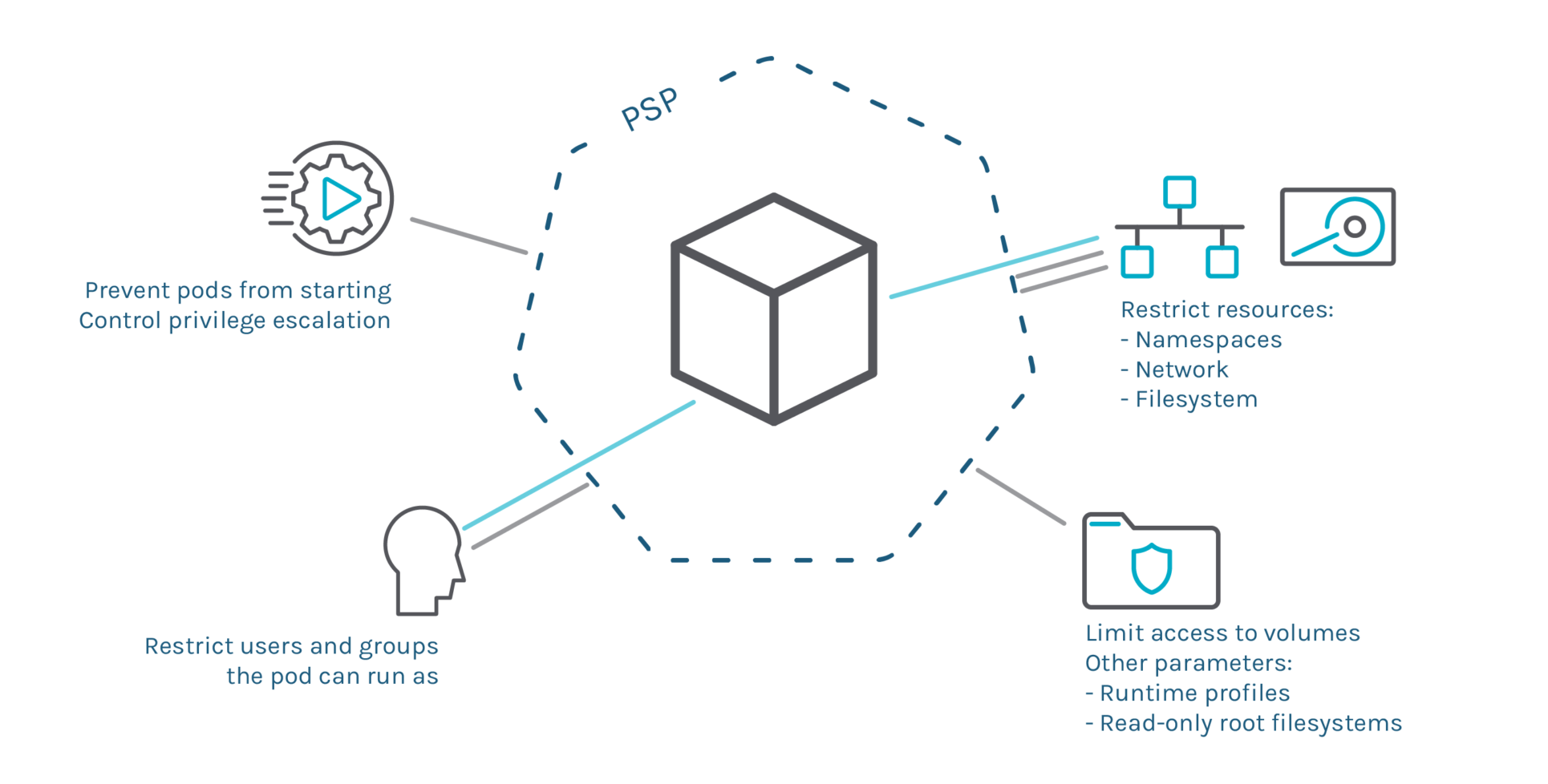

#5 PodSecurityPolicy + #2579 PodSecurity admission

I believe PodSecurityPolicy deserves a special and last mention. It's probably been one of the most misunderstood components in the Kubernetes ecosystem, and now it's finally gone.

We shouldn't forget that changes in the upstream project, Kubernetes in this case, also affect other Kubernetes distributions, like Red Hat OpenShift. For that reason, Red Hat has released an update explaining how they are moving forward with the new PodSecurity admission controller, which will probably give more than one engineer a couple of headaches.

Thank you for your service, PSP.

Vicente J. Jiménez Miras – Security Content Engineer at Sysdig

Deprecations

API and feature removals

A few beta APIs and features have been removed in Kubernetes 1.25, including:

- Deprecated API versions that are no longer served (use a newer one):

  - CronJob batch/v1beta1

  - EndpointSlice discovery.k8s.io/v1beta1

  - Event events.k8s.io/v1beta1

  - HorizontalPodAutoscaler autoscaling/v2beta1

  - PodDisruptionBudget policy/v1beta1

  - PodSecurityPolicy policy/v1beta1

  - RuntimeClass node.k8s.io/v1beta1

- Other API changes:

  - The Go API for logging configuration in k8s.io/component-base was moved to k8s.io/component-base/logs/api/v1.

- Other changes:

  - The beta PodSecurityPolicy admission plugin, deprecated since 1.21, is removed.

  - Support for several in-tree volume plugins (flocker, quobyte, and storageos) has been removed.

  - Removed unused flags from the kubectl run command.

  - End-to-end testing has been migrated from Ginkgo v1 to v2. Ginkgo.Measure has been deprecated; use gomega/gmeasure instead.

  - Some apiserver metrics were changed.

  - Deprecated the API server --service-account-api-audiences flag in favor of --api-audiences.

  - Partially removed some seccomp annotations.

  - Deprecated the command line flag enable-taint-manager.

  - Removed the recently re-introduced schedulability predicate.

  - Deprecated kube-scheduler ComponentConfig v1beta2.

  - The kubelet no longer supports collecting accelerator metrics through cAdvisor.

You can check the full list of changes in the Kubernetes 1.25 release notes. Also, we recommend the Kubernetes Removals and Deprecations In 1.25 article, as well as keeping the deprecated API migration guide close for the future.

#5 PodSecurityPolicy

Stage: Deprecation

Feature group: auth

Pod Security Policies (PSPs) were a great Kubernetes-native tool to restrict what deployments can do, like limiting execution to a list of users, or restricting access to resources like the network or volumes.

As we covered in our "Kubernetes 1.23 – What's new?" article, this feature was deprecated, and it has now been removed in favor of #2579 PodSecurity admission. Check this guide to migrate to the built-in PodSecurity admission plugin.

#3446 deprecate GlusterFS plugin from available in-tree drivers

Stage: Deprecation

Feature group: storage

Following up on "#625 CSI migration – core," several storage plugins that were included in the Kubernetes core (in-tree) are being migrated to CSI drivers maintained as separate projects (out-of-tree). As this migration progresses, the in-tree counterparts are being deprecated.

Beyond deprecating GlusterFS, this release also removes support for flocker, quobyte, and storageos.

Apps in Kubernetes 1.25

#3329 Retriable and non-retriable Pod failures for Jobs

Stage: Net New to Alpha

Feature group: apps

Feature gate: JobPodFailurePolicy Default value: false

Currently, the only option available to limit retries of a Job is to use .spec.backoffLimit. This field limits the maximum retries of a Job, after which the Job will be considered failed.

This, however, doesn't allow us to discern the causes of container failures. If there are known exit codes that signal an unrecoverable error, it would be desirable to just mark the Job as failed instead of wasting computational time retrying something that's doomed to fail. On the other hand, it is also undesirable for container failures caused by infrastructure events (such as Pod preemption, memory pressure eviction, or node drain) to count towards the backoffLimit.

This enhancement allows us to configure a .spec.podFailurePolicy in the Job's spec that determines whether the Job should be retried or not in case of failure. By behaving differently depending on the cause of the failure, Kubernetes can terminate a Job early on an unrecoverable application error, and avoid counting failures caused by infrastructure events towards the backoff limit.
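To make the idea concrete, here is a minimal Python model of how such a policy could be evaluated: rules are checked in order, and the first matching rule decides the action. The action names (FailJob, Ignore, Count), the In/NotIn exit code operators, and the DisruptionTarget condition follow the alpha podFailurePolicy API as we understand it; the classes and functions below are hypothetical illustrations, not Kubernetes code.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Rule:
    """One simplified podFailurePolicy rule (hypothetical model, not the real API type)."""
    action: str                               # "FailJob", "Ignore" or "Count"
    exit_code_operator: Optional[str] = None  # "In" or "NotIn"
    exit_codes: List[int] = field(default_factory=list)
    pod_condition: Optional[str] = None       # e.g. "DisruptionTarget"


@dataclass
class FailedPod:
    exit_codes: Dict[str, int]                # container name -> exit code
    conditions: List[str] = field(default_factory=list)


def rule_matches(rule: Rule, pod: FailedPod) -> bool:
    if rule.pod_condition is not None:
        return rule.pod_condition in pod.conditions
    # Assumption: containers that exited successfully (code 0) never match
    codes = [code for code in pod.exit_codes.values() if code != 0]
    if rule.exit_code_operator == "In":
        return any(code in rule.exit_codes for code in codes)
    if rule.exit_code_operator == "NotIn":
        return any(code not in rule.exit_codes for code in codes)
    return False


def evaluate(rules: List[Rule], pod: FailedPod) -> str:
    """Return the action of the first matching rule; unmatched failures count as usual."""
    for rule in rules:
        if rule_matches(rule, pod):
            return rule.action
    return "Count"  # default handling: the failure counts towards .spec.backoffLimit


rules = [
    Rule("FailJob", exit_code_operator="In", exit_codes=[42]),  # hypothetical unrecoverable error code
    Rule("Ignore", pod_condition="DisruptionTarget"),            # eviction, preemption, drain...
]
print(evaluate(rules, FailedPod({"main": 42})))                         # FailJob
print(evaluate(rules, FailedPod({"main": 137}, ["DisruptionTarget"])))  # Ignore
print(evaluate(rules, FailedPod({"main": 1})))                          # Count
```

The first failure ends the Job immediately, the second one (caused by a disruption) doesn't consume a retry, and anything else falls back to the classic backoffLimit behavior.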

#1591 DaemonSets should support MaxSurge to improve workload availability

Stage: Graduating to Stable

Feature group: apps

Feature gate: DaemonSetUpdateSurge Default value: true

When performing a rolling update, the .spec.updateStrategy.rollingUpdate.maxSurge field specifies how many nodes can start a new Pod before their old Pod is removed, so the DaemonSet's workload stays available during the update.
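As a worked example, this hypothetical helper resolves maxSurge into a number of nodes. It assumes, as documented for the DaemonSet rolling update strategy, that a percentage is computed over the desired number of scheduled Pods (one per eligible node) and rounded up.

```python
from typing import Union


def resolve_max_surge(max_surge: Union[int, str], desired_nodes: int) -> int:
    """Turn maxSurge (absolute number or percentage) into a number of nodes."""
    if isinstance(max_surge, str) and max_surge.endswith("%"):
        percent = int(max_surge[:-1])
        # Percentages are rounded up; integer arithmetic avoids float rounding issues
        return -(-desired_nodes * percent // 100)
    return int(max_surge)


print(resolve_max_surge("25%", 10))  # 3 nodes may run the old and the new Pod at the same time
print(resolve_max_surge(1, 10))      # 1
```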

Read more in our "What's new in Kubernetes 1.22" article.

#2599 Add minReadySeconds to Statefulsets

Stage: Graduating to Stable

Feature group: apps

Feature gate: StatefulSetMinReadySeconds Default value: true

This enhancement brings to StatefulSets the optional minReadySeconds field that is already available on Deployments, DaemonSets, ReplicaSets, and ReplicationControllers.

Read more in our "Kubernetes 1.22 – What's new?" article.

#3140 TimeZone support in CronJob

Stage: Graduating to Beta

Feature group: apps

Feature gate: CronJobTimeZone Default value: true

This feature honors the long-delayed request to support time zones in CronJob resources. Until now, the schedules of all CronJobs have been interpreted in the same time zone: the one of the kube-controller-manager process.

Read more in our "What's new in Kubernetes 1.24" article.

Kubernetes 1.25 Auth

#3299 KMS v2 improvements

Stage: Net New to Alpha

Feature group: auth

Feature gate: KMSv2 Default value: false

This new feature aims to improve the performance and maintenance of the Key Management Service (KMS) encryption provider.

One of the main issues this enhancement addresses is the low performance of having to manage a different DEK (Data Encryption Key) for each object. This is especially severe when a LIST operation over all the secrets is performed right after restarting the kube-apiserver process, which empties the cache. The solution is to generate a local KEK (Key Encryption Key), which reduces calls to the external KMS and delays hitting its rate limit.

Currently, tasks like rotating a key involve multiple restarts of each kube-apiserver instance, so every server can encrypt and decrypt using the new key. Besides, indiscriminately re-encrypting all the secrets in the cluster is a resource-consuming task that can leave the cluster out of service for some seconds, or even still dependent on the old key. This feature enables automatic rotation of the latest key.

This feature will also add capabilities to improve observability and health checks, which are currently performed by encrypting and decrypting a resource, an operation that is costly in cloud environments.

It's a very exciting change that will help you secure your cluster a bit less painfully.

Learn more about it from its KEP.

#2579 PodSecurity admission (PodSecurityPolicy replacement)

Stage: Graduating to Stable

Feature group: auth

Feature gate: PodSecurity Default value: true

A new PodSecurity admission controller is now enabled by default to replace the Pod Security Policies deprecated in Kubernetes 1.21.

Read more in our "Kubernetes 1.22 – What's new?" article, and check the new tutorials for further information:

- Apply Pod security standards at the Cluster level

- Apply Pod security standards at the Namespace level

Network in Kubernetes 1.25

#2593 multiple ClusterCIDRs

Stage: Graduating to Alpha

Feature group: network

Feature gate: ClusterCIDRConfig Default value: false

Scaling a Kubernetes cluster's network is a real challenge once the cluster has been created. If the network is oversized, we waste many IP addresses. If it is undersized, at some point we will have to decommission the cluster and create a new one.

This enhancement will enable cluster administrators to extend a Kubernetes cluster by adding network ranges (PodCIDR segments) in a more flexible way. The KEP defines three clear user stories:

- Add more Pod IPs to the cluster, in case you run out of segments and decide to scale up your cluster.

- Add nodes with higher or lower capabilities, like doubling the number of maximum Pods allocatable in future nodes.

- Provision discontiguous ranges. Useful when your network segments haven't been evenly distributed and you need to group a number of them to deploy a new Kubernetes node.

For that, the creation of a new resource, ClusterCIDRConfig, allows controlling the CIDR segments through a node allocator. Just remember that this configuration won't affect or reconfigure the already provisioned cluster. The goal is to extend the NodeIPAM functionality included in Kubernetes, not to change it.
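The arithmetic behind "running out of segments" is easy to check. This Python sketch, using only the standard ipaddress module, counts how many per-node PodCIDR blocks a set of cluster ranges can provide. The ranges and the /24 per-node size are hypothetical examples, not defaults taken from the KEP.

```python
import ipaddress
from typing import List


def nodes_supported(cluster_cidrs: List[str], per_node_prefix: int) -> int:
    """How many nodes can get a /per_node_prefix PodCIDR from the given ranges."""
    total = 0
    for cidr in cluster_cidrs:
        network = ipaddress.ip_network(cidr)
        if per_node_prefix < network.prefixlen:
            raise ValueError(f"{cidr} is smaller than a /{per_node_prefix} block")
        total += 2 ** (per_node_prefix - network.prefixlen)
    return total


# A single /16 split into /24 blocks (one per node) serves 256 nodes.
print(nodes_supported(["10.0.0.0/16"], 24))                   # 256
# Adding a second, discontiguous /20 range adds 16 more nodes.
print(nodes_supported(["10.0.0.0/16", "172.16.0.0/20"], 24))  # 272
```

Without this enhancement, the only way to go from 256 to 272 nodes in this example would be to rebuild the cluster with a larger range.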

#3178 Cleaning up IPTables chain ownership

Stage: Graduating to Alpha

Feature group: network

Feature gate: IPTablesOwnershipCleanup Default value: false

Cleaning up is always recommended, especially in your code. The kubelet has historically created chains in IPTables to support components like dockershim or kube-proxy. Removing dockershim was a big step for Kubernetes, and now it's time to clean up after it.

kube-proxy is a different story. We could say that the chains kube-proxy relies on were not owned by it, but by the kubelet. It's time to put things where they belong: stop the kubelet from creating unnecessary resources, and let kube-proxy create its chains by itself.

We won't see a big change in the functionality, but we'll manage a much cleaner cluster.

#2079 NetworkPolicy port range

Stage: Graduating to Stable

Feature group: network

Feature gate: NetworkPolicyEndPort Default value: true

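This enhancement allows you to define a range of ports in a NetworkPolicy, using the endPort field:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: egress-port-range   # hypothetical name
spec:
  podSelector: {}           # selects all Pods in the namespace
  policyTypes: ["Egress"]   # only egress traffic is restricted
  egress:
  - ports:
    - protocol: TCP
      port: 32000           # first port of the range
      endPort: 32768        # last port of the range (inclusive)
```

Read more in our "Kubernetes 1.21 – What's new?" article.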

#3070 Reserve Service IP ranges for dynamic and static IP allocation

Stage: Graduating to Beta

Feature group: network

Feature gate: ServiceIPStaticSubrange Default value: true

This update to the --service-cluster-ip-range flag will lower the risk of IP conflicts between Services using static and dynamic IP allocation, while keeping backward compatibility.
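As a rough illustration, KEP-3070 describes the size of the lower band that is preferred for static allocation as min(max(16, cidrSize / 16), 256) addresses, while dynamic allocation prefers the upper part of the range. The function below only evaluates that formula as we understand it from the KEP; it is not the allocator itself.

```python
import ipaddress


def static_band_size(service_cidr: str) -> int:
    """Addresses at the start of the Service range preferred for static allocation."""
    cidr_size = ipaddress.ip_network(service_cidr).num_addresses
    return min(max(16, cidr_size // 16), 256)


for cidr in ["10.96.0.0/24", "10.96.0.0/22", "10.96.0.0/12"]:
    print(cidr, static_band_size(cidr))
# 10.96.0.0/24 16
# 10.96.0.0/22 64
# 10.96.0.0/12 256
```

In practice, if you pick Service IPs by hand, choosing them from the lower part of the range reduces the chance of colliding with dynamically assigned ones.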

Read more in our "What's new in Kubernetes 1.24" article.

Kubernetes 1.25 Nodes

#127 Add support for user namespaces

Stage: Graduating to Alpha

Feature group: node

Feature gate: UserNamespacesStatelessPodsSupport Default value: false

A long-awaited feature. There are plenty of vulnerabilities where, due to excessive privileges given to a Pod, the host has been compromised.

User namespaces have been supported by the Linux kernel for some time, and it is possible to test them through different container runtimes. Bringing them to the Kubernetes ecosystem will definitely open a new range of possibilities, like letting overly demanding containers believe they are running in privileged mode. This is welcome, since reducing the attack surface of container images still seems to be a challenge for many.

Do you remember the last time someone requested the CAP_SYS_ADMIN capability? If you're like us, you were probably hesitant to grant it. Thanks to this enhancement, such permissions will be available inside the Pod, but not on the host. Mounting a FUSE filesystem or starting a VPN inside a container can stop being a headache.

If you haven't visualized it yet, let's say it in plainer language: processes inside the container will have two identities (UID/GID). One inside the Pod, and a different one outside, on the host, where they could potentially do more harm.

Do you want to start using it already? Enable the UserNamespacesStatelessPodsSupport feature gate and set spec.hostUsers to false in the Pod (true or unset uses the host users, as Kubernetes currently does). This feature is not yet suitable for production, though.

To find out more, the KEP contains additional information and an exhaustive list of CVEs whose impact this feature would have mitigated.
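To picture those two identities, here is a small Python sketch that translates IDs the way the kernel's /proc/<pid>/uid_map file describes them: each line holds the first ID inside the namespace, the first ID on the host, and the length of the range. The mapping of 65536 IDs starting at host UID 100000 is a hypothetical example, not necessarily what the kubelet assigns.

```python
from typing import List, Tuple

Mapping = Tuple[int, int, int]  # (first ID inside the namespace, first ID on the host, length)


def parse_id_map(text: str) -> List[Mapping]:
    """Parse the contents of /proc/<pid>/uid_map (or gid_map)."""
    mappings = []
    for line in text.strip().splitlines():
        inside, outside, length = (int(value) for value in line.split())
        mappings.append((inside, outside, length))
    return mappings


def host_id(container_id: int, mappings: List[Mapping]) -> int:
    """Translate a UID/GID seen inside the container into the host UID/GID."""
    for inside, outside, length in mappings:
        if inside <= container_id < inside + length:
            return outside + (container_id - inside)
    raise ValueError(f"id {container_id} is not mapped in this namespace")


mapping = parse_id_map("0 100000 65536\n")  # hypothetical mapping
print(host_id(0, mapping))     # 100000: root in the Pod is an unprivileged user on the host
print(host_id(1000, mapping))  # 101000
```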

#2008 Forensic Container Checkpointing

Stage: Graduating to Alpha

Feature group: node

Feature gate: ContainerCheckpointRestore Default value: false

Container Checkpointing enables taking a snapshot of a running container.

This snapshot can be transferred to another node where a forensic investigation can be started. Since the analysis is done on a copy of the affected container, any possible attacker with access to the original will not be aware of it.

This feature uses the newly introduced CRI API so the kubelet can request a one-time call to create the checkpoint.

A snapshot is requested via the kubelet's /checkpoint endpoint and stored as a .tar archive (e.g., checkpoint-<podFullName>-<containerName>-<timestamp>.tar) in the checkpoints directory under the kubelet's --root-dir (by default, /var/lib/kubelet/checkpoints).
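To make the naming concrete, this sketch builds the kubelet URL for a checkpoint request and the expected archive path. It assumes the kubelet API listens on its default port 10250, that the endpoint path is /checkpoint/<namespace>/<pod>/<container>, and that <podFullName> is <pod>_<namespace> with an RFC 3339 UTC timestamp; the node, Pod, and container names are hypothetical. A real request must be a POST authenticated against the kubelet, for example with client certificates.

```python
from datetime import datetime, timezone


def checkpoint_url(node: str, namespace: str, pod: str, container: str, port: int = 10250) -> str:
    """kubelet endpoint that creates a checkpoint when it receives a POST request."""
    return f"https://{node}:{port}/checkpoint/{namespace}/{pod}/{container}"


def checkpoint_archive(namespace: str, pod: str, container: str, when: datetime,
                       root_dir: str = "/var/lib/kubelet") -> str:
    """Expected path of the resulting .tar archive on the node."""
    pod_full_name = f"{pod}_{namespace}"  # assumption: podFullName is <pod>_<namespace>
    timestamp = when.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return f"{root_dir}/checkpoints/checkpoint-{pod_full_name}-{container}-{timestamp}.tar"


taken_at = datetime(2022, 8, 23, 10, 0, 0, tzinfo=timezone.utc)
print(checkpoint_url("worker-1", "default", "web", "nginx"))
# https://worker-1:10250/checkpoint/default/web/nginx
print(checkpoint_archive("default", "web", "nginx", taken_at))
# /var/lib/kubelet/checkpoints/checkpoint-web_default-nginx-2022-08-23T10:00:00Z.tar
```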

#2831 Kubelet OpenTelemetry tracing

Stage: Graduating to Alpha

Feature group: node

Feature gate: KubeletTracing Default value: false

In line with KEP-647 on the API server, this enhancement adds OpenTelemetry tracing to the gRPC calls made by the kubelet.

These traces can provide insights on the interactions at the node level, for example, between the kubelet and the container runtime.

This will help administrators investigate latency issues occurring at the node level when creating or deleting Pods, attaching volumes to containers, etc.

#3085 pod sandbox ready condition

Stage: Graduating to Alpha

Feature group: node

Feature gate: PodHasNetworkCondition Default value: false

A new condition, PodHasNetwork, has been added to the Pod definition to let the Kubelet indicate that the pod sandbox has been created, and the CRI runtime has configured its network. This condition marks an important milestone in the pod's lifecycle, similar to ContainersReady or the Ready conditions.

Until now, once the Pod had been successfully scheduled (setting the PodScheduled condition), there were no other specific conditions regarding the initialization of its network.

This new condition will benefit cluster operators configuring components involved in Pod sandbox creation, like CSI plugins, the CRI runtime, CNI plugins, etc.

#3327 CPU Manager policy: socket alignment

Stage: Net New to Alpha

Feature group: node

Feature gate: CPUManagerPolicyAlphaOptions Default value: false

This adds a new option to the CPUManager static policy, align-by-socket, that helps achieve predictable performance in cases where aligning CPU allocations at NUMA boundaries would result in assigning CPUs from different sockets.

This complements previous efforts to have more control over which CPU cores your workloads actually run on:

#2254 cgroup v2

Stage: Graduating to Stable

Feature group: node

Feature gate: N/A

This enhancement covers the work done to make Kubernetes compatible with Cgroups v2, starting with the configuration files.

Check the new configuration values, as some value ranges change. For example, CPU shares, which cgroups v1 expresses as cpu.shares in the range [2-262144], become cpu.weight values in the range [1-10000] in cgroups v2.
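The two ranges are related by a linear mapping. The function below mirrors the conversion that, as far as we know, runc applies when translating cpu.shares into cpu.weight; treat it as an illustration rather than the reference implementation. Note how 1024 shares (what a 1 CPU request translates to) ends up as a weight of only 39.

```python
def cpu_shares_to_weight(shares: int) -> int:
    """Linearly map cgroup v1 cpu.shares [2, 262144] onto cgroup v2 cpu.weight [1, 10000]."""
    if shares == 0:
        return 0  # 0 means "not set", so no weight is written
    if not 2 <= shares <= 262144:
        raise ValueError(f"cpu.shares out of range: {shares}")
    return 1 + ((shares - 2) * 9999) // 262142


for shares in (2, 1024, 262144):
    print(shares, "->", cpu_shares_to_weight(shares))
# 2 -> 1
# 1024 -> 39
# 262144 -> 10000
```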

Read more in our "What's new in Kubernetes 1.22" article.

#277 Ephemeral Containers

Stage: Graduating to Stable

Feature group: node

Feature gate: EphemeralContainers Default value: true

Ephemeral containers are a great way to debug running pods. Although you can't add regular containers to a pod after creation, you can run ephemeral containers with kubectl debug.

Read more in our "Kubernetes 1.16 – What's new?" article.

#1029 ephemeral storage quotas

Stage: Graduating to Beta

Feature group: node

Feature gate: LocalStorageCapacityIsolationFSQuotaMonitoring Default value: true

A new mechanism to calculate quotas that is more efficient and accurate than scanning ephemeral volumes periodically.

Read more in our "Kubernetes 1.15 – What's new?" article.

#2238 Add configurable grace period to probes

Stage: Major Change to Beta

Feature group: node

Feature gate: ProbeTerminationGracePeriod Default value: true

This enhancement introduces a second terminationGracePeriodSeconds field, inside the livenessProbe object, to differentiate two situations: how long Kubernetes should wait before killing a container under regular circumstances, and how long it should wait when the kill is due to a failed livenessProbe.

Read more in our "Kubernetes 1.21 – What's new?" article.

#2413 seccomp by default

Stage: Graduating to Beta

Feature group: node

Feature gate: SeccompDefault Default value: true

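Kubernetes can now increase the security of your containers by running them with the RuntimeDefault seccomp profile by default. Note that, besides the feature gate, this needs to be turned on in the kubelet with the --seccomp-default flag.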

Read more in our "What's new in Kubernetes 1.22" article.

Scheduling in Kubernetes 1.25

#3094 Take taints/tolerations into consideration when calculating PodTopologySpread Skew

Stage: Graduating to Alpha

Feature group: scheduling

Feature gate: NodeInclusionPolicyInPodTopologySpread Default value: false

As we discussed in our "Kubernetes 1.16 – What's new?" article, the topologySpreadConstraints field allows you to spread your workloads across nodes. It does so together with maxSkew, which represents the maximum difference in the number of Pods between two given topology domains.

The newly added fields nodeAffinityPolicy and nodeTaintsPolicy (with values Honor or Ignore) control whether node affinity and node taints are taken into account when calculating this pod topology spread skew:

- nodeAffinityPolicy: Honor (default) means that only nodes matching the Pod's nodeAffinity and nodeSelector are included in the skew calculation.

- nodeAffinityPolicy: Ignore means that all nodes are included.

- nodeTaintsPolicy: Honor means that untainted nodes, plus tainted nodes whose taints the incoming Pod tolerates, are included.

- nodeTaintsPolicy: Ignore (default) means that all nodes are included.

This prevents some Pods from getting stuck in an unexpected Pending state because the skew calculation counted nodes with taints they don't tolerate.
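The following simplified Python model shows why this matters. It assumes a maxSkew of 1, zones as topology domains, and reduces taints and tolerations to plain keys; node names and the taint are hypothetical. With nodeTaintsPolicy: Ignore, the empty but tainted zone-c drags the global minimum down to 0, so placing the Pod in zone-a or zone-b exceeds maxSkew, while zone-c itself is off limits because of its taint. With Honor, zone-c is left out and zone-a becomes a valid choice.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Node:
    name: str
    zone: str                                      # value of the topologyKey label
    taints: Set[str] = field(default_factory=set)  # simplified: taint keys only
    matching_pods: int = 0                         # Pods matching the constraint's labelSelector


def domain_counts(nodes: List[Node], tolerations: Set[str], node_taints_policy: str) -> Dict[str, int]:
    """Count matching Pods per topology domain, honoring or ignoring taints."""
    counts: Dict[str, int] = defaultdict(int)
    for node in nodes:
        if node_taints_policy == "Honor" and not node.taints <= tolerations:
            continue  # the incoming Pod can't run here, so the node is left out
        counts[node.zone] += node.matching_pods
    return dict(counts)


def skew_if_placed(zone: str, counts: Dict[str, int]) -> int:
    """Skew resulting from placing the incoming Pod in the given domain."""
    return counts[zone] + 1 - min(counts.values())


nodes = [
    Node("a1", "zone-a", matching_pods=2),
    Node("b1", "zone-b", matching_pods=2),
    Node("c1", "zone-c", taints={"dedicated-gpu"}),  # hypothetical taint, not tolerated
]
for policy in ("Ignore", "Honor"):
    counts = domain_counts(nodes, tolerations=set(), node_taints_policy=policy)
    print(policy, counts, "skew in zone-a:", skew_if_placed("zone-a", counts))
# Ignore {'zone-a': 2, 'zone-b': 2, 'zone-c': 0} skew in zone-a: 3
# Honor {'zone-a': 2, 'zone-b': 2} skew in zone-a: 1
```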

#3243 Respect PodTopologySpread after rolling upgrades

Stage: Net New to Alpha

Feature group: scheduling

Feature gate: MatchLabelKeysInPodTopologySpread Default value: false

PodTopologySpread gives better control over how evenly related Pods are distributed. However, when rolling out a new set of Pods, the existing Pods (soon to disappear) are included in the calculations, which might lead to an uneven distribution of the new ones.

This enhancement adds a new field to the Pod's topology spread constraints that lets the calculation take arbitrary Pod labels into account, enabling the scheduler to build more precise sets of Pods before calculating the spread.

In the following configuration, we can observe two relevant fields, labelSelector and matchLabelKeys. Until now, only labelSelector has determined which Pods are counted in a topology domain. By adding one or more keys to matchLabelKeys, like the well-known pod-template-hash, the scheduler can distinguish between Pods from different ReplicaSets of the same Deployment, and calculate the spread more accurately.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-pod
spec:
  # Configure a topology spread constraint
  topologySpreadConstraints:
    - maxSkew: <integer>
      minDomains: <integer> # optional; alpha since v1.24
      topologyKey: <string>
      whenUnsatisfiable: <string>
      labelSelector: <object>
      matchLabelKeys: <list> # optional; alpha since v1.25
  ### other Pod fields go here
```

This feature might not change your life, or even the performance of your cluster. But if you ever wondered why your Pods were not distributed the way you wanted, here is the answer.
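Conceptually, each key in matchLabelKeys is looked up in the incoming Pod's labels and ANDed into labelSelector. This Python sketch is a simplified model (equality-only selectors, made-up pod-template-hash values) showing how, during a rolling update, the set of Pods considered shrinks from the whole Deployment to the new ReplicaSet.

```python
from typing import Dict, List

Labels = Dict[str, str]


def effective_selector(label_selector: Labels, match_label_keys: List[str], incoming: Labels) -> Labels:
    """Extend labelSelector with the incoming Pod's values for each matchLabelKeys key."""
    selector = dict(label_selector)
    for key in match_label_keys:
        if key in incoming:  # keys missing from the incoming Pod's labels are ignored
            selector[key] = incoming[key]
    return selector


def count_matching(pods: List[Labels], selector: Labels) -> int:
    return sum(all(pod.get(k) == v for k, v in selector.items()) for pod in pods)


old = [{"app": "web", "pod-template-hash": "7d9f"}] * 3  # Pods of the outgoing ReplicaSet
new = [{"app": "web", "pod-template-hash": "5c8b"}]      # Pods of the new ReplicaSet
incoming = {"app": "web", "pod-template-hash": "5c8b"}

print(count_matching(old + new, effective_selector({"app": "web"}, [], incoming)))                    # 4
print(count_matching(old + new, effective_selector({"app": "web"}, ["pod-template-hash"], incoming)))  # 1
```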

#785 Graduate the kube-scheduler ComponentConfig to GA

Stage: Graduating to Stable

Feature group: scheduling

Feature gate: NA

ComponentConfig is an ongoing effort to make component configuration more dynamic and directly reachable through the Kubernetes API.

Read more in our "Kubernetes 1.19 – What's new?" article.

In this version, there are also some deprecations and removals:

- KubeSchedulerConfiguration v1beta2 is deprecated; please migrate to v1beta3 or v1.

- The scheduler plugin SelectorSpread is removed; use PodTopologySpread instead.

Read about previous deprecations in our "What's new in Kubernetes 1.23" article.

#3022 Min domains in PodTopologySpread

Stage: Graduating to Beta

Feature group: scheduling

Feature gate: MinDomainsInPodTopologySpread Default value: true

The new minDomains field sets a minimum number of domains that should count as eligible, even if they don't exist yet when a new Pod is scheduled. When there are fewer eligible domains than minDomains, Pods that would exceed maxSkew stay Pending, which lets the cluster autoscaler provision nodes in new domains.
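Here is a simplified Python model of that rule. It assumes whenUnsatisfiable: DoNotSchedule (the only mode where minDomains applies) and zones as topology domains: when there are fewer eligible domains than minDomains, the global minimum is treated as 0.

```python
from typing import Dict


def global_minimum(counts: Dict[str, int], min_domains: int = 1) -> int:
    """Minimum matching Pods per eligible domain; 0 if there are fewer domains than minDomains."""
    if len(counts) < min_domains:
        return 0
    return min(counts.values())


def can_place(zone: str, counts: Dict[str, int], max_skew: int, min_domains: int = 1) -> bool:
    return counts.get(zone, 0) + 1 - global_minimum(counts, min_domains) <= max_skew


counts = {"zone-a": 2, "zone-b": 2}
print(can_place("zone-a", counts, max_skew=1))                 # True
print(can_place("zone-a", counts, max_skew=1, min_domains=3))  # False: the Pod stays Pending
print(can_place("zone-c", counts, max_skew=1, min_domains=3))  # True: a new zone would accept it
```

In the second case, the Pod can't go to either existing zone, which is the signal the cluster autoscaler needs to add a node in a third one.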

Read more in our "What's new in Kubernetes 1.24" article.

Kubernetes 1.25 Storage

#1710 SELinux relabeling using mount options

Stage: Graduating to Alpha

Feature group: storage

Feature gate: SELinuxMountReadWriteOncePod Default value: false

This feature is meant to speed up the mounting of PersistentVolumes using SELinux. By using the context mount option, Kubernetes will apply the security context to the whole volume at mount time instead of changing the context of the files recursively. This implementation has some caveats, and in the first phase it will only be applied to volumes backed by PersistentVolumeClaims with the ReadWriteOncePod access mode.

#3107 NodeExpansion secret

Stage: Graduating to Alpha

Feature group: storage

Feature gate: CSINodeExpandSecret Default value: false

Storage is a requirement, not only for stateful applications, but also for cluster nodes. Expanding a volume using credentials was already possible in previous versions of Kubernetes using StorageClass secrets. However, when expanding the volume on the node (the NodeExpandVolume operation), there was no mechanism to pass those credentials.

This enhancement enables passing a secret reference to the CSI driver by leveraging two new parameters in the StorageClass definition:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-storage-sc
provisioner: example.csi.vendor.io   # hypothetical CSI driver name
allowVolumeExpansion: true
parameters:
  csi.storage.k8s.io/node-expand-secret-name: secret-name
  csi.storage.k8s.io/node-expand-secret-namespace: mysecretsns
```

Stay tuned, there will soon be a blog post with more detailed information on the Kubernetes site. In the meantime, refer to the KEP to discover more.

#3333 Reconcile default StorageClass in PersistentVolumeClaims (PVCs)

Stage: Net New to Alpha

Feature group: storage

Feature gate: RetroactiveDefaultStorageClass Default value: false

This enhancement consolidates the behavior when PVCs are created without a StorageClass, adding an explicit annotation to PVCs using the default class. This helps manage the case when cluster administrators change the default storage class, forcing a brief moment without a default storage class between removing the old one and setting the new one.

With this feature enabled, when a new default is set, all PVCs without a StorageClass will retroactively be assigned the default StorageClass.

#361 Local ephemeral storage resource management

Stage: Graduating to Stable

Feature group: storage

Feature gate: LocalStorageCapacityIsolation Default value: true

A long time coming since it was first introduced as alpha in Kubernetes 1.7, this allows us to limit the ephemeral storage used by Pods by configuring requests.ephemeral-storage and limits.ephemeral-storage, in the same way as for CPU and memory.

Kubernetes will evict Pods whose containers exceed their configured limits. As with CPU and memory, the scheduler will check the Pods' storage requests against the node's available local storage before scheduling them. In addition, administrators can configure ResourceQuotas to set constraints on the total requests/limits in a namespace, and LimitRanges to configure default limits for Pods.

#625 CSI migration – core

Stage: Graduating to Stable

Feature group: storage

Feature gate: CSIMigration Default value: true

As we covered in our "Kubernetes 1.15 – What's new?" article, the storage plugins were originally in-tree, inside the Kubernetes codebase, increasing the complexity of the base code and hindering extensibility.

There is an ongoing effort to move all this code to loadable plugins that can interact with Kubernetes through the Container Storage Interface.

Most of the PersistentVolume types have been deprecated, with only these left:

- cephfs

- csi

- fc

- hostPath

- iscsi

- local

- nfs

- rbd

This effort has been split between several tasks: #178, #1487, #1488, #1490, #1491, and #2589.

#1488 CSI migration – GCE

Stage: Graduating to Stable

Feature group: storage

Feature gate: CSIMigrationGCE Default value: true

This moves the support for Persistent Disk in GCE (Google Compute Engine) to the out-of-tree pd.csi.storage.gke.io Container Storage Interface (CSI) driver (which needs to be installed on the cluster).

This enhancement is part of the #625 In-tree storage plugin to CSI Driver Migration effort.

#596 CSI Ephemeral volumes

Stage: Graduating to Stable

Feature group: storage

Feature gate: CSIInlineVolume Default value: true

Originally, CSI volumes could only be referenced via PV/PVC. This works well for remote persistent volumes. This feature makes it possible to use CSI volumes as local ephemeral volumes as well.

Read more in our "What's new in Kubernetes 1.14" article.

#1487 CSI migration – AWS

Stage: Graduating to Stable

Feature group: storage

Feature gate: CSIMigrationAWS Default value: true

As we covered in our "What's new in Kubernetes 1.23" article, all plugin operations for the in-tree AWS EBS plugin are now redirected to the out-of-tree 'ebs.csi.aws.com' Container Storage Interface (CSI) driver.

This enhancement is part of the #625 In-tree storage plugin to CSI Driver Migration effort.

#1491 CSI migration – vSphere

Stage: Graduating to Beta

Feature group: storage

Feature gate: CSIMigrationvSphere Default value: false

As we covered in our "What's new in Kubernetes 1.19" article, the CSI driver for vSphere has been stable for some time. Now, all plugin operations for vspherevolume are redirected to the out-of-tree 'csi.vsphere.vmware.com' driver.

This enhancement is part of the #625 In-tree storage plugin to CSI Driver Migration effort.

#2589 CSI migration – Portworx

Stage: Graduating to Beta

Feature group: storage

Feature gate: CSIMigrationPortworx Default value: false

As we covered in our "What's new in Kubernetes 1.23" article, all plugin operations for the in-tree Portworx plugin are now redirected to the out-of-tree 'pxd.portworx.com' Container Storage Interface (CSI) driver.

This enhancement is part of the #625 In-tree storage plugin to CSI Driver Migration effort.

Other enhancements in Kubernetes 1.25

#2876 CRD validation expression language

Stage: Graduating to Beta

Feature group: api-machinery

Feature gate: CustomResourceValidationExpressions Default value: true

This enhancement implements a validation mechanism for Custom Resource Definitions (CRDs), as a complement to the existing one based on webhooks.

These validation rules use the Common Expression Language (CEL) and are included in CustomResourceDefinition schemas, using the x-kubernetes-validations extension.

Two new metrics are provided to track the compilation and evaluation times: cel_compilation_duration_seconds and cel_evaluation_duration_seconds.

Read more in our "What's new in Kubernetes 1.23" article.

#2885 Server side unknown field validation

Stage: Graduating to Beta

Feature group: api-machinery

Feature gate: ServerSideFieldValidation Default value: true

Currently, you can use kubectl --validate=true to indicate that a request should fail if it specifies unknown fields on an object. This enhancement implements that validation on the kube-apiserver side.

Read more in our "Kubernetes 1.23 – What's new?" article.

#3203 Auto-refreshing official CVE feed

Stage: Net New to Alpha

Feature group: security

Feature gate: N/A

Let's say you need a list of CVEs with relevant information about Kubernetes that you want to fetch programmatically. Or, to keep your peace of mind, you want to browse through a list of fixed vulnerabilities, knowing that the official K8s team is publishing them.

One step further, you may be a Kubernetes provider that wants to inform its customers about which CVEs have recently been announced, need to be fixed, etc.

Either way, this enhancement isn't one that you'll enable in your cluster, but one you'll consume via web resources. You only need to look for the label official-cve-feed among the vulnerability announcements.

Here is the KEP describing the achievement. There's no need to go immediately and read it, but maybe one day it will become useful.
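If you want to consume the feed programmatically, here is a hedged Python sketch. It assumes the feed is published in JSON Feed format at the URL below, with each item carrying the CVE identifier in id and a link in url; double-check the address and fields against the Kubernetes documentation before relying on them. Parsing is kept separate from downloading so it can be tried offline.

```python
import json
from typing import List, Tuple
from urllib.request import urlopen

# Assumption: location of the official feed in JSON Feed format
FEED_URL = "https://kubernetes.io/docs/reference/issues-security/official-cve-feed/index.json"


def parse_cve_feed(raw: str) -> List[Tuple[str, str]]:
    """Return (CVE id, URL) pairs from a JSON Feed document."""
    feed = json.loads(raw)
    return [(item.get("id", ""), item.get("url", "")) for item in feed.get("items", [])]


def fetch_cve_feed(url: str = FEED_URL) -> List[Tuple[str, str]]:
    """Download the feed and parse it (requires network access)."""
    with urlopen(url, timeout=10) as response:
        return parse_cve_feed(response.read().decode("utf-8"))


# Offline example with a hypothetical feed document
sample = '{"items": [{"id": "CVE-0000-0001", "url": "https://example.com/cve-1"}]}'
print(parse_cve_feed(sample))  # [('CVE-0000-0001', 'https://example.com/cve-1')]
# for cve_id, link in fetch_cve_feed(): print(cve_id, link)
```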

#2802 Identify Windows pods at API admission level authoritatively

Stage: Graduating to Stable

Feature group: windows

Feature gate: IdentifyPodOS Default value: true

This enhancement adds an OS field to PodSpec, so you can define what operating system a pod should be running on. That way, Kubernetes has better context to manage Pods.

Read more in our "Kubernetes 1.23 – What's new?" article.

That's all for Kubernetes 1.25, folks! Exciting as always; get ready to upgrade your clusters if you are intending to use any of these features.

If you liked this, you might want to check out our previous 'What's new in Kubernetes' editions:

- Kubernetes 1.24 – What's new?

- Kubernetes 1.23 – What's new?

- Kubernetes 1.22 – What's new?

- Kubernetes 1.21 – What's new?

- Kubernetes 1.20 – What's new?

- Kubernetes 1.19 – What's new?

- Kubernetes 1.18 – What's new?

- Kubernetes 1.17 – What's new?

- Kubernetes 1.16 – What's new?

- Kubernetes 1.15 – What's new?

- Kubernetes 1.14 – What's new?

- Kubernetes 1.13 – What's new?

- Kubernetes 1.12 – What's new?

Get involved in the Kubernetes community:

- Visit the project homepage.

- Check out the Kubernetes project on GitHub.

- Get involved with the Kubernetes community.

- Meet the maintainers on the Kubernetes Slack.

- Follow @KubernetesIO on Twitter.

And if you enjoy keeping up to date with the Kubernetes ecosystem, subscribe to our container newsletter, a monthly email with the coolest stuff happening in the cloud-native ecosystem.