Google Cloud Run is a serverless compute platform that automatically scales your stateless containers. In this post we are going to showcase how to secure the entire lifecycle of your Cloud Run services.

Sysdig provides security for Cloud Run for Anthos. With Sysdig Secure you can embed security and validate compliance across the serverless lifecycle. The Sysdig platform is open by design, with the scale, performance and usability enterprises demand.

Sysdig announced its support for Google Cloud’s Anthos earlier this year. Anthos has now integrated the Cloud Run serverless technology as a new native component, which is also fully supported by Sysdig.

Although we are going to use Anthos to illustrate the examples and use cases in this post, the Cloud Run serverless platform can also be deployed to a managed Google Cloud Run environment, Google Kubernetes Engine (GKE), GKE on-prem and multi-cloud environments. Cloud Run is based on Knative, ensuring that your applications remain portable and compliant with open standards.

Let’s start with a brief introduction to the platform:

Google Cloud Anthos

Anthos is an application management platform designed for hybrid cloud environments.

Anthos bridges the gap between managed cloud and on-prem deployments, providing a unified control plane and a consistent development and operations experience across physical servers, on-prem VMs, Compute Engine VMs, etc.

Built on top of ubiquitous cloud-native technologies like Kubernetes, Istio, and Knative, Anthos lets you seamlessly run your container workloads wherever you need them, simplifying the migration, modernization, and security controls for your applications.

Google Cloud Run

Cloud Run is built around the idea of combining the simplicity and abstraction of serverless computing with the flexibility of containerized workloads, in one single solution.

Using Cloud Run, you define your containers in the usual way (e.g., by writing a Dockerfile), but their infrastructure and lifecycle management are abstracted away from you, offering a native serverless experience. Cloud Run also manages your service's scale automatically, scaling up from and down to zero depending on traffic, almost instantaneously.

Strict serverless frameworks force you to follow certain constraints, such as choosing from a predefined set of supported languages, libraries and code frameworks. A standard containerized solution is more flexible in this regard, but it comes with an additional operations investment: provisioning, configuring, and managing servers. Cloud Run gives you the best of both worlds, offering the simplicity of a serverless platform without limiting the way you develop your service’s code.

You can build and deploy your Google Cloud Run applications on any compatible platform in three simple steps:

- Define: Describe the container by writing its Dockerfile. You choose the base operating system, frameworks and libraries, listening ports, etc.

- Publish: Publish the container image using the gcloud command-line tool. The image is stored in the Google Container Registry associated with your GCP project.

- Deploy: Execute the gcloud `run deploy` subcommand for your image, together with the service name and region. On successful deployment, the command line automatically displays your service’s URL. Your service is up and ready to go!
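The Define step lives in your Dockerfile; Publish and Deploy map to two gcloud invocations. The following is a minimal Python sketch that assembles those commands, assuming a hypothetical project ID `my-project`, service `hello` and region `us-central1`. It uses `gcloud builds submit --tag`, which builds the image with Cloud Build and pushes it to GCR in one step (pushing a locally built image with `docker push` is an alternative). It only prints the commands unless run with `--execute`, and Cloud Run for Anthos targets may need extra cluster-related flags that are not shown here.

```python
import shlex
import subprocess
import sys
from typing import List


def publish_command(project_id: str, service: str) -> List[str]:
    """Build the image with Cloud Build and push it to the project's GCR path."""
    return ["gcloud", "builds", "submit", "--tag", f"gcr.io/{project_id}/{service}"]


def deploy_command(project_id: str, service: str, region: str) -> List[str]:
    """Deploy the published image as a Cloud Run service in the given region."""
    image = f"gcr.io/{project_id}/{service}"
    return ["gcloud", "run", "deploy", service, "--image", image, "--region", region]


def main(execute: bool = False) -> None:
    # Hypothetical values: replace with your own project, service and region.
    steps = [
        publish_command("my-project", "hello"),
        deploy_command("my-project", "hello", "us-central1"),
    ]
    for argv in steps:
        print(shlex.join(argv))  # always show the exact command (dry run by default)
        if execute:
            subprocess.run(argv, check=True)  # stop at the first failing step


if __name__ == "__main__":
    main(execute="--execute" in sys.argv)
```

Keeping the commands as argument lists (rather than shell strings) avoids quoting problems when project or service names come from variables.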

Before we dive into more specific examples, there are several technical considerations that you need to keep in mind when deploying Cloud Run services:

- Cloud Run containers are stateless, which allows the platform to treat them as serverless functions.

- Your containers are required to accept HTTP requests on the port defined in the `PORT` environment variable.

- Even when you run the same container image, different configurations create container instances with different revision IDs. This unique identifier is extremely useful for determining which particular workload is behaving unexpectedly, when it was launched, and which YAML contains its complete definition. We will leverage this feature in the examples below to correlate executions and instances during the security forensics phase.
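To make the `PORT` requirement concrete, here is a minimal Python sketch of a stateless HTTP service that listens on whatever port the platform injects. The handler and its response text are illustrative assumptions (the “hello” service used later in this post is a different binary); the 8080 fallback matches Cloud Run's default and is only used for local runs where `PORT` is unset.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def get_port(default: int = 8080) -> int:
    """Read the listening port from PORT, falling back to a default for local runs."""
    return int(os.environ.get("PORT", default))


class HelloHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        # Stateless: the response depends only on the request, never on local files.
        body = b"Hello from Cloud Run!\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int) -> HTTPServer:
    # Bind on all interfaces: traffic reaches the container from outside, not via localhost.
    return HTTPServer(("0.0.0.0", port), HelloHandler)


if __name__ == "__main__":
    make_server(get_port()).serve_forever()
```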

Serverless security with Cloud Run and Sysdig

Containers are ephemeral by nature, and Cloud Run containers doubly so, since they are managed like serverless functions and scaled up and down on demand. According to our 2019 container usage report, 52% of containers live 5 minutes or less.

Does this mean that Cloud Run security needs to be relaxed, or that security controls will become a burden for your lightning-fast service deployment pipeline? No. You just need tools that are tailored to this workflow.

Sysdig Secure embeds security and compliance across all stages of the Cloud Run serverless lifecycle:

- Sysdig instrumentation communicates directly with the host kernel, so it is completely transparent from the point of view of the pods/containers.

- A single agent per host provides visibility, monitoring and security: one platform that correlates monitoring and security events, showing you the big picture.

- Sysdig natively understands container-native and Kubernetes metadata, allowing you to instantly organize and segment your data by namespace, container id, service id, etc.

Let’s showcase three common security use cases to get a taste of the possibilities of Cloud Run and Sysdig running together:

- Advanced image scanning for Cloud Run containers

- Runtime security for serverless Cloud Run workloads

- Forensics and incident response in a serverless context

Advanced image scanning for Cloud Run containers

As we mentioned earlier, after defining and building the container image that will be used for Cloud Run, you will need to publish it to your project’s Google Container Registry.

This step is straightforward: configure your Docker access credentials, tag your image according to your Google Cloud project ID, and push it:

```bash
docker push gcr.io/<your_project_id>/hello:latest
```

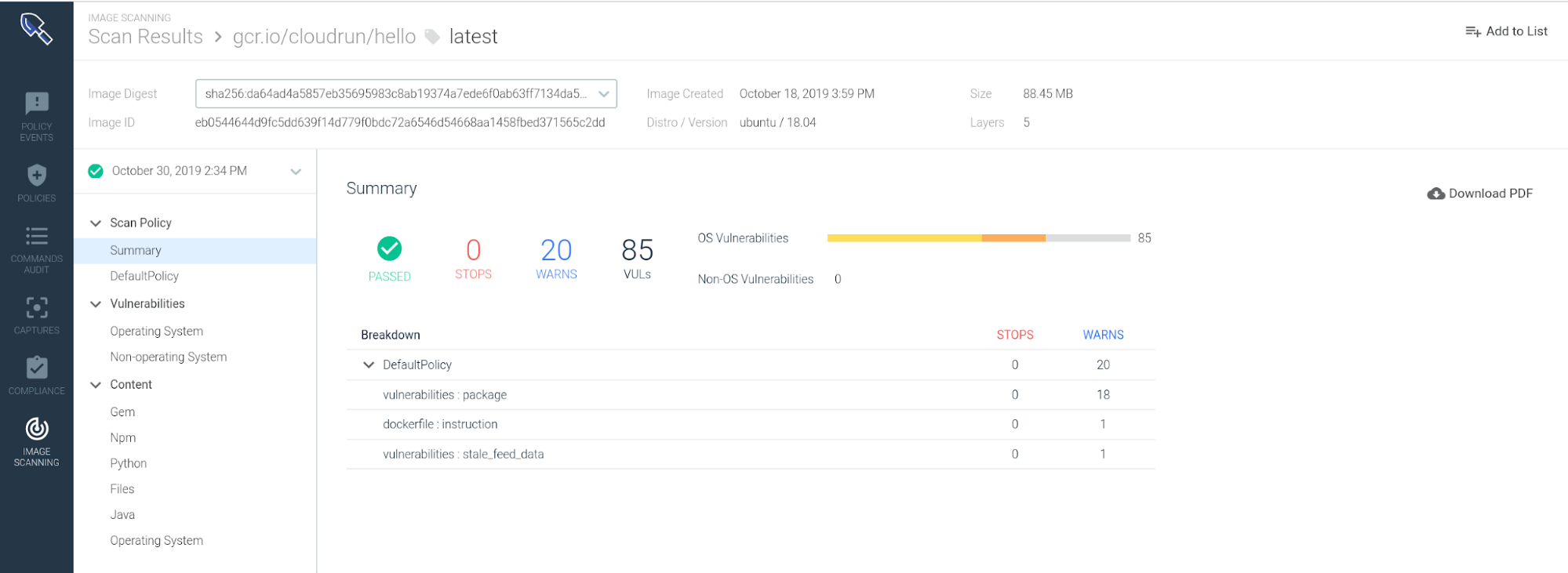

Now, we need to configure the Sysdig Secure backend to access the same repository that you are using for your Cloud Run project.

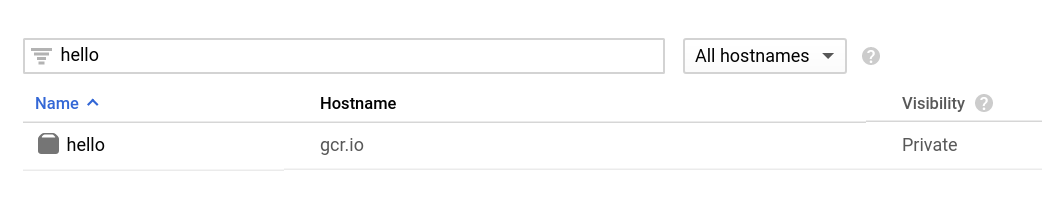

In the Sysdig Secure interface, open the Image Scanning section (on the left) and click on registry credentials:

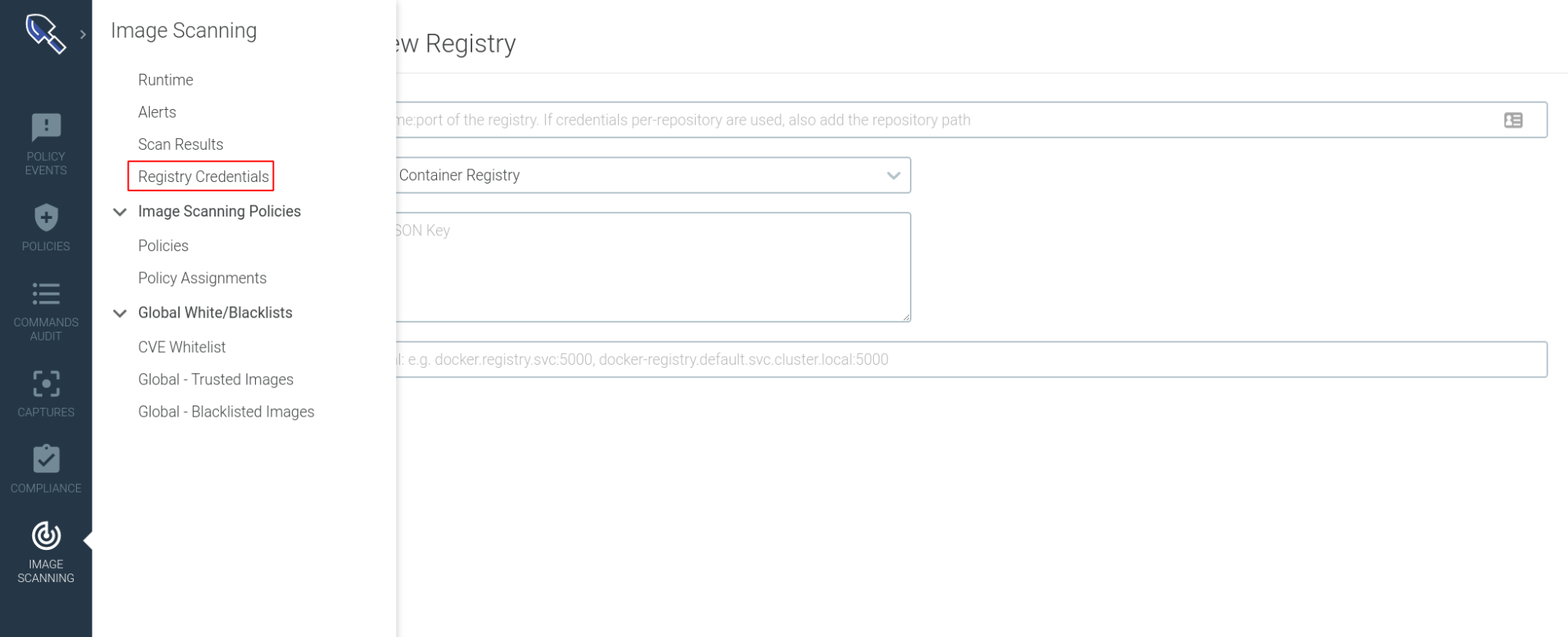

From this interface, you can add new container registries. Let’s go ahead and configure the GCR repository that we will be using for Cloud Run.

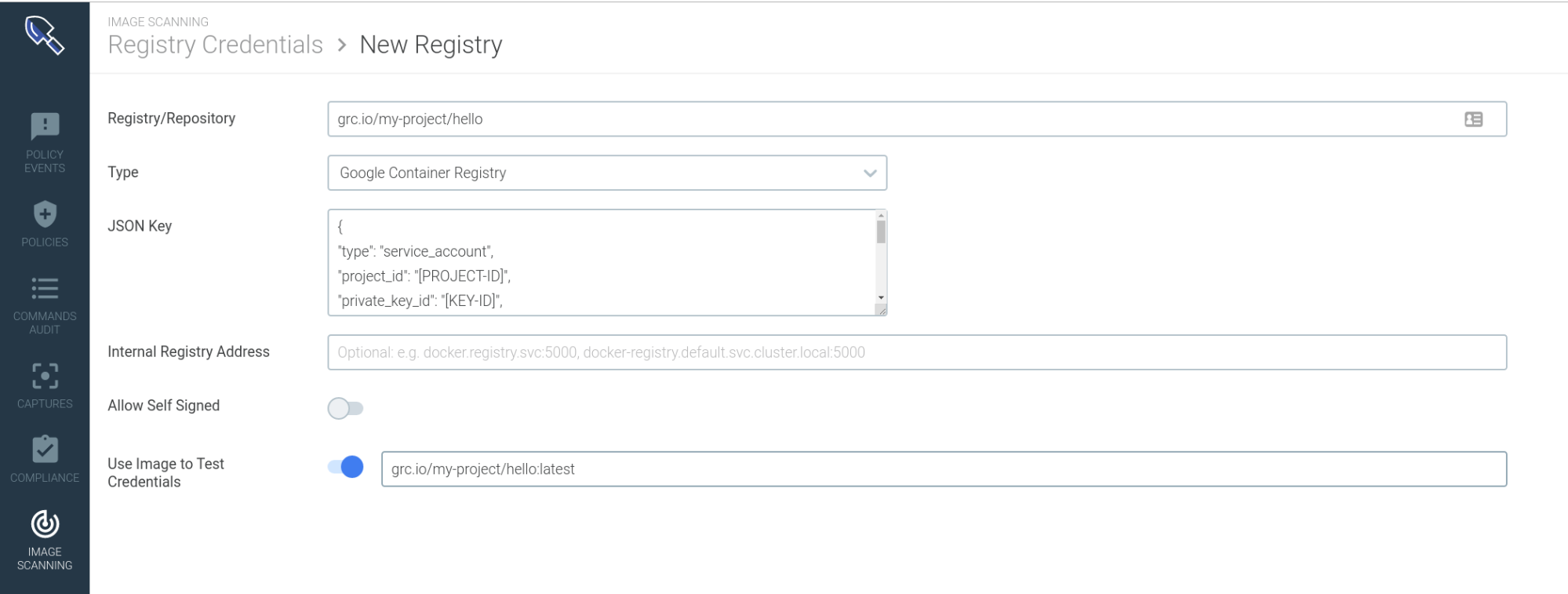

In the form, you will need to set the repository path, pick Google Container Registry as the registry type and obtain the JSON auth key associated with your account. You can, optionally, download an image from that repository to test connectivity and credentials.

Save the form. Sysdig Secure can now pull and analyze your Cloud Run images. In the report for a particular image, you will be able to browse:

- Base distro/version for this image

- Vulnerabilities found at the OS level

- Vulnerabilities found at Non-OS level (Python, npm, ruby gems, etc)

- Policy evaluation criteria and final decision for this image (pass|fail)

- Inventory for the content and files in this image, etc.

Detecting CVEs in your images is just the first step: you can also configure your own custom policies, or run queries to report on CVEs or packages in runtime or static environments.
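To illustrate what a custom policy decision looks like, here is a minimal Python sketch of a pass/fail evaluation over a scan report. The report structure, the severity scale and the rule itself (fail on any finding at or above High that already has a fixed version) are assumptions chosen for illustration; this is not Sysdig Secure's policy engine or API.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List, Optional, Tuple


class Severity(IntEnum):
    NEGLIGIBLE = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass(frozen=True)
class Vulnerability:
    cve: str
    package: str
    severity: Severity
    fixed_version: Optional[str]  # None when no fix has been published yet


def evaluate(vulns: List[Vulnerability],
             threshold: Severity = Severity.HIGH) -> Tuple[str, List[str]]:
    """Return ("pass" | "fail", reasons); only fixable findings at or above threshold fail."""
    reasons = [
        f"{v.cve} in {v.package} ({v.severity.name}), fixed in {v.fixed_version}"
        for v in vulns
        if v.severity >= threshold and v.fixed_version is not None
    ]
    return ("fail" if reasons else "pass", reasons)
```

Ignoring unfixable findings is one common design choice (nothing can be upgraded yet); a stricter policy could drop the `fixed_version` condition.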

Runtime security for serverless Cloud Run workloads

Image scanning and vulnerability reporting are essential parts of your cloud-native security, but security teams still need to detect and respond to attacks at runtime:

- A new 0-day vulnerability is released in the wild

- Malware slipped through the scanning phase undetected

- Your containers or hosts are accessed interactively to exploit a privilege escalation

- Your application binary is secure, but the deployment configuration is not, etc.

There are many security scenarios where you will require container-specific runtime security to adequately respond to these threats.

Google Cloud Run services are simple: you can immediately figure out which binaries they need to run, which ports they need to open, which files they need to access, etc. Using the Sysdig runtime behavioural language, you can adopt a least-privilege policy for your Cloud Run services: everything that is not allowed will be automatically flagged.

Sysdig analyzes the live runtime behaviour of every container and of the host, including the Cloud Run containers that back your serverless applications. All this information is fed to the runtime rule engine, which triggers an alert if it detects a rule violation.

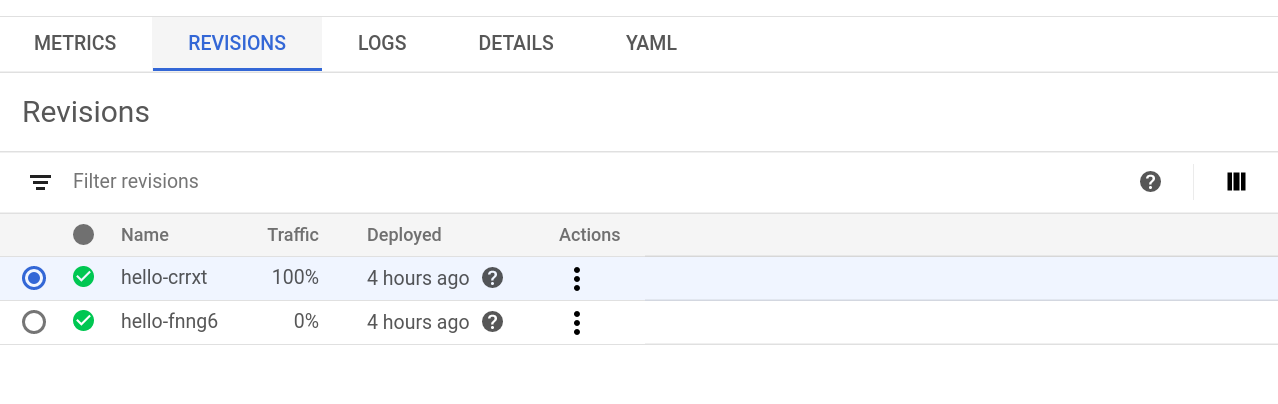

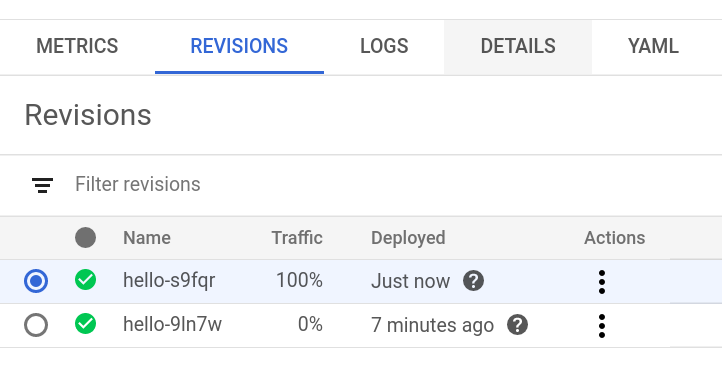

Let’s illustrate this with a simple example. We just launched our “hello” service using Google Cloud Run for Anthos:

As you can see in the image above, the same service has different revision IDs: whenever you change the configuration and relaunch the service, it is assigned a new unique ID.

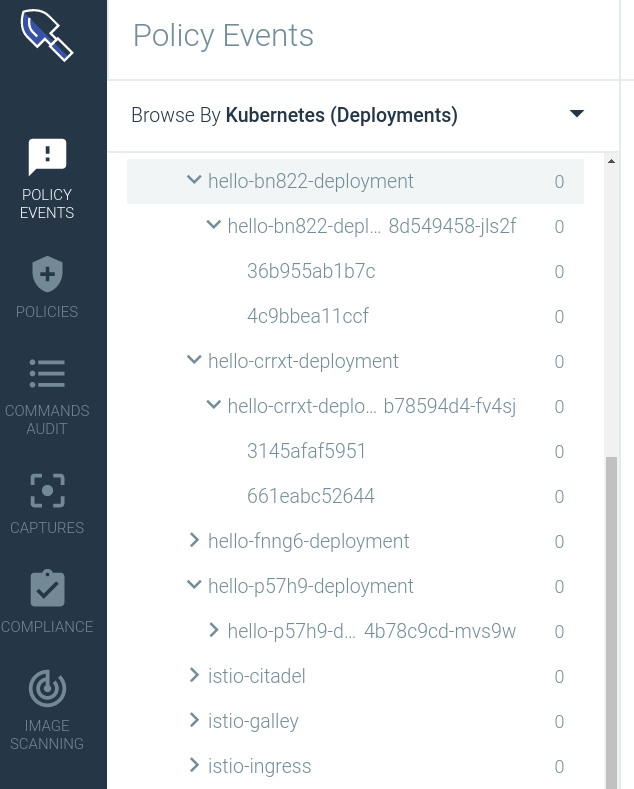

Using Sysdig, you will have full visibility over the containers that support your Cloud Run services, out of the box. Accessing either the Monitor or Secure interfaces of the Sysdig platform, you will be able to see the existing Cloud Run containers organized hierarchically using their Kubernetes metadata (namespace, deployment, annotations, labels).

The Sysdig agent will capture the Kubernetes labels and tags for every running container. For the Cloud Run context, you can use these tags for two immediately relevant use cases:

- Classifying the Cloud Run managed containers and telling them apart from the other “regular” containers in your cluster. This allows you to define different visualizations, security alerts, metric thresholds, etc., depending on the “type” of container you are scoping to.

- Retrieving the Revision ID for any current or past container that ran on your cluster, and correlating this information with security alerts or service performance events.
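Cloud Run for Anthos is built on Knative Serving, which labels the pods it manages with keys such as `serving.knative.dev/service` and `serving.knative.dev/revision`. The following Python sketch shows how such labels can drive both use cases above. The input dictionaries and revision names are hypothetical stand-ins for the metadata an agent collects, not Sysdig's data model.

```python
from typing import Dict, Optional

SERVICE_LABEL = "serving.knative.dev/service"
REVISION_LABEL = "serving.knative.dev/revision"


def is_cloud_run(labels: Dict[str, str]) -> bool:
    """Pods managed by Knative Serving carry a revision label; regular pods do not."""
    return REVISION_LABEL in labels


def revision_of(labels: Dict[str, str]) -> Optional[str]:
    """Return the revision ID for a Cloud Run container, or None for regular ones."""
    return labels.get(REVISION_LABEL)


def classify(containers: Dict[str, Dict[str, str]]) -> Dict[str, str]:
    """Map each container ID to 'cloud-run:<revision>' or 'regular'."""
    return {
        cid: f"cloud-run:{revision_of(labels)}" if is_cloud_run(labels) else "regular"
        for cid, labels in containers.items()
    }
```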

We know that our simple “hello” container executes just one process:

```
root 1 0.0 0.1 110760 11976 ? Ssl 11:18 0:10 /hello
```

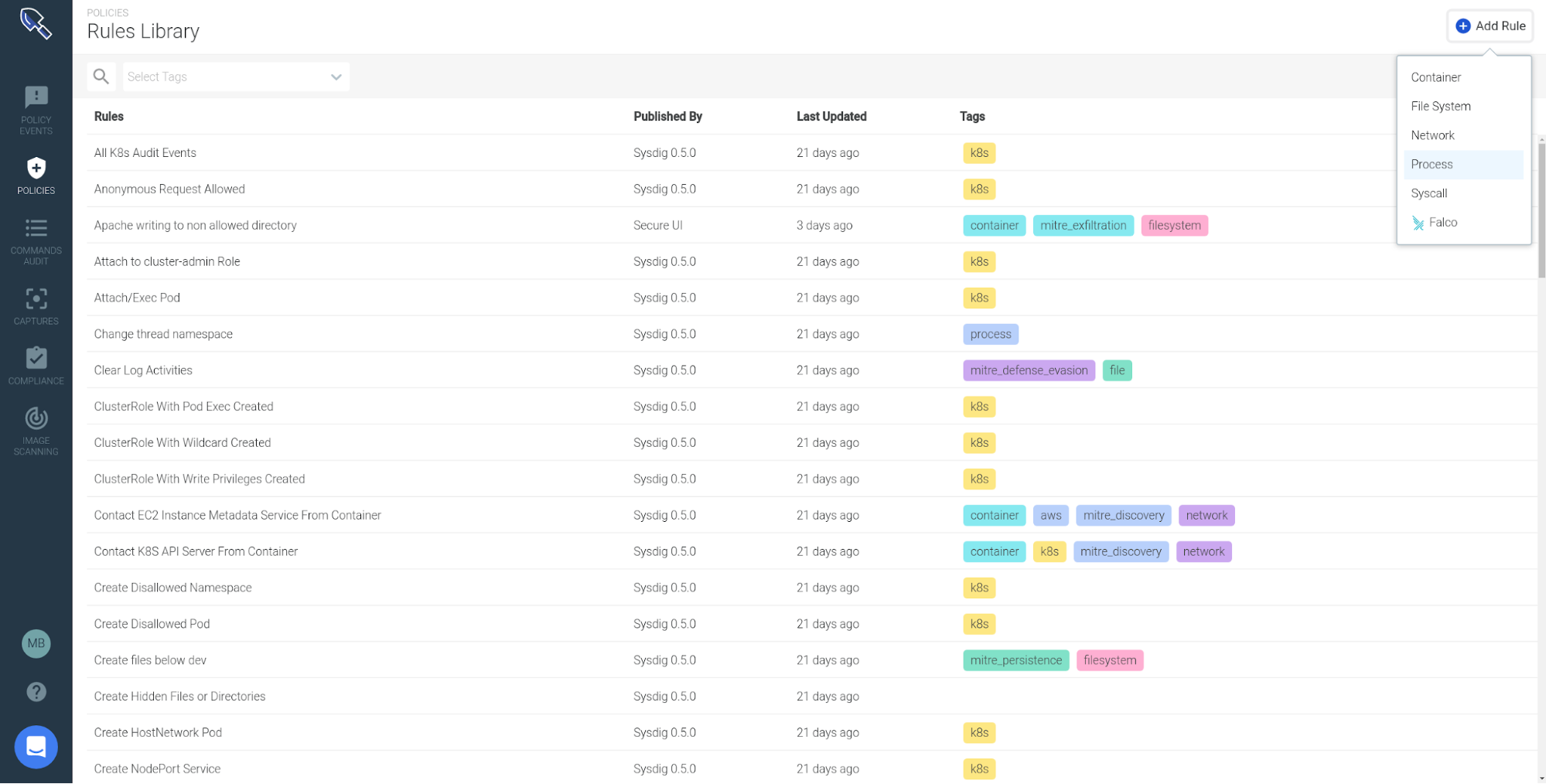

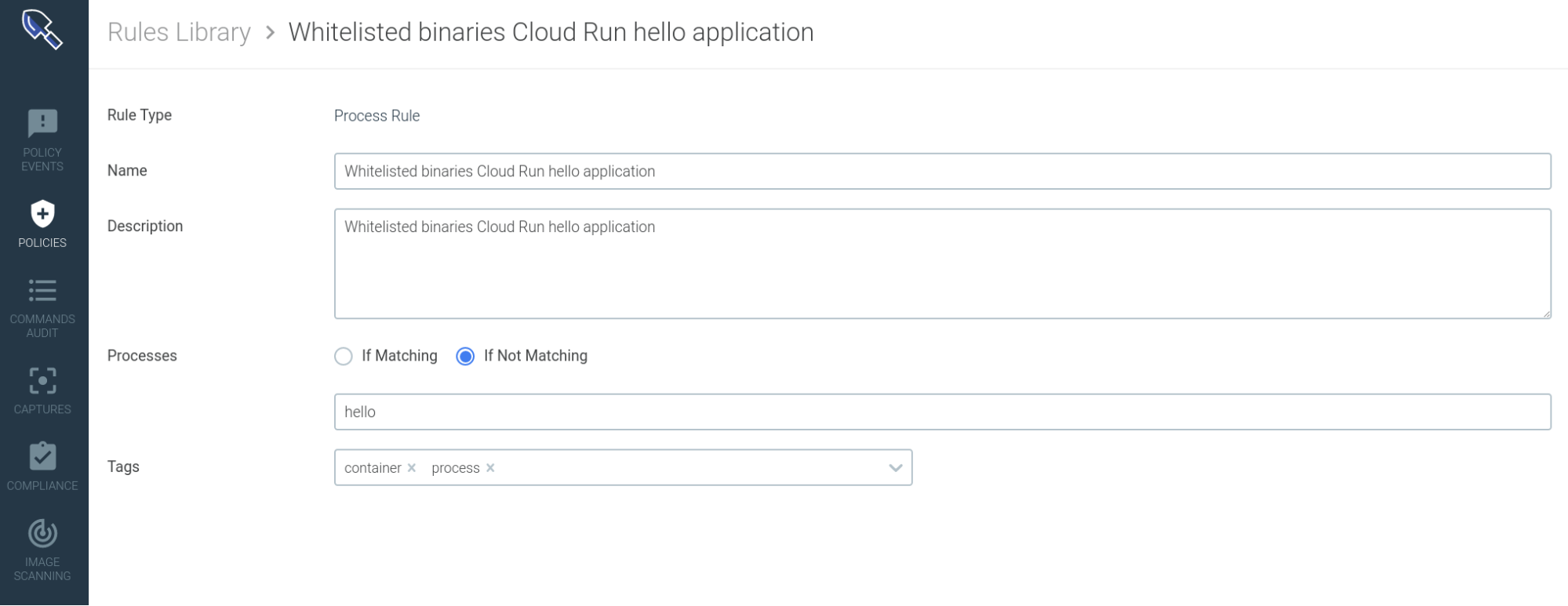

Let’s create a whitelisting rule that will alert you if any other binary is executed inside that container.

In Sysdig Secure, open the runtime rule library and create a new runtime rule that triggers whenever the syscall stream detects a binary that is not in the whitelist:
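Conceptually, the rule compares every process started inside the container against a whitelist. The Python sketch below models that check, assuming `/hello` is the only expected binary (as in the `ps` output above); the event structure and its field names are hypothetical, and the real rule is written in the runtime rule language inside Sysdig Secure.

```python
from dataclasses import dataclass
from typing import FrozenSet, List

# Assumption: the "hello" service only ever runs this binary.
ALLOWED_BINARIES: FrozenSet[str] = frozenset({"/hello"})


@dataclass(frozen=True)
class ProcessEvent:
    container_id: str
    exe_path: str  # hypothetical field: absolute path of the executed binary
    cmdline: str


def violations(events: List[ProcessEvent],
               allowed: FrozenSet[str] = ALLOWED_BINARIES) -> List[str]:
    """Return one alert message for every process whose binary is not whitelisted."""
    return [
        f"Unexpected process in container {e.container_id}: {e.cmdline} ({e.exe_path})"
        for e in events
        if e.exe_path not in allowed
    ]
```

Matching on the full binary path rather than the process name avoids an attacker bypassing the rule by copying a tool to a file named `hello`.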

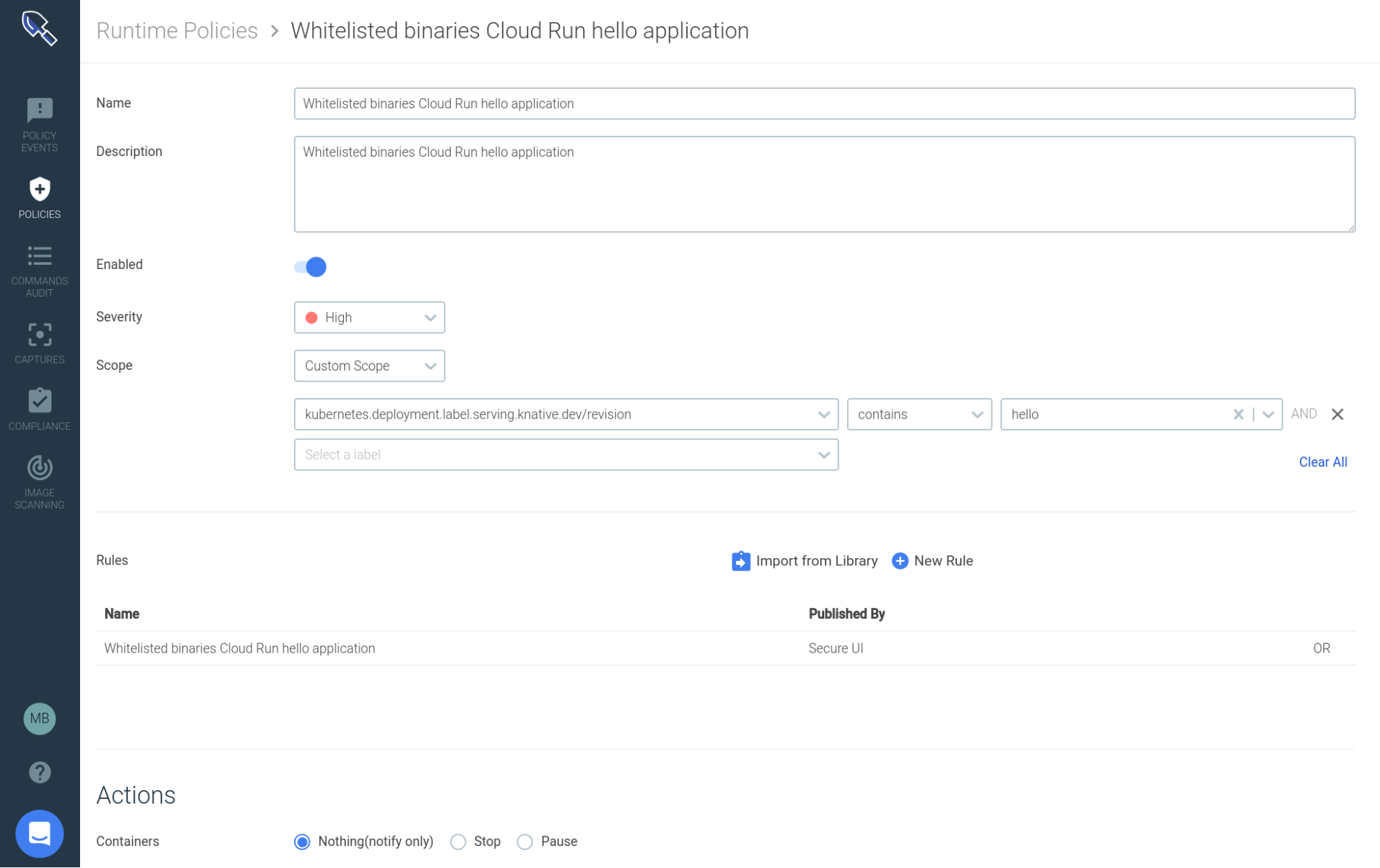

Now, let’s scope this rule and make it actionable by creating a runtime policy:

We are scoping this policy to containers whose revision tag contains the string hello. The policy applies to all the different revision IDs of the same serverless service, but it won’t affect the other regular containers living in our cluster.
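The scope is a substring match on the revision tag. This Python sketch, using hypothetical container names and revision IDs, shows why one scope covers every revision of the service while excluding regular containers. It also exposes the trade-off of a “contains” match: any other service whose revision name happens to include hello would fall in scope too, so an exact match on a service label can be tighter.

```python
from typing import Dict, List, Tuple

Container = Tuple[str, Dict[str, str]]  # (container name, tags)


def in_scope(tags: Dict[str, str], key: str = "revision", needle: str = "hello") -> bool:
    """True when the tag exists and contains the substring; untagged containers never match."""
    return needle in tags.get(key, "")


def scoped_names(containers: List[Container]) -> List[str]:
    """Names of the containers the policy would apply to."""
    return [name for name, tags in containers if in_scope(tags)]
```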

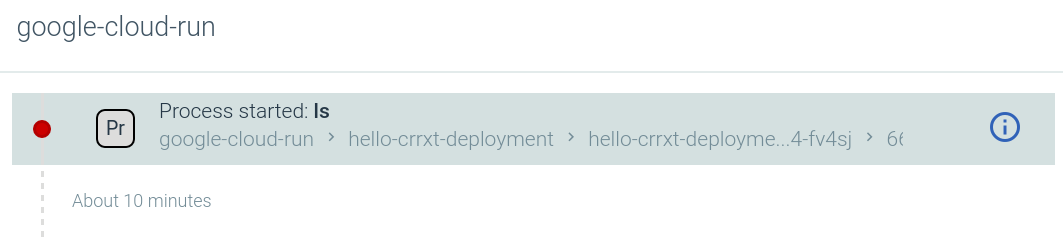

Let’s trigger this policy, for example by executing ls inside the container. You will soon receive an event like this:

The full details for the event display:

- Complete Kubernetes scope (host, namespace, pod name, revision ID)

- Commands audit information (PID, PPID, shell id, UID, cwd)

- Container id, full container name in Kubernetes, Image SHA256 digest

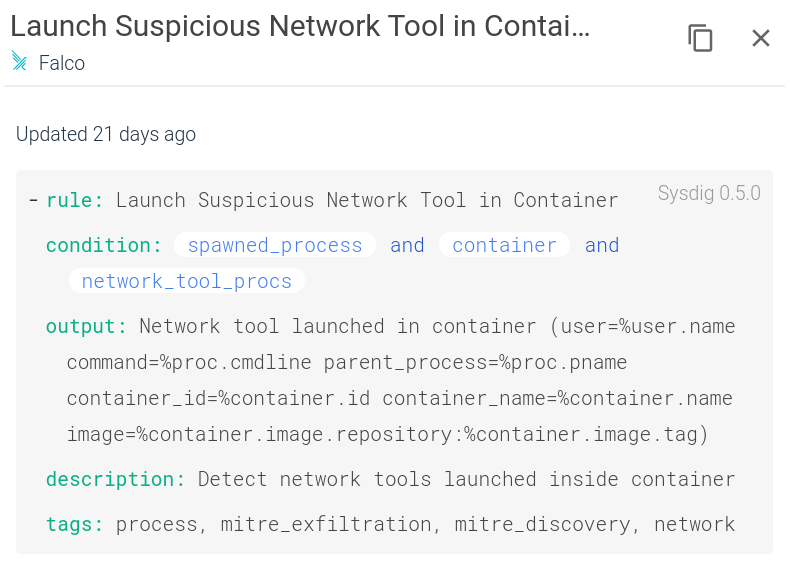

For the sake of simplicity, we demonstrated only a basic runtime rule in this example. Using the Falco runtime language, you can describe any advanced trigger condition you need to enforce in your scenario, including syscalls, container labels, Kubernetes attributes, lists, macros with subconditions and so on.

Forensics and incident response in a serverless context

Google Cloud Run is a serverless, autoscaled-on-demand environment. This means that the affected workload will probably be long gone by the time the security incident comes to your attention. How do you perform incident response and forensics in such a scenario? It’s like the CSI series, but without a body.

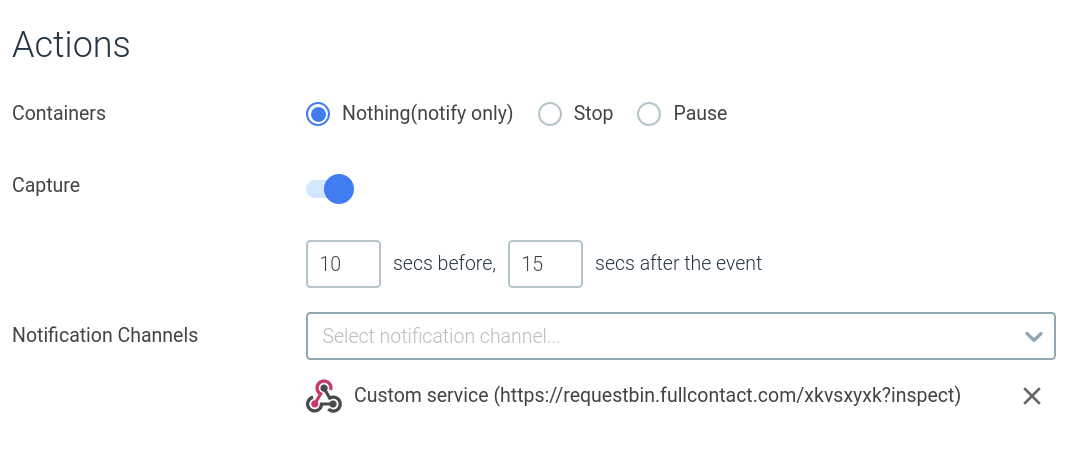

Again, it’s just a matter of using the right tool for the job. Going back to the policy definition shown earlier, there are several automated actions you can take when a policy is triggered:

- The event is always recorded in the events stream; you can also stop the container directly or pause it for further investigation.

- You have several notification channels to push the event information (email, webhook, Slack, PagerDuty, etc.).

- You can also create a full activity capture. Note that the capture can cover activity not only after, but also before the security event.

This is an important detail for forensics: you want to be able to reconstruct the sequence of events prior to the security trigger. Many attacks are preceded by reconnaissance and information discovery steps.
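Capturing activity from before the trigger implies keeping a short rolling window of recent events at all times. The Python sketch below models that idea with a time-bounded ring buffer; the window lengths and the event representation are assumptions for illustration, not how Sysdig captures are implemented.

```python
from collections import deque
from typing import Deque, List, Optional, Tuple

Event = Tuple[float, str]  # (timestamp in seconds, description)


class CaptureBuffer:
    """Keep the last `before` seconds of events; on trigger, also record `after` seconds more."""

    def __init__(self, before: float, after: float) -> None:
        self.before = before
        self.after = after
        self.window: Deque[Event] = deque()
        self.capture: Optional[List[Event]] = None
        self.capture_until = 0.0

    def record(self, ts: float, what: str) -> None:
        if self.capture is not None and ts <= self.capture_until:
            self.capture.append((ts, what))  # post-trigger activity
        self.window.append((ts, what))
        # Evict events that fell out of the pre-trigger window.
        while self.window and self.window[0][0] < ts - self.before:
            self.window.popleft()

    def trigger(self, ts: float) -> None:
        # Snapshot what happened before the alert, then keep capturing for `after` seconds.
        self.capture = [e for e in self.window if e[0] >= ts - self.before]
        self.capture_until = ts + self.after
```

The memory cost is bounded by the pre-trigger window, which is why that window is typically short compared with the post-trigger one.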

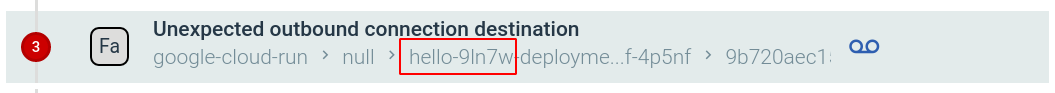

For this example, we are going to use the Unexpected outbound connection destination rule. Your Cloud Run service needs to contact several Google Cloud APIs, and you can whitelist those. If it tries to contact any other destination, that is suspicious (hijacked to mine bitcoins? Trying to connect to the regular pods in your cluster? Trying to contact the K8s API?). This time, we are going to configure a Sysdig capture associated with the policy event.
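The core of an outbound-destination whitelist can be modelled as a suffix match on the destination hostname. In this Python sketch, the whitelist (`googleapis.com` and its subdomains) and the example destinations are assumptions. A syscall-level tool observes connections by IP address, so a real rule also needs name resolution or IP ranges, which this sketch sidesteps.

```python
from typing import Iterable, List

# Assumption: the service should only talk to Google APIs.
ALLOWED_DOMAINS = ("googleapis.com",)


def is_allowed(host: str, allowed: Iterable[str] = ALLOWED_DOMAINS) -> bool:
    """Allow the domain itself or any subdomain; 'evilgoogleapis.com' must not match."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in allowed)


def unexpected(destinations: Iterable[str]) -> List[str]:
    """Destinations that would trigger the policy."""
    return [d for d in destinations if not is_allowed(d)]
```

Checking for a leading dot before the suffix is what keeps look-alike domains from slipping through a naive `endswith` test.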

Now, we are going to trigger the policy. To make things more realistic, after triggering the security policy, we are going to deploy a new service revision in our Google Cloud console:

The old service revision doesn’t have any incoming traffic, so it was automatically scaled down to 0.

But the alert was triggered for the old revision:

You can correlate the information present in Sysdig Secure and the Google Cloud Run console to discover:

- The specific service revision ID that triggered the alert, when it triggered, and which runtime rule was violated.

- Who launched that revision, when, and which configuration was being used at that time.
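The correlation itself is a join on the revision ID. Here is a minimal Python sketch, assuming hypothetical alert and revision records; in practice, the alert comes from Sysdig Secure and the revision details from the Google Cloud Run console.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Alert:
    revision: str
    rule: str
    timestamp: str


@dataclass(frozen=True)
class Revision:
    name: str
    created_by: str
    created_at: str
    image: str


def correlate(alerts: List[Alert], revisions: Dict[str, Revision]) -> List[str]:
    """Attach who/when/which-image details to each alert; flag unknown revisions."""
    report = []
    for a in alerts:
        rev: Optional[Revision] = revisions.get(a.revision)
        if rev is None:
            report.append(f"{a.timestamp} {a.rule} on {a.revision}: revision metadata not found")
        else:
            report.append(
                f"{a.timestamp} {a.rule} on {a.revision}: deployed by {rev.created_by} "
                f"at {rev.created_at} from {rev.image}"
            )
    return report
```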

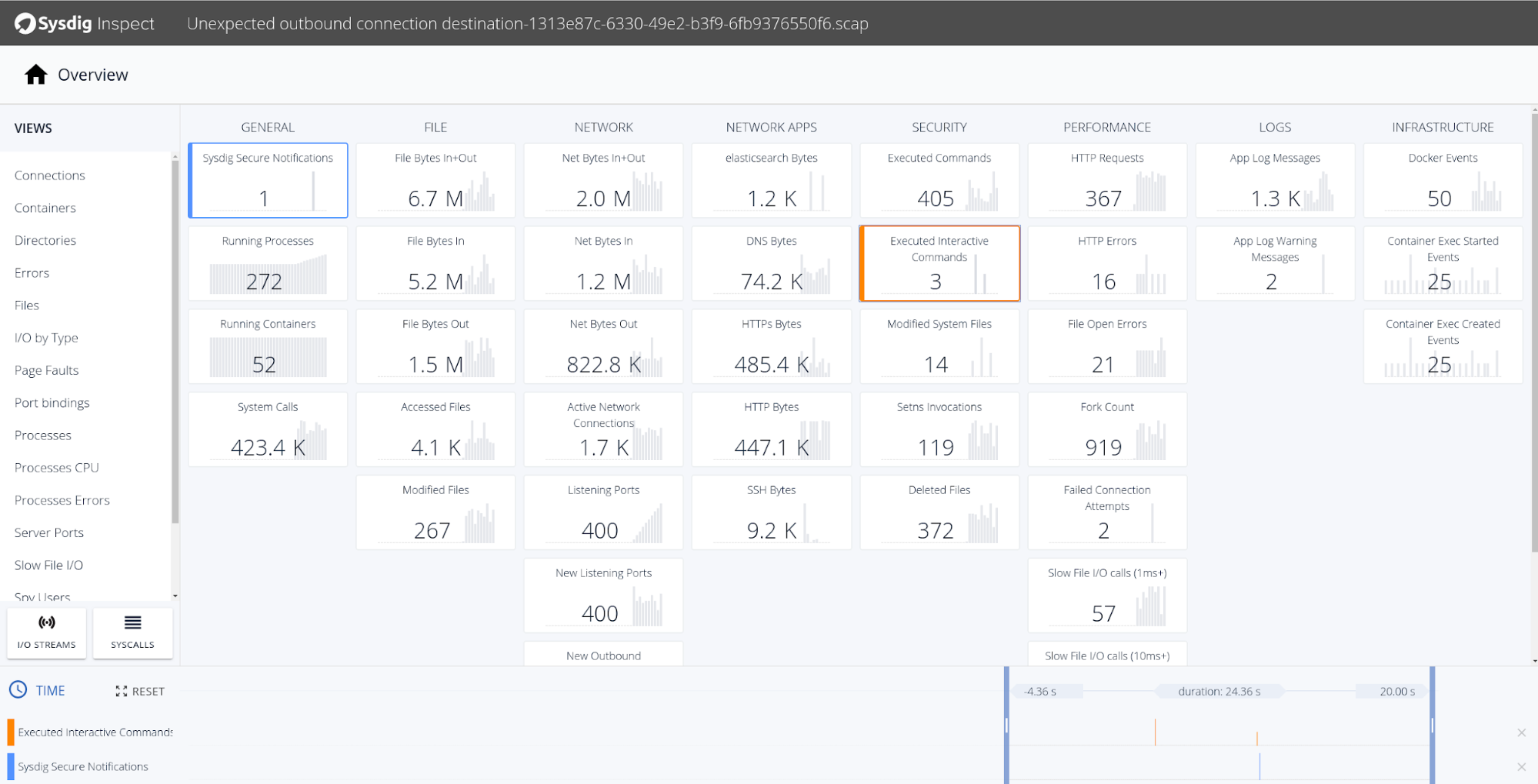

And that’s just the first step in your forensic investigation: attached to this policy event, you will find a capture with every system call executed during the security incident:

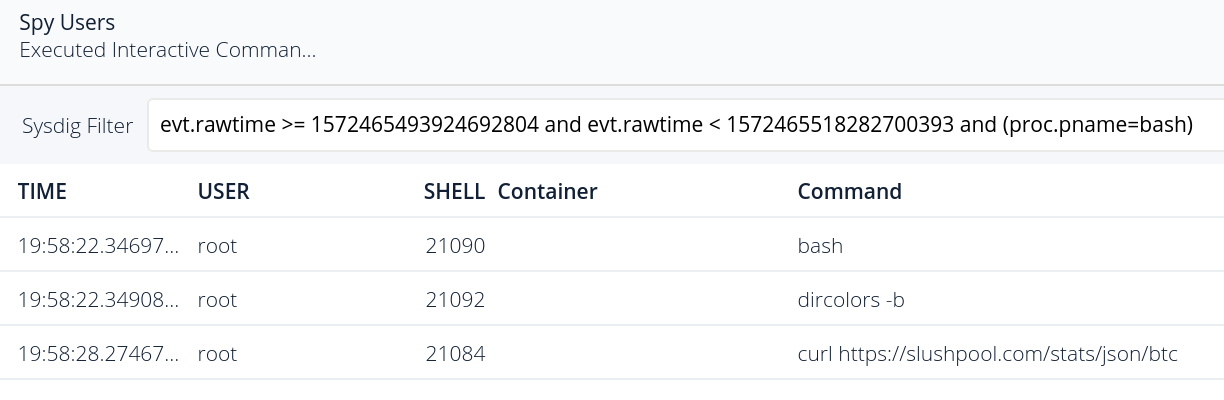

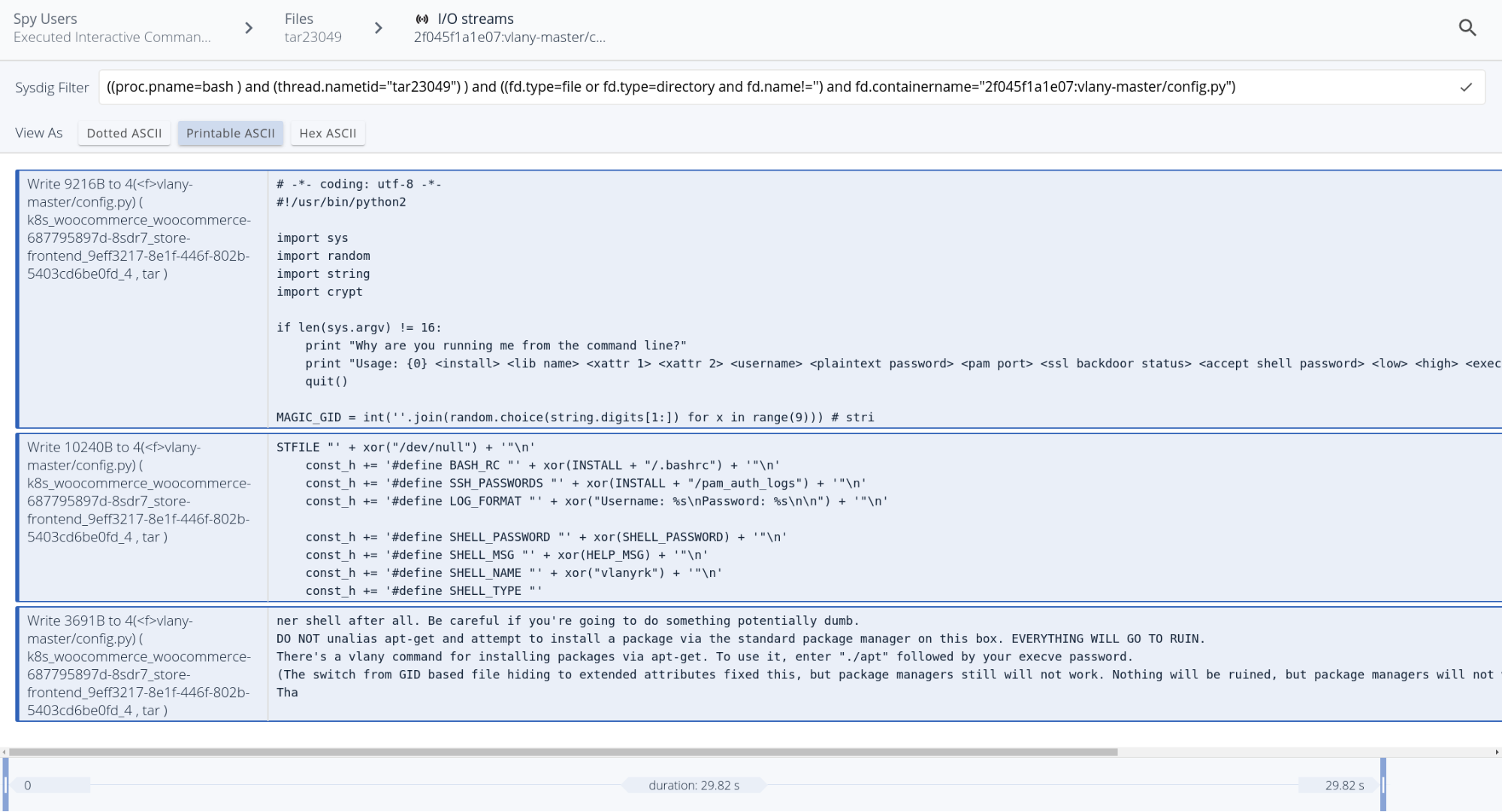

There seems to be interactive activity at the exact moment the alert was triggered, and also before it. Let’s take a closer look at the executed interactive commands:

This is extremely suspicious activity: the last command (curl) is the one that actually triggered the policy. From this interface, we can drill down to discover the fine-grained details of any action performed during the attack: connections opened, new processes spawned, listening ports, files modified, and even the content that was modified inside those files.

Conclusions

The Google Cloud Run platform sits in the sweet spot between serverless platforms and containers. Here at Sysdig, we are happy to expand our Google Cloud Anthos support and provide support for Cloud Run for Anthos, for both the Monitor and Secure pillars of the platform.

The Sysdig platform brings many advantages to the Google Cloud Run workflow:

- Transparent instrumentation that keeps your Google Cloud Run services light, simple, and adhering to serverless principles.

- Native support for private Google container registries, enabling image scanning, custom image checks, and vulnerability reporting directly from the interface.

- Cloud-native and fine-grained forensics, specifically tailored to deal with ephemeral and stateless workloads.