Measuring latency within my code is something that I do very very often. Occasionally I resort to tools like profilers to help me out but, honestly, most of the time I just put timers in my code and print the results to the console or a log file. The reasons are:

- Running a profiler requires quite a bit of setup, which often is not justified or I’m too lazy to do

- A profiler tends to give me a very noisy output, like the full execution stack, while I usually only want to focus on a few specific portion of code

I bet that this sounds pretty familiar to you. And I bet that, if you’re lazy like I am, you’re going to be interested in how to profile your software and systems with less effort. Read on!

We are going to learn how to use a functionality recently added to sysdig, tracers, to easily take measurements inside your code and then analyze them. To ground the story, I’ll use a real use case: Is the aggregate functionality introduced in MongoDB 2.2 faster than doing a traditional map-reduce call? If yes, how much better is it?

Setup First of all, I need to deploy Mongo. Docker is my friend here:

$> docker run --name mongotest -d mongo

Now I need some data for the queries. I can create it using the ruby

Faker library. For example, this scripts creates 30,000 simulated customer entries:

require 'faker' require 'mongo' include Mongo client = MongoClient.new(ENV['MONGODB'], 27017) db = client["test"] collection = db["customers"] 30000.times do collection.insert({ :first_name => Faker::Name.first_name, :last_name => Faker::Name.last_name, :city => Faker::Address.city, :country_code => Faker::Address.country_code, :orders_count => Random.rand(10)+1 }) end

Now let’s execute the script:

$> MONGODB=<server_address> ruby filldata.rb</server_address>

And voila, we have a MongoDB backend with a bunch of data to play with!

Instrumentation

Now I’m going to create two scripts that each get the sum of the orders grouped by country code. However, the scripts leverage different underlying MongoDB facilities. The first one uses `map_reduce:“`

require 'mongo' include Mongo $stdout.sync = true client = MongoClient.new(ENV['MONGODB'], 27017) db = client["test"] collection = db["customers"] loop do print ">:t:map-reduce::n" # Mark the beginning of the query collection.map_reduce("function() { emit(this.country_code, this.orders_count) }", "function(key,values) { return Array.sum(values) }", { :out => { :inline => true }, :raw => true}); print "<:t:map-reduce::n" # Mark the end of the query end

While the second one uses aggregate:

require 'mongo' include Mongo $stdout.sync = true client = MongoClient.new(ENV['MONGODB'], 27017) db = client["test"] collection = db["customers"] loop do print ">:t:aggregate::n" # Mark the beginning of the query collection.aggregate( [ { "$match" => {}}, { "$group" => { "_id" => "$country_code", "value" => { "$sum" => "$orders_count" } } } ]) print "<:t:aggregate::n" # Mark the end of the query end

Notice anything particular in these scripts? Yes, those prints in the code are sysdig tracers:

print "<:t:aggregate::n" # Mark the end of the query

In this case, tracers do the following:

- They mark the beginning and the end of a span. > is the beginning, and < is the end

- They give it a unique ID.t in this case means “use the thread ID as the span ID”, which is an easy way to get a unique number across executions

- They also give it a name “map-reduce” is the name in the first script, “aggregate” in the second one.

You can read the tracers manual for the details but, as you can see, it’s pretty straightforward.

When you write these strings to /dev/null and sysdig is running, sysdig captures them, decodes them and does the measurement magic for you.

So let’s run the scripts and redirect their output to /dev/null:

$> MONGODB=<server_address> ruby query_agg.rb > /dev/null & $> MONGODB=</server_address><server_address> ruby query_mr.rb > /dev/null & </server_address>

Timing Analysis

Sysdig lets me run my analysis live, as the scripts are running. This time however, I’m going to take a capture file:

$> sudo sysdig -w mongo.scap

Download the capture file and you will be able to practice what I describe in this blog post on your machine.

Let’s open the capture file with Csysdig:

$> csysdig -r mongo.scap

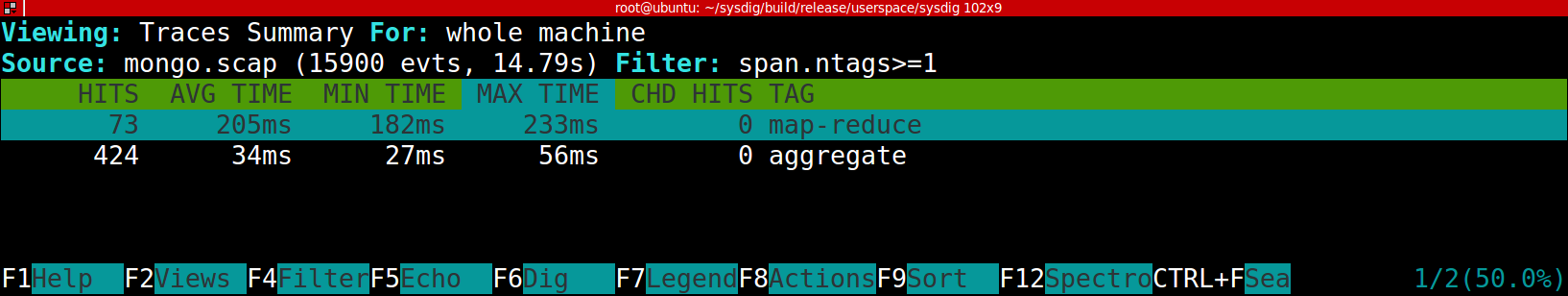

Let’s take a high level look at the latencies, by selecting the Traces Summary view (F2 → Traces Summary):

This gives us the number of hits and timing information for the two types of queries that we instrumented. It’s evident that aggregate is much faster, more than 6 times on average.

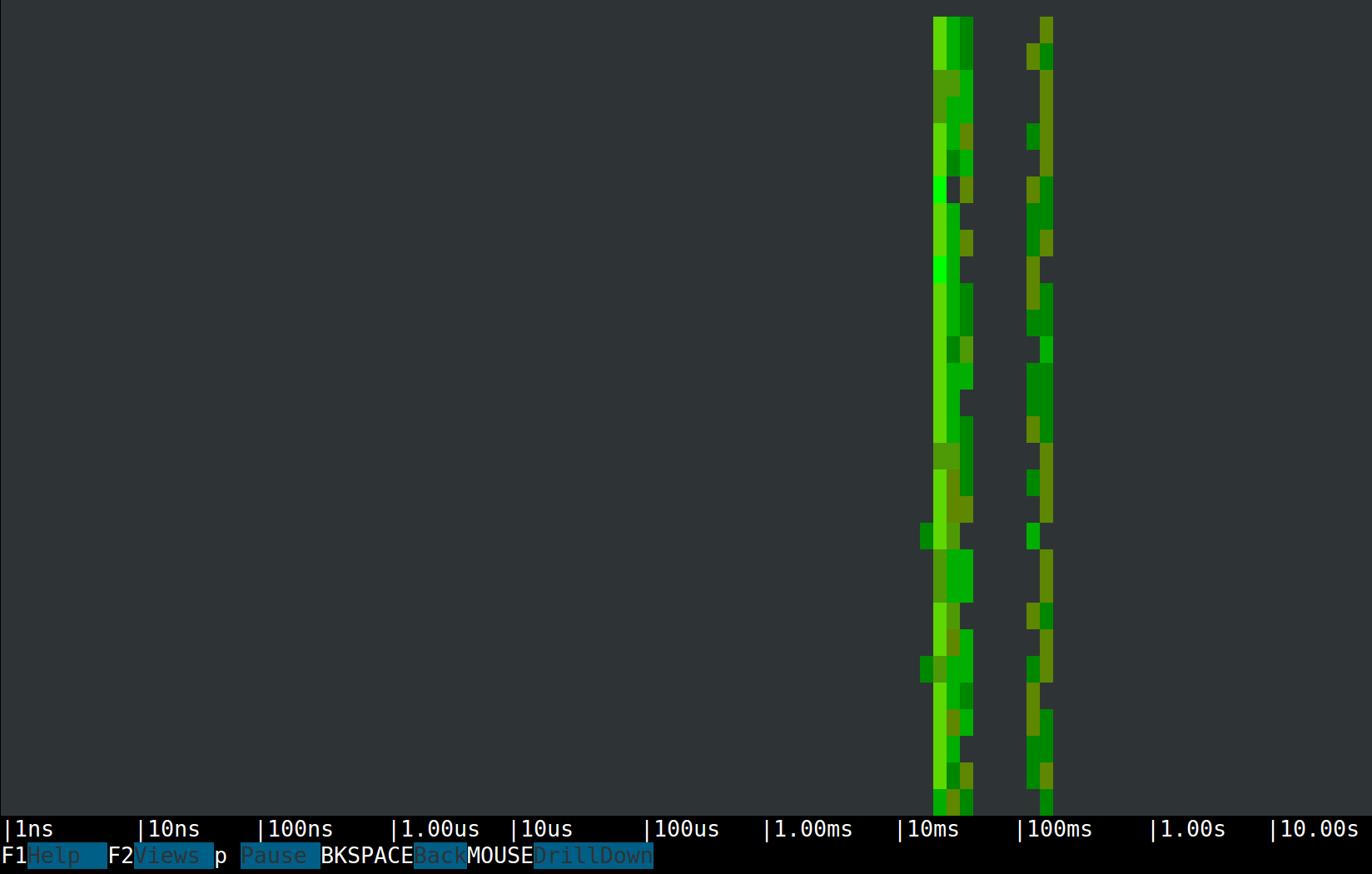

Now let’s switch to a spectrogram view (F2 → Traces Spectrogram):

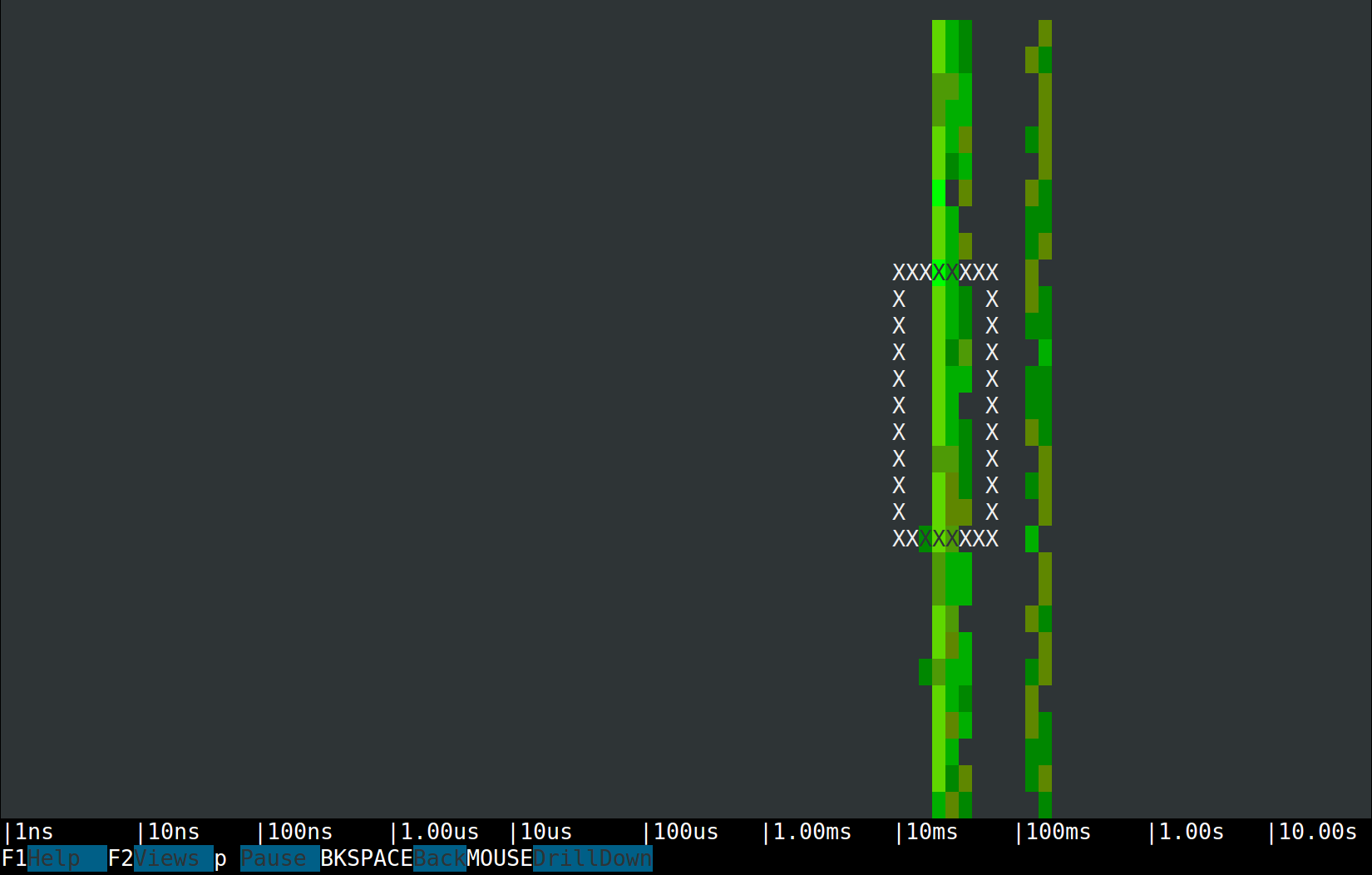

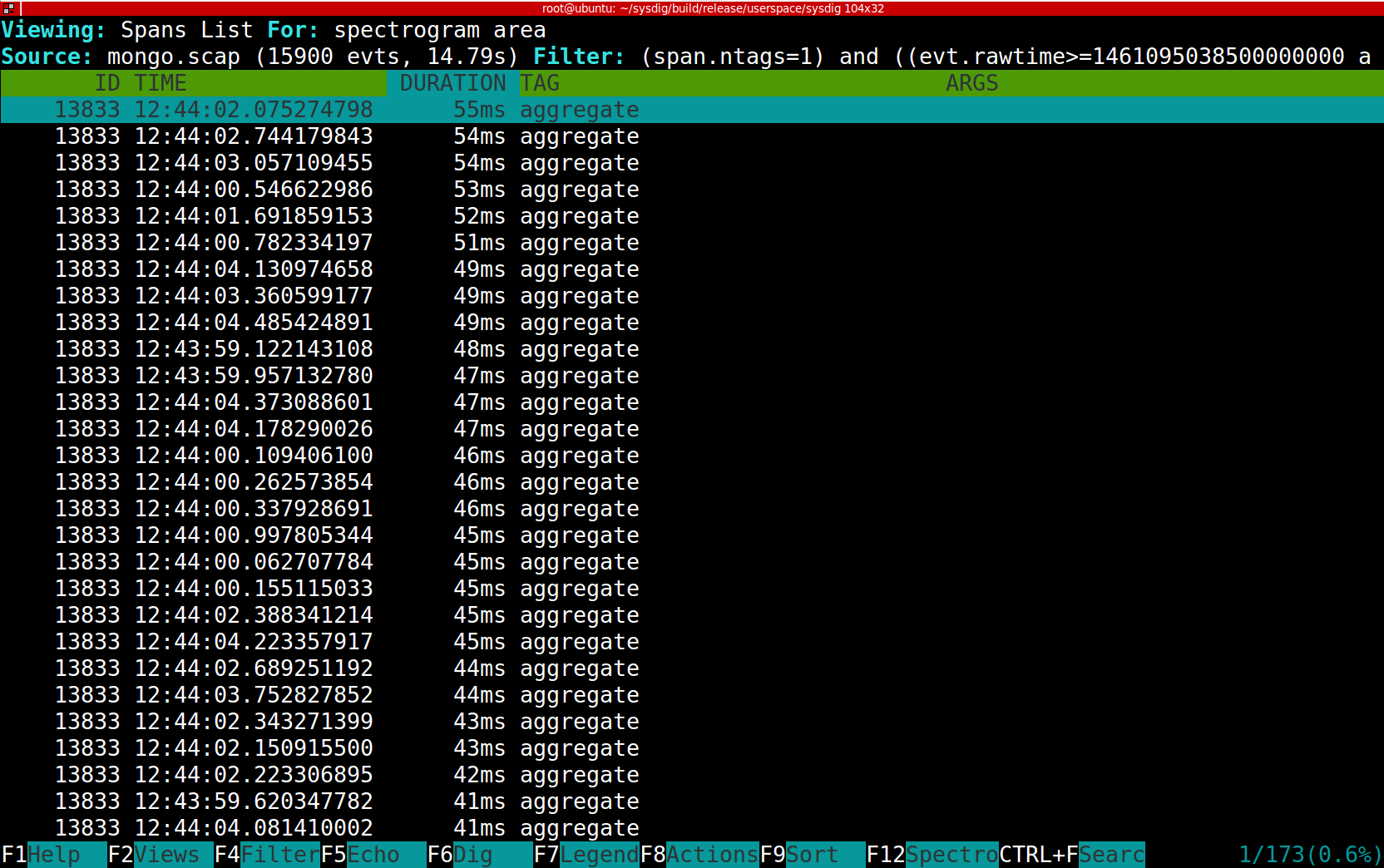

Spectrograms allow us to visualize the latencies of almost anything. In sysdig they are often used for measuring system calls, and now we can use them for trace spans as well.What we see is two clear latency bands, one in the tens of milliseconds and one in the hundreds of milliseconds. This is consistent with the output of the Traces Summary view, so my thesis is that the left band is made by aggregate requests, while the right band is all map-reduce requests. Proving the thesis is just a mouse click away. The Csysdig spectrogram allows me to make a selection and drill down to see inside it. So let’s drill down into the left band:

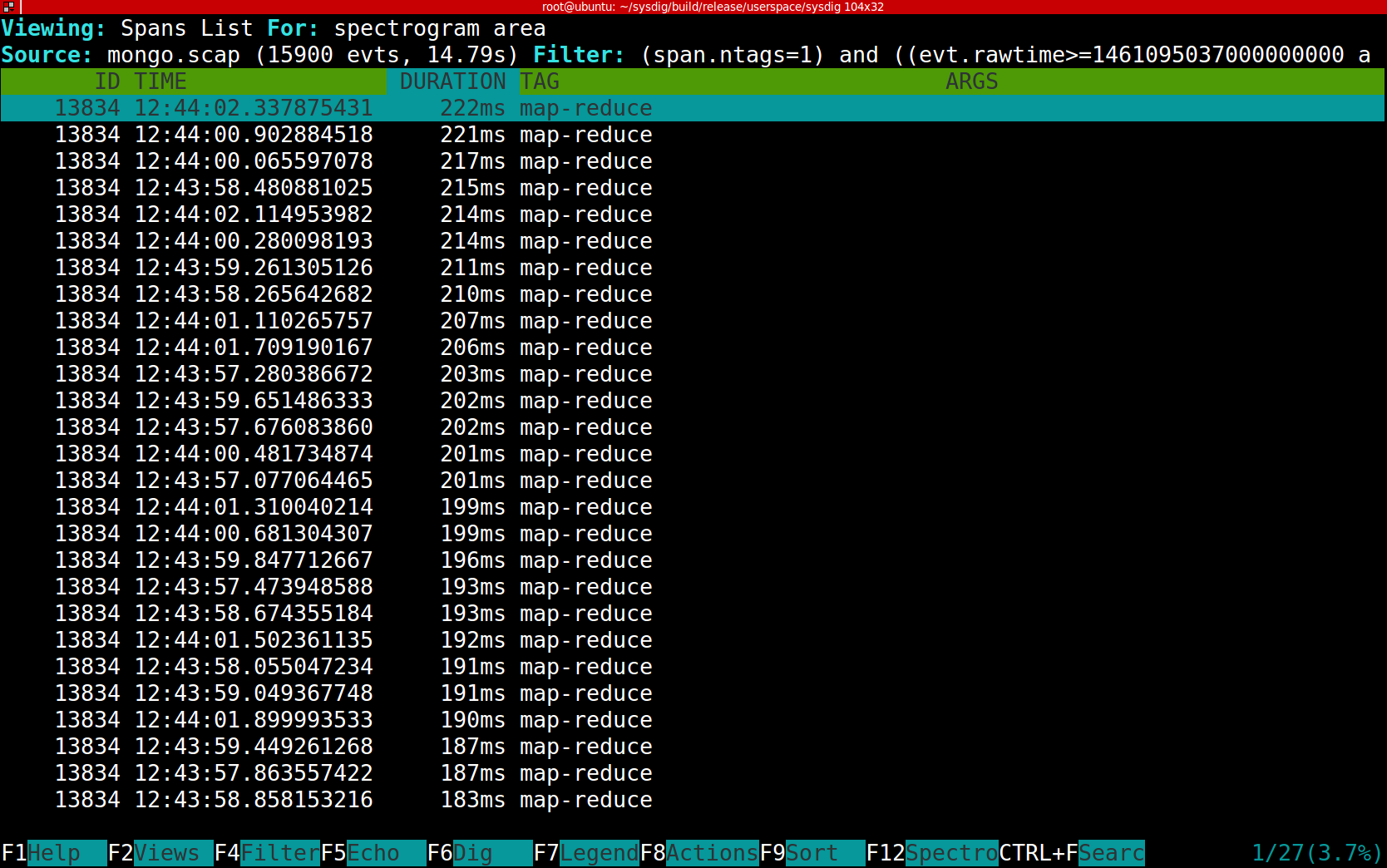

The result is shown in this picture and it’s exactly what I expected:

The right band contains only map-reduce requests, and each of them takes much longer:

Span-Based Resource Monitoring

Once our app is instrumented with sysdig tracers, we can leverage full sysdig functionality in a span-aware way. For example, let’s compare the network bandwidth utilization of the two query options. There are several ways to do it with sysdig, but this time I’m going to use the echo_fd chisel and just filter I/O from the map-reduce spans:

$> sysdig -r mongo.scap -c echo_fds evtin.span.tags=map-reduce ------ Write 234B to 172.17.42.1:33431->172.17.0.28:27017 (ruby) ....m...............test.$cmd..............mapreduce.....customers..map.G...:... ------ Read 16B from 172.17.42.1:33431->172.17.0.28:27017 (ruby) .$......m....... ------ Read 20B from 172.17.42.1:33431->172.17.0.28:27017 (ruby) .................... ------ Read 4B from 172.17.42.1:33431->172.17.0.28:27017 (ruby) .#.. ------ Read 8.99KB from 172.17.42.1:33431->172.17.0.28:27017 (ruby) .results..#...0. [email protected]. ...._id.....AE..value.......

The snippet above isolates a single query. We can see that there’s a 191 byte request buffer and then another 4 buffers sent back from Mongo, for a total of 9480 bytes.

Now let’s try with aggregate:

$> sysdig -r mongo.scap -c echo_fds evtin.span.tags=aggregate ------ Write 184B to 172.17.42.1:33430->172.17.0.28:27017 (ruby) ....+...............test.$cmd..............aggregate.....customers..pipeline.q.. ------ Read 16B from 172.17.42.1:33430->172.17.0.28:27017 (ruby) .&......+....... ------ Read 20B from 172.17.42.1:33430->172.17.0.28:27017 (ruby) .................... ------ Read 4B from 172.17.42.1:33430->172.17.0.28:27017 (ruby) .&.. ------ Read 7.96KB from 172.17.42.1:33430->172.17.0.28:27017 (ruby) .waitedMS..........result..&...0.#...._id.....ET..orders_count.......1.#...._id.

Quite a bit smaller! A total of 8375 bytes, or around 12% better.

Conclusion

The first, obvious conclusion is: laziness pays off! Tracers are an easier method of profiling just about anything: methods in your software, file access, network transactions…anything. The second conclusion is: use aggregate and not map-reduce in your mongo queries. It will be much faster and save you network bandwidth.

I hope that the measuring techniques explained in this blog post can be a useful addition to your toolbelt. We designed them to be as frictionless as possible but at the same time support common development use cases, especially in containerized environments.

The whole stack described in the post is open source and very easy to install. Give it a try and let us you know what you think.