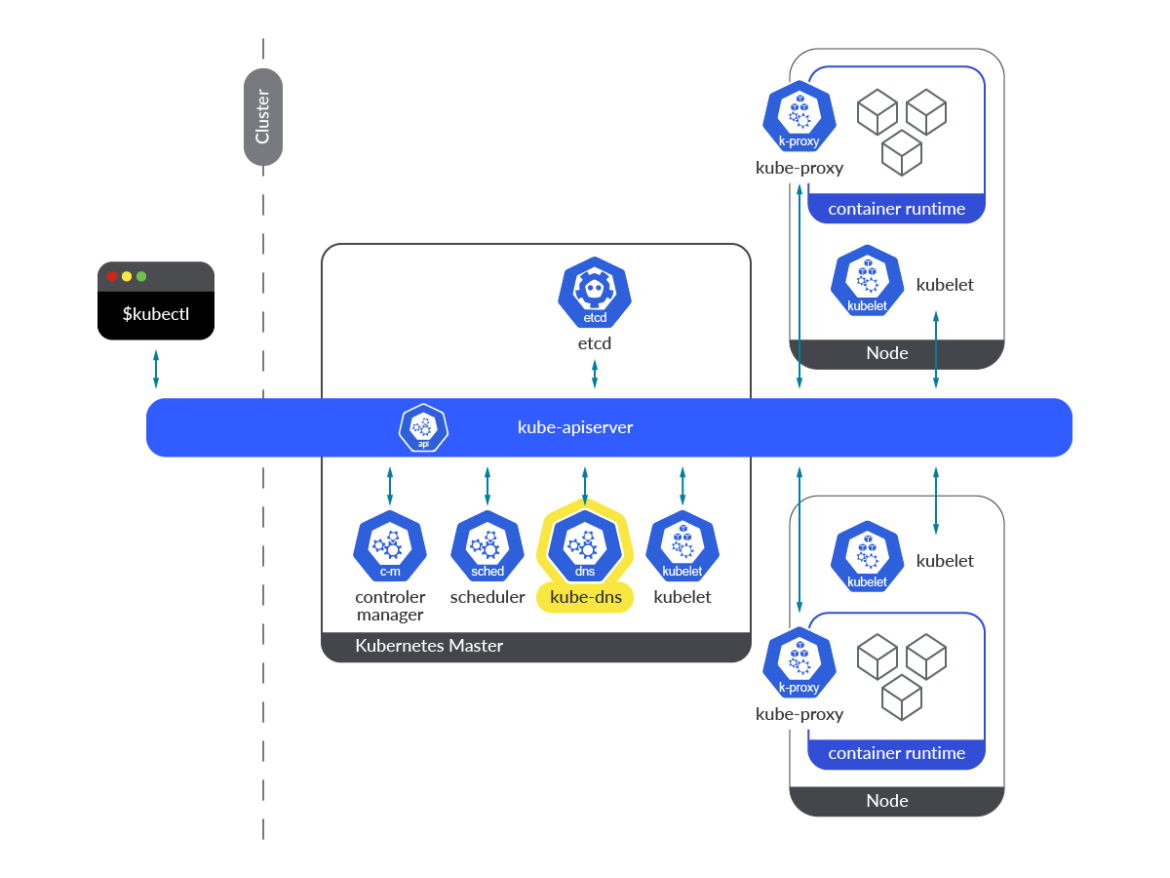

CoreDNS is a DNS add-on for Kubernetes environments. It is one of the components running in the control plane nodes, and having it fully operational and responsive is key for the proper functioning of Kubernetes clusters. Learning how to monitor CoreDNS, and what its most important metrics are, is a must for operations teams.

At some point in your career, you may have heard: Why is it always DNS?

Well, it is simple. DNS is one of the most sensitive and important services in every architecture. Applications, microservices, services, hosts… Nowadays, everything is interconnected, and not only internal services: external services are part of the picture, too. DNS is responsible for resolving domain names into the IP addresses of internal or external services and Pods. Maintaining the Pods’ DNS records is a critical task, especially when it comes to ephemeral Pods, where IP addresses can change at any moment without warning.

But what happens when DNS is unresponsive or down? You are in serious trouble.

If you are running your workloads in Kubernetes, and you don’t know how to monitor CoreDNS, keep reading and discover how to use Prometheus to scrape CoreDNS metrics, which of these you should check, and what they mean.

In this article, we will cover the following topics:

- What is the Kubernetes CoreDNS?

- How to monitor CoreDNS in Kubernetes?

- Monitoring the Kubernetes CoreDNS: Which metrics should you check?

- Conclusion

What is the Kubernetes CoreDNS?

In Kubernetes 1.11, CoreDNS reached General Availability (GA) for DNS-based service discovery and was introduced as an alternative to the kube-dns add-on, which had been the de facto DNS engine for Kubernetes clusters until then. CoreDNS is a DNS server written in Go, widely adopted because of its flexibility.

The kube-dns add-on provides the whole DNS functionality in the form of three different containers within a single Pod: kubedns, dnsmasq, and sidecar. Let’s take a look at these three containers:

- kubedns: It’s a SkyDNS implementation for Kubernetes. It is responsible for DNS resolution within the Kubernetes cluster. It watches the Kubernetes API and serves the appropriate DNS records.

- dnsmasq: It provides a DNS caching mechanism for SkyDNS resolution requests.

- sidecar: This container exports metrics and performs health checks on the DNS service.

Let’s now talk about CoreDNS!

CoreDNS was created to solve some of the problems that kube-dns had at the time. Dnsmasq introduced security vulnerabilities that required Kubernetes security patches in the past. In addition, CoreDNS provides all its functionality in a single container instead of the three needed in kube-dns, and it resolves some other kube-dns issues with stub domains for external services.

CoreDNS exposes its metrics endpoint on port 9153, and it is accessible either from a Pod in the SDN network or from the host node network.

# kubectl get ep kube-dns -n kube-system -o json | jq -r ".subsets"
[
  {
    "addresses": [
      {
        "ip": "192.169.107.100",
        "nodeName": "k8s-control-2.lab.example.com",
        "targetRef": {
          "kind": "Pod",
          "name": "coredns-565d847f94-rz4b6",
          "namespace": "kube-system",
          "uid": "c1b62754-4740-49ca-b506-3f40fb681778"
        }
      },
      {
        "ip": "192.169.203.46",
        "nodeName": "k8s-control-3.lab.example.com",
        "targetRef": {
          "kind": "Pod",
          "name": "coredns-565d847f94-8xqxg",
          "namespace": "kube-system",
          "uid": "bec3ca63-f09a-4007-82e9-0e147e8587de"
        }
      }
    ],
    "ports": [
      {
        "name": "dns-tcp",
        "port": 53,
        "protocol": "TCP"
      },
      {
        "name": "dns",
        "port": 53,
        "protocol": "UDP"
      },
      {
        "name": "metrics",
        "port": 9153,
        "protocol": "TCP"
      }
    ]
  }
]
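If you want to script this step, the endpoint listing can be turned into one metrics URL per CoreDNS Pod. The following is only a minimal sketch: it works on a trimmed copy of the JSON shown above, and the function name `metrics_urls` is made up for this example. It assumes the metrics port is the one named "metrics", as in the output above.

```python
import json

# Trimmed copy of the `.subsets` output shown above (only the fields used here).
SUBSETS_JSON = """
[
  {
    "addresses": [
      {"ip": "192.169.107.100", "targetRef": {"name": "coredns-565d847f94-rz4b6"}},
      {"ip": "192.169.203.46", "targetRef": {"name": "coredns-565d847f94-8xqxg"}}
    ],
    "ports": [
      {"name": "dns-tcp", "port": 53, "protocol": "TCP"},
      {"name": "dns", "port": 53, "protocol": "UDP"},
      {"name": "metrics", "port": 9153, "protocol": "TCP"}
    ]
  }
]
"""


def metrics_urls(subsets):
    """Return {pod_name: metrics_url} for every address in the endpoint subsets."""
    urls = {}
    for subset in subsets:
        # Pick the port named "metrics"; skip subsets that do not expose one.
        port = next(
            (p["port"] for p in subset.get("ports", []) if p.get("name") == "metrics"),
            None,
        )
        if port is None:
            continue
        for address in subset.get("addresses", []):
            pod_name = address["targetRef"]["name"]
            urls[pod_name] = f"http://{address['ip']}:{port}/metrics"
    return urls


urls = metrics_urls(json.loads(SUBSETS_JSON))
for pod, url in urls.items():
    print(pod, url)
```

In a real cluster you would feed the function the output of the kubectl command instead of the hard-coded string.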

You now know what CoreDNS is and which problems it solves. It’s time to dig deeper into how to get CoreDNS metrics, and how to configure a Prometheus instance to start scraping them. Let’s get started!

How to monitor CoreDNS in Kubernetes?

As you have just seen in the previous section, CoreDNS is already instrumented and exposes its own /metrics endpoint on port 9153 in every CoreDNS Pod. Accessing this /metrics endpoint is easy: just run curl and start pulling the CoreDNS metrics right away!

Getting access to the endpoint manually

Once you know the endpoints or IPs where CoreDNS is running, try to access port 9153.

# curl http://192.169.203.46:9153/metrics
# HELP coredns_build_info A metric with a constant '1' value labeled by version, revision, and goversion from which CoreDNS was built.
# TYPE coredns_build_info gauge
coredns_build_info{goversion="go1.18.2",revision="45b0a11",version="1.9.3"} 1
# HELP coredns_cache_entries The number of elements in the cache.
# TYPE coredns_cache_entries gauge
coredns_cache_entries{server="dns://:53",type="denial",zones="."} 46
coredns_cache_entries{server="dns://:53",type="success",zones="."} 9
# HELP coredns_cache_hits_total The count of cache hits.
# TYPE coredns_cache_hits_total counter
coredns_cache_hits_total{server="dns://:53",type="denial",zones="."} 6471
coredns_cache_hits_total{server="dns://:53",type="success",zones="."} 6596
# HELP coredns_cache_misses_total The count of cache misses. Deprecated, derive misses from cache hits/requests counters.
# TYPE coredns_cache_misses_total counter
coredns_cache_misses_total{server="dns://:53",zones="."} 1951
# HELP coredns_cache_requests_total The count of cache requests.
# TYPE coredns_cache_requests_total counter
coredns_cache_requests_total{server="dns://:53",zones="."} 15018
# HELP coredns_dns_request_duration_seconds Histogram of the time (in seconds) each request took per zone.
# TYPE coredns_dns_request_duration_seconds histogram
coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.00025"} 14098
coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.0005"} 14836
coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.001"} 14850
coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.002"} 14856
coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.004"} 14857
coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.008"} 14870
coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.016"} 14879
coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.032"} 14883
coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.064"} 14884
coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.128"} 14884
coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.256"} 14885
coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.512"} 14886
coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="1.024"} 14887
coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="2.048"} 14903
coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="4.096"} 14911
coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="8.192"} 15018
coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="+Inf"} 15018
coredns_dns_request_duration_seconds_sum{server="dns://:53",zone="."} 698.531992215999
coredns_dns_request_duration_seconds_count{server="dns://:53",zone="."} 15018
…
(output truncated)
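The output above follows the Prometheus text exposition format: comment lines start with #, and every other line is a metric name, an optional set of labels, and a value. To get a feel for the format, here is a rough sketch of a parser that handles just the subset seen in this output (no timestamps, no escaped quotes in label values). The names `parse_metrics`, `LINE_RE`, and `LABEL_RE` are made up for this example, and the sample is a few lines copied from the output above.

```python
import re

# A few lines copied from the curl output above.
SAMPLE = '''\
# HELP coredns_cache_hits_total The count of cache hits.
# TYPE coredns_cache_hits_total counter
coredns_cache_hits_total{server="dns://:53",type="denial",zones="."} 6471
coredns_cache_hits_total{server="dns://:53",type="success",zones="."} 6596
coredns_dns_request_duration_seconds_sum{server="dns://:53",zone="."} 698.531992215999
coredns_dns_request_duration_seconds_count{server="dns://:53",zone="."} 15018
'''

# Metric name, optional {labels}, then the value.
LINE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def parse_metrics(text):
    """Return a list of (name, labels, value) tuples, skipping comment lines."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = LINE_RE.match(line)
        if match is None:
            continue  # Not a sample line this simplified parser understands.
        name, raw_labels, value = match.groups()
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        samples.append((name, labels, float(value)))
    return samples


samples = parse_metrics(SAMPLE)
by_name = {name: value for name, labels, value in samples if "duration" in name}

# Mean request duration since the Pod started: histogram sum divided by count.
mean_latency = (
    by_name["coredns_dns_request_duration_seconds_sum"]
    / by_name["coredns_dns_request_duration_seconds_count"]
)
print(f"mean request duration: {mean_latency * 1000:.1f} ms")
```

Keep in mind that these are lifetime counters, so the mean covers everything since the CoreDNS Pod started. For trends over time, you will want Prometheus to scrape the endpoint regularly, as described in the next section.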

You can also access the /metrics endpoint through the CoreDNS Kubernetes service exposed by default in your Kubernetes cluster.

# kubectl get svc -n kube-system
NAME       TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)                  AGE
kube-dns   ClusterIP   10.96.0.10   <none>        53/UDP,53/TCP,9153/TCP   129d

# kubectl exec -it my-pod -n default -- /bin/bash
# curl http://kube-dns.kube-system.svc:9153/metrics

How to configure Prometheus to scrape CoreDNS metrics

Prometheus provides a set of roles to start discovering targets and scrape metrics from multiple sources like Pods, Kubernetes nodes, and Kubernetes services, among others. When it comes to scraping metrics from the CoreDNS service embedded in your Kubernetes cluster, you only need to configure your prometheus.yml file with the proper configuration. This time, the endpoints role is the one you should use to discover this target.

Edit the ConfigMap that includes the prometheus.yml config file.

# kubectl edit cm prometheus-server -n monitoring -o yaml

Then, add this configuration snippet under the scrape_configs section.

- honor_labels: true
  job_name: kubernetes-service-endpoints
  kubernetes_sd_configs:
  - role: endpoints
  relabel_configs:
  - action: keep
    regex: true
    source_labels:
    - __meta_kubernetes_service_annotation_prometheus_io_scrape
  - action: drop
    regex: true
    source_labels:
    - __meta_kubernetes_service_annotation_prometheus_io_scrape_slow
  - action: replace
    regex: (https?)
    source_labels:
    - __meta_kubernetes_service_annotation_prometheus_io_scheme
    target_label: __scheme__
  - action: replace
    regex: (.+)
    source_labels:
    - __meta_kubernetes_service_annotation_prometheus_io_path
    target_label: __metrics_path__
  - action: replace
    regex: (.+?)(?::\d+)?;(\d+)
    replacement: $1:$2
    source_labels:
    - __address__
    - __meta_kubernetes_service_annotation_prometheus_io_port
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_service_annotation_prometheus_io_param_(.+)
    replacement: __param_$1
  - action: labelmap
    regex: __meta_kubernetes_service_label_(.+)
  - action: replace
    source_labels:
    - __meta_kubernetes_namespace
    target_label: namespace
  - action: replace
    source_labels:
    - __meta_kubernetes_service_name
    target_label: service
  - action: replace
    source_labels:
    - __meta_kubernetes_pod_node_name
    target_label: node

At this point, after redeploying the Prometheus Pod, you should be able to see the CoreDNS metrics endpoints in the Prometheus console (go to Status -> Targets). From now on, the CoreDNS metrics are available and can be queried from the Prometheus console.
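One relabel rule in the scrape configuration deserves a closer look: the one that rewrites __address__. Prometheus joins the values of the source labels with a semicolon, matches the regex against the whole joined string, and writes the replacement into the target label. The sketch below mimics that behavior in Python to show how the discovered DNS address ends up pointing at the metrics port. It assumes the kube-dns Service carries a prometheus.io/port annotation with the value 9153, and `rewrite_address` is a name made up for this example.

```python
import re

# Regex copied from the relabel rule that rewrites __address__.
ADDRESS_RE = re.compile(r"(.+?)(?::\d+)?;(\d+)")


def rewrite_address(address, annotated_port):
    """Mimic the `replace` relabel action applied to __address__."""
    # Source label values are joined with ";" before matching, and the
    # regex has to match the whole joined string.
    joined = f"{address};{annotated_port}"
    match = ADDRESS_RE.fullmatch(joined)
    if match is None:
        # No match (for example, no port annotation): the label stays unchanged.
        return address
    # Python spells the "$1:$2" replacement as "\1:\2".
    return match.expand(r"\1:\2")


# The endpoint was discovered on the DNS port, but metrics live on 9153.
print(rewrite_address("192.169.203.46:53", "9153"))
```

The lazy first group keeps only the host part, the optional non-capturing group swallows the original port, and the second group picks up the port taken from the annotation.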

Top CoreDNS metrics: Which ones should you check?

Disclaimer: CoreDNS metrics might differ between Kubernetes versions and platforms. Here, we used Kubernetes 1.25 and CoreDNS 1.9.3. You can check the metrics available for your version in the CoreDNS repo.

First of all, let’s talk about the availability. The number of CoreDNS replicas running in your cluster may vary, so it is always a good idea to monitor just in case there is any variation that might affect availability and performance.

- Number of CoreDNS replicas: If you want to monitor the number of CoreDNS replicas running on your Kubernetes environment, you can do that by counting the coredns_build_info metric. This metric provides information about the CoreDNS build running on such Pods.

count(coredns_build_info)

From now on, let’s follow the Four Golden Signals approach. In this section, you’ll learn how to monitor CoreDNS from that perspective, measuring Errors, Latency, Traffic, and Saturation.

Errors

Being able to measure the number of errors in your CoreDNS service is key to getting a better understanding of the health of your Kubernetes cluster, your applications, and services. If any application or internal Kubernetes component gets unexpected error responses from the DNS service, you can run into serious trouble. Watch out for SERVFAIL and REFUSED errors. These could mean problems when resolving names for your Kubernetes internal components and applications.

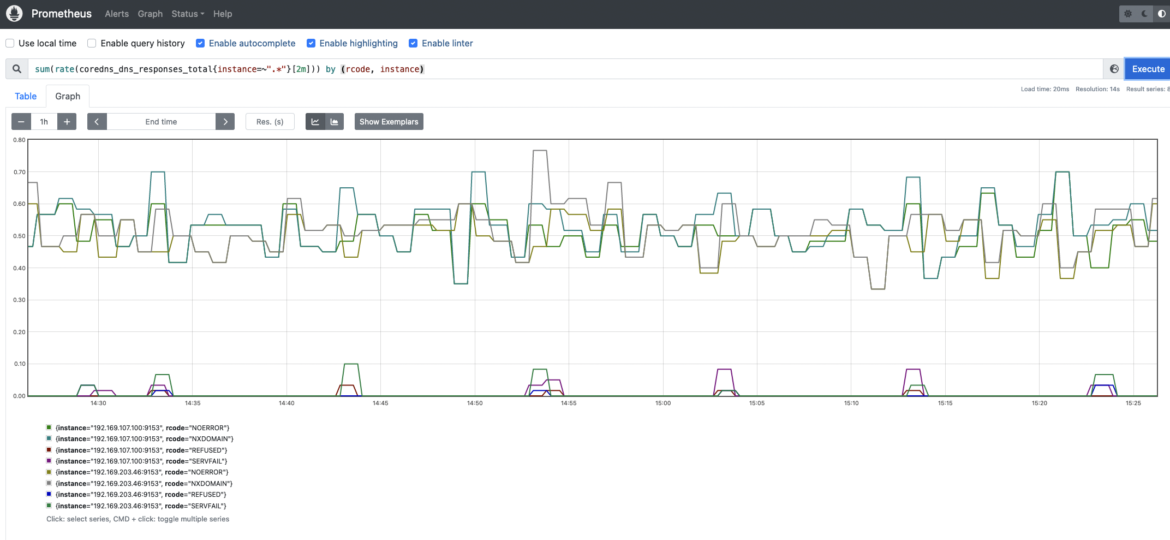

coredns_dns_responses_total: This counter tracks the number of responses sent by CoreDNS, broken down by response code (the rcode label). You may want to get the rate for each response code and CoreDNS instance. It is a useful way to measure the error rate in your CoreDNS instances.

sum(rate(coredns_dns_responses_total{instance=~".*"}[2m])) by (rcode, instance)
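Once you have a per-rcode rate, a natural next step is to express the errors as a share of all responses. The snippet below is a small sketch of that arithmetic only. The rates are hypothetical values, not taken from a real cluster, and `error_ratio` and `ERROR_RCODES` are names made up for this example.

```python
# Response codes treated as errors in this sketch, following the text above.
ERROR_RCODES = {"SERVFAIL", "REFUSED"}


def error_ratio(rates_by_rcode):
    """Return the share of responses whose rcode is SERVFAIL or REFUSED."""
    total = sum(rates_by_rcode.values())
    if total == 0:
        return 0.0  # No traffic at all, so there is nothing to report.
    errors = sum(
        rate for rcode, rate in rates_by_rcode.items() if rcode in ERROR_RCODES
    )
    return errors / total


# Hypothetical responses per second, as a per-rcode rate query might return them.
rates = {"NOERROR": 90.0, "NXDOMAIN": 8.0, "SERVFAIL": 1.5, "REFUSED": 0.5}
ratio = error_ratio(rates)
print(f"error ratio: {ratio:.1%}")
```

NXDOMAIN is deliberately left out of the error set here, since a name that does not exist is usually a valid answer rather than a DNS failure. Whether that fits your environment is a judgment call.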

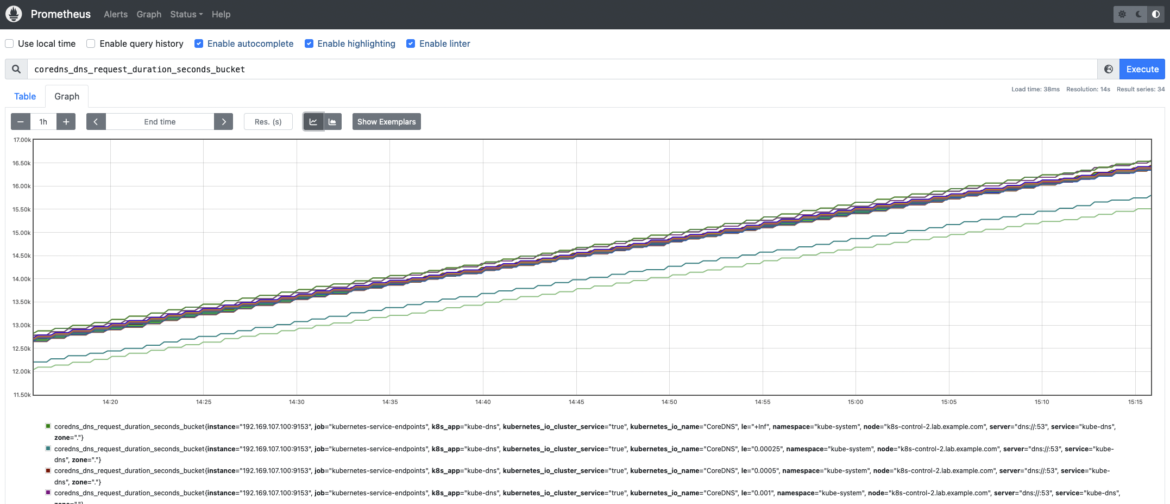

Latency

Measuring latency is key to ensure the DNS service performance is optimal for a proper operation in Kubernetes. If latency is high or is increasing over time, it may indicate a load issue. If CoreDNS instances are overloaded, you may experience issues with DNS name resolution and expect delays, or even outages, in your applications and Kubernetes internal services.

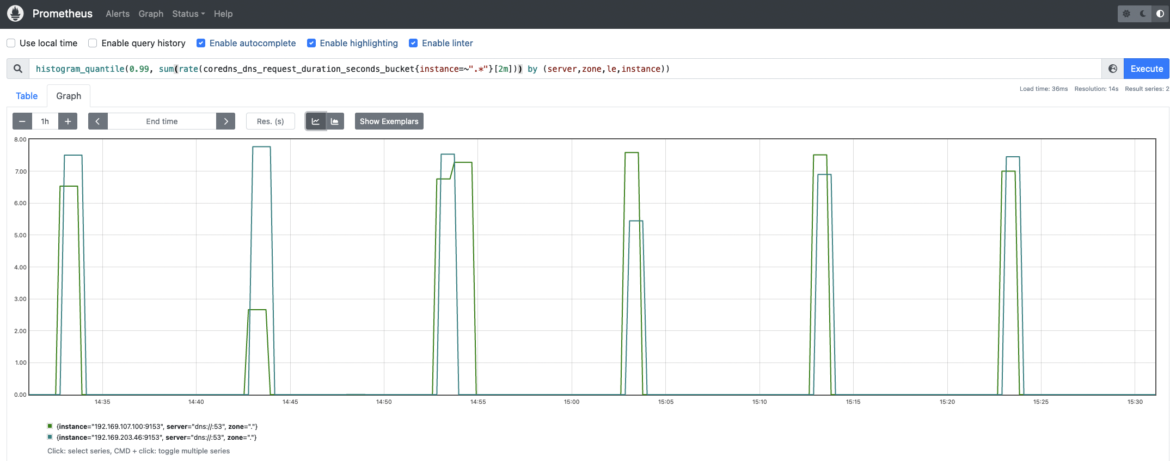

coredns_dns_request_duration_seconds_bucket: CoreDNS requests duration in seconds. You may want to calculate the 99th percentile to see how latencies are distributed among CoreDNS instances.

histogram_quantile(0.99, sum(rate(coredns_dns_request_duration_seconds_bucket{instance=~".*"}[2m])) by (server,zone,le,instance))
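To build some intuition for what histogram_quantile does, here is a simplified Python sketch of the estimation: find the bucket where the target rank falls, then interpolate linearly inside it. It is applied to the lifetime bucket counts from the curl output earlier in this article, whereas the PromQL query above works on per-second rates over a 2-minute window, so the numbers are not comparable. The function is a re-implementation for illustration under those assumptions, not Prometheus code.

```python
import math

# Cumulative (upper bound, count) buckets copied from the curl output earlier.
BUCKETS = [
    (0.00025, 14098), (0.0005, 14836), (0.001, 14850), (0.002, 14856),
    (0.004, 14857), (0.008, 14870), (0.016, 14879), (0.032, 14883),
    (0.064, 14884), (0.128, 14884), (0.256, 14885), (0.512, 14886),
    (1.024, 14887), (2.048, 14903), (4.096, 14911), (8.192, 15018),
    (math.inf, 15018),
]


def histogram_quantile(q, buckets):
    """Estimate the q-quantile from cumulative (upper_bound, count) buckets."""
    buckets = sorted(buckets)
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    prev_bound, prev_count = 0.0, 0
    for i, (bound, count) in enumerate(buckets):
        if count >= rank:
            if math.isinf(bound):
                # Rank falls in the +Inf bucket: report the highest finite bound.
                return buckets[i - 1][0] if i > 0 else math.nan
            in_bucket = count - prev_count
            if in_bucket == 0:
                return bound
            # Linear interpolation between the bucket's lower and upper bound.
            return prev_bound + (bound - prev_bound) * (rank - prev_count) / in_bucket
        prev_bound, prev_count = bound, count
    return math.nan


p99 = histogram_quantile(0.99, BUCKETS)
print(f"p99 since Pod start: {p99 * 1000:.2f} ms")
```

Because of the interpolation, the result is only as precise as the bucket boundaries allow: all the estimate can really tell you is that the 99th percentile sits somewhere between 4 ms and 8 ms for this sample.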

Traffic

Traffic is the amount of requests the CoreDNS service is handling. Monitoring traffic in CoreDNS is really important and worth checking on a regular basis. Observing whether there is any spike in traffic volume or any trend change is key to guaranteeing good performance and avoiding problems.

coredns_dns_requests_total: DNS requests counter per zone, protocol, and family. You may want to measure and monitor the rate of CoreDNS requests by type (A, AAAA). “A” queries ask for IPv4 addresses, while “AAAA” queries ask for IPv6 addresses.

sum(rate(coredns_dns_requests_total{instance=~".*"}[2m])) by (type,instance)

Saturation

You can easily monitor the CoreDNS saturation by using your system resource consumption metrics, like CPU, memory, and network usage for CoreDNS Pods.

Others

CoreDNS implements a caching mechanism that allows DNS service to cache records for up to 3600s. This cache can significantly reduce the CoreDNS load and improve performance.

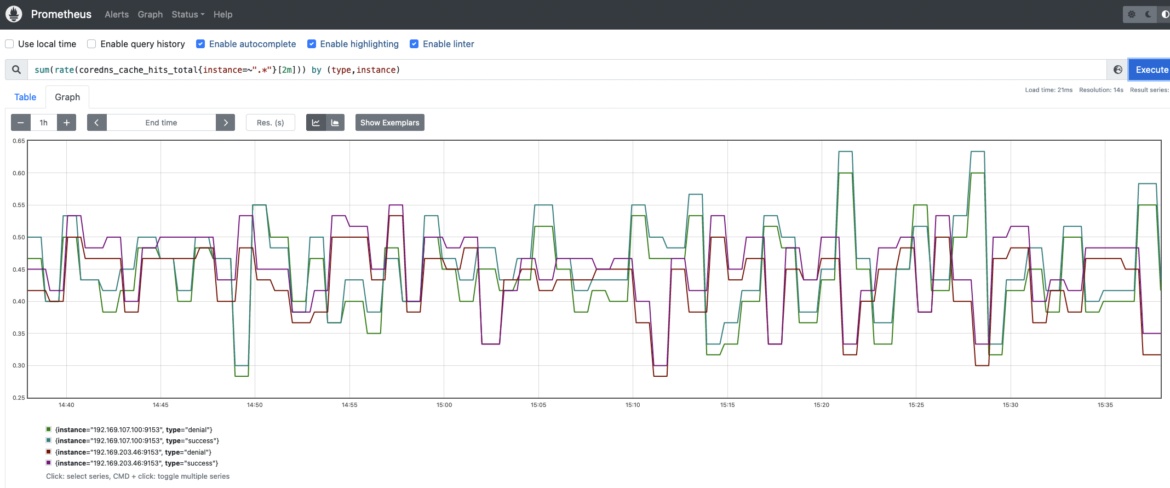

coredns_cache_hits_total: A cache hits counter. You may want to monitor the cache hit rate by running the following query. Thanks to this PromQL query you can easily monitor denial and success rates for CoreDNS cache hits.

sum(rate(coredns_cache_hits_total{instance=~".*"}[2m])) by (type,instance)
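Besides the rate of hits, you may also be interested in the share of requests served from the cache. As a quick sketch, the snippet below derives that ratio from the counter values in the sample /metrics output shown earlier in this article (coredns_cache_hits_total and coredns_cache_requests_total). The function name `cache_hit_ratio` is made up for this example.

```python
# Counter values copied from the sample /metrics output earlier in this article.
cache_hits = {"denial": 6471, "success": 6596}  # coredns_cache_hits_total by type
cache_requests = 15018                          # coredns_cache_requests_total


def cache_hit_ratio(hits_by_type, requests):
    """Return the fraction of cache requests answered from the cache."""
    if requests == 0:
        return 0.0  # Avoid dividing by zero on a freshly started Pod.
    return sum(hits_by_type.values()) / requests


ratio = cache_hit_ratio(cache_hits, cache_requests)
print(f"cache hit ratio: {ratio:.1%}")
```

These are lifetime counters for a single Pod. In Prometheus you would typically divide the rate of hits by the rate of requests over a time window instead.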

Conclusion

Along with kube-dns, CoreDNS is one of the choices available to implement the DNS service in your Kubernetes environments. DNS is mandatory for a proper functioning of Kubernetes clusters, and CoreDNS has been the preferred choice for many people because of its flexibility and the number of issues it solves compared to kube-dns.

If you want to ensure your Kubernetes infrastructure is healthy and working properly, you must continuously monitor your DNS service. It is key to ensuring proper operation of every application, operating system, IT architecture, or cloud environment.

In this article, you have learned how to pull the CoreDNS metrics and how to configure your own Prometheus instance to scrape metrics from the CoreDNS endpoints. Thanks to the key metrics for CoreDNS, you can easily start monitoring your own CoreDNS in any Kubernetes environment.
