
Since the Sysdig Threat Research Team (TRT) discovered LLMjacking in May 2024, we have continued to gain new insights into these attacks and to observe new applications for them. Large language models (LLMs) are evolving rapidly and we are all still learning how best to use them; in the same vein, attackers continue to evolve and expand their use cases for misuse. Since our original discovery, we have observed new motives and methods by which attackers conduct LLMjacking, including rapid expansion to new LLMs such as DeepSeek.

As we reported in September, the frequency and popularity of LLMjacking attacks are increasing. Given that trend, we weren’t surprised to see DeepSeek targeted within days of its media virality and the subsequent spike in usage. LLMjacking attacks have also received considerable public attention, including a lawsuit from Microsoft targeting cybercriminals who stole credentials and used them to abuse its generative AI (GenAI) services. The complaint alleges that the defendants used DALL-E to generate abusive content, an escalation from the benign image generation examples we shared in our September LLMjacking update.

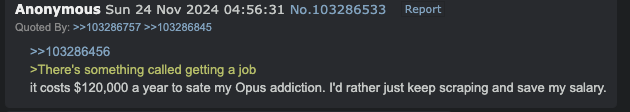

Cloud-based LLM usage costs can be staggering, surpassing several hundred thousand dollars monthly. Sysdig TRT found over a dozen proxy servers using stolen credentials across many different services, including OpenAI, AWS, and Azure. The high cost of LLMs is the reason cybercriminals (like the one in the example below) choose to steal credentials rather than pay for LLM services.

This LLMjacking update from Sysdig TRT will explore new tactics and models targeted, the business of proxies, and threat researchers’ latest findings.

LLMjackers quickly adopt DeepSeek

Attackers quickly implement the latest models when they are released. For example, on Dec 26, 2024, DeepSeek released its advanced model, DeepSeek-V3, and a few days later it was already implemented in this OpenAI Reverse Proxy (ORP) instance hosted on HuggingFace: https://huggingface.co/spaces/nebantemenya/joemini.

That instance is based on a fork of the ORP accessible at agalq13/lollllll, where they pushed the commit containing the implementation of DeepSeek. A few weeks later, on Jan 20, 2025, DeepSeek released a reasoning model called DeepSeek-R1. The day after, the author of that forked repository implemented it as well.

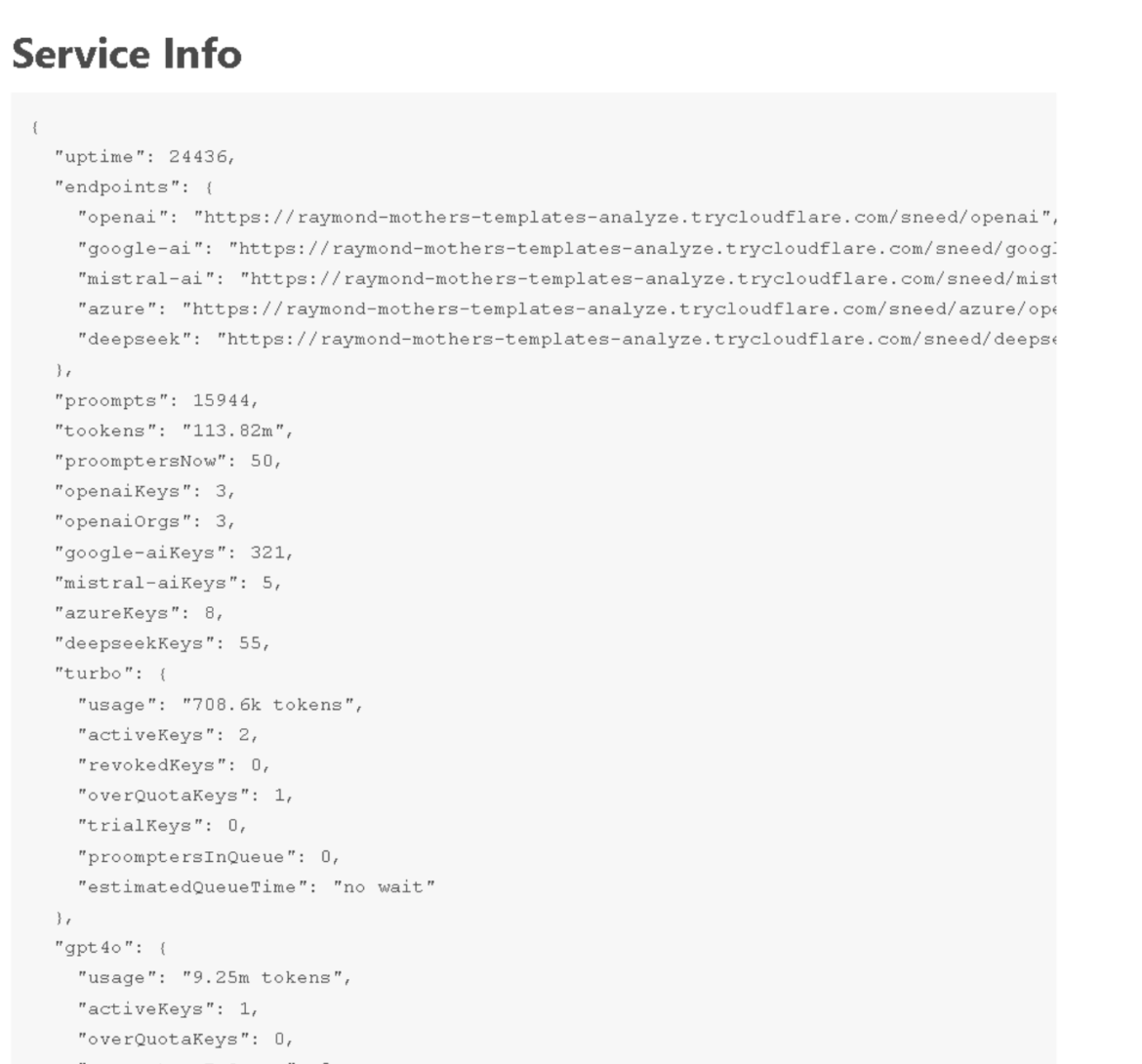

Not only has support for new models such as DeepSeek been implemented, but we also found that multiple ORPs have been populated with DeepSeek API keys, and users are starting to take advantage of them. The ORP below shows it has 55 DeepSeek API keys already.

LLMjacking and OpenAI Reverse Proxy

The use of OAI Reverse Proxy (ORP) for LLMjacking was reported in the original article, and it continues to be popular. ORP acts as a reverse proxy server for LLMs from various providers. As per the documentation, the ORP server can be exposed via an Nginx configuration with a user-provided domain or through auto-generated dynamic domains like TryCloudflare. Those subdomains are used to hide source IPs or domains, a tactic we saw with other threat actors such as LABRAT.

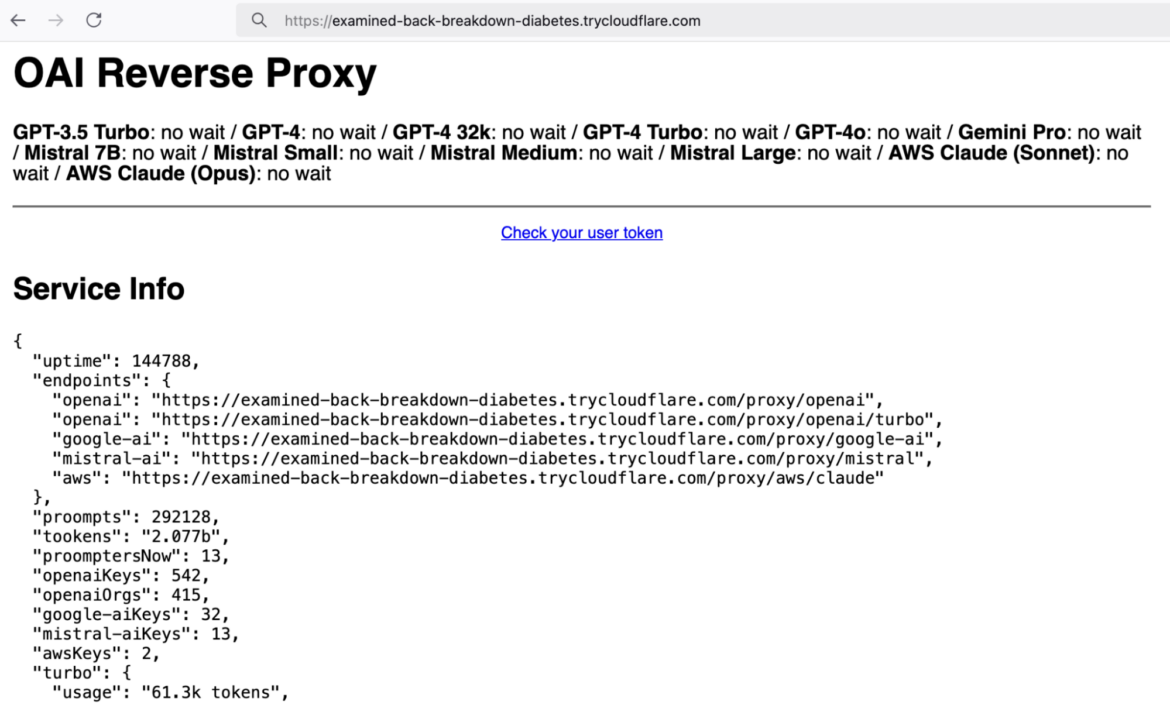

The following screenshot shows an ORP instance operated by an attacker. ORP provides statistics about the current state of the proxy, including the number of keys available, wait times, and how often each type of LLM was used. In the example below, hundreds of keys from OpenAI, Google AI, and Mistral AI are being used by the proxy, many of which are likely stolen.

We found many other exposed ORP instances, but what caught our attention was one hosted at https://vip[.]jewproxy[.]tech. Access to this proxy is being sold at a custom storefront (hxxps://jewishproxy[.]sell[.]app/product/vip-token). A 30-day access token costs the user $30. More information from the author is on this web page (hxxps://rentry[.]org/proxy4sale).

This proxy is being used quite extensively, likely by many users leveraging several LLMs. Unfortunately, it gets rebooted periodically, so the statistics on the webpage are reset. We were able to capture a snapshot of the instance showing heavy usage. These are the relevant statistics we saw:

    "uptime": 395555,
    ...
    "proompts": 132651,
    "tookens": "2.213b ($21868.20)",
    "proomptersNow": 18,
    "openaiKeys": 32,
    "openaiOrgs": 13,
    "google-aiKeys": 2,
    "awsKeys": 1,
    "azureKeys": 1,
    "turbo": {
      "usage": "5.87m tokens ($5.87)",
      ...
    "gpt4-turbo": {
      "usage": "16.02m tokens ($160.16)",
      ...
    "gpt4o": {
      "usage": "694.17m tokens ($3470.85)",
      ...
    "o1-mini": {
      "usage": "233.6k tokens ($1.17)",
      ...
    "o1": {
      "usage": "3.81m tokens ($76.28)",
      ...
    "gpt4": {
      "usage": "1.19m tokens ($35.68)",
      ...
    "gpt4-32k": {
      "usage": "4.84m tokens ($290.69)",
      ...
    "gemini-pro": {
      "usage": "15.23m tokens ($0.00)",
      ...
    "gemini-flash": {
      "usage": "559.6k tokens ($0.00)",
      ...
    "aws-claude": {
      "usage": "605.45m tokens ($4843.64)",
      ...
    "aws-claude-opus": {
      "usage": "865.59m tokens ($12983.87)",
      ...

ORP calculates the cost of the model usage with this code. These statistics are reset after each reboot and are valid for the listed uptime. As the first comment in the code says, these costs are underestimated because it considers every token an input token, which costs less than output tokens. Tokens are words, character sets, or combinations of words and punctuation generated by large language models (LLMs) when they process text. LLMs often measure their usage by tokens, both input and output.

If we compute those costs as the average between input and output costs, the total amount increases to almost $50,000 ($49,595.83). Based on the “uptime” of 395,555 seconds, this figure was reached in roughly 4.6 days.
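The uptime conversion and the resulting spend rate can be checked with a few lines of Python. This is only a sketch: the function names are ours, and the inputs are the uptime and the blended total quoted above.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def uptime_days(uptime_seconds: int) -> float:
    """Convert the proxy's reported uptime (in seconds) to days."""
    return uptime_seconds / SECONDS_PER_DAY


def daily_rate(total_usd: float, uptime_seconds: int) -> float:
    """Average spend per day over the observed uptime window."""
    return total_usd / uptime_days(uptime_seconds)


# Figures taken from the snapshot discussed above.
days = uptime_days(395_555)
rate = daily_rate(49_595.83, 395_555)
print(f"{days:.2f} days of uptime, roughly ${rate:,.0f} per day")
```

At that pace, a single proxy would cost its victims well over ten thousand dollars a day.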

Claude 3 Opus is the most used and expensive model, with 865.59 million tokens used. Based on the token price from the Bedrock documentation, we estimated that this model alone costs the account owner $38,951.55.
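The difference between ORP's own figure for this model and our estimate comes down to which per-token price is applied. The sketch below assumes Claude 3 Opus list prices of 15 USD per million input tokens and 75 USD per million output tokens; those prices are not quoted in this article and should be checked against the current Bedrock pricing page. The function names are illustrative.

```python
from decimal import Decimal

# Assumed prices in USD per million tokens (not stated in the article).
OPUS_INPUT_PRICE = Decimal("15")
OPUS_OUTPUT_PRICE = Decimal("75")


def input_only_cost(million_tokens: Decimal, input_price: Decimal) -> Decimal:
    """ORP-style estimate: every token is billed at the input price."""
    return million_tokens * input_price


def blended_cost(million_tokens: Decimal, input_price: Decimal,
                 output_price: Decimal) -> Decimal:
    """Estimate using the average of the input and output prices."""
    return million_tokens * (input_price + output_price) / 2


opus_tokens = Decimal("865.59")  # millions of tokens, from the snapshot
low = input_only_cost(opus_tokens, OPUS_INPUT_PRICE)
mid = blended_cost(opus_tokens, OPUS_INPUT_PRICE, OPUS_OUTPUT_PRICE)
print(f"input-only: ${low:,.2f}  blended: ${mid:,.2f}")
```

Under these assumptions the blended estimate matches the $38,951.55 above, and the input-only estimate lands within a few cents of the $12,983.87 that ORP reported. The small difference is expected because the token count shown on the page is rounded.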

The total number of tokens used by all the ORPs amounted to more than 2 billion tokens during the times we could observe.

Who pays for the use of these resources? The owners of the accounts associated with the stolen keys. In an LLMjacking scenario, the victims of the attack are those who had their cloud accounts compromised, similar to a cryptojacking attack, in which the attacker uses the victim’s compute resources and runs up a bill.

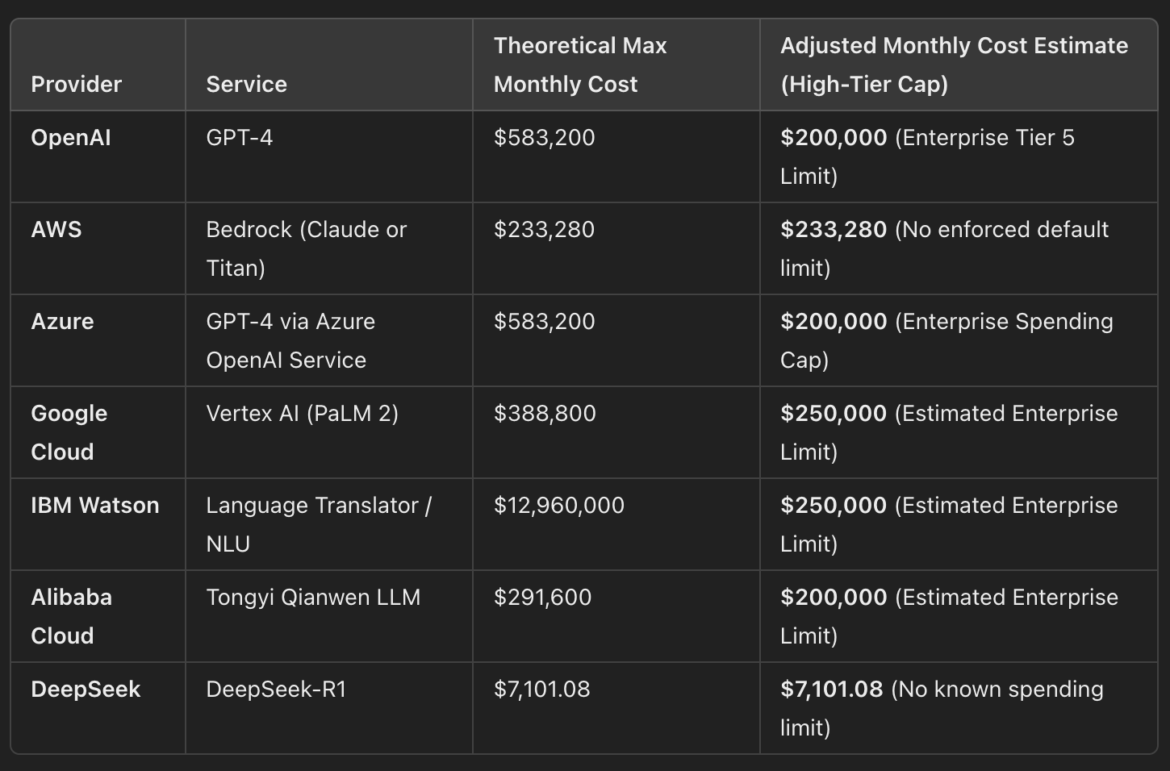

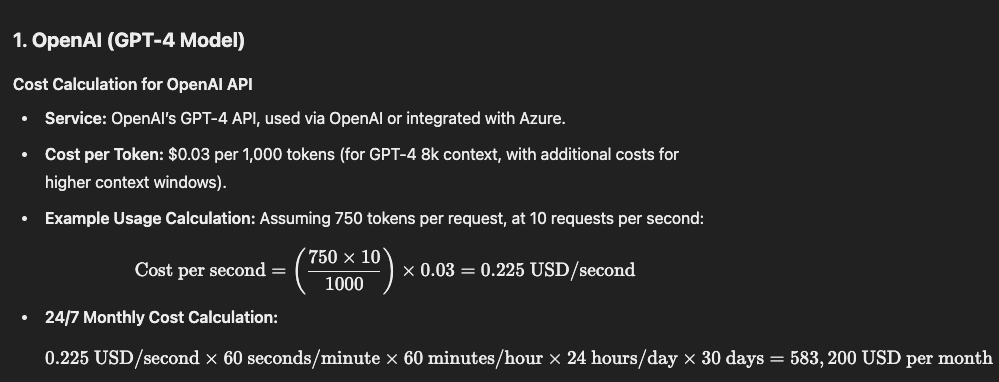

LLMs are expensive to operate, as this table from OpenAI demonstrates. Access to these services must be protected, and not just because of the costs incurred from unauthorized usage, but also due to the potential for data leaks.

LLMjacking tactics, techniques, and procedures

LLMjacking is no longer just a potential fad or trend. Communities have been built to share tools and techniques. ORPs are forked and customized specifically for LLMjacking operations. Cloud credentials are being tested for LLM access before being sold. LLMjacking operations are beginning to establish a unique set of TTPs, and we have identified some of these below.

Communities

Many active communities exist around using LLMs for NSFW content and creating AI characters for role-playing. These users prefer communicating over 4chan and Discord. They share access to the LLMs via ORPs, both private and public. While 4chan threads are periodically archived, summaries of tools and services are often available on the pastebin-style website Rentry.co, a popular choice for sharing links and any associated access information. Sites hosted on Rentry can use markdown, provide custom URLs, and be edited after posting.

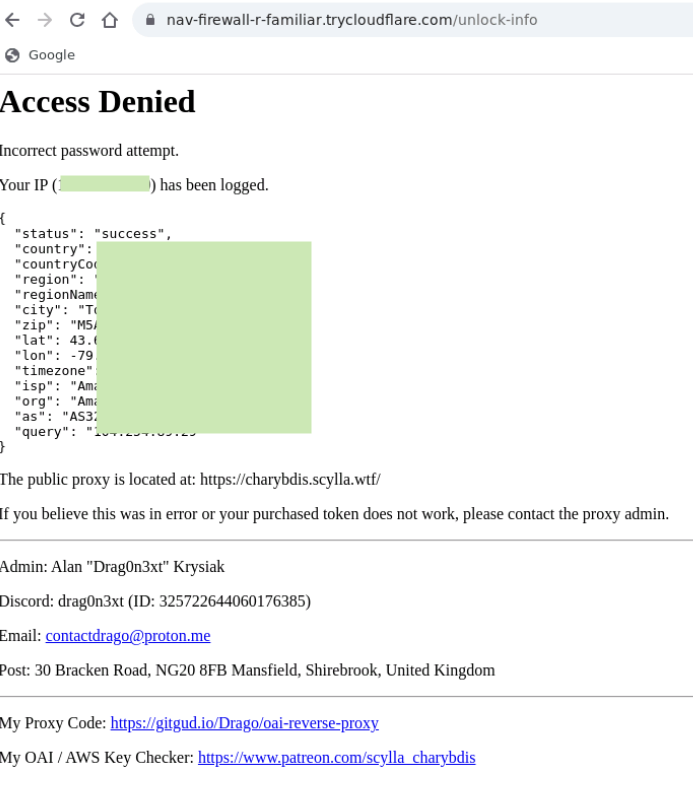

During our research, we discovered over 20 ORPs, some using custom domains and others using TryCloudflare tunnels, such as: “https://examined-back-breakdown-diabetes.trycloudflare[.]com/”.

While investigating LLMjacking attacks in our cloud honeypot environments, we discovered several TryCloudflare domains in the LLM prompt logs, where the attacker was using the LLM to generate a Python script that interacts with ORPs. This started a trail back to the servers that were using TryCloudflare tunnels.

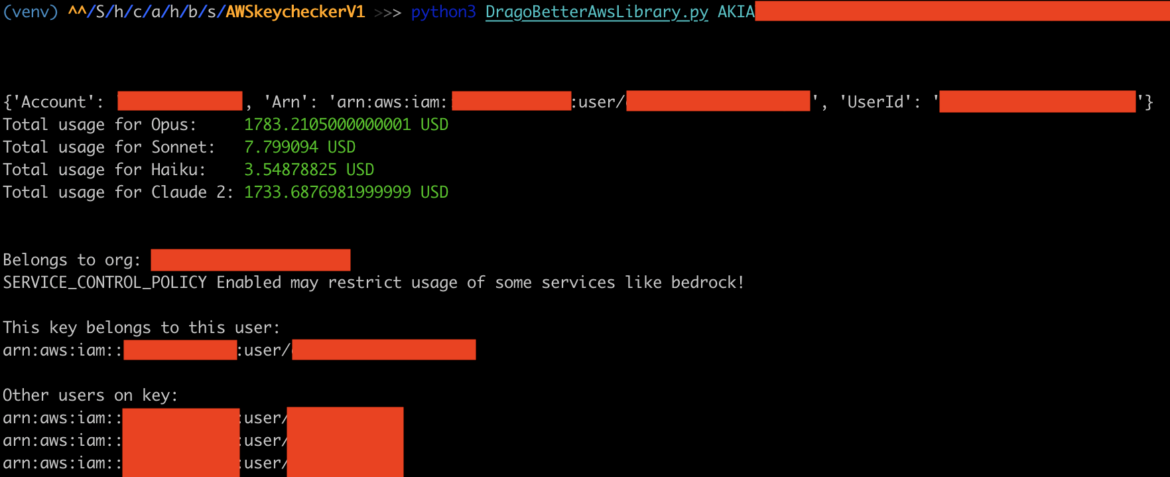

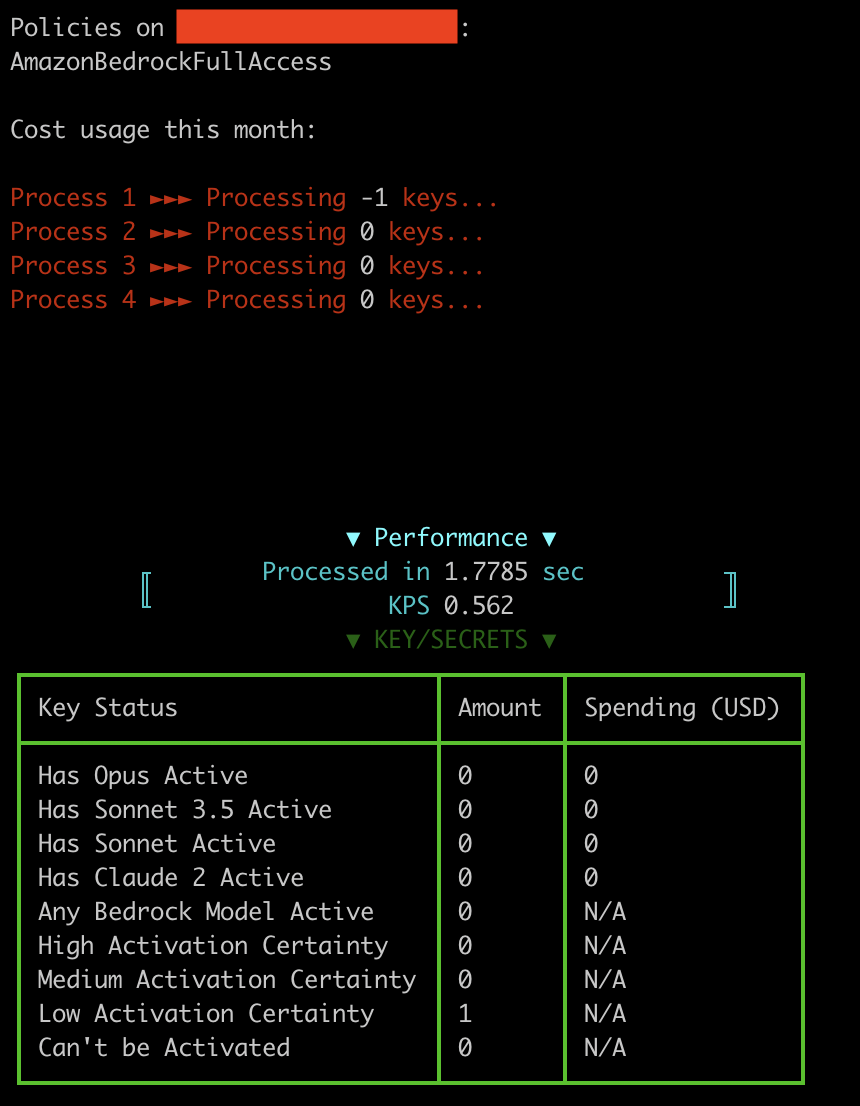

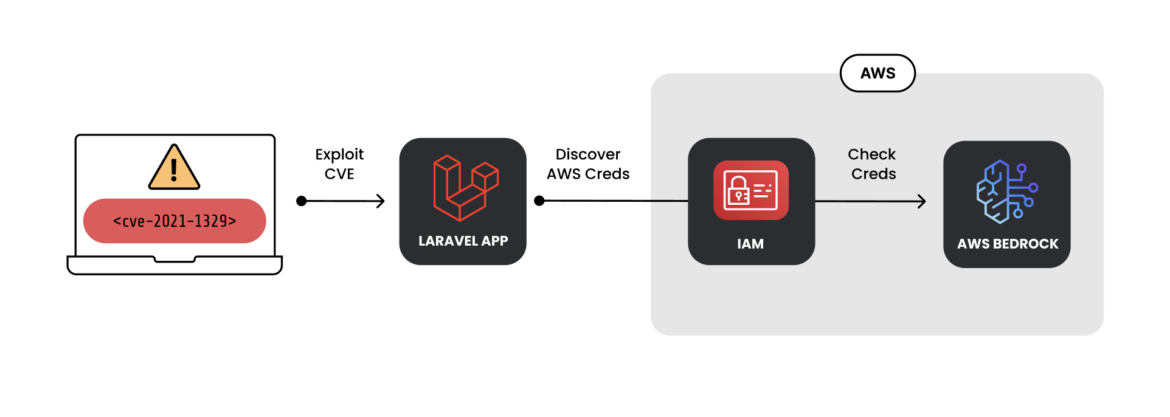

Credential theft

Attackers steal credentials through vulnerable services, such as Laravel, and then use the scripts below as verification tools to determine whether the credentials can access ML services. Once they have gained access to a system and found credentials, attackers run their verification scripts on the collected data. Another popular source of credentials is software packages in public repositories that may expose them.

All of the scripts share some common attributes: they use concurrency to process a large number of (stolen) keys efficiently, and they are built with automation in mind.

OAI Reverse Proxy customizations

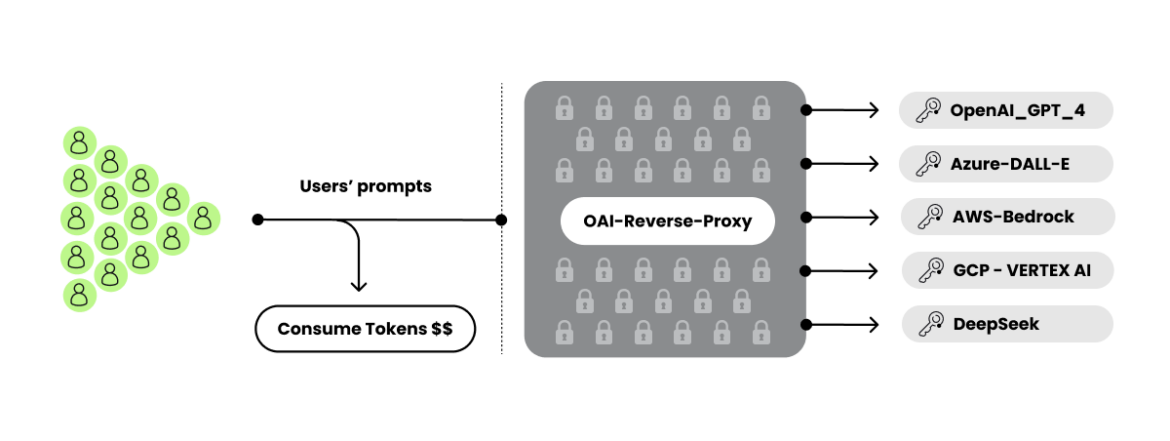

This diagram illustrates the complete flow from the stolen credentials to ultimately getting customers to access the ML services via a proxy. Let’s examine each component step.

The original OAI Reverse Proxy from Khanon has many different forks and custom configurations, including some modified versions that are being advertised for their privacy and stealth features. These features include the ability to run without generating alerts and with modified logging features. The legality of prompts is often questioned, and the modifications aim to ensure that user prompts remain entirely private.

Examples of efforts made to obfuscate ORPs:

- Operators can modify the website’s style to add a layer of obfuscation. We found one proxy that uses CSS to make reading the page very difficult. To properly gain access, the user has to disable CSS.

- Operators can adjust the proxy to enable password authentication, thereby keeping the number of keys they manage more confidential. Additionally, they will log all authentication errors in an attempt to scare the user.

Operational security

To protect their servers, ORP operators typically try to hide the IP address or VPS used to host the proxy. They often use TryCloudflare tunnels, which create temporary domains without requiring registration. As the documentation shows, they are quick and easy to set up. The randomly generated links, which are made up of four words, are then shared with their users.
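Because these tunnel hostnames follow a recognizable shape, defenders can search prompt logs, proxy logs, or DNS logs for them. The following is a minimal sketch that assumes the four-word, hyphen-separated format described above. The pattern and the function name are our own illustration, not part of any Sysdig or Cloudflare tooling, and the pattern also accepts the defanged `[.]` notation used in threat reports.

```python
import re

# Matches hosts such as "examined-back-breakdown-diabetes.trycloudflare.com",
# with either a plain "." or a defanged "[.]" as the label separator.
TRYCLOUDFLARE_RE = re.compile(
    r"\b([a-z]+(?:-[a-z]+){3})(?:\.|\[\.\])trycloudflare(?:\.|\[\.\])com\b",
    re.IGNORECASE,
)


def find_tunnel_hosts(text: str) -> list[str]:
    """Return the unique four-word tunnel labels found in text, in order."""
    seen: list[str] = []
    for match in TRYCLOUDFLARE_RE.finditer(text):
        label = match.group(1).lower()
        if label not in seen:
            seen.append(label)
    return seen


sample = 'requests.post("https://examined-back-breakdown-diabetes.trycloudflare[.]com/v1/chat")'
print(find_tunnel_hosts(sample))
```

A hit is not proof of abuse, since TryCloudflare tunnels also have legitimate uses, but it is a useful lead when it appears alongside LLM API traffic.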

Some operators add further layers of obfuscation to the link-sharing process to limit access. For example, some Rentry pages with ORP information display four words in random places, which the user combines to form the TryCloudflare subdomain. This method helps hide the URL from web scraping systems.

Ultimately, Rentry links circulate in forums and chat rooms; however, like other underground groups, scams abound.

Best practices for detecting and combatting LLMjacking

LLMjacking is primarily accomplished through the compromise of credentials or access keys. It has become common enough that MITRE added LLMjacking to the MITRE ATT&CK framework to raise awareness about this threat and help defenders map this attack.

Defending against AI service account compromise primarily involves securing access keys, implementing strong identity management, monitoring for threats, and ensuring least privilege access. Here are some best practices to defend against account compromises:

Secure access keys

Access keys are a major attack vector, so they should be managed carefully.

Avoid hardcoding credentials. Don’t embed API keys, access keys, or credentials in source code, configuration files, or public repositories (e.g. GitHub, Bitbucket). Instead, use environment variables or secrets management tools such as AWS Secrets Manager, Azure Key Vault, or HashiCorp Vault.
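As a minimal illustration of keeping a key out of source code, the sketch below reads it from an environment variable and fails loudly when it is missing. The variable name `LLM_API_KEY` and the helper names are hypothetical; a secrets manager client could replace the environment lookup in the same place.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is absent from the environment."""


def load_api_key(var_name: str = "LLM_API_KEY") -> str:
    """Read an API key from the environment instead of from source code."""
    value = os.environ.get(var_name, "").strip()
    if not value:
        # Fail early rather than falling back to a hardcoded default.
        raise MissingSecretError(f"environment variable {var_name} is not set")
    return value


# Typical use at application startup:
#     api_key = load_api_key()
```

Failing at startup, rather than substituting a default, keeps a misconfigured deployment from quietly shipping with an embedded key.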

Use temporary credentials. Instead of long-lived access keys, use temporary security credentials issued through mechanisms such as AWS STS AssumeRole, Azure Managed Identities, and Google Cloud IAM Workload Identity.

Rotate access keys. Regularly change access keys to reduce exposure. Automate the rotation process where possible.
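One simple way to automate part of this is to flag keys that have outlived a rotation window. The sketch below works on a hypothetical inventory of key records (an `id` and a timezone-aware `created` timestamp); the 90-day window is an assumption for illustration, not a recommendation from this article, and in practice the inventory would come from your cloud provider's IAM API.

```python
from datetime import datetime, timedelta, timezone


def keys_due_for_rotation(keys, max_age_days=90, now=None):
    """Return the ids of keys created at least max_age_days ago."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [key["id"] for key in keys if key["created"] <= cutoff]


# Hypothetical inventory for demonstration purposes.
inventory = [
    {"id": "key-old", "created": datetime(2024, 1, 10, tzinfo=timezone.utc)},
    {"id": "key-new", "created": datetime(2025, 1, 20, tzinfo=timezone.utc)},
]
reference = datetime(2025, 2, 1, tzinfo=timezone.utc)
print(keys_due_for_rotation(inventory, now=reference))
```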

Monitor for exposed credentials. Use automated scanning to detect exposed credentials. Some example tools are AWS IAM Access Analyzer, GitHub Secret Scanning, and TruffleHog.
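To show the idea behind such scanners, here is a deliberately small sketch that looks for strings shaped like AWS access key IDs (the `AKIA` or `ASIA` prefix followed by 16 uppercase letters or digits). It is an illustration only: the function name is ours, and dedicated tools cover far more credential types and verify their findings. The sample uses the placeholder key ID from AWS documentation.

```python
import re

# Shape of an AWS access key ID: a 4-character prefix plus 16 characters.
AWS_ACCESS_KEY_ID_RE = re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b")


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line_number, match) pairs for strings shaped like key IDs."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for match in AWS_ACCESS_KEY_ID_RE.finditer(line):
            findings.append((line_no, match.group(0)))
    return findings


sample_config = 'region = "us-east-1"\naws_access_key_id = "AKIAIOSFODNN7EXAMPLE"\n'
print(scan_text(sample_config))
```

Running a check like this in a pre-commit hook or CI job catches a key before it reaches a public repository, which is cheaper than rotating it afterwards.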

Monitor account behavior. When an account key is compromised, activity on the account will usually deviate from normal behavior and include suspicious actions. Continuously monitor your cloud and AI service accounts using tools such as Sysdig Secure.
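As a rough illustration of what "deviating from normal behavior" can mean for LLM usage, the sketch below compares the latest hourly token count against the median of recent hours. The threshold factor, the minimum sample size, and the function name are assumptions chosen for the example; this is not how Sysdig Secure or any other product implements detection.

```python
from statistics import median


def is_usage_spike(history, current, factor=5.0, min_samples=6):
    """Flag current when it exceeds factor times the median of history."""
    if len(history) < min_samples:
        return False  # not enough baseline data to judge
    baseline = median(history)
    # Guard against a zero baseline so idle accounts still get a threshold.
    return current > factor * max(baseline, 1)


# Hypothetical hourly token counts for one account.
hourly_tokens = [1200, 900, 1500, 1100, 1300, 1000]
print(is_usage_spike(hourly_tokens, 250_000))
print(is_usage_spike(hourly_tokens, 2_000))
```

Given the volumes seen on the proxies above, even a coarse threshold like this would separate LLMjacking traffic from an account's ordinary use.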

Conclusion

As the demand for access to advanced LLMs has grown, LLMjacking attacks have become increasingly popular. Due to steep costs, a black market for access has developed around OAI Reverse Proxies — and underground service providers have risen to meet the needs of consumers. LLMjacking proxy operators have expanded access to credentials, customized their offerings, and begun including new models like DeepSeek. At the same time, AWS and Azure recently added DeepSeek models to Amazon Bedrock and Azure AI Foundry.

Meanwhile, legitimate users have become a prime target. With unauthorized account use resulting in hundreds of thousands of dollars of losses for victims, proper management of these accounts and their associated API keys has become critical.

LLMjacking attacks are evolving, as are the motivations driving them. Ultimately, attackers will continue to seek access to LLMs and find new malicious uses for them. It’s incumbent upon users and organizations to be prepared to detect and defend against them.

Appendix

References — LLM costs by provider

In the following paragraphs, we share the LLM costs provided by ChatGPT. These were derived from the o3-mini model using the free tier. We requested cost estimates from several cloud providers, focusing on AI models.

OpenAI (GPT-4 Model)

24/7 Monthly Cost Calculation: 0.225 USD/second×60 seconds/minute×60 minutes/hour×24 hours/day×30 days= 583,200 USD per month.

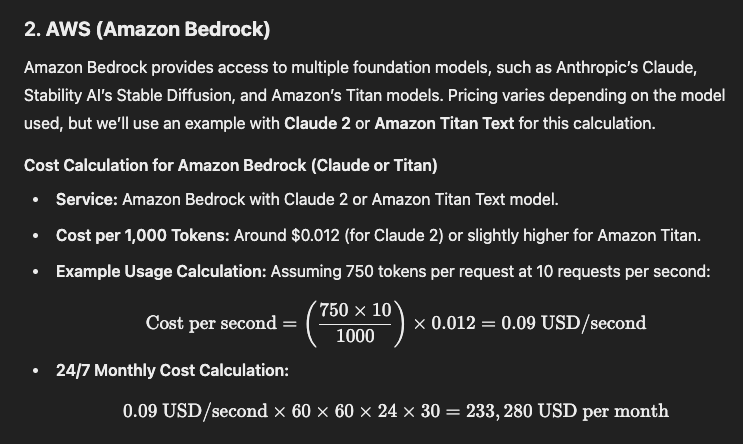

AWS (Amazon Bedrock)

24/7 Monthly Cost Calculation: 0.09 USD/second×60×60×24×30=233,280 USD per month

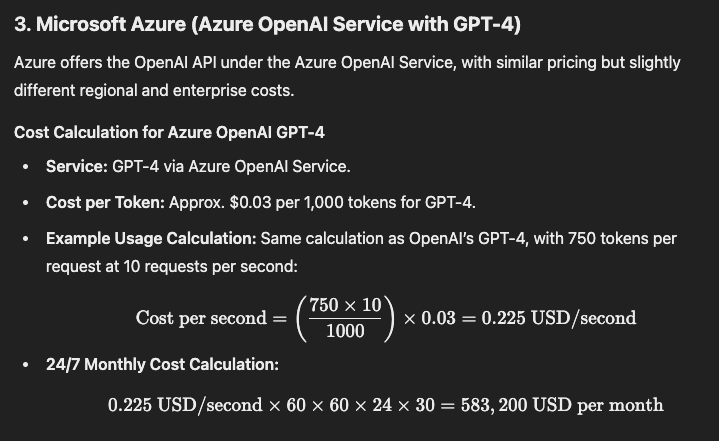

Microsoft Azure (Azure OpenAI Service with GPT-4)

24/7 Monthly Cost Calculation: 0.225 USD/second×60×60×24×30=583,200 USD per month

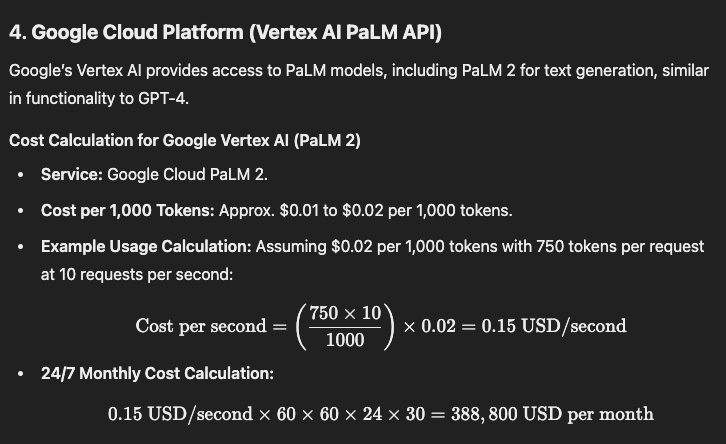

Google Cloud Platform (Vertex AI PaLM API)

24/7 Monthly Cost Calculation: 0.15 USD/second×60×60×24×30=388,800 USD per month

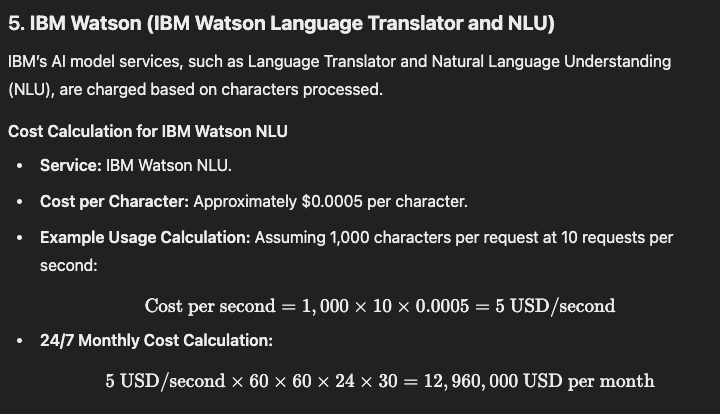

IBM Watson (IBM Watson Language Translator and NLU)

24/7 Monthly Cost Calculation: 5 USD/second×60×60×24×30=12,960,000 USD per month

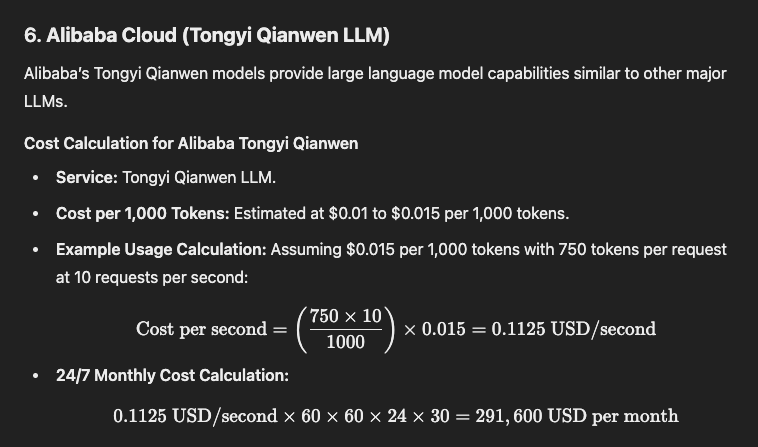

Alibaba Cloud (Tongyi Qianwen LLM)

24/7 Monthly Cost Calculation: 0.1125 USD/second×60×60×24×30=291,600 USD per month

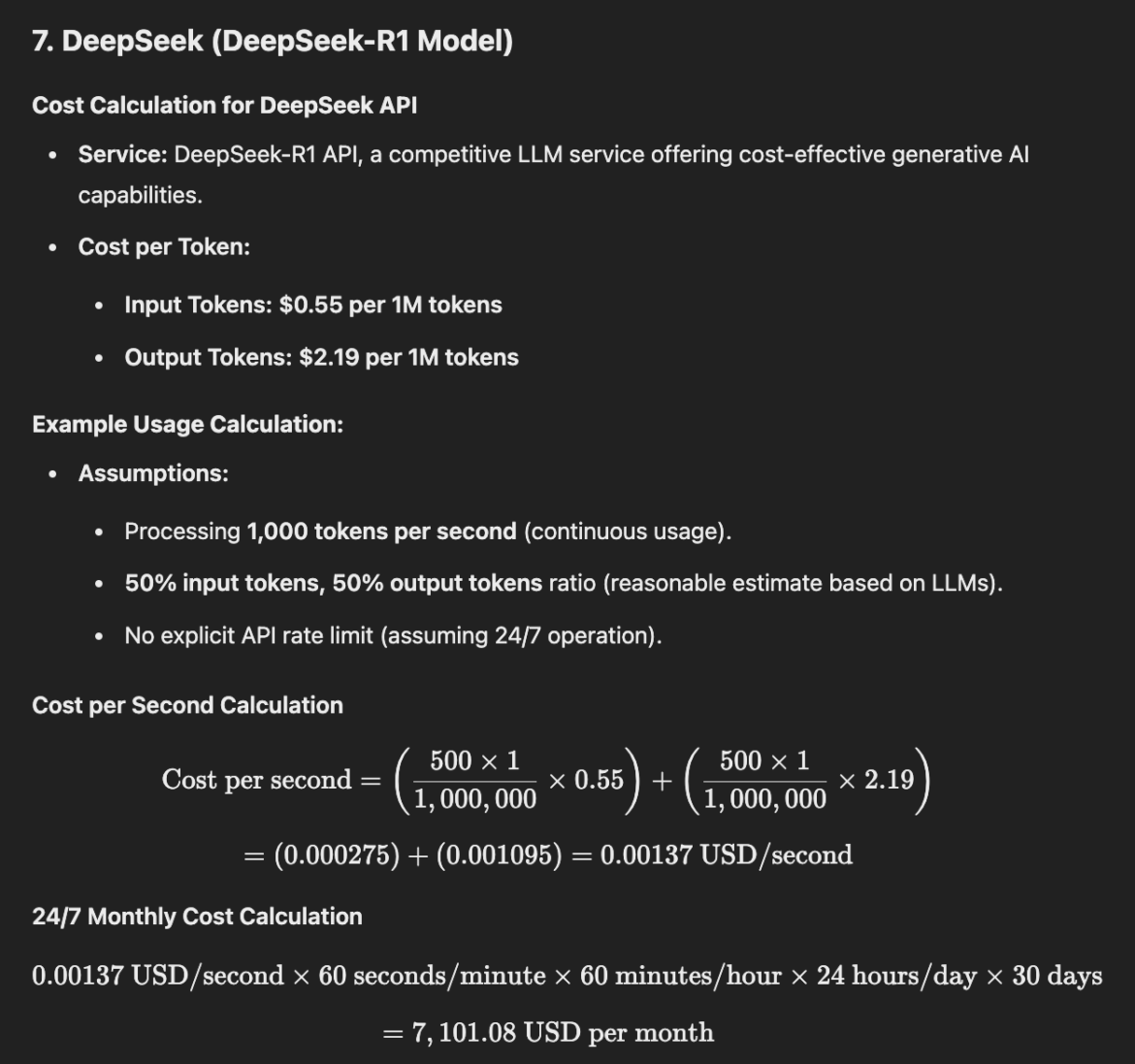

DeepSeek (DeepSeek-R1 Model)

24/7 Monthly Cost Calculation: 0.00137 USD/second×60×60×24×30=7,101.08 USD per month

Given the absence of explicit rate limits and the practical constraints mentioned, the previously estimated maximum monthly cost of $7,101.08 remains a reasonable approximation for potential abuse scenarios. However, actual costs could vary based on usage patterns and system performance.
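All of the monthly figures in this appendix come from the same formula: a per-second rate multiplied by the 2,592,000 seconds in a 30-day month. The sketch below recomputes them from the rates as printed, using `Decimal` to avoid floating-point rounding; the dictionary and function names are ours.

```python
from decimal import Decimal

SECONDS_PER_MONTH = 60 * 60 * 24 * 30  # 2,592,000 seconds in a 30-day month

# Per-second rates exactly as printed in this appendix.
RATES_USD_PER_SECOND = {
    "OpenAI (GPT-4)": Decimal("0.225"),
    "AWS (Amazon Bedrock)": Decimal("0.09"),
    "Microsoft Azure (Azure OpenAI, GPT-4)": Decimal("0.225"),
    "Google Cloud (Vertex AI PaLM API)": Decimal("0.15"),
    "IBM Watson": Decimal("5"),
    "Alibaba Cloud (Tongyi Qianwen)": Decimal("0.1125"),
    "DeepSeek (DeepSeek-R1)": Decimal("0.00137"),
}


def monthly_cost(rate_per_second: Decimal) -> Decimal:
    """Cost of running continuously for a 30-day month at the given rate."""
    return rate_per_second * SECONDS_PER_MONTH


for provider, rate in RATES_USD_PER_SECOND.items():
    print(f"{provider}: {monthly_cost(rate):,.2f} USD per month")
```

The first six results reproduce the totals listed above. For DeepSeek, the rate as printed yields 3,551.04 USD, about half of the 7,101.08 USD quoted, so the quoted total appears to rest on a different per-second rate than the one shown.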