What is Docker Swarm?

Docker Swarm is a container orchestration tool built into Docker as an operating mode (Swarm mode). It lets you manage multiple nodes, physical or virtual, together with the deployments running on them, as a single system: the “Swarm”.

The nodes of a Swarm are connected to each other and, as mentioned, can be physical or virtual. Each node takes one of two roles:

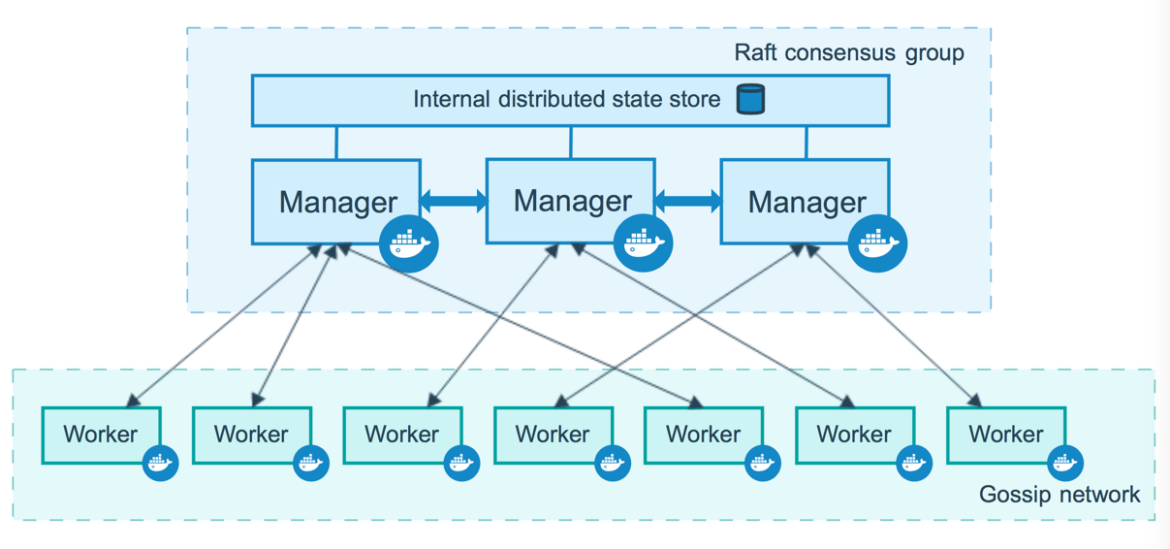

- Manager nodes: These run the Swarm Manager, the process in charge of handling Swarm-mode commands and reconciling the desired state with the actual cluster state.

- Worker nodes: These run the workloads of your system.

All of this is made possible by SwarmKit, a toolkit for orchestrating distributed systems that is integrated into Docker and includes technologies such as Raft consensus and distributed state storage.

Why use Docker Swarm?

Compared to running Docker without Swarm mode, Docker Swarm provides several benefits for running production workloads.

Benefits

High availability: Docker Swarm promotes high-availability environments by spreading work across a Swarm of manager and worker nodes. The manager nodes use Raft consensus (from SwarmKit) to keep a consistent cluster state and to tolerate node failures. A highly available environment needs at least 3 manager nodes, which lets the Swarm survive the loss of 1 manager. Docker recommends an odd number of managers and no more than 7. A Swarm with 7 managers can handle 3 manager failures at any given time.
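These numbers follow from Raft's majority rule. A change is committed only when a majority (quorum) of managers agrees, so an N-manager Swarm survives the loss of at most (N - 1) / 2 managers, rounded down. The Python sketch below performs only that arithmetic. The helper names `raft_quorum` and `tolerated_failures` are hypothetical, and the code does not talk to Docker.

```python
def raft_quorum(managers: int) -> int:
    """Smallest majority of managers that must agree before the Swarm can act."""
    return managers // 2 + 1


def tolerated_failures(managers: int) -> int:
    """Managers that can be lost while a majority is still reachable."""
    return managers - raft_quorum(managers)


# Print a small table for 1 to 7 managers.
for n in range(1, 8):
    print(f"{n} manager(s): quorum {raft_quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

The table shows that 3 managers tolerate 1 failure, 5 tolerate 2, and 7 tolerate 3. An even count (2, 4, 6) tolerates no more failures than the odd count just below it, which is why odd manager counts are the usual advice.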

Load balancing: Since there are multiple nodes, you can deploy multiple instances (replicas) of an application in the Swarm. Swarm's built-in load balancing distributes incoming requests evenly across those replicas. The managers also keep services alive and responding. They continuously compare the running tasks with the desired state, and they can use container health checks to replace replicas that become unhealthy.

Simplicity: The Docker ecosystem of technologies facilitates operational agility, portability, and cost savings. In particular, Swarm mode is integrated into Docker, so developers don't need to install anything else. The Docker CLI contains the commands and options needed to manage apps and containers both in Swarm and non-Swarm mode. Kubernetes (K8s) has a steep learning curve; by comparison, Docker Swarm mode is simpler to deploy and use in practice.

Common Use Cases

Docker Swarm mode is suitable for small to moderately sized deployments. For example, this could be a small stack of applications consisting of a single database, a web app, a cache service, and a couple of other backend services. Larger deployments may hit the limitations of this mode, mainly in maintenance, customization, and disaster recovery.

Docker Swarm is also suitable if you need to prioritize high service availability and automatic load balancing over the more extensive automation and fault-tolerance features of larger orchestrators.

Overall, if organizations want to transition to containers, Docker Swarm can be a good option since it is simpler to manage and operate.

Why use Docker Swarm instead of another container orchestrator?

As mentioned earlier, one key benefit of Docker Swarm compared to a more advanced container orchestrator like K8s is its gentler learning curve. The tools and services are available as soon as Docker is installed. To spin up multiple nodes, you can use VirtualBox or Vagrant and then create a cluster with a few commands. Beyond that, no configuration is needed other than the options Docker already provides.

You can also use Docker Swarm in production for a stack with moderately low maintenance needs (for example, 3 to 10 nodes running fewer than 100 containers). For larger workloads, Docker Swarm quickly falls behind K8s, which offers better tooling, support, and documentation at that scale.

Key Concepts

Some of the key concepts to understand when working with Docker Swarm mode include the following:

Swarm

A Swarm is a collection of nodes that coordinate with each other to ensure reliable operations. Because the manager nodes operate by consensus, a Swarm reduces the risk of a single point of failure. At any given time, one of the manager nodes is the leader and makes the key decisions for the Swarm. If the leader becomes unavailable, the remaining managers elect a new leader to take over its responsibilities, provided that a majority of the managers is still reachable.

Node

A node is a physical or virtual machine running one instance of Docker Engine in Swarm mode. Depending on its configuration, this instance runs as a worker or as a manager. A worker node receives and executes workloads (the tasks that make up services). Manager nodes form the control plane of the Swarm and are responsible for service orchestration, consensus participation, and task scheduling. Having enough nodes of each type is what keeps the running services highly available and reliable.

Services and Tasks

Work in a Swarm is described at two levels: services and tasks. A service is a definition of the containers to deploy and the criteria for deploying them. In Swarm mode, a service can run globally, as one instance per node (useful for monitoring or log collection containers), or as a replicated service. A replicated service represents a typical application deployed as a set number of instances (replicas), for example to absorb spikes in load.

Services deployed in a Swarm can be scaled up or down with the docker service scale <service>=<replicas> command. Other services attached to the same network can reach them by name through Swarm's internal DNS.
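The toy model below makes the difference between the two modes concrete. It counts how many tasks of one service end up on each node. `place_tasks` is a hypothetical helper, not part of Docker. The model assumes a simple round-robin spread over nodes whose availability is active. Swarm's real scheduler also accounts for resources, constraints, and placement preferences, so treat this as an illustration rather than a prediction.

```python
from itertools import cycle


def place_tasks(mode: str, nodes: dict[str, str], replicas: int = 1) -> dict[str, int]:
    """Toy model: how many tasks of one service land on each node.

    `nodes` maps hostname -> availability ("active", "pause" or "drain").
    Only active nodes receive tasks in this model.
    """
    active = [name for name, availability in nodes.items() if availability == "active"]
    counts = {name: 0 for name in active}
    if mode == "global":
        # Global mode: exactly one task per eligible node; `replicas` is ignored.
        for name in active:
            counts[name] = 1
    elif mode == "replicated":
        # Replicated mode: deal the requested replicas out one node at a time.
        # With no active node nothing is placed (Swarm would keep them pending).
        for _, name in zip(range(replicas), cycle(active)):
            counts[name] += 1
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    return counts


nodes = {"manager": "active", "worker01": "active", "worker02": "drain", "worker03": "active"}
print(place_tasks("global", nodes))                  # {'manager': 1, 'worker01': 1, 'worker03': 1}
print(place_tasks("replicated", nodes, replicas=8))  # {'manager': 3, 'worker01': 3, 'worker03': 2}
```

The drained node gets nothing in either mode. In this model, scaling with docker service scale only changes the `replicas` input. The real Swarm adds or removes individual tasks instead of recomputing the whole placement.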

A task is a single unit of work assigned to a node. Once a task is assigned to a node, it runs only there. It cannot move to another node, and if it fails, Swarm creates a new task to replace it. You never create tasks directly. Instead, you create a service that describes the desired deployment, and the managers create the tasks that carry out the work. Each task has a lifecycle status with various states, for example NEW when it is created, PENDING while it waits to be assigned to a node, RUNNING while it executes, or COMPLETE when it has finished successfully.
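This paragraph describes two rules: a task's state only moves forward, and a task stays on the node it was assigned to. Both can be captured in a small state machine. The Python sketch below is a simplified model built on those assumptions. It uses only a subset of the states listed in the Docker documentation, and the `Task` class is hypothetical, not a Docker API.

```python
# Simplified subset of the task states in the Docker documentation, in lifecycle order.
LIFECYCLE = ["NEW", "PENDING", "ASSIGNED", "ACCEPTED", "PREPARING", "STARTING", "RUNNING"]
# States a task never leaves.
TERMINAL = {"COMPLETE", "FAILED", "SHUTDOWN", "REJECTED"}


class Task:
    """Toy task: its state only moves forward and its node never changes once set."""

    def __init__(self, task_id: str) -> None:
        self.task_id = task_id
        self.state = "NEW"
        self.node = None  # hostname, set once by assign()

    def advance(self, new_state: str) -> None:
        if self.state in TERMINAL:
            raise ValueError(f"{self.task_id} already ended as {self.state}")
        if new_state not in TERMINAL and LIFECYCLE.index(new_state) <= LIFECYCLE.index(self.state):
            raise ValueError(f"{self.task_id} cannot go from {self.state} to {new_state}")
        self.state = new_state

    def assign(self, node: str) -> None:
        # A task stays on one node for its whole life; it is never moved.
        if self.node is not None:
            raise ValueError(f"{self.task_id} is bound to {self.node}; a new task is needed")
        self.advance("ASSIGNED")
        self.node = node


task = Task("my_nginx.2")
task.advance("PENDING")    # waiting to be assigned to a node
task.assign("worker02")    # ASSIGNED and bound to worker02
task.advance("RUNNING")    # intermediate states skipped for brevity
task.advance("FAILED")
try:
    task.assign("worker03")  # rejected: failed tasks are replaced, not moved
except ValueError as err:
    print(err)
```

A failed task is neither revived nor moved. In Swarm, the orchestrator creates a replacement task, which this model would represent as a new `Task` object.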

Load Balancing

Load balancing means distributing requests evenly across the instances of a service. When a spike of requests arrives (think Super Bowl time) and lots of people visit a website, you want to spread those visits across multiple instances of the website so that every visitor gets the same quality of service. Docker Swarm offers automatic load balancing out of the box. A published port is reachable on every node of the Swarm, and requests are routed to the service's running replicas. This also makes it easy to put an external load balancer such as HAProxy or NGINX in front of the Swarm.
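To illustrate even distribution, the sketch below hands requests to replicas in round-robin order and skips any replica flagged as unhealthy. It is a hypothetical, in-process Python model; `RoundRobinBalancer` is not a Docker API. Swarm does its real balancing through its routing mesh and internal virtual IPs, and this policy is not a claim about how that is implemented.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Toy balancer: give each request to the next healthy replica in turn."""

    def __init__(self, replicas: list[str]) -> None:
        self.replicas = list(replicas)
        self.healthy = set(self.replicas)
        self._ring = cycle(self.replicas)

    def mark_unhealthy(self, replica: str) -> None:
        # Swarm typically replaces a task whose health check fails;
        # this model simply stops routing to it.
        self.healthy.discard(replica)

    def pick(self) -> str:
        if not self.healthy:
            raise RuntimeError("no healthy replicas")
        # One full lap around the ring is enough to find a healthy replica.
        for _ in range(len(self.replicas)):
            replica = next(self._ring)
            if replica in self.healthy:
                return replica
        raise RuntimeError("no healthy replica found")


lb = RoundRobinBalancer(["my_nginx.1", "my_nginx.2", "my_nginx.3"])
print([lb.pick() for _ in range(4)])  # ['my_nginx.1', 'my_nginx.2', 'my_nginx.3', 'my_nginx.1']
lb.mark_unhealthy("my_nginx.2")
print([lb.pick() for _ in range(4)])  # ['my_nginx.3', 'my_nginx.1', 'my_nginx.3', 'my_nginx.1']
```

Round-robin is chosen here only because it is the simplest policy that spreads requests evenly when all replicas are equivalent.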

Use Case Example

To experiment with a Swarm, you need at least one manager node and two worker nodes. I've created a simple Vagrantfile that provides 4 VMs (one manager and three workers), each of which installs Docker as part of its init script.

First, you want to spin up the VMs:

```
$ vagrant up
```

When all of the machines are ready, check their status and log in to the manager node:

```
$ vagrant status
Current machine states:

manager                   running (virtualbox)
worker01                  running (virtualbox)
worker02                  running (virtualbox)
worker03                  running (virtualbox)

$ vagrant ssh manager
vagrant@manager:~$ docker info
```

Creating a Swarm

Run the following command on the manager node to initialize Docker Swarm:

```
vagrant@manager:~$ docker swarm init --listen-addr 10.10.10.2:2377 --advertise-addr 10.10.10.2:2377
Swarm initialized: current node (r9xym5ymmzmm7e7ux3f4sxcs9) is now a manager.
```

Here, we've used the private IP address of the manager (10.10.10.2), which is within the same network as the worker nodes' IP addresses (10.10.10.21, 10.10.10.22, and 10.10.10.23).

To add a worker to this Swarm, run the following command:

```
$ docker swarm join --token SWMTKN-1-4w74yys9pihkl9qhmdrk0zlqtca9xrihan36cktdt2du251qxm-bw60iu884vettl2ay65m0y1uy 10.10.10.2:2377
This node joined a swarm as a worker.
```

The join token was printed when you initialized the Swarm on the first manager node. If you no longer have it, running docker swarm join-token worker on a manager prints the full join command again. Run the same command on each of the worker nodes: worker01, worker02, and worker03.

Finally, check the list of nodes from the manager node:

```
vagrant@manager:~$ docker node ls
ID                            HOSTNAME   STATUS    AVAILABILITY   MANAGER STATUS   ENGINE VERSION
r9xym5ymmzmm7e7ux3f4sxcs9 *   manager    Ready     Active         Leader           20.10.12
r1l8jn1ot24hfzs0f7e9te5u3     worker01   Ready     Active                          20.10.12
wi7uq4jpcoh8rd7hlej6xuc4b     worker02   Ready     Active                          20.10.12
p7paeu2d72rqu4dr1dllvcdys     worker03   Ready     Active                          20.10.12
```

So far, so good.
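On four nodes the node list is easy to check by eye. For scripted checks, docker node ls accepts a Go-template `--format` option. The sketch below assumes output produced with `--format '{{.Hostname}}|{{.Status}}|{{.Availability}}|{{.ManagerStatus}}'` (the pipe separator is our own choice) and summarizes it. `summarize_nodes` is a hypothetical helper, not part of Docker.

```python
def summarize_nodes(listing: str) -> dict:
    """Summarize `docker node ls` output formatted as Hostname|Status|Availability|ManagerStatus."""
    summary = {"leader": None, "managers": [], "workers": [], "not_ready": []}
    for line in listing.strip().splitlines():
        hostname, status, _availability, manager_status = line.split("|")
        if manager_status:
            # Managers report Leader, Reachable or Unreachable; workers leave it empty.
            summary["managers"].append(hostname)
            if manager_status == "Leader":
                summary["leader"] = hostname
        else:
            summary["workers"].append(hostname)
        if status != "Ready":
            summary["not_ready"].append(hostname)
    return summary


# Sample text mirroring the node list in this walkthrough.
sample = """\
manager|Ready|Active|Leader
worker01|Ready|Active|
worker02|Ready|Active|
worker03|Ready|Active|
"""
print(summarize_nodes(sample))
```

On a manager node, you could obtain the real text with `subprocess.run(["docker", "node", "ls", "--format", "{{.Hostname}}|{{.Status}}|{{.Availability}}|{{.ManagerStatus}}"], capture_output=True, text=True, check=True).stdout`.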

Deploying Services to a Swarm

To deploy a service to a Swarm, use the docker service create command. You can specify parameters such as the number of replicas, or publish service ports outside of the Swarm. Service commands must be run on a manager node. Run the following command on the manager to deploy 8 NGINX containers spread across all nodes:

```
vagrant@manager:~$ docker service create --name my_nginx --replicas 8 --publish published=8080,target=80 nginx
```

You can then check the running containers on any node and verify that NGINX responds at localhost:8080:

```
vagrant@worker02:~$ docker ps
CONTAINER ID   IMAGE          COMMAND                  CREATED              STATUS              PORTS     NAMES
de396992a942   nginx:latest   "/docker-entrypoint.…"   About a minute ago   Up About a minute   80/tcp    my_nginx.2.enp8yy55f0j1u1y8tm44lpxjq
935eea500ce6   nginx:latest   "/docker-entrypoint.…"   About a minute ago   Up About a minute   80/tcp    my_nginx.6.f8zl2oh530rvfe15cr6fcmae0
```
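Instead of running docker ps on every node, you can confirm from the manager how the 8 replicas were spread. `docker service ps my_nginx --filter desired-state=running --format '{{.Node}}'` prints the node of each running task, one per line. The hypothetical `task_spread` helper below tallies that text. The sample input is made up for illustration and is not captured output.

```python
from collections import Counter


def task_spread(node_column: str, nodes: list[str]) -> dict:
    """Tally running tasks per node from one-hostname-per-line text."""
    counts = Counter(line.strip() for line in node_column.splitlines() if line.strip())
    per_node = {node: counts.get(node, 0) for node in nodes}
    return {
        "per_node": per_node,
        # Nodes that ended up with no task at all.
        "idle_nodes": [node for node, n in per_node.items() if n == 0],
        # Difference between the busiest and the least busy node.
        "skew": max(per_node.values(), default=0) - min(per_node.values(), default=0),
    }


# Hypothetical sample: 8 running tasks, as the command above might print them.
sample = "manager\nworker02\nworker01\nworker03\nworker02\nmanager\nworker01\nworker03\n"
print(task_spread(sample, ["manager", "worker01", "worker02", "worker03"]))
```

A skew of 0 or 1 with no idle nodes means the replicas are spread across every node, as the two my_nginx containers on worker02 above suggest.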

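You can also use the docker stack command with a YAML specification file (stack.yml) that describes the services you want to deploy. For example, the previous command is equivalent to the following spec. Because both approaches publish port 8080, first remove the service created above (docker service rm my_nginx) so that the stack can bind the port: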

```yaml
# stack.yml
version: '3.7'

services:
  my_nginx:
    image: nginx:latest
    deploy:
      replicas: 8
    ports:
      - "8080:80"   # publish port 8080 on the Swarm, forward to NGINX's port 80
```

Deploy the stack and check its services:

```
vagrant@manager:~$ docker stack deploy -c stack.yml my_nginx
vagrant@manager:~$ docker stack services my_nginx
ID             NAME                MODE         REPLICAS   IMAGE          PORTS
l8op85m1kaoy   my_nginx_my_nginx   replicated   8/8        nginx:latest   *:8080->80/tcp
```

Other Useful Commands

There are some useful commands you can use while in Swarm mode. Let’s take a brief look at them:

Inspecting a node status: You can use the docker node inspect command to review node information:

```
vagrant@manager:~$ docker node inspect worker02
[
    {
        "ID": "wi7uq4jpcoh8rd7hlej6xuc4b",
        "Version": {
            "Index": 35
        },
        "CreatedAt": "2023-02-15T12:28:39.485382607Z",
        "UpdatedAt": "2023-02-16T10:16:47.28165702Z",
        …
```

Demoting a manager node to a worker: You can demote a manager to a worker using the docker node demote <manager_node_id> command.

Promoting a worker node to a manager: On the other hand, you can promote a worker node to a manager node using the docker node promote command:

```
vagrant@manager:~$ docker node promote worker01
Node worker01 promoted to a manager in the swarm.
vagrant@manager:~$ docker node ls
ID                            HOSTNAME   STATUS    AVAILABILITY   MANAGER STATUS   ENGINE VERSION
r9xym5ymmzmm7e7ux3f4sxcs9 *   manager    Ready     Active         Leader           20.10.12
r1l8jn1ot24hfzs0f7e9te5u3     worker01   Ready     Active         Reachable        20.10.12
wi7uq4jpcoh8rd7hlej6xuc4b     worker02   Ready     Active                          20.10.12
p7paeu2d72rqu4dr1dllvcdys     worker03   Ready     Active                          20.10.12
```

Draining a node: When you drain a node, Swarm stops the tasks running on it and schedules replacement tasks on the remaining active nodes. The drained node receives no new tasks. This is useful for planned maintenance such as node upgrades. It is also useful for keeping a node out of rotation until you have confirmed that it is healthy enough to accept new workloads:

```
vagrant@manager:~$ docker node update --availability drain worker02
vagrant@manager:~$ docker node inspect worker02 --format '{{ .Spec.Availability }}'
drain
```

You can return the node to an active state by running the following command:

```
vagrant@manager:~$ docker node update --availability active worker02
worker02
```

Conclusion

That concludes our brief overview of Docker Swarm mode and its operational capabilities. Docker Swarm is a compelling alternative to K8s because it offers simplicity, automatic load balancing, and a smooth setup. Since Docker Swarm mode is built into Docker, you can start experimenting with it straight away, without having to learn additional technologies.

Overall, Docker Swarm mode makes the deployment of highly available replicated services easier and more efficient.