While auditing the Kubernetes source code, I recently discovered an issue (CVE-2020-8563) that may cause sensitive data leakage.

You are affected by CVE-2020-8563 if you run a Kubernetes cluster on vSphere, with vSphere enabled as the cloud provider and the logging level set to 4 or above. In that case, your vSphere user credentials are leaked into the cloud-controller-manager’s log. Anyone with access to this log can read them and impersonate your vSphere user, compromising your whole infrastructure.

In this article, you’ll learn what the issue is, which parts of Kubernetes are affected, and how to mitigate it.

Preliminaries

Let’s start by listing the Kubernetes components that either cause, or are impacted by the issue.

- Kube-controller-manager: The Kubernetes controller manager combines the core controllers that watch for state updates and make changes to the cluster accordingly. The controllers that currently ship with Kubernetes include:
  - Replication controller: Maintains the correct number of pods for every replication controller object in the system.
  - Node controller: Monitors changes to nodes.
  - Endpoints controller: Populates the Endpoints object, which joins Services and Pods.
  - Service account and token controllers: Manage service accounts and API access tokens for namespaces.

- Cloud-controller-manager: Introduced in v1.6, it runs controllers to interact with the underlying cloud providers. This is an attempt to decouple the cloud vendor code from the Kubernetes code.

- Informer/SharedInformer: The key component of a controller. An informer watches for changes to the current state of Kubernetes objects and sends events to a workqueue, from which worker(s) pop them for processing.

- Secret: Kubernetes Secrets let you store and manage sensitive information, such as passwords, OAuth tokens, and SSH keys. Storing confidential information in a Secret is safer and more flexible than putting it verbatim in a Pod definition or in a container image.
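The informer and workqueue roles described above can be illustrated with a small, dependency-free Go model. This is a sketch, not client-go: Event, EventHandlerFuncs, dispatch, process, and run are hypothetical names, EventHandlerFuncs only loosely mirrors the shape of client-go's ResourceEventHandlerFuncs, and a buffered channel stands in for the real workqueue (which, unlike this channel, also deduplicates and rate-limits keys).

```go
package main

import "fmt"

// EventType distinguishes the notifications the toy informer delivers.
type EventType int

const (
	Added EventType = iota
	Updated
)

// Event is a simplified stand-in for an informer notification.
type Event struct {
	Type EventType
	Key  string // "namespace/name" of the changed object
}

// EventHandlerFuncs holds optional callbacks per event type, loosely
// modeled on client-go's ResourceEventHandlerFuncs (hypothetical type).
type EventHandlerFuncs struct {
	AddFunc    func(key string)
	UpdateFunc func(key string)
}

// dispatch plays the informer's role: it routes each observed change
// to the matching registered callback, if one is set.
func dispatch(events []Event, h EventHandlerFuncs) {
	for _, e := range events {
		switch {
		case e.Type == Added && h.AddFunc != nil:
			h.AddFunc(e.Key)
		case e.Type == Updated && h.UpdateFunc != nil:
			h.UpdateFunc(e.Key)
		}
	}
}

// process drains the queue in FIFO order, as a controller worker would.
func process(queue chan string) []string {
	var handled []string
	for key := range queue {
		handled = append(handled, "reconciled "+key)
	}
	return handled
}

// run wires the pieces together: handlers enqueue keys, a worker pops them.
func run(events []Event) []string {
	queue := make(chan string, len(events)) // stand-in for a workqueue
	enqueue := func(key string) { queue <- key }
	dispatch(events, EventHandlerFuncs{AddFunc: enqueue, UpdateFunc: enqueue})
	close(queue) // this toy run has a fixed set of events
	return process(queue)
}

func main() {
	out := run([]Event{
		{Type: Added, Key: "kube-system/vsphere-secret"},
		{Type: Updated, Key: "kube-system/vsphere-secret"},
	})
	for _, line := range out {
		fmt.Println(line)
	}
}
```

The key design point carried over from the real pattern is the decoupling: handlers only enqueue a key, and a separate worker does the actual processing.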

The CVE-2020-8563 issue

The issue with CVE-2020-8563 is as follows: if you have a Kubernetes cluster running on vSphere and the cloud-controller-manager logging level is set to 4 or above, cloud-controller-manager logs the vSphere credentials.
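The log level matters because klog only writes a klog.V(n) message when the component runs with its -v flag set to n or higher. The toy logger below models that gating rule so you can see why a level-4 message stays silent at the common level 2 but appears at level 4. verboseLogger and its methods are hypothetical names for this sketch, not klog's API, and the credential string is made up.

```go
package main

import (
	"fmt"
	"strings"
)

// verboseLogger is a toy model of klog-style verbosity gating:
// a message logged at level n is kept only if n <= threshold.
type verboseLogger struct {
	threshold int      // value of the component's -v flag
	lines     []string // captured output instead of writing to stderr
}

// V reports whether messages at the given level would be emitted.
func (l *verboseLogger) V(level int) bool {
	return level <= l.threshold
}

// Infof records a formatted message if its level passes the threshold.
func (l *verboseLogger) Infof(level int, format string, args ...interface{}) {
	if l.V(level) {
		l.lines = append(l.lines, fmt.Sprintf(format, args...))
	}
}

func main() {
	for _, v := range []int{2, 4} {
		l := &verboseLogger{threshold: v}
		// Same verbosity as the SecretAdded call site: a level-4 message.
		l.Infof(4, "secret added: %s", "password: s3cret")
		fmt.Printf("-v=%d -> %s\n", v, strings.Join(l.lines, "; "))
	}
}
```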

This section walks through the relevant code paths of Kubernetes to explain what went wrong.

In the Kubernetes source code, you will find a folder called “legacy-cloud-providers.” It contains the legacy code for the cloud controllers: AWS, Azure, GCE, OpenStack, and vSphere. Although they are deprecated and will be removed in the future, they are still in use today. For example, if you use kops to create a Kubernetes cluster on AWS, you will see the following warning about the cloud provider deprecation:

```
W0831 22:04:04.653364 1 plugins.go:115] WARNING: aws built-in cloud provider is now deprecated. The AWS provider is deprecated and will be removed in a future release
```

As the warning reveals, you are most likely using the legacy cloud provider.

The credentials are leaked when cloud-controller-manager starts. So let’s follow the execution to find the lines that are actually logging them.

When cloud-controller-manager starts, it starts a set of controllers. Before starting them, it sets up informers on the cloud provider object:

```go
// startControllers starts the cloud specific controller loops.
func startControllers(c *cloudcontrollerconfig.CompletedConfig, stopCh <-chan struct{}, cloud cloudprovider.Interface, controllers map[string]initFunc) error {
	// Initialize the cloud provider with a reference to the clientBuilder
	cloud.Initialize(c.ClientBuilder, stopCh)
	// Set the informer on the user cloud object
	if informerUserCloud, ok := cloud.(cloudprovider.InformerUser); ok {
		informerUserCloud.SetInformers(c.SharedInformers)
	}

	for controllerName, initFn := range controllers {
		if !genericcontrollermanager.IsControllerEnabled(controllerName, ControllersDisabledByDefault, c.ComponentConfig.Generic.Controllers) {
			klog.Warningf("%q is disabled", controllerName)
			continue
		}

		klog.V(1).Infof("Starting %q", controllerName)
		_, started, err := initFn(c, cloud, stopCh)
		if err != nil {
			klog.Errorf("Error starting %q", controllerName)
			return err
		}
		if !started {
			klog.Warningf("Skipping %q", controllerName)
			continue
		}
		klog.Infof("Started %q", controllerName)
	...
```

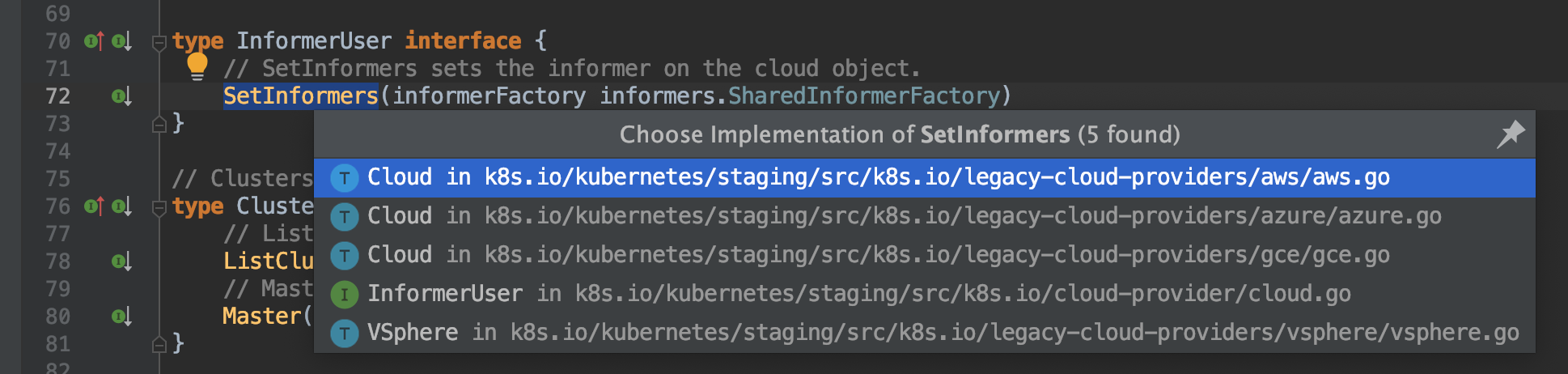

InformerUser is an interface that a cloud provider can optionally implement:

```go
type InformerUser interface {
	// SetInformers sets the informer on the cloud object.
	SetInformers(informerFactory informers.SharedInformerFactory)
}
```
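startControllers relies on Go's optional-interface idiom: a comma-ok type assertion checks at runtime whether the provider also implements InformerUser, and only then calls SetInformers. The sketch below reproduces that idiom with simplified, hypothetical types (Interface, InformerUser, fakeVSphere, fakeOther), using a string in place of the real SharedInformerFactory.

```go
package main

import "fmt"

// Interface stands in for cloudprovider.Interface.
type Interface interface {
	ProviderName() string
}

// InformerUser stands in for cloudprovider.InformerUser; the factory
// argument is simplified to a string for this sketch.
type InformerUser interface {
	SetInformers(factory string)
}

// fakeVSphere implements both interfaces, like the vSphere provider.
type fakeVSphere struct{ factory string }

func (v *fakeVSphere) ProviderName() string { return "vsphere" }

func (v *fakeVSphere) SetInformers(f string) { v.factory = f }

// fakeOther implements only the base interface.
type fakeOther struct{}

func (fakeOther) ProviderName() string { return "other" }

// wireInformers mirrors the check in startControllers: SetInformers is
// called only when the provider opts in by implementing InformerUser.
func wireInformers(cloud Interface, factory string) bool {
	if informerUserCloud, ok := cloud.(InformerUser); ok {
		informerUserCloud.SetInformers(factory)
		return true
	}
	return false
}

func main() {
	vs := &fakeVSphere{}
	fmt.Println(vs.ProviderName(), wireInformers(vs, "shared-informers"), vs.factory)
	fmt.Println(fakeOther{}.ProviderName(), wireInformers(fakeOther{}, "shared-informers"))
}
```

Because the check happens at runtime, a provider gains the secret-watching behavior simply by defining a SetInformers method; nothing else in startControllers has to change.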

Each cloud provider has its own implementation. If you look for the SetInformers function, you’ll find that the AWS, Azure, GCE, and vSphere cloud providers each implement it.

Below is vSphere’s implementation:

```go
// Initialize Node Informers
func (vs *VSphere) SetInformers(informerFactory informers.SharedInformerFactory) {
	if vs.cfg == nil {
		return
	}

	if vs.isSecretInfoProvided {
		secretCredentialManager := &SecretCredentialManager{
			SecretName:      vs.cfg.Global.SecretName,
			SecretNamespace: vs.cfg.Global.SecretNamespace,
			SecretLister:    informerFactory.Core().V1().Secrets().Lister(),
			Cache: &SecretCache{
				VirtualCenter: make(map[string]*Credential),
			},
		}
		if vs.isSecretManaged {
			klog.V(4).Infof("Setting up secret informers for vSphere Cloud Provider")
			secretInformer := informerFactory.Core().V1().Secrets().Informer()
			secretInformer.AddEventHandler(cache.ResourceEventHandlerFuncs{
				AddFunc:    vs.SecretAdded,
				UpdateFunc: vs.SecretUpdated,
			})
			klog.V(4).Infof("Secret informers in vSphere cloud provider initialized")
		}
	...
```

According to the code, if the attributes “secret-name” and “secret-namespace” are specified in the vsphere.conf file, “vs.isSecretInfoProvided” is true.

There is another, undocumented attribute, “secret-not-managed”, that can be configured in the vsphere.conf file. It defaults to false, so unless it is explicitly set, “vs.isSecretManaged” is true.

As a consequence, by default there is a secret informer that handles events such as secrets being created and updated.
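The two flags can be summarized as a small function of the configuration. This is a simplified model, assuming the flags are derived as described above (both secret-name and secret-namespace set for the first, secret-not-managed left unset for the second); globalConfig, secretFlags, and registersSecretInformer are hypothetical names that only loosely follow the vsphere.conf keys.

```go
package main

import "fmt"

// globalConfig models the relevant keys of vsphere.conf (hypothetical struct).
type globalConfig struct {
	SecretName       string // secret-name
	SecretNamespace  string // secret-namespace
	SecretNotManaged bool   // secret-not-managed (undocumented, defaults to false)
}

// secretFlags returns (isSecretInfoProvided, isSecretManaged).
func secretFlags(cfg globalConfig) (bool, bool) {
	infoProvided := cfg.SecretName != "" && cfg.SecretNamespace != ""
	managed := !cfg.SecretNotManaged
	return infoProvided, managed
}

// registersSecretInformer reports whether SetInformers would attach the
// SecretAdded/SecretUpdated handlers, i.e. whether both flags are true.
func registersSecretInformer(cfg globalConfig) bool {
	info, managed := secretFlags(cfg)
	return info && managed
}

func main() {
	cfg := globalConfig{SecretName: "vsphere-secret", SecretNamespace: "kube-system"}
	fmt.Println(registersSecretInformer(cfg)) // secret-not-managed left at its default
	cfg.SecretNotManaged = true
	fmt.Println(registersSecretInformer(cfg))
}
```

The takeaway is that the vulnerable handlers are registered by default; only the undocumented opt-out prevents it.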

We are getting close to the source of the issue…

In the “SecretAdded” function, we find the following:

```go
// Notification handler when credentials secret is added.
func (vs *VSphere) SecretAdded(obj interface{}) {
	secret, ok := obj.(*v1.Secret)
	if secret == nil || !ok {
		klog.Warningf("Unrecognized secret object %T", obj)
		return
	}

	if secret.Name != vs.cfg.Global.SecretName ||
		secret.Namespace != vs.cfg.Global.SecretNamespace {
		return
	}

	klog.V(4).Infof("secret added: %+v", obj)
	vs.refreshNodesForSecretChange()
}
```

The “obj” variable contains all of the secret’s information: its metadata, such as the secret name and namespace, and the actual secret data.

This includes the vSphere credentials. The secret that matches the configured vSphere secret name and namespace is logged as follows:

```
secret added: &Secret{ObjectMeta:{vsphere-secret kube-system /api/v1/namespaces/kube-system/secrets/vsphere-secret 97345a64-db69-4adb-9444-e00e7345fd46 737933 0 2020-09-03 11:20:30 -0700 PDT <nil> <nil> map[] map[kubectl.kubernetes.io/last-applied-configuration:{"apiVersion":"v1","kind":"Secret","metadata":{"annotations":{},"name":"vsphere-secret","namespace":"kube-system"},"stringData":{"vsphere.conf":"username: [email protected]\npassword: password"},"type":"Opaque"}
```

Further investigation shows that the same also happens inside the “SecretUpdated” function.

Impact of CVE-2020-8563

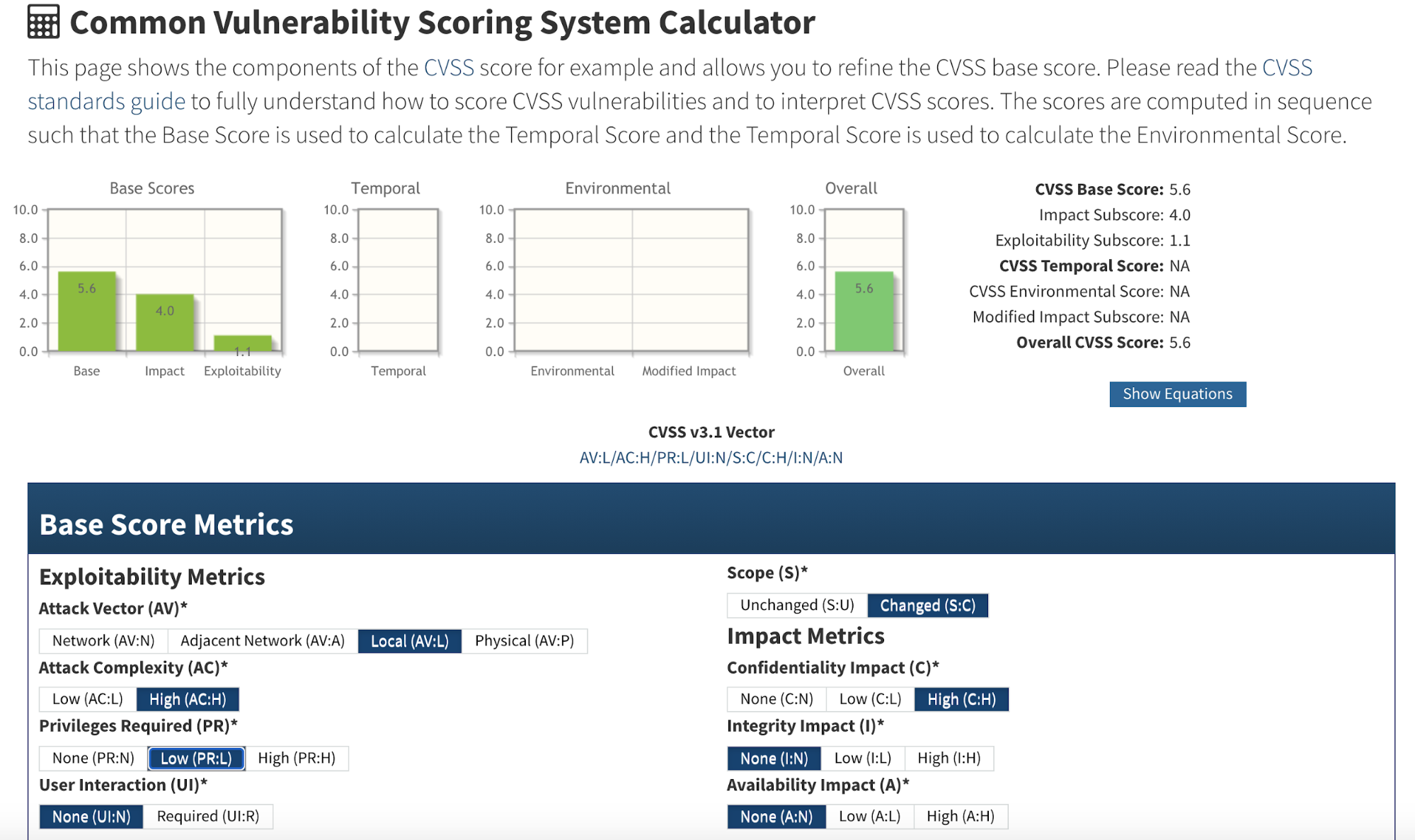

According to the CVSS scoring system, it scores 5.6, which is medium severity.

This score takes into account that, to trigger the leak, an attacker needs to find a way to set the log level of cloud-controller-manager to 4 or above. Since that requires privileged access to the node where the component runs, the severity is only medium.

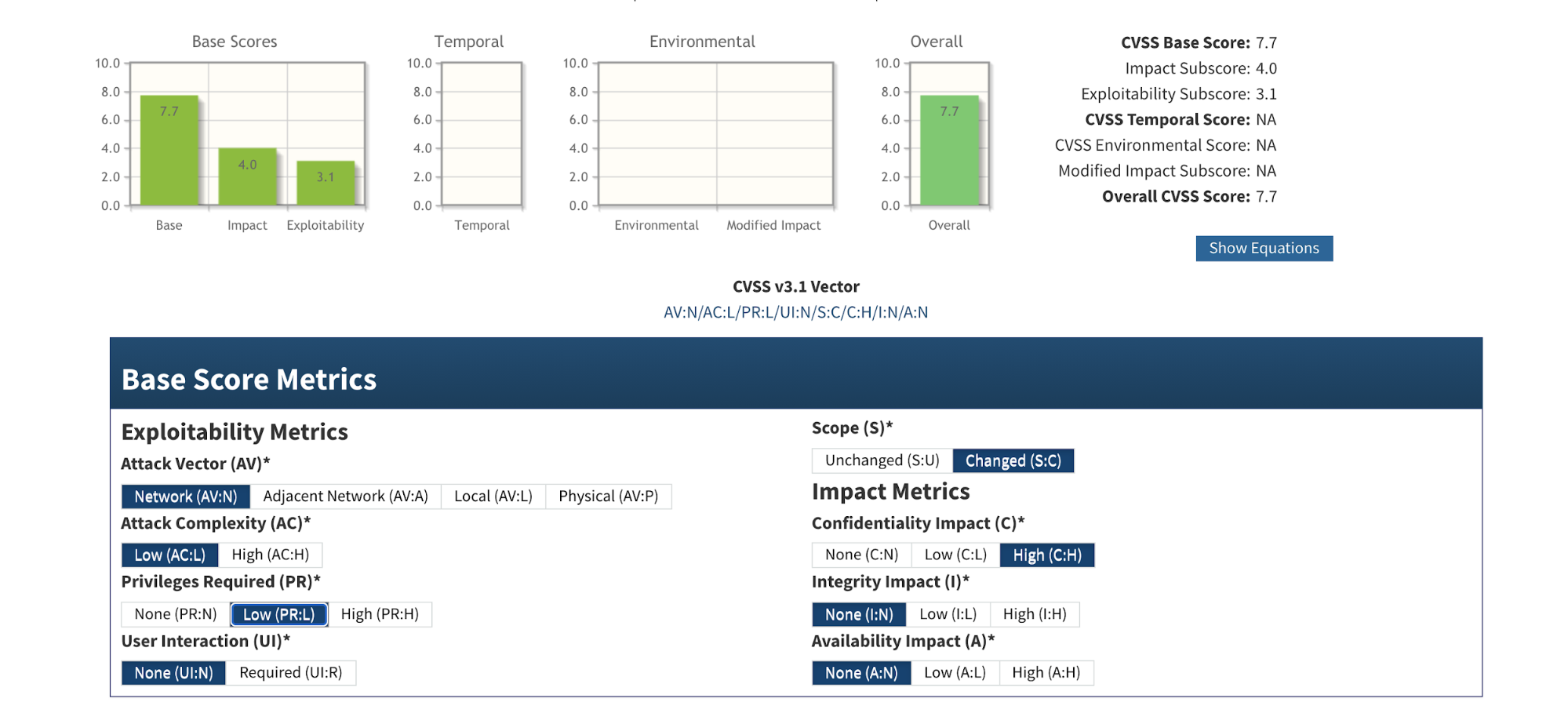

However, what if the log level of cloud-controller-manager was already set to 4 or above? For example, someone may have increased the log verbosity (via kubeadm) to help with troubleshooting.

In that case, the CVSS score increases to 7.7, which is high severity.

In this case, no special access is needed. Anyone with access to the cloud-controller-manager’s log can view the secrets.

To summarize, the impacted Kubernetes clusters have the following configuration:

- They run on vSphere.

- The logging level of cloud-controller-manager is set to 4 or above.

- They use a Kubernetes Secret to store the vSphere credentials.

Overall, a secret stored in clear text in a log archive is dangerous, as it could be exposed to any user or application with access to that archive. Anyone who has the vSphere credentials can act on behalf of the vSphere user. If the secret stores the vSphere administrator’s credentials (the most likely scenario), the entire cluster could be compromised.

Mitigating CVE-2020-8563

If you’re impacted by this CVE, you should change your vSphere password immediately, so that the leaked credentials can no longer be used to access your vSphere data center.

Even if cloud-controller-manager runs with its default log level, you should check whether other Kubernetes components, such as kube-apiserver and kube-controller-manager, are running with verbose logging.

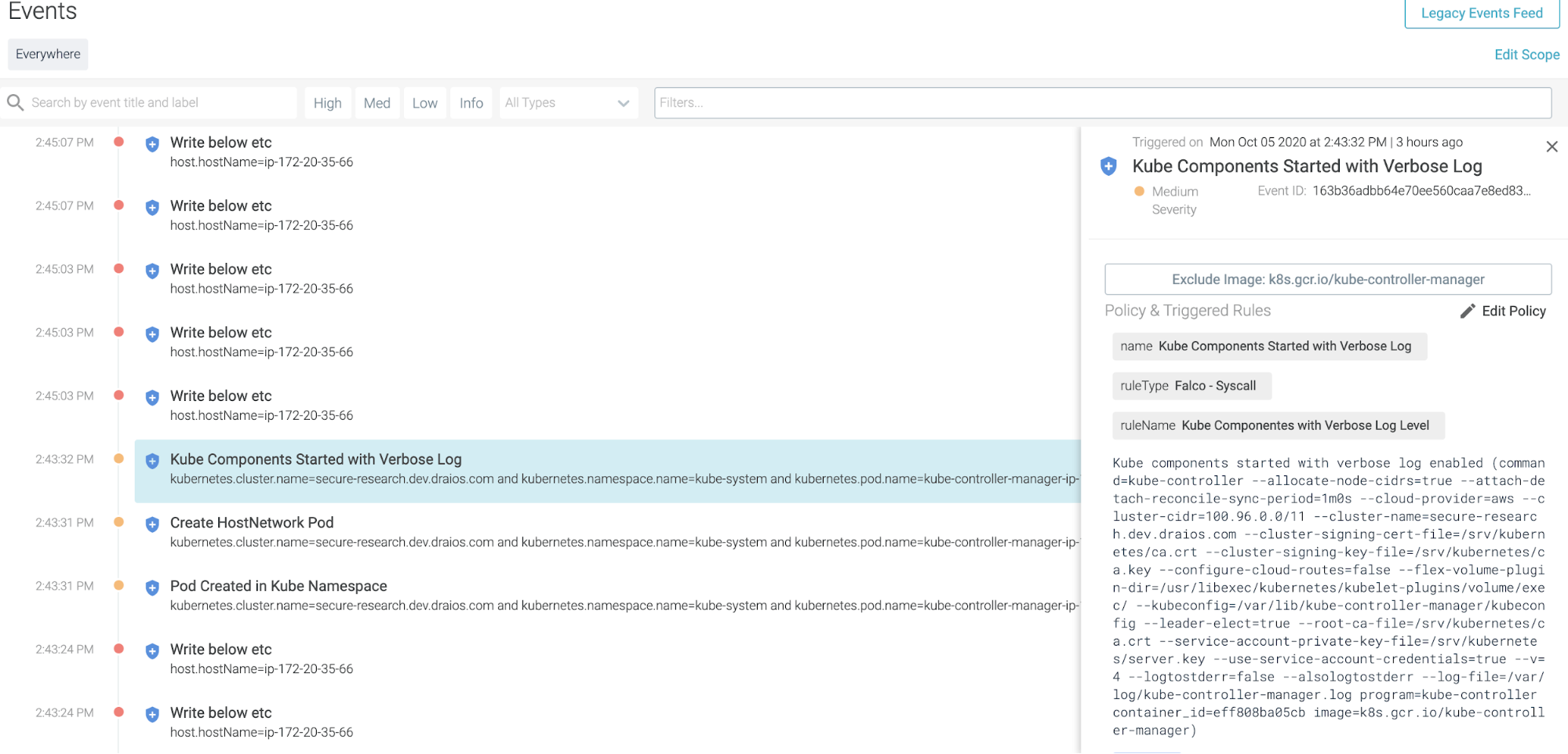

Falco, a CNCF incubating project, can help detect anomalous activity in cloud-native environments. The following Falco rule can help you detect whether you are impacted by CVE-2020-8563:

```yaml
- list: kube_controller_manager_image_list
  items:
    - "k8s.gcr.io/kube-controller-manager"

- macro: kube_controller_manager
  condition: (proc.name=kube-controller and container.image.repository in (kube_controller_manager_image_list))

- list: kube_apiserver_image_list
  items:
    - "k8s.gcr.io/kube-apiserver"

- macro: kube_apiserver
  condition: (proc.name=kube-apiserver and container.image.repository in (kube_apiserver_image_list))

- list: vsphere_cloud_manager_image_list
  items:
    - "gcr.io/cloud-provider-vsphere/cpi/release/manager"

- macro: vsphere_cloud_manager
  condition: (proc.name=vsphere-cloud-c and container.image.repository in (vsphere_cloud_manager_image_list))

# TODO: add more components like kube-proxy, kube-scheduler
- macro: kube_components
  condition: (kube_apiserver or kube_controller_manager or vsphere_cloud_manager)

# default log level is 2, any log level greater than 2 is considered verbose
- macro: verbose_log
  condition: ((evt.args contains "-v=" or evt.args contains "--v=") and not (evt.args contains "-v=0" or evt.args contains "-v=1" or evt.args contains "-v=2" or evt.args contains "--v=0" or evt.args contains "--v=1" or evt.args contains "--v=2"))

- rule: Kube Components Started with Verbose Log Level
  desc: Detected kube components like kube-apiserver, kube-proxy started with verbose log enabled
  condition: spawned_process and kube_components and verbose_log
  output: Kube components started with verbose log enabled (command=%proc.cmdline program=%proc.name container_id=%container.id image=%container.image.repository)
  priority: WARNING
  tags:
    - "process"
```

This rule detects kube-apiserver, kube-controller-manager, and the vSphere cloud controller manager starting with a log level above 2.
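The rule’s verbose_log macro relies on substring matching, which misses the space-separated spelling (for example "--v 4") and, because "-v=10" contains "-v=1", treats very high levels as quiet. If you also inspect command lines outside Falco, a stricter check can parse the numeric value. The Go sketch below is a hypothetical helper, not part of Falco, and assumes the "-v=N", "--v=N", "-v N", and "--v N" spellings are the ones in use.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// verbosity extracts the log level from a component's arguments.
// It returns -1 when no -v/--v flag with a numeric value is present.
func verbosity(args []string) int {
	level := -1
	for i := 0; i < len(args); i++ {
		a := args[i]
		var val string
		switch {
		case strings.HasPrefix(a, "--v="):
			val = strings.TrimPrefix(a, "--v=")
		case strings.HasPrefix(a, "-v="):
			val = strings.TrimPrefix(a, "-v=")
		case (a == "-v" || a == "--v") && i+1 < len(args):
			i++ // the value is the next argument
			val = args[i]
		default:
			continue
		}
		if n, err := strconv.Atoi(val); err == nil {
			level = n // the last occurrence wins, as with repeated flags
		}
	}
	return level
}

// isVerbose applies the same threshold as the rule: anything above 2.
func isVerbose(args []string) bool {
	return verbosity(args) > 2
}

func main() {
	cmd := []string{"kube-controller-manager", "--leader-elect=true", "--v", "10"}
	fmt.Println(verbosity(cmd), isVerbose(cmd))
}
```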

In Sysdig Secure (which uses Falco underneath), a match for this rule shows up as a security event.

Conclusion

You might already be following best practices to harden kube-apiserver, the brain of the Kubernetes cluster, and etcd, which stores all of the cluster’s critical information. However, if there is one thing CVE-2020-8563 teaches us, it’s that we shouldn’t stop there: every Kubernetes component needs to be secured.

Sysdig Secure can help you benchmark your Kubernetes cluster and check whether it is compliant with security standards like PCI or NIST. Try it today!