Discover how applying a quick set of file integrity monitoring best practices will help you detect the tampering of critical file systems in your cloud environment.

An attacker can escape a container and gain access to your system by modifying certain files, like the runc binary (CVE-2019-5736). By monitoring these files, you can detect unauthorized modifications and react immediately to such attempts.

File integrity monitoring (FIM) is an ongoing process that gives you visibility into all of your sensitive files by detecting changes to, and tampering with, critical system files or directories that would indicate an ongoing attack. Knowing whether any of these files have been tampered with is critical to keeping your infrastructure secure: it helps you detect attacks at an early stage and investigate them afterwards.

FIM is a core requirement in many compliance standards, like PCI/DSS, NIST SP 800-53, ISO 27001, GDPR, and HIPAA, as well as in security best practice frameworks like the CIS Distribution Independent Linux Benchmark.

In an ideal scenario, any change made to a sensitive file by an unauthorized actor should be detected and reported immediately.

Following these best practices for the tools you use in your infrastructure will help you detect this kind of attack.

This article dives into a curated list of FIM best practices, focused on host and container security.

These file integrity monitoring best practices are grouped by topic, but please remember that FIM is just one piece of the whole security process.

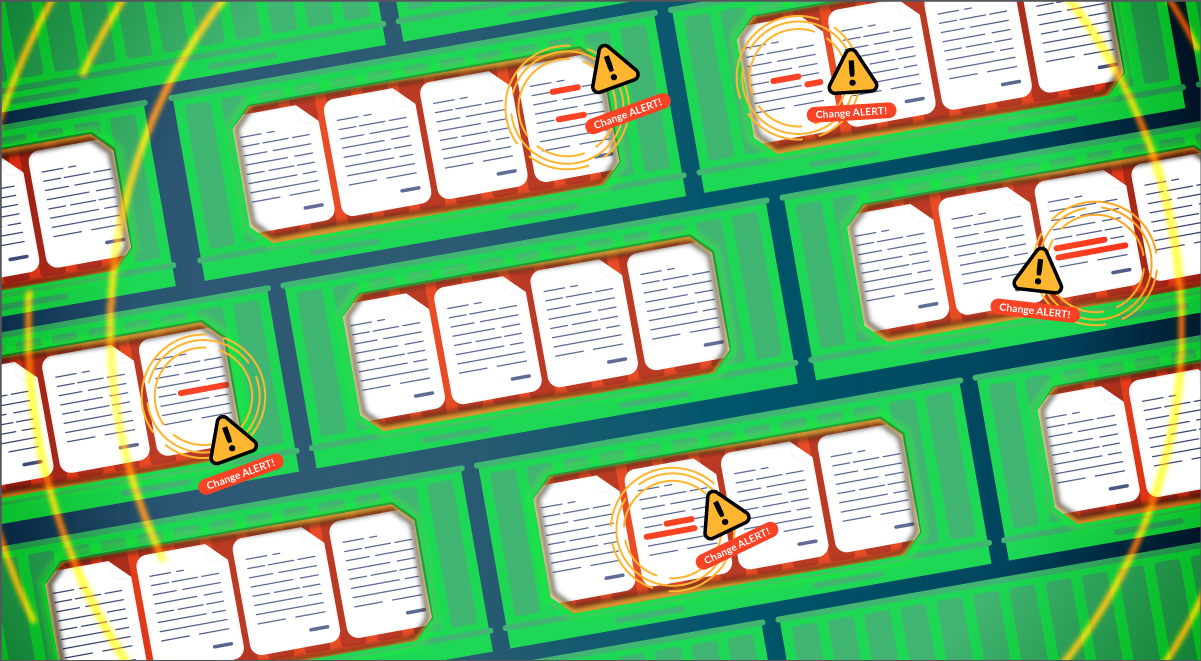

#1 Prepare an asset inventory

Maintaining an asset inventory is the first step in securing your system. You cannot secure what you can't see, and thus, having a list of files and directories that are important for you is the very first best practice you must implement in your infrastructure.

A word of caution here: too broad a scope will generate too many alerts, leading to false positives and alert fatigue, and rendering your whole FIM setup untrustworthy and worthless.

#1.1 Scope which files and directories need to be monitored

Create and maintain a comprehensive list of the files and directories that need monitoring. These assets include system and configuration files, as well as files containing sensitive information. While many of these files will depend on the actual business use case, there is a common set of assets that you will certainly want to monitor:

You must also take into consideration assets living in your cloud infrastructure, like log files stored in an S3 bucket if you are using AWS.

#1.2 Define appropriate permissions

Define appropriate write permissions for the files and directories in your asset list. This way, you can detect when an unauthorized user modifies these files.

This is especially critical in containers: when you have write permissions on a volume exposed from the host, you can create a symlink to another directory or file on the host, which is a first step toward escaping the container.

At this point, it is important to differentiate a regular file write from a file destruction, since many of the files in your inventory will need to be routinely appended to, but are not expected to be truncated or deleted. Examples of these files would be system, shell, or application log files.

Each file or directory needs to be associated with a modification policy. For example, files under /bin or /sbin should not be modified, new files should not be created under these directories at all, and mysql.log is expected to grow but never decrease in size.
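As a rough illustration of what such a modification policy could look like in code, here is a minimal Python sketch. The `Policy`, `FileEvent`, and `INVENTORY` names are hypothetical, as is the `/var/log/mysql.log` location; a real FIM tool would express the same idea in its own policy language.

```python
from dataclasses import dataclass
from enum import Enum
from pathlib import PurePosixPath
from typing import Optional


class Policy(Enum):
    IMMUTABLE = "immutable"      # no writes, no new files, no deletions
    APPEND_ONLY = "append_only"  # may grow, must never shrink or disappear


@dataclass(frozen=True)
class FileEvent:
    path: str
    kind: str          # "create", "modify", or "delete"
    old_size: int = 0  # size in bytes before the event
    new_size: int = 0  # size in bytes after the event


# Hypothetical inventory: path (file or directory) -> modification policy.
INVENTORY = {
    "/bin": Policy.IMMUTABLE,
    "/sbin": Policy.IMMUTABLE,
    "/var/log/mysql.log": Policy.APPEND_ONLY,  # assumed log location
}


def policy_for(path: str) -> Optional[Policy]:
    """Return the policy of the most specific inventory entry covering path."""
    target = PurePosixPath(path)
    best = None
    for entry, policy in INVENTORY.items():
        entry_path = PurePosixPath(entry)
        if target == entry_path or entry_path in target.parents:
            if best is None or len(entry_path.parts) > len(best[0].parts):
                best = (entry_path, policy)
    return best[1] if best else None


def violates(event: FileEvent) -> bool:
    """Return True when the event breaks the policy attached to its path."""
    policy = policy_for(event.path)
    if policy is None:
        return False  # the path is outside the monitored scope
    if policy is Policy.IMMUTABLE:
        return True   # any create, modify, or delete is a violation
    # APPEND_ONLY: deletion and truncation are violations, growth is fine.
    if event.kind == "delete":
        return True
    return event.new_size < event.old_size
```

With this sketch, `violates(FileEvent("/sbin/newtool", "create"))` flags a new file under /sbin, while a growing mysql.log passes and a truncated one does not.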

#1.3 Define a baseline

Calculate the checksums of your assets and store them in a safe location to be continually checked against. Since the checksum is the fingerprint of the actual contents of a file, any unauthorized deviation from the fingerprint's stored value means the file has been tampered with.
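A baseline of this kind can be sketched in a few lines of Python. This is only an illustration under assumptions: SHA-256 is chosen here as the checksum algorithm, the function names are made up for the example, and storing the resulting dictionary somewhere tamper-proof is left out.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_baseline(paths):
    """Map each asset path to the checksum of its current contents."""
    return {str(p): sha256_of(Path(p)) for p in paths}


def tampered(baseline):
    """Return the paths that are missing or whose checksum no longer matches."""
    changed = []
    for path, expected in baseline.items():
        p = Path(path)
        if not p.is_file() or sha256_of(p) != expected:
            changed.append(path)
    return changed
```

Calling `build_baseline` once on a known-good system and `tampered` on a schedule gives a simple periodic check; it does not tell you who changed a file or when, which is where runtime detection comes in.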

#2 Detect drift

Using the information from your asset inventory (files and directories list, along with their permissions and checksum information), monitor your system for any deviations. You can detect drift early on in your CI/CD pipeline, and at runtime in quasi real-time by using runtime policies.

#2.1 Shift left with image scanning policies

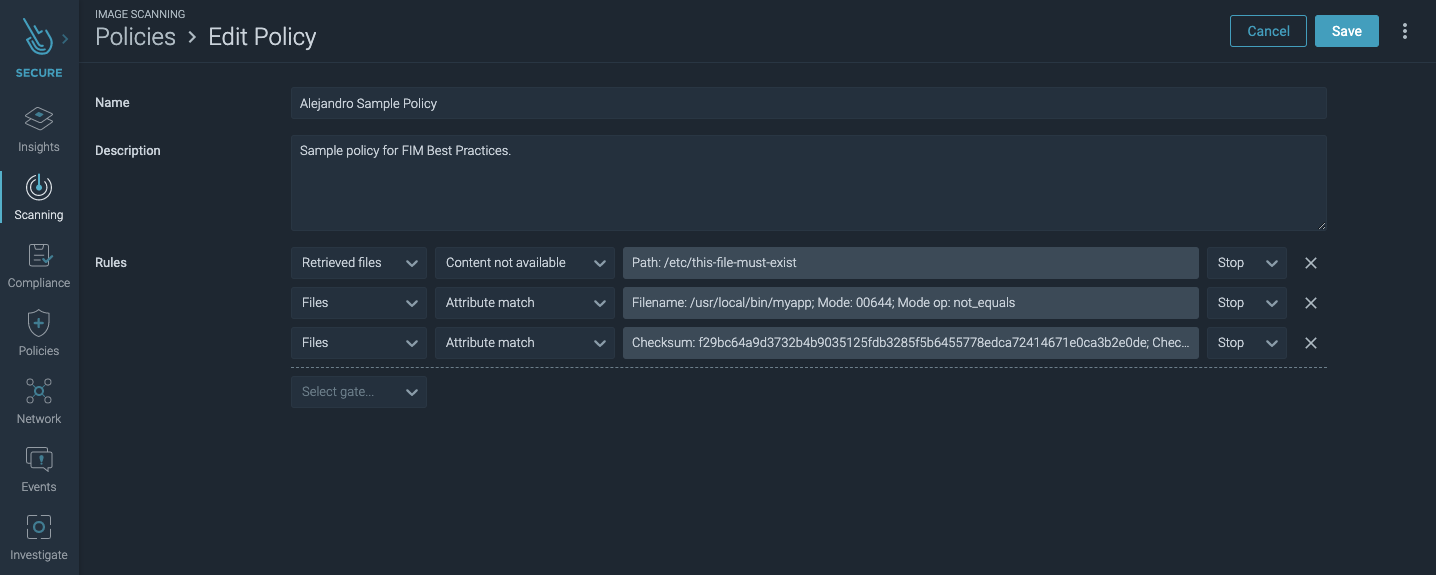

Store the relevant data from your asset inventory and incorporate it into your image scanning policy. This will allow you to shift your security left by rejecting builds in your CI/CD pipelines.

Tailor your image scanning policy to check for:

- The existence of files or directories that should not be there and, conversely, the absence of a file or directory that should.

- The presence of file permissions that should not be there, specifically write, execute, SUID, and SGID permissions, as well as the sticky bit.

- Any discrepancy in the checksums of specific files compared with the baseline.
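The permission check in the list above can be illustrated with Python's standard `stat` module. This is a sketch that assumes the image has already been unpacked into a local directory (`rootfs`); the function names and the `allowed` parameter are hypothetical and do not correspond to any particular image scanner.

```python
import os
import stat

# Special permission bits an image scanning policy may want to flag.
SPECIAL_BITS = {
    stat.S_ISUID: "suid",
    stat.S_ISGID: "sgid",
    stat.S_ISVTX: "sticky",
}


def special_bits(mode: int):
    """Return the names of the special bits set in a file mode."""
    return [name for bit, name in SPECIAL_BITS.items() if mode & bit]


def scan_rootfs(rootfs: str, allowed=frozenset()):
    """Walk an unpacked image filesystem and report unexpected special bits.

    `allowed` holds image-relative paths (for example "/tmp") where the
    bits are expected and should not be reported.
    """
    findings = {}
    for dirpath, dirnames, filenames in os.walk(rootfs):
        for name in dirnames + filenames:
            full = os.path.join(dirpath, name)
            rel = "/" + os.path.relpath(full, rootfs)
            bits = special_bits(os.lstat(full).st_mode)
            if bits and rel not in allowed:
                findings[rel] = bits
    return findings
```

A pipeline step could fail the build whenever `scan_rootfs` returns a non-empty result.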

If you are interested in image scanning, don't miss these Dockerfile best practices.

If your inventory is well defined, creating these policies will be straightforward, and the file integrity monitoring in your pipeline will stop FIM-related incidents in their tracks.

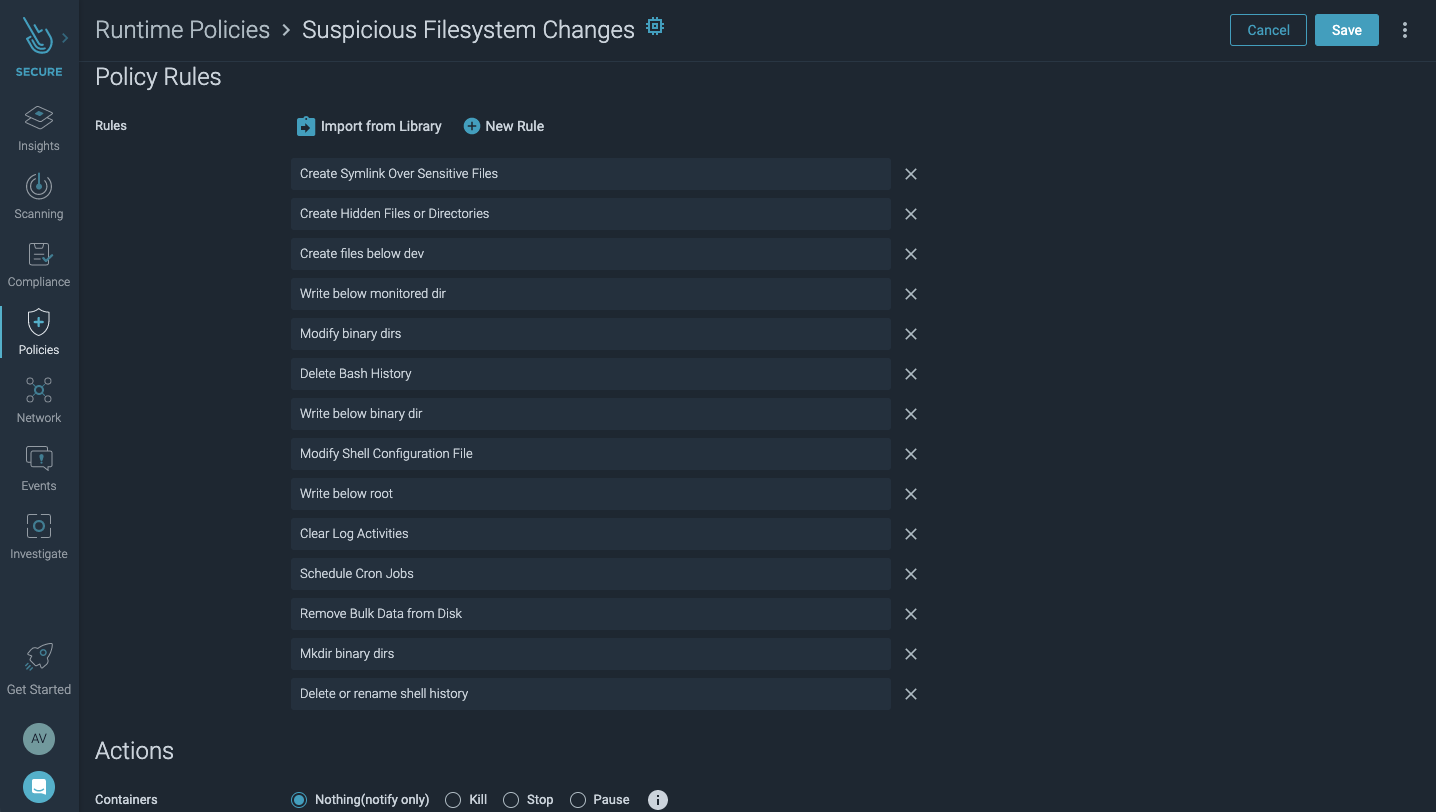

#2.2 Detect real-time threats with runtime policies

Implement runtime drift detection policies that will alert you to any unauthorized changes to the filesystem. Customize your runtime policy to look for the following common file integrity monitoring use cases:

- The creation, renaming, or deletion of files or directories.

- The modification of file or directory attributes, like permissions, ownership, and inheritance.

- The modification of files inside a container.

- The modification of files below sensitive paths (/bin, /etc, /root, …).

- The deletion of shell history.
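To make these use cases concrete, the sketch below compares two filesystem snapshots and classifies the differences. Note that this is a polling approximation written for illustration, not the syscall-based detection that runtime security tools perform: it cannot tell who made a change, and a rename shows up as a deletion plus a creation. The `Meta`, `snapshot`, and `diff` names are hypothetical.

```python
import os
import stat
from typing import Dict, NamedTuple


class Meta(NamedTuple):
    mode: int      # full st_mode: file type plus permission bits
    uid: int
    gid: int
    size: int
    mtime_ns: int


def snapshot(root: str) -> Dict[str, Meta]:
    """Record metadata for every file and directory below root."""
    snap = {}
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            st = os.lstat(path)  # lstat: do not follow symlinks
            snap[path] = Meta(st.st_mode, st.st_uid, st.st_gid,
                              st.st_size, st.st_mtime_ns)
    return snap


def diff(before: Dict[str, Meta], after: Dict[str, Meta]):
    """Return (event, path) tuples describing drift between two snapshots."""
    events = []
    events += [("created", p) for p in sorted(after.keys() - before.keys())]
    events += [("deleted", p) for p in sorted(before.keys() - after.keys())]
    for path in sorted(before.keys() & after.keys()):
        old, new = before[path], after[path]
        # Permission or ownership changes.
        if (old.mode, old.uid, old.gid) != (new.mode, new.uid, new.gid):
            events.append(("attributes_changed", path))
        # Content changes, checked for regular files only, because a
        # directory's mtime changes whenever an entry is added or removed.
        if stat.S_ISREG(new.mode) and \
                (old.size, old.mtime_ns) != (new.size, new.mtime_ns):
            events.append(("modified", path))
    return events
```

Running `snapshot` on a sensitive path at intervals and feeding consecutive results to `diff` yields a stream of events that a policy can then accept or alert on.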

#3 Notify, investigate, and respond

You can now detect drift in your asset inventory by means of image scanning policies shifting your file integrity monitoring left, and runtime policies providing real-time detection. The circle won't be completed unless you are properly notified and provided with all the necessary data to appropriately respond to the incident.

#3.1 Implement an automated alert and response mechanism

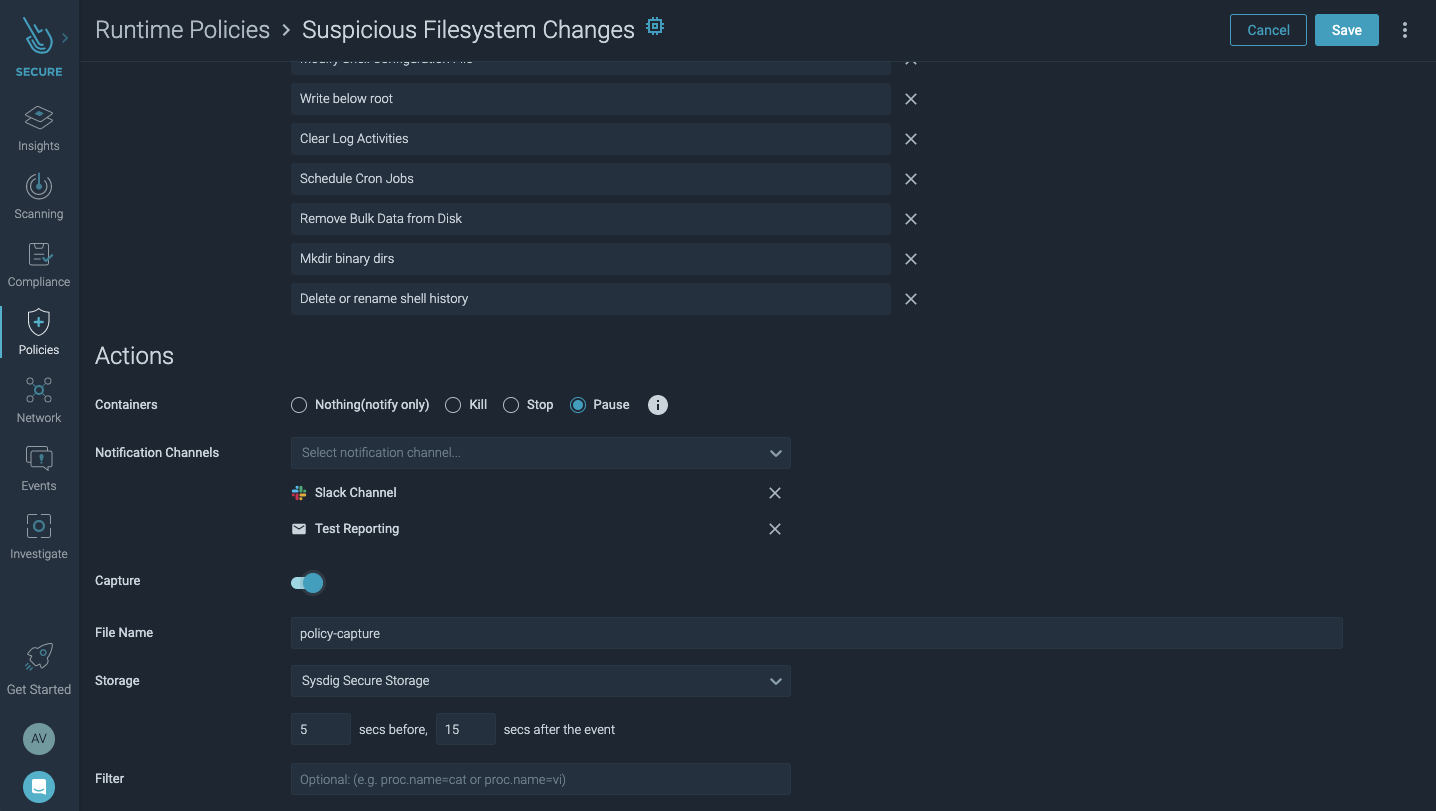

Automate remediation responses in your runtime policies by:

- Pausing and quarantining, or killing, the container to stop the attack.

- Sending a notification via Slack, JIRA, Email, PagerDuty, SNS, etc.

#3.2 Gather forensics data for further investigation

An attacker will typically make use of tactics and techniques that have been proven to be able to evade traditional security and forensics tools. To overcome this, gathering low level syscall data properly enriched with container / Kubernetes metadata can provide the ultimate forensics data you can rely on. You can implement this in your runtime policies.

You can then use the open source tool Sysdig Inspect to analyze everything that happened right before and after a suspicious file activity.

#4 Compliance and Benchmarks

As mentioned before, file integrity monitoring is necessary for meeting important compliance standards like PCI / DSS 3.2.1, which mandates the following:

By detecting the modification of such log files by unauthorized users or processes, you can ensure compliance with PCI control 10.5.5. By implementing the alert and response mechanism in the runtime policy, you comply with PCI control 11.5.1. These two are the only controls in PCI / DSS 3.2.1 that concern file integrity monitoring, so by following the previous best practices you comply with all the FIM requirements in this standard.

#4.1 Stick to compliance requirements

As stated before, some compliance standards require you to implement a file integrity monitoring strategy. Make sure to thoroughly study the standard you are implementing and take good note of which files it wants monitored; more often than not, these will be the log files in your system. Any attempt to tamper with these log files is probably an Indicator Removal on Host attack technique.

We already saw how PCI / DSS wants you to monitor the integrity of log files. As another example, NIST 800-53 control SI-07 explicitly tells you to use "integrity verification tools to detect unauthorized changes" on a variety of organization-defined objects, and control AC-02 calls for the monitoring of "create, enable, modify, disable and remove information system accounts in accordance with policy". Other standards like ISO 27001, GDPR, and HIPAA may have different FIM requirements.

It is your responsibility to extract this information from the compliance standard you are implementing and, equipped with this essential data, prepare the asset inventory we mentioned in #1.

#4.2 Run automated benchmarks

Compliance standards are not the only source of information you will need to use. Make sure to select the benchmark framework that fits your industry and follow it; the benefit will be twofold. For example, the CIS Distribution Independent Linux Benchmark states:

You can extract essential information from the controls in this benchmark (or the specific benchmark you are implementing, mind you), so make sure to scan the framework and use it to complement your inventory.

Finally, implement automated benchmarks that periodically scan your infrastructure and create standardized reports, giving you specific and detailed information on each and every deviation from your inventory baseline.

So, in a way, these last two best practices are the first and the last steps you will need to take care of when implementing a successful file integrity monitoring strategy.

Conclusion

File integrity monitoring is key to detecting attackers who compromise containers or gain access to your system.

Remember to:

- Make an inventory of sensitive files.

- Set up detections for modifications, creations, or deletions of those files.

- Efficiently notify on unexpected behavior and enable a forensics investigation.

- Meet compliance standards.

If you follow these best practices, attackers will have a tougher time compromising your infrastructure, and you'll sleep better at night.

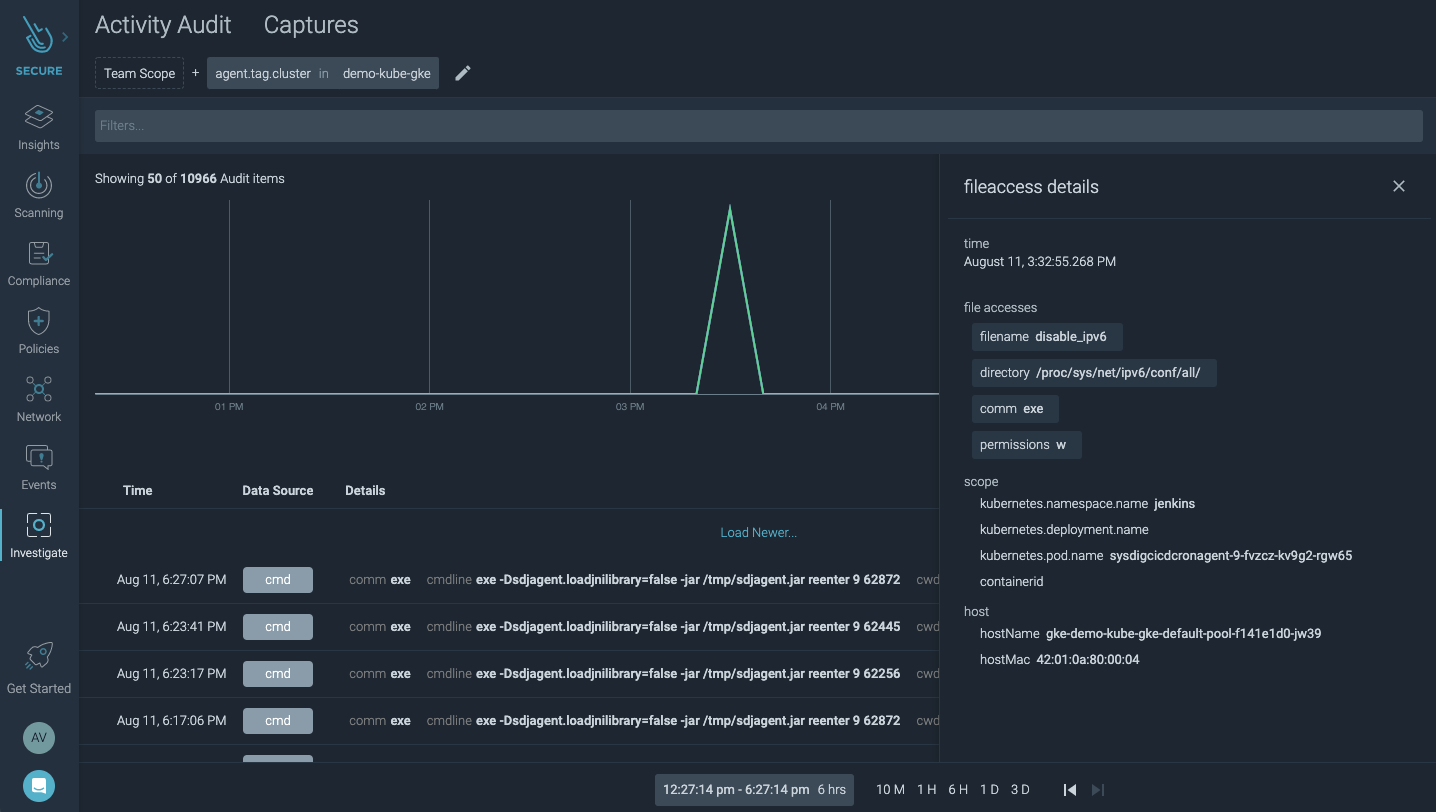

Detecting an attack with Sysdig Secure: Copying malware into a file directory

Let's see file integrity monitoring in action with an example.

We'll go through an attack where a hacker is copying malware to a sensitive file inside a container. Then, we'll see how a robust FIM solution can detect and analyze all activity surrounding this attack, helping you respond effectively.

Suppose a hacker gets access to your container (it could also be a VM or a bare metal host) and starts to copy malware into the /usr/bin directory.

If you were monitoring your host or container at runtime, you would be alerted to this suspicious activity.

Let's go a step further and drill into the activity surrounding this file change. This attack was performed by a user named JohnDoe who kube-exec'ed into a pod and copied malware into a directory that was part of a sensitive Java application namespace.

It's hard to know the details of what's happening inside Kubernetes at any given time, especially since containers are ephemeral. But this audit trail shows exactly how a file was tampered with and who made the change, all within the context of cloud and Kubernetes environments and even after the container is gone.

Sysdig Secure can support you in managing security risk by helping you implement FIM for hosts and containers.

Learn more about Kubernetes Security on our product page, or sign up for a 30-day free Sysdig Secure trial!