
Kubernetes 1.18 is about to be released! After the small release that was 1.17, 1.18 comes in strong and packed with changes. Where do we begin?

There are new features, like the OIDC discovery for the API server and the increased support for Windows nodes, that will have a big impact on the community.

We are also happy to see some features that have lingered in pre-stable stages for too long, like Ingress or the API Server Network Proxy, being reconsidered and prepared for the spotlight.

Additionally, we have to celebrate the 13 features that are graduating to Stable. It's a third of all new changes!

Here is the detailed list of what's new in Kubernetes 1.18.


Kubernetes 1.18 – Editor's pick:

These are the features that look most exciting to us in this release (ymmv):

#1393 OIDC discovery for service account token issuer

Being able to use Kubernetes service account tokens as a general authentication mechanism can change the way we organize our infrastructure. It will allow tighter integration between services, like communication between clusters, and simplify setups by making external authentication services unnecessary.

#1513 CertificateSigningRequest API

The Certificate API is another feature that was meant for core components, but started to be used for third party services. This enhancement is an example of how Kubernetes adapts to the community needs, making it not only easier to provision certificates, but also more secure.

#1441 kubectl debug

This new command will make a huge difference when it comes to debugging issues on running pods. Creating debug containers and redeploying pods with a different configuration are common tasks that will be faster from now on.

#1301 Implement RuntimeClass on Windows

Being able to specify which pods must run on Windows machines will make it easier to mix Windows and Linux workloads in the same cluster, opening a new dimension of possibilities.

#1001 Support CRI-ContainerD on Windows

This is a huge step toward better Kubernetes compatibility on Windows nodes, although we'll have to wait for future releases to see the full benefits. With small upgrades like this, Kubernetes shows it is serious about its Windows support.

Kubernetes 1.18 core

#1393 Provide OIDC discovery for service account token issuer

Stage: Alpha

Feature group: auth

Kubernetes service accounts (KSA) can use tokens (JSON Web Tokens, or JWT) to authenticate against the Kubernetes API, for example with kubectl --token. However, the Kubernetes API is currently the only service that can authenticate those tokens.

As the Kubernetes API server isn't (and shouldn't be) accessible from the public network, some workloads must use a separate system to authenticate. Authenticating across clusters is one example, such as a workload in one cluster calling a service somewhere else.

This enhancement aims to make KSA tokens more useful, allowing services outside the cluster to use them as a general authentication method without overloading the API server. To achieve this, the API server provides an OpenID Connect (OIDC) discovery document that contains the token public keys, among other data. Existing OIDC authenticators can use these keys to validate KSA tokens.

The OIDC discovery can be enabled with the ServiceAccountIssuerDiscovery feature gate, and requires some configuration to make it work.
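Here is a minimal sketch of that configuration. It assumes a kubeadm-style cluster where the API server runs as a static Pod. The issuer URL and key file paths are example values, and only the flags relevant to this feature are shown; check the documentation for the full token projection setup. Once the feature is enabled, the API server serves the discovery document at /.well-known/openid-configuration and the public keys (JWKS) at /openid/v1/jwks. External relying parties must also be allowed to reach those endpoints.

```yaml
# Fragment of a kube-apiserver static Pod manifest (only relevant flags shown).
# Issuer URL and key paths are example values.
spec:
  containers:
  - name: kube-apiserver
    command:
    - kube-apiserver
    - --feature-gates=ServiceAccountIssuerDiscovery=true
    # Issuer ("iss" claim) that relying parties will see in tokens and in the discovery document
    - --service-account-issuer=https://kubernetes.example.com
    # Private key used to sign service account tokens
    - --service-account-signing-key-file=/etc/kubernetes/pki/sa.key
    # Public key used to verify those tokens
    - --service-account-key-file=/etc/kubernetes/pki/sa.pub
```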

#853 Configurable scale velocity for HPA

Stage: Alpha

Feature group: autoscaling

The Horizontal Pod Autoscaler (HPA) can automatically scale the number of pods in a resource, like a Deployment, to match workload demand. Until now, you could only define a global scaling velocity for the whole cluster. However, not all resources are equally critical. You might want to scale your core services up and down faster than less important ones.

Now you can add a behavior section to each HPA configuration:

```yaml
behavior:
  scaleUp:
    policies:
    - type: Percent
      value: 100
      periodSeconds: 15
  scaleDown:
    policies:
    - type: Pods
      value: 4
      periodSeconds: 60
```

In this example, the number of pods can at most double every 15 seconds when scaling up, and at most four pods can be removed every minute when scaling down. Check the full syntax in the documentation.
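The following sketch shows where the behavior section sits in a complete autoscaling/v2beta2 HorizontalPodAutoscaler. The target Deployment name, replica bounds, and CPU target are hypothetical values. The stabilizationWindowSeconds field comes from the same API and is added here only for illustration.

```yaml
apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  name: web                  # hypothetical name
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web                # hypothetical Deployment
  minReplicas: 2
  maxReplicas: 20
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 70
  behavior:
    scaleUp:
      policies:
      - type: Percent        # at most double the pods...
        value: 100
        periodSeconds: 15    # ...every 15 seconds
    scaleDown:
      # Consider the recommendations from the last 5 minutes before scaling down, to avoid flapping
      stabilizationWindowSeconds: 300
      policies:
      - type: Pods           # remove at most 4 pods...
        value: 4
        periodSeconds: 60    # ...every minute
```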

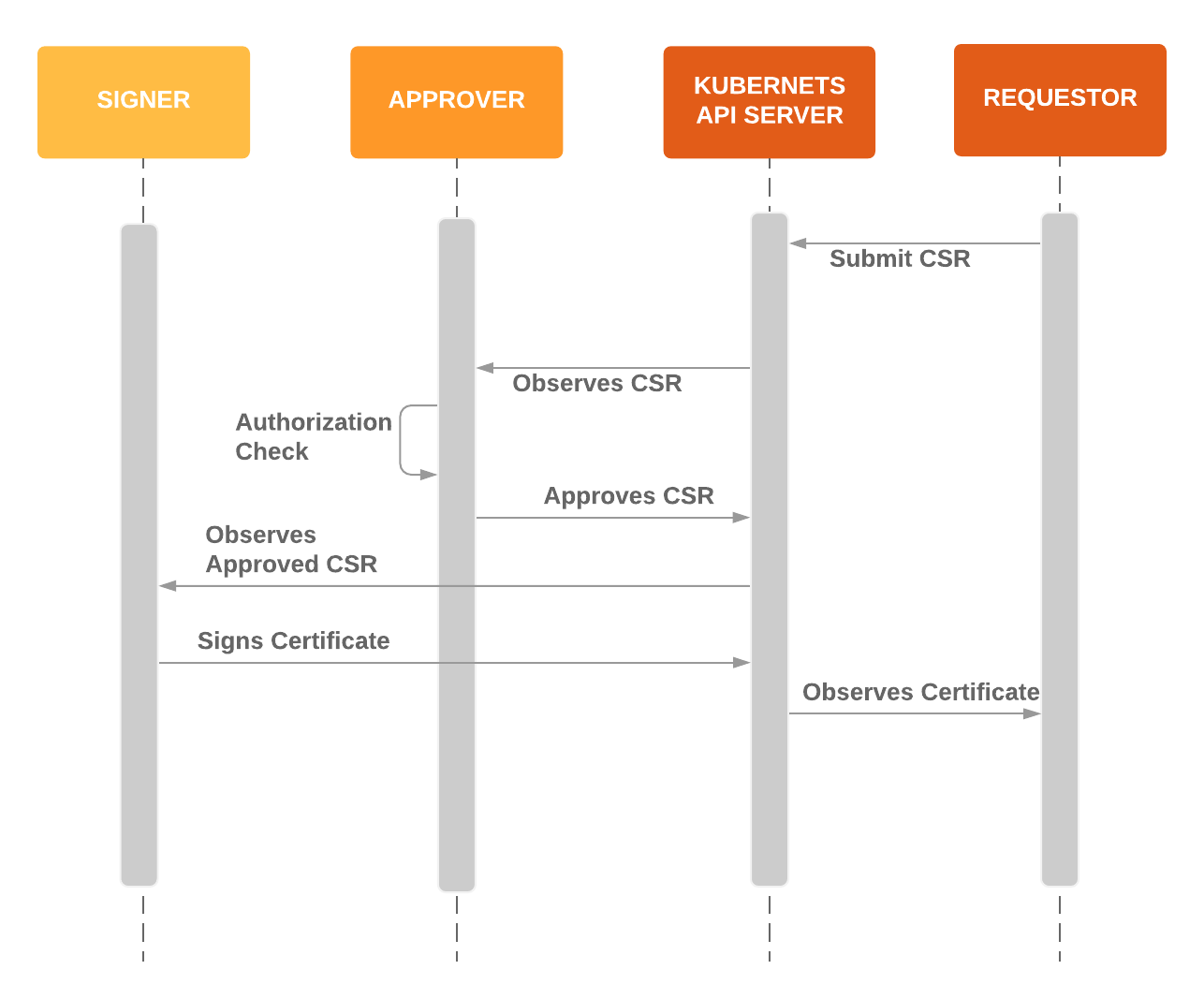

#1513 CertificateSigningRequest API

Stage: Major Change to Beta

Feature group: auth

Each Kubernetes cluster has a root certificate authority that is used to secure communication between core components, whose certificates are handled by the Certificates API. Because this is convenient, the API eventually started to be used to provision certificates for non-core uses.

This enhancement aims to embrace the new situation, improving both the signing process and its security.

A Registration Authority figure, the Approver, ensures that a certificate signing request (CSR) has been submitted by the actual requestor; they also make sure that the requestor has the appropriate permissions to submit that request.
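Here is a minimal sketch of a CSR that uses the signerName field in certificates.k8s.io/v1beta1, which was added as part of this enhancement. The object name is hypothetical. The request value is a placeholder for your base64-encoded PEM certificate request and is intentionally left unfilled.

```yaml
apiVersion: certificates.k8s.io/v1beta1
kind: CertificateSigningRequest
metadata:
  name: my-client-csr                        # hypothetical name
spec:
  # Which signer should handle this request
  signerName: kubernetes.io/kube-apiserver-client
  # Placeholder: base64-encoded PEM certificate request
  request: <base64-encoded PEM CSR>
  usages:
  - digital signature
  - key encipherment
  - client auth
```

Once it is created, an approver can approve it with kubectl certificate approve my-client-csr, and the signer then issues the certificate.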

Scheduling

#1451 Run multiple Scheduling Profiles

Stage: Alpha

Feature group: scheduling

Not all of the workloads inside a Kubernetes cluster are the same. You most likely want to spread your web servers across as many nodes as possible, but you may want to bundle as many latency-sensitive resources as possible on the same node. This is why you can configure multiple schedulers inside the same cluster and specify which scheduler each pod should use.

However, this may lead to race conditions, as each scheduler can have a different view of the cluster at a given moment.

This enhancement allows you to run one scheduler with different configurations, or profiles, each with its own schedulerName. Pods will keep using schedulerName to define what profile to use, but it will be the same scheduler doing the work, avoiding those race conditions.
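Below is a sketch of a scheduler configuration with two profiles, using the kubescheduler.config.k8s.io/v1alpha2 component config version from 1.18. It is followed by a pod that selects the second profile. The profile, pod, and image names are illustrative. The file is assumed to be passed to kube-scheduler with its --config flag.

```yaml
apiVersion: kubescheduler.config.k8s.io/v1alpha2
kind: KubeSchedulerConfiguration
profiles:
# Default profile, used by pods with schedulerName: default-scheduler (or none)
- schedulerName: default-scheduler
# Second profile served by the same scheduler process, with all scoring plugins disabled
- schedulerName: no-scoring-scheduler
  plugins:
    preScore:
      disabled:
      - name: '*'
    score:
      disabled:
      - name: '*'
---
apiVersion: v1
kind: Pod
metadata:
  name: batch-pod                      # hypothetical name
spec:
  # Selects the profile, not a separate scheduler binary
  schedulerName: no-scoring-scheduler
  containers:
  - name: app
    image: nginx
```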

#895 Even pod spreading across failure domains

Stage: Graduating to Beta

Feature group: scheduling

With topologySpreadConstraints, you can define rules to distribute your pods evenly across your multi-zone cluster, improving both high availability and resource utilization.
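Here is a minimal sketch of a pod that asks to be spread evenly across zones together with the other pods carrying the same label. The names and label values are illustrative.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: web-1
  labels:
    app: web
spec:
  topologySpreadConstraints:
  - maxSkew: 1                                # at most 1 pod difference between any two zones
    topologyKey: topology.kubernetes.io/zone  # node label that defines the domains
    whenUnsatisfiable: DoNotSchedule          # ScheduleAnyway would only prefer balance
    labelSelector:
      matchLabels:
        app: web                              # pods counted when computing the skew
  containers:
  - name: web
    image: nginx
```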

Read more in the release for 1.16 of the What's new in Kubernetes series.

#166 Taint Based Eviction

Stage: Graduating to Stable

Feature group: scheduling

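Taint based evictions moved from alpha to beta in Kubernetes 1.13 and are now graduating to Stable. With this feature (previously controlled by the TaintBasedEvictions feature gate), the node controller (or the kubelet) automatically adds taints to nodes with problems, like node.kubernetes.io/not-ready or node.kubernetes.io/unreachable, and pods are evicted according to their tolerations. This replaces the former logic for evicting pods from nodes based on the Ready NodeCondition.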
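With taint based evictions, you control per pod how long it stays bound to a failing node, through tolerations. By default, the DefaultTolerationSeconds admission plugin gives pods a 300-second toleration for node.kubernetes.io/not-ready and node.kubernetes.io/unreachable. This sketch extends that to 10 minutes for a hypothetical pod that is expensive to reschedule.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: stateful-app             # hypothetical name
spec:
  tolerations:
  # Stay bound for 10 minutes after the node becomes unreachable
  - key: node.kubernetes.io/unreachable
    operator: Exists
    effect: NoExecute
    tolerationSeconds: 600
  # Same for a node reporting NotReady
  - key: node.kubernetes.io/not-ready
    operator: Exists
    effect: NoExecute
    tolerationSeconds: 600
  containers:
  - name: app
    image: nginx
```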

Read more in the release for 1.13 of the What's new in Kubernetes series.

Nodes

#1539 Extending Hugepage Feature

Stage: Major Change to Stable

Feature group: node

HugePages are a mechanism to reserve big blocks of memory with predefined sizes that are faster to access thanks to hardware optimizations. This is especially useful for applications that handle big data sets in memory or ones that are sensitive to memory access latency, like databases or virtual machines.

In Kubernetes 1.18 two enhancements are added to this feature.

First, the pods are now allowed to request HugePages of different sizes.

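```yaml
apiVersion: v1
kind: Pod
…
spec:
  containers:
  …
    resources:
      # HugePages can't be overcommitted: set them as limits (requests
      # default to the same values), together with cpu or memory.
      limits:
        hugepages-2Mi: 1Gi
        hugepages-1Gi: 2Gi
        memory: 100Mi    # example value
```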

And second, container isolation of HugePages has been put in place to solve an issue where a pod could use more memory than requested, ending up in resource starvation.

#688 Pod Overhead: account resources tied to the pod sandbox, but not specific containers

Stage: Graduating to Beta

Feature group: node

In addition to the requested resources, your pods need extra resources to maintain their runtime environment.

With the PodOverhead feature gate enabled, Kubernetes takes this overhead into account when scheduling a pod. The Pod Overhead is associated with the pod's RuntimeClass, and it is calculated and fixed at admission time. Get the full details in the Kubernetes documentation.
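For example, a RuntimeClass for a VM-based runtime, such as Kata Containers, could declare a fixed per-pod overhead as in this sketch. The handler name must match a runtime configured in the node's CRI implementation, and the amounts are illustrative. Pods that set runtimeClassName: kata-fc have this overhead added to their container requests when they are scheduled.

```yaml
apiVersion: node.k8s.io/v1beta1
kind: RuntimeClass
metadata:
  name: kata-fc
# Must match a runtime handler configured on the nodes (assumption)
handler: kata-fc
overhead:
  # Resources consumed by the pod sandbox itself, on top of the containers
  podFixed:
    memory: "120Mi"
    cpu: "250m"
```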

Read more in the release for 1.16 of the What's new in Kubernetes series.

#693 Node Topology Manager

Stage: Graduating to Beta

Feature group: node

Machine learning, scientific computing, and financial services are examples of systems that are computationally intensive and require ultra-low latency. These kinds of workloads benefit from having their processes pinned to a single CPU core rather than jumping between cores or sharing time with other processes.

The node topology manager is a kubelet component that centralizes the coordination of hardware resource assignments. The current approach divides this task between several components (CPU manager, device manager, CNI), which sometimes results in unoptimized allocations.
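Below is a minimal kubelet configuration sketch for a node dedicated to this kind of workload, with illustrative values. Exclusive CPU pinning requires the static CPU manager policy, which in turn needs some CPU reserved for system components. It only applies to Guaranteed pods with integer CPU requests. single-numa-node is the strictest Topology Manager policy; the others are none, best-effort, and restricted.

```yaml
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
# Give Guaranteed pods with integer CPU requests exclusive cores
cpuManagerPolicy: static
# The static policy needs a non-zero CPU reservation
kubeReserved:
  cpu: "1"
# Only admit pods whose CPUs and devices can be aligned on one NUMA node
topologyManagerPolicy: single-numa-node
```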

Read more in the release for 1.16 of the What's new in Kubernetes series.

#950 Add pod-startup liveness-probe holdoff for slow-starting pods

Stage: Graduating to Beta

Feature group: node

Probes allow Kubernetes to monitor the status of your applications. If a pod takes too long to start, those probes might consider it dead, causing a restart loop. This feature lets you define a startupProbe that holds off all the other probes until the pod finishes starting up. For example: "Don't test for liveness until a given HTTP endpoint is available".
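In this sketch, the startupProbe gives the application up to failureThreshold times periodSeconds (30 times 10 = 300 seconds) to start. The liveness probe only takes over once the startup probe has succeeded. The image, port, and path are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: slow-app
spec:
  containers:
  - name: app
    image: registry.example.com/slow-app:1.0   # hypothetical image
    ports:
    - containerPort: 8080
    # Up to 30 failed checks, 10 seconds apart, before the container is restarted
    startupProbe:
      httpGet:
        path: /healthz
        port: 8080
      failureThreshold: 30
      periodSeconds: 10
    # Disabled until the startupProbe succeeds
    livenessProbe:
      httpGet:
        path: /healthz
        port: 8080
      periodSeconds: 10
```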

Read more in the release for 1.16 of the What's new in Kubernetes series.

Network

#752 EndpointSlice API

Stage: Major Change to Beta

Feature group: network

The new EndpointSlice API splits endpoints into several EndpointSlice resources. This solves many problems in the current API related to large Endpoints objects, which have to be sent in full to every watcher whenever a single endpoint changes. This new API is also designed to support other future features, like multiple IPs per pod.
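For reference, this sketch shows roughly what an EndpointSlice managed by the EndpointSlice controller looks like in the discovery.k8s.io/v1beta1 API. The names, addresses, and topology values are illustrative. By default, the controller caps each slice at 100 endpoints.

```yaml
apiVersion: discovery.k8s.io/v1beta1
kind: EndpointSlice
metadata:
  name: example-abc
  labels:
    # Ties this slice to the Service it belongs to
    kubernetes.io/service-name: example
addressType: IPv4
ports:
- name: http
  protocol: TCP
  port: 80
endpoints:
- addresses:
  - "10.1.2.3"
  conditions:
    ready: true
  hostname: pod-1
  topology:
    kubernetes.io/hostname: node-1
    topology.kubernetes.io/zone: us-west2-a
```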

Read more in the release for 1.16 of the What's new in Kubernetes series.

#508 IPv6 support added

Stage: Graduating to Beta

Feature group: network

Support for IPv6-only clusters was introduced as alpha a long time ago, in Kubernetes 1.9. This feature has been tested widely by the community and is now graduating to Beta.

#1024 Graduate NodeLocal DNSCache to GA

Stage: Graduating to Stable

Feature group: network

NodeLocal DNSCache improves cluster DNS performance by running a DNS caching agent on cluster nodes as a DaemonSet. Pods query the agent on their own node, which avoids iptables DNAT rules and connection tracking. On cache misses for cluster hostnames (cluster.local suffix by default), the local caching agent queries the kube-dns service.

You can learn more about this feature by reading the design notes in its Kubernetes Enhancement Proposal (KEP) document.

Read more in the release for 1.15 of the What's new in Kubernetes series.

#1453 Graduate Ingress to V1

Stage: Graduating to Beta

Feature group: network

An Ingress resource exposes HTTP and HTTPS routes from outside the cluster to services within the cluster.

This API object has been around since Kubernetes 1.1 and, despite its beta status, became a de facto stable feature. This enhancement removes inconsistencies between Ingress implementations and starts its way toward general availability.

For example, you can now define a pathType to explicitly state if a path should be treated as a Prefix or an Exact match. If several paths within an Ingress match a request, the longest matching path takes precedence.

Also, the kubernetes.io/ingress.class annotation has been deprecated. Now the new ingressClassName field and the IngressClass resource should be used.
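Here is a sketch that combines both changes, using the networking.k8s.io/v1beta1 API shipped with 1.18. The class name, controller value, host, and backend services are hypothetical.

```yaml
apiVersion: networking.k8s.io/v1beta1
kind: IngressClass
metadata:
  name: external-lb
spec:
  # Identifies the controller implementing this class (example value)
  controller: example.com/ingress-controller
---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: example-ingress
spec:
  # Replaces the kubernetes.io/ingress.class annotation
  ingressClassName: external-lb
  rules:
  - host: www.example.com
    http:
      paths:
      # Matches /api and /api/..., but not /apis
      - path: /api
        pathType: Prefix
        backend:
          serviceName: api-service
          servicePort: 80
      # Matches /login only
      - path: /login
        pathType: Exact
        backend:
          serviceName: auth-service
          servicePort: 80
```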

#1507 Adding AppProtocol to Services and Endpoints

Stage: Graduating to Stable

Feature group: network

The EndpointSlice API added a new AppProtocol field in Kubernetes 1.17 to allow application protocols to be specified for each port. This enhancement brings that field into the ServicePort and EndpointPort resources, replacing non-standard annotations that are causing a bad user experience.
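As an illustration, this is how a Service port could declare its application protocol instead of relying on an annotation. The names are hypothetical. Depending on your cluster version, the field may require the ServiceAppProtocol feature gate.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service               # hypothetical name
spec:
  selector:
    app: my-app
  ports:
  - name: web
    protocol: TCP                # transport protocol, as before
    port: 443
    targetPort: 8443
    # Application protocol hint for ingress controllers, service meshes, etc.
    appProtocol: https
```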

Kubernetes 1.18 API

#1040 Priority and Fairness for API Server Requests

Stage: Alpha

Feature group: api-machinery

During high loads, the Kubernetes API server needs to remain responsive to admin and maintenance tasks. The existing --max-requests-inflight and --max-mutating-requests-inflight command-line flags can limit the incoming requests, but they are too coarse-grained and can filter out important requests during high-traffic periods.

The APIPriorityAndFairness feature gate enables a new max-in-flight request handler in the API server. Then, you can define different types of requests with FlowSchema objects and assign each type a share of the server's concurrency with RequestPriority objects (the name used in the enhancement proposal, whose syntax the examples below follow).

For example, requests from the garbage collector can be classified as low priority:

```yaml
kind: FlowSchema
meta:
  name: system-low
spec:
  matchingPriority: 900
  requestPriority:
    name: system-low
  flowDistinguisher:
    source: user
  match:
  - and:
    - equals:
        field: user
        value: system:controller:garbage-collector
```

So you can assign very few resources to it:

```yaml
kind: RequestPriority
meta:
  name: system-low
spec:
  assuredConcurrencyShares: 30
  queues: 1
  queueLengthLimit: 1000
```

However, self-maintenance requests have higher priority:

```yaml
kind: RequestPriority
meta:
  name: system-high
spec:
  assuredConcurrencyShares: 100
  queues: 128
  handSize: 6
  queueLengthLimit: 100
```

You can find more examples in the enhancement proposal.
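The API that ships in 1.18, flowcontrol.apiserver.k8s.io/v1alpha1, calls RequestPriority PriorityLevelConfiguration, and several field names differ. Here is a sketch of the low-priority garbage collector example translated to that API as we understand it. It assumes the APIPriorityAndFairness feature gate is on and the API group is enabled, for example with --runtime-config=flowcontrol.apiserver.k8s.io/v1alpha1=true. Double-check the field names against the API reference for your version.

```yaml
apiVersion: flowcontrol.apiserver.k8s.io/v1alpha1
kind: FlowSchema
metadata:
  name: system-low
spec:
  priorityLevelConfiguration:
    name: system-low            # priority level defined below
  matchingPrecedence: 900       # lower values are evaluated first
  distinguisherMethod:
    type: ByUser                # separate flows per user
  rules:
  - subjects:
    - kind: User
      user:
        name: system:controller:garbage-collector
    resourceRules:              # match any resource request from that user
    - verbs: ["*"]
      apiGroups: ["*"]
      resources: ["*"]
      clusterScope: true
      namespaces: ["*"]
---
apiVersion: flowcontrol.apiserver.k8s.io/v1alpha1
kind: PriorityLevelConfiguration
metadata:
  name: system-low
spec:
  type: Limited
  limited:
    assuredConcurrencyShares: 30
    limitResponse:
      type: Queue               # queue excess requests instead of rejecting them
      queuing:
        queues: 1
        handSize: 1             # must not exceed queues
        queueLengthLimit: 1000
```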

#1601 client-go signature refactor to standardize options and context handling

Stage: Major Change to Stable

Feature group: api-machinery

Several code refactors have been done on client-go, the library that many core binaries use to connect to the Kubernetes API, to make method signatures consistent.

This includes adding a context.Context argument to the generated client methods. A context carries request-scoped values across API boundaries and between processes, which eases the implementation of several features, like releasing callers after timeouts and cancellations, or adding support for distributed tracing.

#576 APIServer DryRun

Stage: Graduating to Stable

Feature group: api-machinery

Dry-run mode lets you emulate a real API request and see if the request would have succeeded (admission controllers chain, validation, merge conflicts, …) and/or what would have happened without actually modifying the state. The response body for the request is supposed to be as close as possible to a non dry-run response. This core feature will enable other user level features like the kubectl diff subcommand.

Read more in the release for 1.13 of the What's new in Kubernetes series.

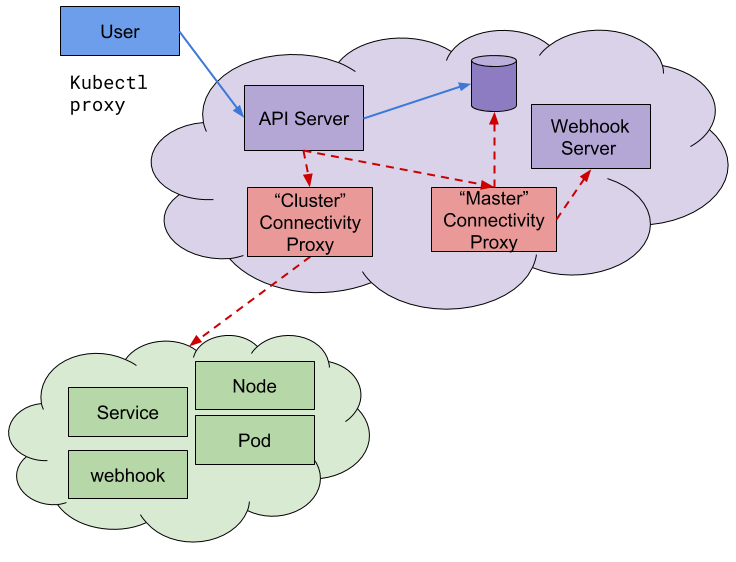

#1281 API Server Network Proxy KEP to Beta

Stage: Graduating to Beta

Feature group: api-machinery

Some people (mostly Cloud Providers) prefer to isolate their cluster API server in a separate control network rather than the cluster network. One way to achieve this, while maintaining connectivity with the rest of the cluster components, is to use the Kubernetes API server network proxy.

Having this extra layer can enable other features like metadata audit logging and validation of outgoing API server connections.

This enhancement covers the work to fix some issues and prepare this proxy for general availability, like removing the SSH Tunnel code from the Kubernetes API server and improving the isolation of the control network from the cluster network.

Windows support in Kubernetes 1.18

#1001 Support CRI-ContainerD on Windows

Stage: Alpha

Feature group: windows

ContainerD is an OCI-compliant runtime that works with Kubernetes. Unlike Docker, ContainerD includes support for the Host Compute Service (HCS v2) in Windows Server 2019, which offers more control over how containers are managed and can improve some Kubernetes API compatibility.

This enhancement introduces ContainerD 1.3 support on Windows as a Container Runtime Interface (CRI) implementation. Find more details on the enhancement page.

#1301 Implement RuntimeClass on Windows

Stage: Alpha

Feature group: windows

Using RuntimeClass, you can define the different types of nodes that exist in your cluster, then use runtimeClassName to specify on which nodes a pod can be deployed. This feature was introduced in Kubernetes 1.12 and had major changes in Kubernetes 1.14.

This enhancement expands this feature to Windows nodes, which is really helpful in heterogeneous clusters to instruct Windows pods to be deployed only on Windows nodes. This is how you define a RuntimeClass to restrict pods to Windows Server version 1903 (10.0.18362).

```yaml
apiVersion: node.k8s.io/v1beta1
kind: RuntimeClass
metadata:
  name: windows-1903
handler: 'docker'
scheduling:
  nodeSelector:
    kubernetes.io/os: 'windows'
    kubernetes.io/arch: 'amd64'
    node.kubernetes.io/windows-build: '10.0.18362'
  tolerations:
  - effect: NoSchedule
    key: windows
    operator: Equal
    value: "true"
```

You would then use runtimeClassName in your pods, like this:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod
spec:
  runtimeClassName: windows-1903
  # ...
```

Check the enhancement page for further details, and to learn how this feature interacts with #1001 Support CRI-ContainerD and Hyper-V.

#689 Support GMSA for Windows workloads

Stage: Graduating to Stable

Feature group: windows

A Group Managed Service Account (GMSA) is a type of Active Directory account with automatic password management. This feature allows an operator to choose a GMSA at deployment time and run containers with it to connect to existing applications, such as a database or API server, without changing how authentication and authorization are managed inside the organization.
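Here is a sketch of a pod that runs with a GMSA. It assumes a GMSACredentialSpec resource named webapp1-credspec already exists and that the pod's service account is authorized to use it; both are hypothetical here. The image is an example.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: iis-gmsa
spec:
  securityContext:
    windowsOptions:
      # Name of a GMSACredentialSpec resource created beforehand (hypothetical)
      gmsaCredentialSpecName: webapp1-credspec
  nodeSelector:
    kubernetes.io/os: windows
  containers:
  - name: iis
    image: mcr.microsoft.com/windows/servercore/iis:windowsservercore-ltsc2019
```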

Read more in the release for 1.14 of the What's new in Kubernetes series.

#1043 RunAsUserName for Windows

Stage: Graduating to Stable

Feature group: windows

Now that Kubernetes supports Group Managed Service Accounts, we can use the Windows-specific runAsUserName property to define which user runs a container's entrypoint.
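Here is a sketch showing the property set at both levels: the container-level value overrides the pod-level default. The user names are the built-in Windows container accounts, and the image and command are illustrative.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: run-as-username-demo
spec:
  securityContext:
    windowsOptions:
      # Default user for every container in the pod
      runAsUserName: "ContainerUser"
  containers:
  - name: app
    image: mcr.microsoft.com/windows/servercore:ltsc2019
    command: ["ping", "-t", "localhost"]
    securityContext:
      windowsOptions:
        # Overrides the pod-level setting for this container only
        runAsUserName: "ContainerAdministrator"
  nodeSelector:
    kubernetes.io/os: windows
```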

Read more in the release for 1.16 of the What's new in Kubernetes series.

#995 Kubeadm for Windows

Stage: Graduating to Beta

Feature group: cluster-lifecycle

Support for Windows nodes was introduced in Kubernetes 1.14, but there wasn't an easy way to join Windows nodes to a cluster.

Since Kubernetes 1.16, kubeadm join is available for Windows users with partial functionality. It still lacks some features, like kubeadm init or kubeadm join --control-plane.

Read more in the release for 1.16 of the What's new in Kubernetes series.

Storage

#695 Skip Volume Ownership Change

Stage: Alpha

Feature group: storage

Before a volume is bind-mounted inside a container, the ownership and permissions of all its files are recursively changed to match the provided fsGroup value. This is a slow process on very large volumes, and it also breaks some permission-sensitive applications, like databases.

The new fsGroupChangePolicy field in the pod securityContext controls this behavior. If set to Always, it keeps the current behavior. When set to OnRootMismatch, it only changes the volume permissions if the top-level directory does not match the expected fsGroup value.
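Here is a sketch of a pod that opts into the new policy. It assumes the ConfigurableFSGroupPolicy feature gate (alpha in 1.18) is enabled. The image and claim names are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: database
spec:
  securityContext:
    runAsUser: 1000
    fsGroup: 2000
    # Skip the recursive ownership change if the volume root already matches fsGroup
    fsGroupChangePolicy: "OnRootMismatch"
  containers:
  - name: db
    image: registry.example.com/db:1.0    # hypothetical image
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: db-data                  # hypothetical PVC
```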

#1412 Immutable Secrets and ConfigMaps

Stage: Alpha

Feature group: storage

A new immutable field has been added to Secrets and ConfigMaps. When set to true, any change to the resource's data will be rejected. This protects the cluster from accidental bad updates that would break applications.

Immutable resources bring a secondary benefit: since they don't change, the kubelet doesn't need to periodically check them for updates, which can improve scalability and performance.

After enabling the ImmutableEphemeralVolumes feature gate, you can do:

```yaml
apiVersion: v1
kind: Secret
metadata:
  …
data:
  …
immutable: true
```

However, once a resource is marked as immutable, it is impossible to revert this change. You can only delete and recreate the Secret, and you need to recreate the Pods that use the deleted Secret.

#1495 Generic data populators

Stage: Alpha

Feature group: storage

This enhancement establishes the foundations that will allow users to create pre-populated volumes. For example, pre-populating a disk for a virtual machine with an OS image, or enabling data backup and restore.

To accomplish this, the current validations on the dataSource field of PersistentVolumeClaims will be lifted, allowing it to reference arbitrary objects. Implementation details on how to populate volumes are delegated to purpose-built controllers.
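Here is a sketch of what this enables. It assumes the AnyVolumeDataSource feature gate is enabled and a populator controller is installed for a hypothetical VolumeImage custom resource in the populator.example.com API group. Without such a controller, the claim is expected to stay Pending.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: vm-disk
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  dataSource:
    # Hypothetical custom resource, handled by a purpose-built populator controller
    apiGroup: populator.example.com
    kind: VolumeImage
    name: ubuntu-base
```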

#770 Skip attach for non-attachable CSI volumes

Stage: Graduating to Stable

Feature group: storage

This internal optimization skips the creation of VolumeAttachment objects for Container Storage Interface (CSI) drivers that don't require attach/detach operations, like NFS or ephemeral secrets-like volumes.

Previously, the Kubernetes Attach/Detach controller always created VolumeAttachment objects for those drivers and waited until they were reported as "attached". Changes have been made to the CSI volume plugin to skip this step.
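Drivers opt into this through their CSIDriver object. Here is a minimal sketch; the driver name is hypothetical and must match the name the driver itself reports.

```yaml
apiVersion: storage.k8s.io/v1beta1
kind: CSIDriver
metadata:
  name: nfs.csi.example.com      # hypothetical driver name
spec:
  # No VolumeAttachment objects are created for this driver's volumes
  attachRequired: false
```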

#351 Raw block device using persistent volume source

Stage: Graduating to Stable

Feature group: storage

BlockVolume reaches general availability in Kubernetes 1.18. You can access a raw block device just by setting volumeMode to Block. The ability to use a raw block device without a filesystem abstraction allows Kubernetes to provide better support for applications that need high I/O performance and low latency, like databases.
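Here is a minimal sketch of a claim for a raw block volume and a pod that consumes it. The names, size, and device path are illustrative, and the storage backend must support block mode.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: block-pvc
spec:
  accessModes:
  - ReadWriteOnce
  volumeMode: Block            # no filesystem is created on the volume
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: block-consumer
spec:
  containers:
  - name: app
    image: busybox
    command: ["sleep", "3600"]
    # volumeDevices (instead of volumeMounts) exposes the raw device at devicePath
    volumeDevices:
    - name: data
      devicePath: /dev/xvda
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: block-pvc
```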

Read more in the release for 1.13 of the What's new in Kubernetes series.

#565 CSI Block storage support

Stage: Graduating to Stable

Feature group: storage

The ability to use a raw block device without a filesystem abstraction allows Kubernetes to provide better support for applications that need high I/O performance and low latency, like databases.

Read more in the release for 1.13 of the What's new in Kubernetes series.

#603 Pass Pod information in CSI calls

Stage: Graduating to Stable

Feature group: storage

CSI out-of-tree storage drivers may opt in to receive information about the Pod that requested a volume, such as the Pod name and namespace, in NodePublishVolume requests.

The CSI driver can use this information to authorize or audit usage of a volume, or generate content of the volume tailored to the pod.
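The opt-in is again a field of the CSIDriver object. With it enabled, the kubelet adds keys such as csi.storage.k8s.io/pod.name, csi.storage.k8s.io/pod.namespace, csi.storage.k8s.io/pod.uid, and csi.storage.k8s.io/serviceAccount.name to the volume context. Here is a sketch with a hypothetical driver name.

```yaml
apiVersion: storage.k8s.io/v1beta1
kind: CSIDriver
metadata:
  name: secrets.csi.example.com   # hypothetical driver name
spec:
  # Ask the kubelet to pass pod information in NodePublishVolume calls
  podInfoOnMount: true
```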

Read more in the release for 1.14 of the What's new in Kubernetes series.

#989 Extend allowed PVC DataSources

Stage: Graduating to Stable

Feature group: storage

Using this feature, you can "clone" an existing persistent volume. A Clone results in a new, duplicate volume being provisioned from an existing volume.
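Here is a sketch of a clone request; the names, namespace, size, and storage class are hypothetical. The source claim must be in the same namespace, and both claims must use the same storage class, backed by a CSI driver that supports cloning. The requested size must be at least that of the source.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: clone-of-pvc-1
  namespace: myns
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: cloning      # hypothetical; same class as the source PVC
  resources:
    requests:
      storage: 5Gi
  dataSource:
    # The existing claim to clone
    kind: PersistentVolumeClaim
    name: pvc-1
```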

Read more in the 1.15 release of the What's new in Kubernetes series.

Other Kubernetes 1.18 features

#1441 kubectl debug

Stage: Alpha

Feature group: cli

A new kubectl debug command has been added to extend debugging capabilities.

This command aims to let you create ephemeral containers in a running pod, restart pods with a modified PodSpec, and start and attach to a privileged container in the host namespaces. In 1.18, it is available as kubectl alpha debug.

#1020 Moving kubectl package code to staging

Stage: Major Change to Stable

Feature group: cli

This internal restructuring of the kubectl code is the first step to move the kubectl binary into its own repository. It helps decouple kubectl from the Kubernetes code base and makes it easier for out-of-tree projects to reuse its code.

#1333 Enable running conformance tests without beta REST APIs or features

Stage: Beta

Feature group: architecture

This enhancement collects the work done to ensure that neither the Kubernetes components nor the Kubernetes conformance tests depend on beta REST APIs or features. The end goal is to ensure consistency across distributions, as non-official distributions like k3s, Rancher, or OpenShift shouldn't be required to enable non-GA features.

#491 Kubectl Diff

Stage: Graduating to Stable

Feature group: cli

kubectl diff gives you a preview of the changes kubectl apply would make to your cluster. This feature, while simple to describe, is really handy for the everyday work of a cluster operator. Note that this command relies on the API server's dry-run feature.

Read more in the release for 1.13 of the What's new in Kubernetes series.

#670 Support Out-of-Tree vSphere Cloud Provider

Stage: Graduating to Stable

Feature group: cloud-provider

Build support for the out-of-tree vSphere cloud provider. This involves a well-tested version of the cloud-controller-manager that has feature parity to the kube-controller-manager.

This feature mostly captures work already completed in the Cloud Provider vSphere repository.

Read more in the release for 1.14 of the What's new in Kubernetes series.

That's all, folks! Exciting as always; get ready to upgrade your clusters if you are intending to use any of these features.

If you liked this, you might want to check out our previous What's new in Kubernetes editions:

- What's new in Kubernetes 1.17

- What's new in Kubernetes 1.16

- What's new in Kubernetes 1.15

- What's new in Kubernetes 1.14

- What's new in Kubernetes 1.13

- What's new in Kubernetes 1.12

And if you enjoy keeping up to date with the Kubernetes ecosystem, subscribe to our container newsletter, a monthly email with the coolest stuff happening in the cloud-native ecosystem.