What Is K3s?

If you want something that uses fewer resources and has fewer moving parts than a full Kubernetes distribution, you’ll want to look into the K3s project, which was created by the folks at Rancher Labs (now part of SUSE). K3s is now a Cloud Native Computing Foundation (CNCF) project and a CNCF-certified Kubernetes distribution, which means it passes the same conformance tests as every other certified distribution, so configurations built for Kubernetes should just work.

You might be interested to know that the name K3s is a play on K8s, the popular shortened form of Kubernetes, in which the 8 stands for the eight letters between the K and the s. Kubernetes has 10 letters, so a word "half as big" would have 5 letters. Abbreviated the same way, a five-letter word keeps its first and last letters and replaces the 3 letters between them with the number 3, which gives K3s and hints at its trimmed requirements.
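The same rule fits in a tiny shell function, purely for illustration; `numeronym` is a hypothetical helper, not part of any K3s or Kubernetes tooling. It keeps the first and last letters and replaces everything in between with a count, so a 10-letter word yields 8 and a 5-letter word yields 3.

```shell
# Hypothetical helper: build a numeronym (first letter, count of middle
# letters, last letter), the scheme that turns "Kubernetes" into "K8s".
numeronym() {
  local word="$1"
  local len=${#word}
  # Words of three letters or fewer would not get any shorter.
  if [ "$len" -le 3 ]; then
    printf '%s\n' "$word"
    return 0
  fi
  # POSIX parameter expansion: keep only the first / last character.
  local first="${word%"${word#?}"}"
  local last="${word#"${word%?}"}"
  printf '%s%d%s\n' "$first" "$((len - 2))" "$last"
}

numeronym Kubernetes   # prints K8s (10 letters, 8 in the middle)
numeronym Kubes        # prints K3s (a made-up 5-letter word, 3 in the middle)
```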

K3s vs. K8s Architecture

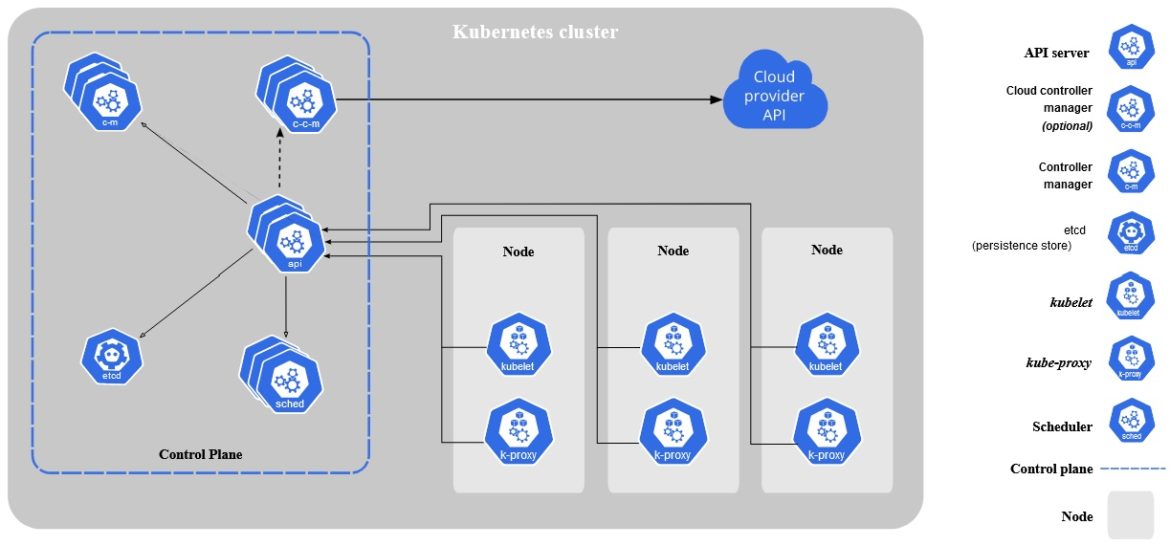

Full Kubernetes Architecture

Just to refresh your memory, the Kubernetes architectural model has six main components (each of which is a distinct process).

The four Kubernetes components that run on control plane nodes are:

- API Server: Exposes the Kubernetes API and handles all communication between components.

- Controller Manager: Runs the controllers that continuously work to bring the cluster’s actual state in line with its desired state.

- Scheduler: Looks for newly created pods that don’t have a home and finds them one.

- Cloud Controller Manager (Optional): Interacts with the underlying cloud provider for things like node lifecycle, network routes, and load balancer configuration.

A key/value store is also required to hold the configuration and state data that the cluster uses to manage itself. The most commonly used store for this purpose is etcd, which usually runs on the control plane nodes.

The worker nodes have two components:

- Kubelet: Manages the life cycle of the containers running on its node.

- Kube-proxy: Maintains the network rules on each node that route traffic to Services and their pods, whether it comes from inside or outside the cluster.

Full Kubernetes Architecture (source: https://kubernetes.io/docs/concepts/overview/components/)

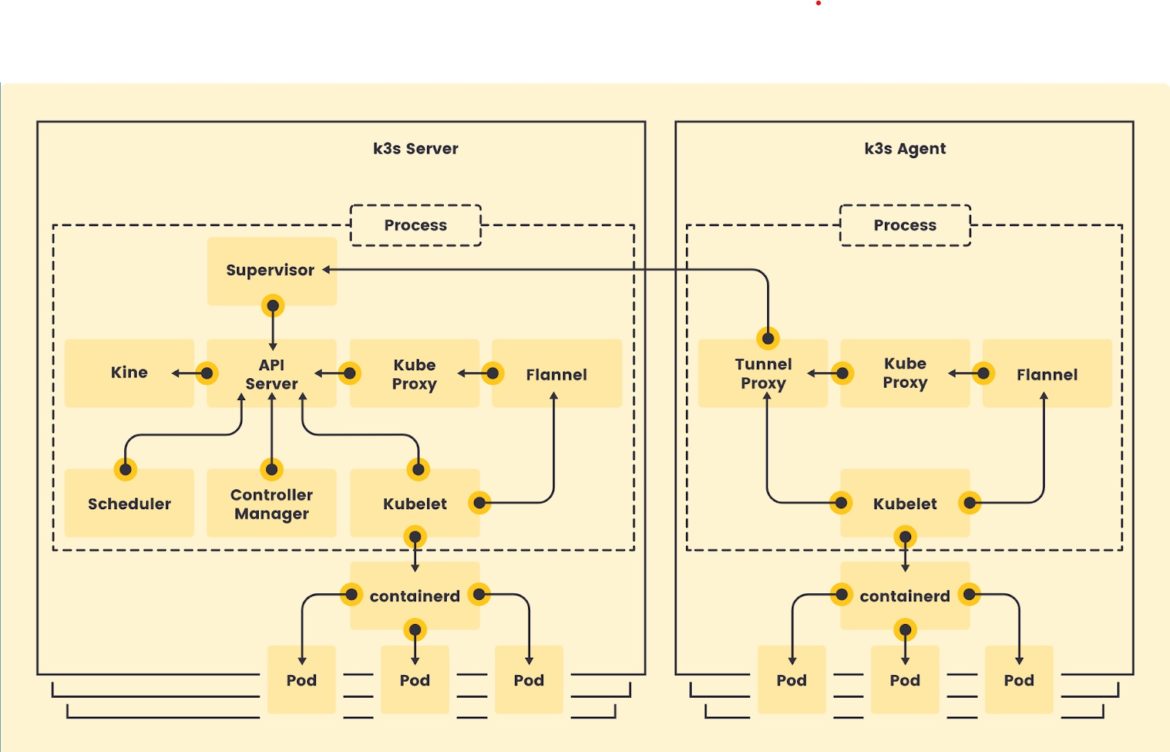

K3s Architecture

The K3s approach, then, is to deliver a Kubernetes distribution that strips out everything that’s optional, including legacy, alpha, and non-default features. While this is the point where some lightweight distributions stop streamlining, K3s goes one step further and packages the remaining functionality into a single custom binary that runs in one of two modes: as a server, which hosts the control plane components, or as an agent, which hosts the worker node components.

To simplify the deployment and operational model even more, the binary also bundles a CNI plugin for networking (Flannel) and an embedded data store (SQLite by default). In clusters with a single server node, the embedded data store eliminates the need to run an external database such as etcd, which would add excessive overhead in that case.
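The server and agent roles are started with the `k3s server` and `k3s agent` subcommands. The following is a minimal sketch, not the installer’s actual code, of how a node ends up in one role or the other. The helper name `k3s_command` is hypothetical and only prints a command line; it assumes the install script’s convention that a node joins as an agent when `K3S_URL` is set and starts as a server otherwise.

```shell
# Hypothetical helper: print the k3s command line a node would run.
# Mirrors the install script's convention: K3S_URL set -> agent, else server.
k3s_command() {
  if [ -n "${K3S_URL:-}" ]; then
    # An agent cannot join without the cluster's join token.
    if [ -z "${K3S_TOKEN:-}" ]; then
      echo "K3S_TOKEN is required when K3S_URL is set" >&2
      return 1
    fi
    # Worker role: runs the node components and registers with K3S_URL.
    printf 'k3s agent --server %s --token %s\n' "$K3S_URL" "$K3S_TOKEN"
  else
    # Control plane role: API server, scheduler, controllers, and datastore.
    printf 'k3s server\n'
  fi
}

# Example (prints rather than runs):
#   K3S_URL=https://myserver:6443 K3S_TOKEN=mynodetoken k3s_command
```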

K3s Architecture (source: https://k3s.io/img/how-it-works-k3s-revised.svg)

Setting Up K3s

There are several deployment models that can be used for K3s. You can have single-node clusters, a single control plane node with multiple workers, or multiple control plane nodes with multiple workers.

Installing a Single-Node Cluster

As you can see in Rancher’s Quick-Start Guide, installing a single-node cluster is as simple as issuing a single command:

```
root@server:~# curl -sfL https://get.k3s.io | sh -
[INFO] Finding release for channel stable
[INFO] Using v1.22.6+k3s1 as release
[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.22.6+k3s1/sha256sum-amd64.txt
[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.22.6+k3s1/k3s
[INFO] Verifying binary download
[INFO] Installing k3s to /usr/local/bin/k3s
[INFO] Skipping installation of SELinux RPM
[INFO] Creating /usr/local/bin/kubectl symlink to k3s
[INFO] Creating /usr/local/bin/crictl symlink to k3s
[INFO] Creating /usr/local/bin/ctr symlink to k3s
[INFO] Creating killall script /usr/local/bin/k3s-killall.sh
[INFO] Creating uninstall script /usr/local/bin/k3s-uninstall.sh
[INFO] env: Creating environment file /etc/systemd/system/k3s.service.env
[INFO] systemd: Creating service file /etc/systemd/system/k3s.service
[INFO] systemd: Enabling k3s unit
Created symlink /etc/systemd/system/multi-user.target.wants/k3s.service → /etc/systemd/system/k3s.service.
[INFO] systemd: Starting k3s
```

The command worked with no additional configuration, and it looks like everything started properly.

```
root@server:~# kubectl get nodes -A
NAME     STATUS   ROLES                  AGE     VERSION
server   Ready    control-plane,master   1m29s   v1.22.6+k3s1
```

Adding a Worker Node

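Adding a worker node can also be as easy as issuing a single command, provided the requirements are met (for example, the agent must be able to reach the server on port 6443). You’ll need the server’s hostname and the join token, which is stored in the /var/lib/rancher/k3s/server/token file on the server.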
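If you script this step, a minimal sketch like the following can run on the server and print the matching command to paste on the agent. The function name `k3s_join_command` is hypothetical; it assumes the default port 6443 used in the example below and the token path given above, and you will most likely need root to read that file.

```shell
# Hypothetical helper: print the agent join command for a given server.
# Assumes the default port 6443 and the token file path given above.
k3s_join_command() {
  local server_host="$1"
  local token_file="${2:-/var/lib/rancher/k3s/server/token}"
  local token
  # Read the token, dropping the trailing newline and any stray whitespace.
  token=$(tr -d '[:space:]' < "$token_file") || return 1
  if [ -z "$token" ]; then
    echo "no token found in $token_file" >&2
    return 1
  fi
  printf 'curl -sfL https://get.k3s.io | K3S_URL=https://%s:6443 K3S_TOKEN=%s sh -\n' \
    "$server_host" "$token"
}

# Example, run as root on the server:
#   k3s_join_command myserver
```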

```
[root@agent ~]# curl -sfL https://get.k3s.io | \
    K3S_URL=https://myserver:6443 \
    K3S_TOKEN=mynodetoken \
    sh -
[INFO] Finding release for channel stable
[INFO] Using v1.22.6+k3s1 as release
[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.22.6+k3s1/sha256sum-amd64.txt
[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.22.6+k3s1/k3s
[INFO] Verifying binary download
[INFO] Installing k3s to /usr/local/bin/k3s
[INFO] Skipping installation of SELinux RPM
[INFO] Creating /usr/local/bin/kubectl symlink to k3s
[INFO] Creating /usr/local/bin/crictl symlink to k3s
[INFO] Creating /usr/local/bin/ctr symlink to k3s
[INFO] Creating killall script /usr/local/bin/k3s-killall.sh
[INFO] Creating uninstall script /usr/local/bin/k3s-agent-uninstall.sh
[INFO] env: Creating environment file /etc/systemd/system/k3s-agent.service.env
[INFO] systemd: Creating service file /etc/systemd/system/k3s-agent.service
[INFO] systemd: Enabling k3s-agent unit
Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.
[INFO] systemd: Starting k3s-agent
```

And did it work? Yes, yes it did.

```
root@server:~# kubectl get nodes -A
NAME     STATUS   ROLES                  AGE     VERSION
server   Ready    control-plane,master   4m35s   v1.22.6+k3s1
agent    Ready    <none>                 50s     v1.22.6+k3s1
```

Air Gap Installations

If you need to do an installation in an environment where the Internet isn’t available or isn’t reliable, or if you’d rather not have to place your trust in a random script from the Internet, then you will need to do what is called an air gap installation. (The term “air gap” was originally used in the security industry to mean that there was a physical separation between the Internet and the device in question.)

To accomplish this type of installation, download the assets for the K3s release of your choice from the official GitHub repository (at https://github.com/k3s-io/k3s/releases), then follow the instructions in Rancher’s air gap documentation. In short, you copy the k3s binary, the matching air gap images archive, and the install script to each node, and the installation itself is fairly simple.
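To gather the files on a machine that does have Internet access, a sketch like this prints the download URLs for a given release. The helper `k3s_airgap_urls` and the `K3S_RELEASES` variable are hypothetical. The URL layout and the `k3s` and `sha256sum-amd64.txt` asset names match the installer output shown earlier, while the `k3s-airgap-images-amd64.tar` name is an assumption you should check against the release page. The sketch covers amd64 only.

```shell
# Hypothetical base URL variable and helper for an amd64 air gap download list.
K3S_RELEASES="https://github.com/k3s-io/k3s/releases/download"

k3s_airgap_urls() {
  local version="$1"
  local asset
  # "k3s" and "sha256sum-amd64.txt" appear in the installer output above;
  # the images archive name is an assumption, so confirm it on the release page.
  for asset in k3s sha256sum-amd64.txt k3s-airgap-images-amd64.tar; do
    printf '%s/%s/%s\n' "$K3S_RELEASES" "$version" "$asset"
  done
}

# Example:
#   k3s_airgap_urls v1.22.6+k3s1 | xargs -n 1 curl -sfLO
```

Before copying the files over, you can verify them with `sha256sum -c --ignore-missing sha256sum-amd64.txt`. On each air-gapped node, the documented steps are roughly: place the binary at /usr/local/bin/k3s and make it executable, copy the images archive into /var/lib/rancher/k3s/agent/images/, and run the install script (saved beforehand from https://get.k3s.io) with INSTALL_K3S_SKIP_DOWNLOAD=true.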

Enabling a Multi-Node Control Plane for High Availability

K3s isn’t configured for a highly available control plane out of the box, but you can set it up for high availability. Rancher’s installation documentation explains the steps and the additional requirements.

According to Rancher’s high availability installation guide, the general idea is to run the server setup with an additional parameter pointing at the external data store on all of the nodes that will be members of the control plane. Then, before adding workers, you need to set up a single point of entry for them to connect to so that they will not need to be reconfigured if a control plane node fails. Usually, a virtual IP or a load balancer provides that functionality.
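The key ingredient for each server is the `--datastore-endpoint` value. The sketch below builds one for MySQL or PostgreSQL. The helper `datastore_endpoint` is hypothetical; the URI shapes follow the examples in the K3s datastore documentation, default ports are assumed, and credentials are assumed not to need URL encoding.

```shell
# Hypothetical helper: build a --datastore-endpoint URI.
# Arguments: kind (mysql|postgres), user, password, host, database name.
# Assumes default ports and credentials without URL-special characters.
datastore_endpoint() {
  local kind="$1" user="$2" pass="$3" host="$4" db="$5"
  case "$kind" in
    mysql)    printf 'mysql://%s:%s@tcp(%s:3306)/%s\n' "$user" "$pass" "$host" "$db" ;;
    postgres) printf 'postgres://%s:%s@%s:5432/%s\n' "$user" "$pass" "$host" "$db" ;;
    *)        echo "unsupported datastore: $kind" >&2; return 1 ;;
  esac
}

# Example: on every server node (all values are placeholders):
#   curl -sfL https://get.k3s.io | sh -s - server \
#     --datastore-endpoint="$(datastore_endpoint mysql k3s secret db.example.com k3s)" \
#     --token=SHARED_SECRET --tls-san=LB_ADDRESS
```

The agents would then use the load balancer or virtual IP address in K3S_URL rather than the address of any single server.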

Once these are in place, you use the same commands documented above to add worker nodes.
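Whichever topology you choose, it is worth confirming that every node has registered and reports Ready before you schedule workloads. The following is a minimal sketch; the function name `nodes_not_ready` is hypothetical, and it assumes STATUS is the second column of `kubectl get nodes --no-headers` output, as in the listings above.

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin,
# print the name of every node whose STATUS (column 2) is not exactly "Ready",
# and exit non-zero if any such node exists or if no nodes were listed at all.
nodes_not_ready() {
  awk '
    BEGIN { bad = 0 }
    $2 != "Ready" { print $1; bad = 1 }
    END { if (NR == 0) exit 1; exit bad }
  '
}

# Example, on a server node:
#   kubectl get nodes --no-headers | nodes_not_ready && echo "all nodes Ready"
```

Cordoned nodes, which report `Ready,SchedulingDisabled`, are listed as not ready by this check, and empty input (for example, when kubectl cannot reach the API server) is treated as a failure.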

K3s vs. K3d

K3d is a community-driven open source utility that runs K3s in Docker containers instead of as a process directly on the host operating system, which is how K3s runs by default. Among other benefits, this lets you run multiple K3s clusters on a single host, which is useful for testing multi-node configurations and can make spinning up multiple clusters for CI/CD pipelines faster and more reliable.
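For the CI/CD case, here is a minimal sketch that prints the k3d commands for several independent clusters on one host. `k3d_plan` is a hypothetical helper; `k3d cluster create NAME --agents N` is k3d v4-and-later syntax, so check `k3d --help` if you run a different version.

```shell
# Hypothetical helper: print one `k3d cluster create` command per CI cluster.
# Arguments: name prefix, number of clusters, agents per cluster (default 2).
k3d_plan() {
  local prefix="$1" count="$2" agents="${3:-2}"
  local i=1
  while [ "$i" -le "$count" ]; do
    printf 'k3d cluster create %s-%d --agents %d\n' "$prefix" "$i" "$agents"
    i=$((i + 1))
  done
}

# Example: review the plan, then pipe it to sh to create the clusters.
#   k3d_plan ci 3
#   k3d_plan ci 3 | sh
```

Printing the plan first keeps the step reviewable; each cluster can later be removed with `k3d cluster delete` and its name.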

You can find more information at k3d.io. Note that although K3d is driven by the same community and commercial organizations that are behind K3s, it does not currently have commercial support.

Alternative Lightweight Kubernetes Distributions

Many different projects are creating lightweight Kubernetes distributions. In this section, we will cover a few of the most popular.

Minikube

Minikube is created and maintained by the Kubernetes project. Its primary purpose is to provide a single-node Kubernetes cluster for development and testing. It is as bare-bones as a cluster can be, and since it is Kubernetes, you can apply any configuration that you would apply to any other cluster, including CNI, ingress, CSI, and deployments. Minikube runs the cluster inside a lightweight Linux VM (or a container, when using the Docker or Podman driver), and it supports Docker, HyperKit, Hyper-V, KVM, Parallels, Podman, VirtualBox, and VMware Fusion/Workstation as drivers. Because the cluster is isolated in a VM or container, minikube runs equally well on a macOS, Windows, or Linux desktop.

MicroK8s

MicroK8s was created by Canonical for environments with constrained resources. It has also been widely adopted for development and testing because its base runtime needs less memory than minikube’s, and the same MicroK8s build you test against can also run in production. Much like minikube, MicroK8s can run inside an Ubuntu-based VM on macOS and Windows, and it can also be installed directly on a Linux host as a snap package. Direct installation is the model most often used in production environments, since hosts in Kubernetes clusters are usually single-purpose, unlike development and test machines.

MicroShift

OpenShift is a leading enterprise-focused Kubernetes distribution built by Red Hat. Red Hat also offers a lightweight distribution called MicroShift, which is built from the same enterprise-friendly mix of technologies but focuses on edge use cases. While it is not as mature as the other options discussed here, MicroShift is (like K3s) more prescriptive about the components it includes in its technology stack.

K3s vs. MicroK8s vs. Minikube vs. MicroShift

| | K3s | MicroK8s | Minikube | MicroShift |
|---|---|---|---|---|
| Backed By | CNCF (donated by Rancher) | Canonical | Kubernetes (under CNCF) | Red Hat |
| Minimum Memory | 0.5 GB | 0.5 GB | 2 GB | 2 GB |
| Architectures | x86, ARM64, ARMv7 | x86, ARM64, s390x | x86, ARM64, ARMv7, ppc64, s390x | x86 (AMD64), ARM64, riscv64 |
| HA by Default | No | Yes | No | No |
| Default CNI | Flannel | Calico | KindNet | Flannel |
| Default Ingress | Traefik | N/A | N/A | OpenShift-ingress |
| Packaged OS | N/A | Ubuntu | Minimal Linux VM image | N/A |
| Will Run On | Most major Linux distributions (Ubuntu, SUSE, RHEL, Rocky, etc.) | macOS, Windows, most major Linux distributions | macOS, Windows, most major Linux distributions | Designed for recent Red Hat family Linux distributions (CentOS Stream, Fedora, RHEL) |
| Custom Kubernetes Binaries | Yes | No | No | No |
| Commercial Support Available | Yes (SUSE) | Yes (Canonical) | No | Not yet (Red Hat) |

Conclusion

Since K3s is a certified Kubernetes distribution, workloads and configurations written for standard Kubernetes should run on it without changes. If you intend to run large clusters, however, you might be better off with Rancher’s RKE2 or a managed service such as GKE, EKS, or Civo. Where K3s really shines is in development, testing, and resource-constrained environments such as IoT and edge computing. Kubernetes is best-of-breed at what it does, and a streamlined deployment with a lightweight runtime is exactly what those situations need.