When it comes to securing applications in the cloud, adaptation is not just a strategy but a necessity. We’re currently experiencing a monumental shift driven by the mass adoption of AI, fundamentally changing the way companies operate. From optimizing efficiency through automation to transforming the customer experience with speed and personalization, AI has empowered developers with exciting new capabilities. While the benefits of AI are undeniable, it is still an emerging technology that poses inherent risks for organizations trying to understand this changing landscape. That’s where Sysdig comes in to secure your organization’s AI development and keep the focus on innovation.

Today, we are thrilled to announce the launch of AI Workload Security to identify and manage active risk associated with AI environments. This new addition to our cloud-native application protection platform (CNAPP) will help security teams see and understand their AI environments, identify suspicious activity on workloads that contain AI packages, and prioritize and fix issues fast.


AI has changed the game

The explosive growth of AI in the last year has reshaped the way many organizations build applications. AI has quickly become a mainstream topic across all industries and a focus for executives and boards. Advances in the technology have led to significant investment, with more than two-thirds of organizations expected to increase their AI investment over the next three years. GenAI specifically has been a major catalyst of this trend. The Cloud Security Alliance’s recent State of AI and Security Survey Report found that 55% of organizations are planning to implement GenAI solutions this year. Sysdig’s research also found that since December 2023, the deployment of OpenAI packages has nearly tripled.

With more companies deploying GenAI workloads, Kubernetes has become the deployment platform of choice for AI. Large language models (LLMs), which analyze and generate content by learning from large amounts of text data, are a core component of many GenAI applications. Kubernetes has numerous characteristics that make it an ideal platform for LLMs, providing advantages in scalability, flexibility, portability, and more. LLMs require significant resources to run, and Kubernetes can automatically scale resources up and down, while also making it simple to run LLMs as container workloads across various environments. This flexibility in deploying GenAI workloads is a major draw, and top companies like OpenAI, Cohere, and others have adopted Kubernetes for their LLMs.

From opportunity to risk: security implications of AI

AI continues to advance rapidly, but its widespread deployment creates a whole new set of security risks. The Cloud Security Alliance survey found that 31% of security professionals believe AI will be of equal benefit to security teams and malicious third parties, with another 25% believing it will be more beneficial to malicious parties. Sysdig’s research also found that 34% of all currently deployed GenAI workloads are publicly exposed, meaning they are accessible from the internet or another untrusted network without appropriate security measures in place. This increases the risk of security breaches and puts the sensitive data leveraged by GenAI models in danger.


The importance of AI security in the cloud is also underscored by forthcoming guidelines and growing pressure to audit and regulate AI, as proposed by the Biden administration’s October 2023 Executive Order and the subsequent recommendations from the National Telecommunications and Information Administration (NTIA) in March 2024. The European Parliament also adopted the AI Act in March 2024, introducing stringent requirements on risk management, transparency, and other issues. Ahead of this imminent AI legislation, organizations should assess their own ability to secure and monitor AI in their environments.

Many organizations lack experience securing AI workloads and identifying risks associated with AI environments. As with the rest of an organization’s cloud environment, it is critical to prioritize active risks tied to AI workloads, such as vulnerabilities in in-use AI packages or malicious actors trying to modify AI requests and responses. Without full understanding and visibility of AI risk, it’s possible for AI to do more harm than good.

Mitigate active AI risk with AI Workload Security

We’re excited to unveil AI Workload Security in Sysdig’s CNAPP to help our customers adopt AI securely. AI Workload Security allows security teams to identify and prioritize workloads in their environment that contain leading AI engines and software packages, such as OpenAI and TensorFlow, and to detect suspicious activity within these workloads. With these new capabilities, your organization can get real-time visibility of the top active AI risks, enabling your teams to address them immediately. Sysdig helps organizations manage and control their AI usage, whether it’s official or deployed without proper approval, so they can focus on accelerating innovation.

Sysdig’s AI Workload Security ties into our Cloud Attack Graph, the neural center of the Sysdig platform, integrating with our Risk Prioritization, Attack Path Analysis, and Inventory features to provide a single view of correlated risks and events.

AI Workload Security in action

The introduction of real-time AI Workload Security helps companies prioritize the most critical risks associated with AI environments. Sysdig’s Risks page provides a stack-ranked view of risks, evaluating which combinations of findings and context need to be addressed immediately across your cloud environment. Publicly exposed AI packages are highlighted along with other risk factors. In the example below, we see a critical risk with the following findings:

- Publicly exposed workload

- Contains an AI package

- Has a critical vulnerability with an exploit on an in-use package

- Contains a high confidence event

Based on the combination of findings, users can determine the severity of the risk that exposed AI workloads create. They can also gather more context around the risk, including which packages on the workload are running AI and whether vulnerabilities on these packages can be fixed with a patch.

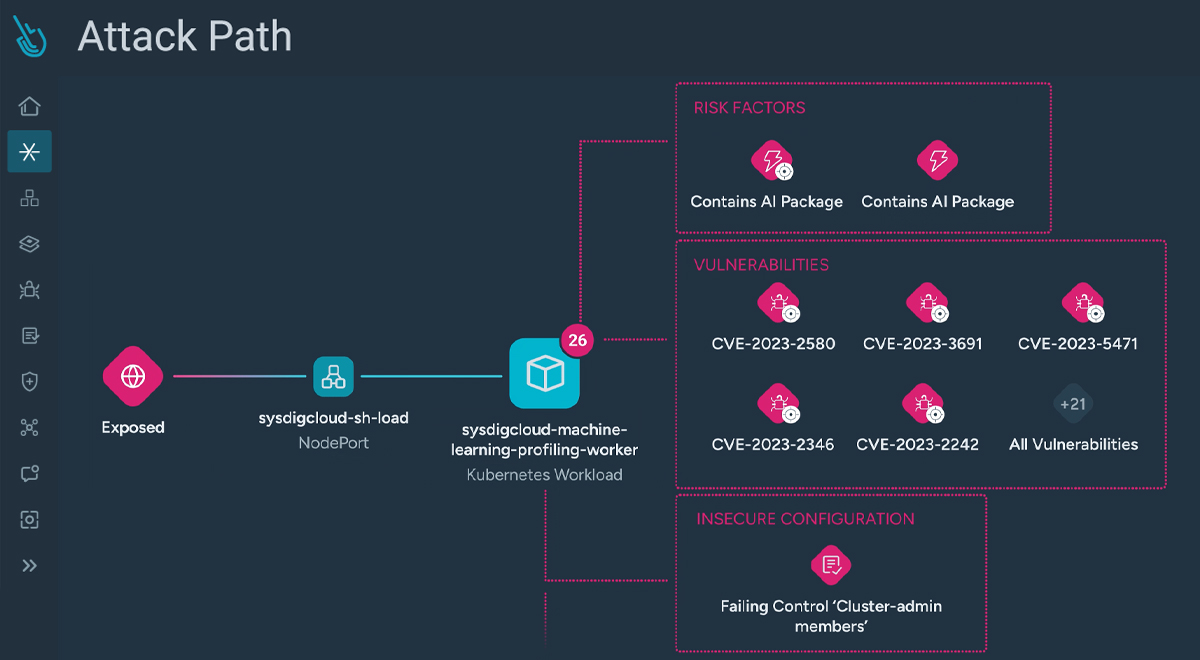
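
To make the idea of combining findings concrete, here is a minimal sketch of how a stack-ranked view could be derived from the four findings listed above. It is an illustration only, not how Sysdig computes risk: the `Workload` class, the field names, and the numeric weights are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical weights, chosen only for illustration.
# They do not reflect Sysdig's actual prioritization logic.
WEIGHTS = {
    "publicly_exposed": 3,
    "has_ai_package": 2,
    "critical_vuln_in_use": 4,
    "high_confidence_event": 3,
}


@dataclass
class Workload:
    """A workload and the findings attached to it (hypothetical model)."""
    name: str
    publicly_exposed: bool = False
    has_ai_package: bool = False
    critical_vuln_in_use: bool = False
    high_confidence_event: bool = False


def risk_score(workload: Workload) -> int:
    """Sum the weights of every finding present on the workload."""
    return sum(
        weight
        for finding, weight in WEIGHTS.items()
        if getattr(workload, finding)
    )


def stack_rank(workloads: list[Workload]) -> list[Workload]:
    """Return workloads ordered from highest to lowest risk score."""
    return sorted(workloads, key=risk_score, reverse=True)


if __name__ == "__main__":
    fleet = [
        Workload("batch-job"),
        Workload("internal-model", has_ai_package=True),
        Workload(
            "chat-frontend",
            publicly_exposed=True,
            has_ai_package=True,
            critical_vuln_in_use=True,
            high_confidence_event=True,
        ),
    ]
    for w in stack_rank(fleet):
        print(w.name, risk_score(w))
```

The point of the sketch is that no single finding decides the ranking: a workload that is exposed, runs an AI package, has an exploitable in-use vulnerability, and shows a runtime event rises to the top because the findings compound.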

Digging deeper into these risks, users can also get a more visual representation of the exploitable links across resources with Attack Path Analysis. Sysdig uncovers potential attack paths involving workloads with AI packages, showing how they fit with other risk factors like vulnerabilities, misconfigurations, and runtime detections on these workloads. Users can see which AI packages running on the workload are in use and how vulnerable packages can be fixed. With the power of AI Workload Security, users can quickly identify critical attack paths involving their AI models and data, and correlate them with real-time events.

Sysdig also gives users the ability to identify all of the resources in their cloud environment that have AI packages running. AI Workload Security extends Sysdig’s Inventory, enabling users to view a full list of resources containing AI packages with a single click, as well as identify risks on these resources.
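
As a rough illustration of what an inventory filter like this does conceptually, the sketch below matches each resource's package list against a set of known AI package names. It is not Sysdig's implementation: the function names and the inventory shape (a mapping of resource name to package names) are assumptions, and the package set contains only the two examples named in this post.

```python
# Hypothetical set of AI package names; only the two examples
# mentioned in this post are included.
AI_PACKAGES = {"openai", "tensorflow"}


def ai_packages_in(packages: list[str]) -> list[str]:
    """Return the AI packages found in a package list, sorted by name."""
    normalized = {name.lower() for name in packages}
    return sorted(normalized & AI_PACKAGES)


def resources_with_ai(inventory: dict[str, list[str]]) -> dict[str, list[str]]:
    """Keep only resources that contain at least one AI package."""
    result = {}
    for resource, packages in inventory.items():
        found = ai_packages_in(packages)
        if found:
            result[resource] = found
    return result


if __name__ == "__main__":
    # Assumed inventory shape: resource name -> installed package names.
    inventory = {
        "pod/chat": ["OpenAI", "requests"],
        "pod/web": ["flask"],
        "pod/train": ["tensorflow", "openai"],
    }
    print(resources_with_ai(inventory))
```

Matching on package names alone is deliberately simple. A real inventory would also need to know whether the package is actually in use at runtime, which is the distinction the post draws with in-use packages.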

Want to learn more?

Armed with these new capabilities, you’ll be well equipped to defend against active AI risk, helping your organization realize the full potential of AI’s benefits. These advancements provide an additional layer of security to our top-rated CNAPP solution, stretching our coverage further across the cloud. Click here to learn more about Sysdig’s leading CNAPP.
