In this article we will cover Docker image scanning with open source image scanning tools. We will explain how to deploy and set up Docker security scanning, both on private Docker repositories and as a CI/CD pipeline validation step. We will also explore ways of integrating image scanning with CI/CD tools like Jenkins and runtime security tools like Falco.

This is the second post in a two-part series on Open Source Container Security. The first post focused on open source container runtime security using Falco to build a response engine for Kubernetes with security playbooks as FaaS.

Sysdig Secure is our commercial product built on top of the open source tools included in this guide. If you are looking for production-ready Kubernetes image scanning, compliance or runtime security, check it out as well!

Read on to learn about:

- Docker Vulnerability Scanning

- Docker Image Scanning Open Source Tools

- Open Source Docker Scanning Tool: Anchore Engine

- Deploying the Anchore Engine for Docker Image Scanning

- Configuring Anchore to Scan your Private Docker Repositories

- CI/CD Security: Docker Security Scanning with Jenkins

- Integrating Anchore Engine and Kubernetes for Image Validation

- Blocking Forbidden Docker Images or Unscanned Images

Docker Vulnerability Scanning

One of the last steps of the CI (Continuous Integration) pipeline involves building the container images that will be pulled and executed in our environment. Therefore, whether you are building Docker images from your own code or using unmodified third-party images, it’s important to identify any known vulnerabilities that may be present in those images. This process is known as Docker vulnerability scanning.

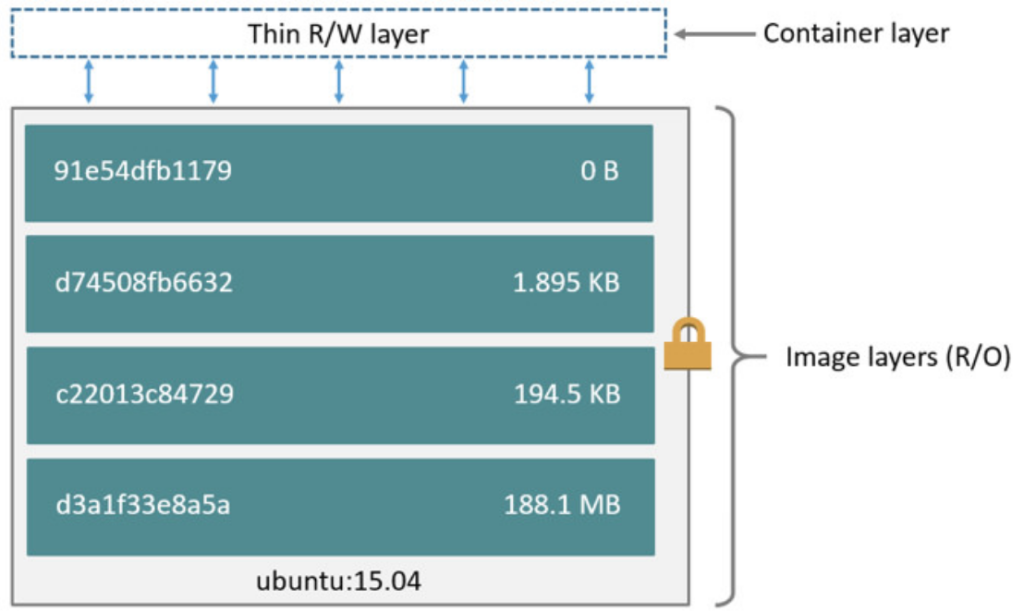

Docker images are composed of several immutable layers. Each layer is basically a diff over the previous one, adding files and other changes, and each one is associated with a unique hash ID:

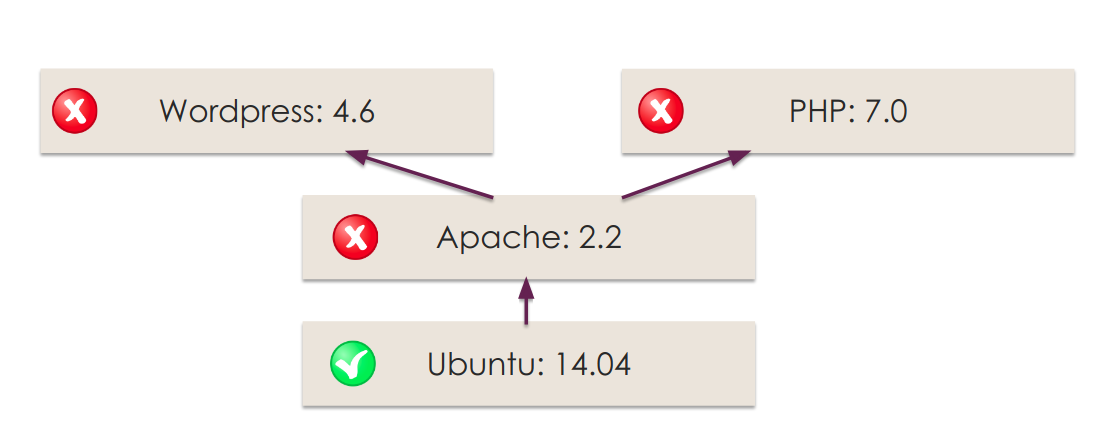

Any new Docker image that you create will probably be based on an existing image (the FROM statement in the Dockerfile). You can leverage this layered design to avoid re-scanning the entire image every time you create a new one or introduce a change. The flip side is that if a parent image is vulnerable, any other images built on top of it will be vulnerable too.
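The reuse idea can be illustrated with a scan cache keyed by layer digest. This is a minimal sketch, not how Anchore or any other scanner stores its results: real scanners analyze the image as a whole, and `scan_layer` is a hypothetical stand-in for the actual analysis. It only shows why cached results can be reused and why findings in a shared parent layer propagate to every child image.

```python
from typing import Callable, Dict, List


def scan_image(
    layer_digests: List[str],
    cache: Dict[str, List[str]],
    scan_layer: Callable[[str], List[str]],
) -> List[str]:
    """Return all findings for an image, analyzing only layers not yet in the cache.

    layer_digests: the image's layers, base layer first.
    cache: maps a layer digest to the findings recorded when it was scanned.
    scan_layer: hypothetical function that analyzes one layer.
    """
    findings: List[str] = []
    for digest in layer_digests:
        if digest not in cache:
            # Only layers never seen before are analyzed.
            cache[digest] = scan_layer(digest)
        # Findings from shared parent layers are inherited by every child image.
        findings.extend(cache[digest])
    return findings


if __name__ == "__main__":
    scanned: List[str] = []

    def fake_scan(digest: str) -> List[str]:
        scanned.append(digest)
        return [f"finding-in-{digest}"] if digest == "sha256:base" else []

    cache: Dict[str, List[str]] = {}
    scan_image(["sha256:base", "sha256:app-v1"], cache, fake_scan)
    result = scan_image(["sha256:base", "sha256:app-v2"], cache, fake_scan)
    print(result)   # ['finding-in-sha256:base']
    print(scanned)  # ['sha256:base', 'sha256:app-v1', 'sha256:app-v2']
```

The second image reuses the cached result for the shared base layer and still inherits its finding.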

The Docker build process follows a manifest (the Dockerfile) that includes relevant security information you can scan and evaluate, including the base images, exposed ports, environment variables, entrypoint script, externally installed binaries, etc. By the way, don’t miss our Docker security best practices article for more hints on building your Dockerfiles.

In a secure pipeline, Docker vulnerability scanning should be a mandatory step of your CI/CD process and any image should be scanned and approved before ever entering “Running” state in the production clusters.

The Docker security scanning process typically includes:

- Checking the software packages, binaries, libraries, operating system files, etc. against one or more well-known vulnerability databases. Some Docker scanning tools keep a repository of scanning results for common Docker images that can be used as a cache to speed up the process.

- Analyzing the Dockerfile and image metadata to detect security-sensitive configurations, like running as a privileged (root) user, exposing insecure ports, or using base images tagged with “latest” rather than specific versions for full traceability.

- Checking user-defined policies, or any set of requirements that you want to check for every image, like software package blacklists, base image whitelists, whether a SUID file has been added, etc.

You can classify and group the different security issues you might find in an image, assigning different priorities: a warning notification is sufficient for some issues, while others will be severe enough to justify aborting the build.
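As an illustration of assigning priorities, the sketch below matches installed packages against a tiny hand-written vulnerability list and maps each finding's severity to an action. The GO/WARN/STOP names mirror the actions used in Anchore policies, but everything else is a simplified assumption: the severity-to-action table is hypothetical, the vulnerability IDs are placeholders, and versions are compared as plain dotted integers, unlike real distribution version schemes.

```python
from typing import Dict, List, Tuple

# Hypothetical policy table: severity -> action. Unknown severities default to WARN.
ACTIONS = {"Low": "GO", "Medium": "WARN", "High": "STOP", "Critical": "STOP"}
RANK = {"GO": 0, "WARN": 1, "STOP": 2}


def parse_version(version: str) -> Tuple[int, ...]:
    # Simplified: only dotted integers such as "1.2.11" are supported.
    return tuple(int(part) for part in version.split("."))


def evaluate(installed: Dict[str, str], vulns: List[dict]) -> Tuple[str, List[str]]:
    """Return the overall action (GO/WARN/STOP) and one report line per match."""
    final = "GO"
    report: List[str] = []
    for vuln in vulns:
        version = installed.get(vuln["package"])
        # Vulnerable only if the package is installed in a version older than the fix.
        if version is None or parse_version(version) >= parse_version(vuln["fixed_in"]):
            continue
        action = ACTIONS.get(vuln["severity"], "WARN")
        report.append(f"{action}: {vuln['id']} in {vuln['package']} {version}")
        if RANK[action] > RANK[final]:
            final = action
    return final, report


installed = {"openssl": "1.1.0", "zlib": "1.2.11"}
vulns = [  # placeholder IDs, not real advisories
    {"id": "EXAMPLE-1", "package": "openssl", "fixed_in": "1.1.1", "severity": "High"},
    {"id": "EXAMPLE-2", "package": "zlib", "fixed_in": "1.2.12", "severity": "Medium"},
    {"id": "EXAMPLE-3", "package": "curl", "fixed_in": "7.0.0", "severity": "Critical"},
]
final, report = evaluate(installed, vulns)
print(final)   # STOP
print(report)  # ['STOP: EXAMPLE-1 in openssl 1.1.0', 'WARN: EXAMPLE-2 in zlib 1.2.11']
```

In a CI job, a STOP result would translate into a non-zero exit code that aborts the build, while WARN findings are only reported.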

Docker Image Scanning Open Source Tools

There are several Docker image scanning tools available, and some of the most popular include:

- Anchore Engine: An open source image scanning tool. It provides a centralized service for inspection and analysis, and applies user-defined acceptance policies to allow automated validation and certification of container images.

- CoreOS/Clair: An open source project for the static analysis of vulnerabilities in application containers (currently including appc/Rkt and Docker).

- Vuls.io: An agent-less Linux vulnerability scanner based on information from NVD, OVAL, etc. It has some container image support, although it is not a container-specific tool.

- OpenSCAP: A suite of automated audit tools to examine the configuration and known vulnerabilities in your software, following the NIST-certified Security Content Automation Protocol (SCAP). Again, it is not container-specific, but it does include some level of container support.

Open Source Docker Scanning Tool: Anchore Engine

The flexible user-defined policies, breadth of analysis, API and performance characteristics of Anchore Engine made it our top choice among open source tools available today.

- Anchore Engine allows developers to perform detailed analysis on their container images, run queries, produce reports and define policies that can be used in CI/CD pipelines.

- Using Anchore Engine, container images can be downloaded from Docker V2 compatible container registries, analyzed and evaluated against user defined policies.

- It can be accessed directly through a RESTful API or via the Anchore CLI tool.

- The scanning includes not just CVE-based security scans but also policy-based scans that can include checks around security, compliance and operational best practices.
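Because the CLI is a client of the REST API, the same operations can be scripted directly. The sketch below only builds the request for adding an image to be analyzed, without sending it. It assumes the v1 API accepts `POST /images` with a JSON body containing a `tag` field and HTTP basic authentication, which we understand to be what `anchore-cli image add` uses; check the API reference of your Anchore Engine version before relying on it. The URL and credentials reuse the docker-compose example values from later in this article, and the image tag is illustrative.

```python
import base64
import json
import urllib.request


def build_add_image_request(base_url: str, user: str, password: str, tag: str) -> urllib.request.Request:
    """Build, without sending, a request asking Anchore Engine to analyze an image.

    Assumption: the v1 API accepts POST {base_url}/images with a JSON body {"tag": ...}
    and HTTP basic authentication.
    """
    credentials = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/images",
        data=json.dumps({"tag": tag}).encode(),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {credentials}",
        },
        method="POST",
    )


req = build_add_image_request("http://172.18.0.1:8228/v1", "admin", "foobar", "docker.io/library/alpine:3.8")
print(req.get_method(), req.full_url)  # POST http://172.18.0.1:8228/v1/images
# Sending it requires a running engine:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp))
```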

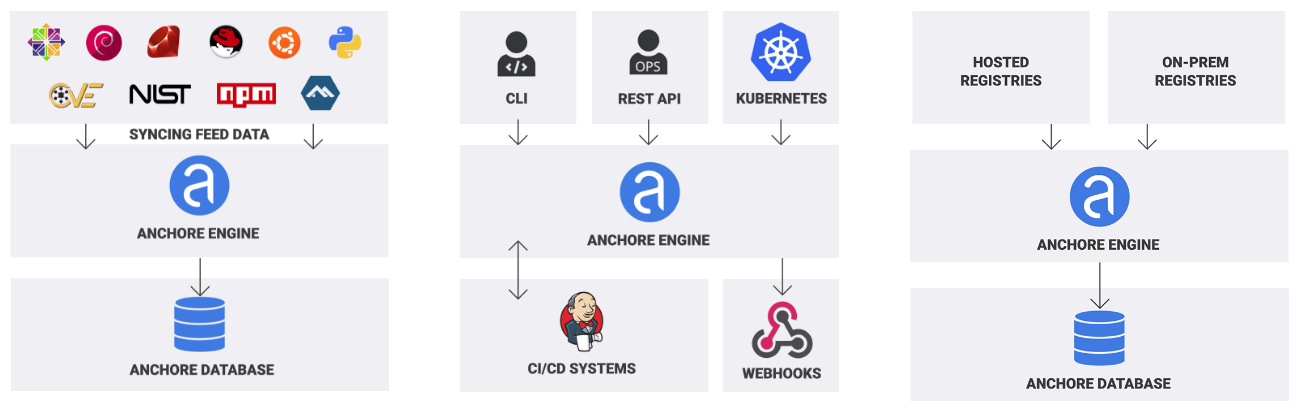

Anchore Engine sources and endpoints

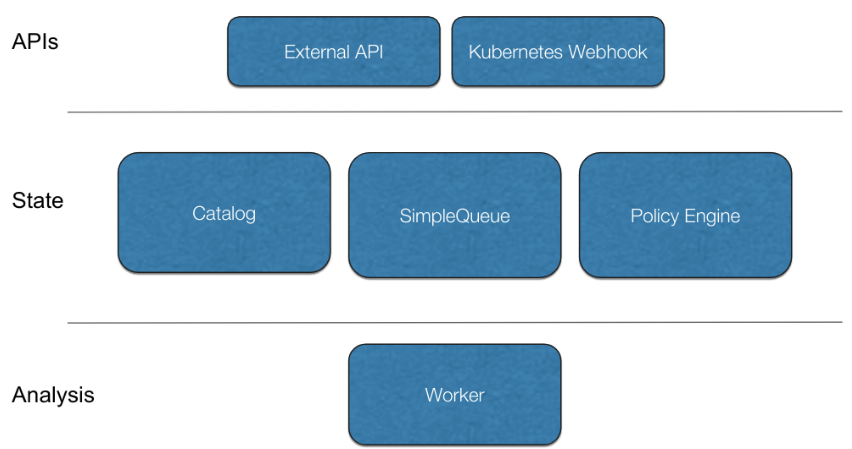

The Anchore Engine architecture is composed of six components that can be deployed in a single container or scaled out:

- API Service: Central communication interface that can be accessed by code using a REST API or directly using the command line.

- Image Analyzer Service: Runs on the “worker” Anchore nodes, which perform the actual Docker image scanning.

- Catalog Service: Internal database and system state service.

- Queuing Service: Organizes, persists and schedules the engine tasks.

- Policy Engine Service: Policy evaluation and vulnerabilities matching rules.

- Kubernetes Webhook Service: Kubernetes-specific webhook service to validate images before they are spawned.

Anchore Engine architecture

Deploying the Anchore Engine for Docker Image Scanning

How to Install Anchore Using docker-compose

Perhaps the fastest way to get a taste of Anchore Engine is to deploy it locally using a docker-compose stack:

```shell
mkdir anchore
cd anchore
curl https://raw.githubusercontent.com/anchore/anchore-engine/master/scripts/docker-compose/docker-compose.yaml > docker-compose.yaml
mkdir config
curl https://raw.githubusercontent.com/anchore/anchore-engine/master/scripts/docker-compose/config.yaml > config/config.yaml
mkdir db
docker-compose up -d
```

There are several parameters that you can tune in this config.yaml file: log level, listening port, credentials, webhook notifications, etc.

The CLI client is also available as a Docker container that you can pull directly from DockerHub:

```shell
docker pull anchore/engine-cli:latest
```

Then, we can check that all the Anchore Engine services are up and running and we are ready to go:

```shell
docker run anchore/engine-cli:latest anchore-cli --u admin --p foobar --url http://172.18.0.1:8228/v1 system status
Service simplequeue (dockerhostid-anchore-engine, http://anchore-engine:8083): up
Service analyzer (dockerhostid-anchore-engine, http://anchore-engine:8084): up
Service policy_engine (dockerhostid-anchore-engine, http://anchore-engine:8087): up
Service catalog (dockerhostid-anchore-engine, http://anchore-engine:8082): up
Service apiext (dockerhostid-anchore-engine, http://anchore-engine:8228): up
Service kubernetes_webhook (dockerhostid-anchore-engine, http://anchore-engine:8338): up
Engine DB Version: 0.0.7
Engine Code Version: 0.2.3
```
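When scripting a deployment, it can be handy to wait until every service reports `up` before submitting images. The sketch below parses text in the format shown above; the regular expression is derived only from that sample output, so treat the format as an assumption that may change between Anchore Engine versions.

```python
import re
from typing import Dict

# Matches lines like:
# Service analyzer (dockerhostid-anchore-engine, http://anchore-engine:8084): up
SERVICE_LINE = re.compile(r"^Service (\S+) \(([^,]+), ([^)]+)\): (\S+)$")


def service_states(status_output: str) -> Dict[str, str]:
    """Map each service name in `anchore-cli system status` output to its state."""
    states: Dict[str, str] = {}
    for line in status_output.splitlines():
        match = SERVICE_LINE.match(line.strip())
        if match:
            name, _host, _url, state = match.groups()
            states[name] = state
    return states


def all_services_up(status_output: str) -> bool:
    """True only if at least one service was found and every one reports 'up'."""
    states = service_states(status_output)
    return bool(states) and all(state == "up" for state in states.values())
```

You could, for example, capture the output of the `system status` command above and retry until `all_services_up` returns `True`.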

How to Install Anchore Engine in Kubernetes using Helm

You can also install Anchore Engine in Kubernetes with Helm using the Anchore Engine Helm chart. To do so, execute:

```shell
helm install --name anchore-stack stable/anchore-engine
```

Once deployed, it will print a list of instructions and useful commands to connect to the service.

Before anything else, we can check that all the pods are up and running:

```shell
kubectl get pods
NAME                                                  READY   STATUS    RESTARTS   AGE
anchore-stack-anchore-engine-core-5bf44cb6cd-zxx2k    1/1     Running   0          38m
anchore-stack-anchore-engine-worker-5f865c7bf-r72vs   1/1     Running   0          38m
anchore-stack-postgresql-76c87599dc-bbnxn             1/1     Running   0          38m
```

Then, follow the instructions printed to the screen to spawn an ephemeral container that has the anchore-cli tool:

```shell
ANCHORE_CLI_USER=admin
ANCHORE_CLI_PASS=$(kubectl get secret --namespace default anchore-stack-anchore-engine -o jsonpath="{.data.adminPassword}" | base64 --decode; echo)
kubectl run -i --tty anchore-cli --restart=Always --image anchore/engine-cli --env ANCHORE_CLI_USER=admin --env ANCHORE_CLI_PASS=${ANCHORE_CLI_PASS} --env ANCHORE_CLI_URL=http://anchore-stack-anchore-engine.default.svc.cluster.local:8228/v1/
# Then, at the shell prompt inside the anchore-cli container:
anchore-cli system status
```

There are many parameters that you can configure directly from the helm install command. Always replace the default passwords and configure persistent storage volumes. See the chart's values.yaml file for a complete list.

Configure Anchore to Scan your Private Docker Repositories

Adding private Docker V2 compatible image registries to the Anchore Engine is a pretty straightforward process, regardless of whether they are hosted by you or any of the common cloud registries.

To configure Anchore to scan your private Docker repositories, use the registry subcommand for all the registry-related operations. Its operation is mostly self-describing:

```shell
anchore-cli registry add index.docker.io <user> <password>
anchore-cli registry list
Registry          Type        User
index.docker.io   docker_v2   mateobur
```

Replace index.docker.io with the URL of your own registry if you are not using Docker Hub.

Once that’s complete, you are ready to start pulling and scanning images from the private registry:

```shell
anchore-cli image add index.docker.io/mateobur/secureclient:latest
Image Digest: sha256:dda434d0e19db72c3277944f92e203fe14f407937ed9f3f9534ec0579ce9cdac
Analysis Status: analyzed
Image Type: docker
Image ID: 5e1be4e7763143e8ff153887d2ae510fe1fee59c9a55392d65f4da73c9626d76
Dockerfile Mode: Guessed
Distro: ubuntu
Distro Version: 18.04
Size: 127739629
Architecture: amd64
Layer Count: 6
Full Tag: index.docker.io/mateobur/secureclient:latest
```

To pull from a private repository, you need to include the registry URL in the image name. Entering only <username>/<imagename> won’t work.
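Docker decides whether an image reference names a registry with a simple rule: if the part before the first `/` contains a `.` or a `:`, or is `localhost`, it is treated as a registry host; otherwise the image is resolved against Docker Hub. The hypothetical helper below applies a simplified version of that rule, so a script can reject references without an explicit registry before submitting them to Anchore.

```python
def has_explicit_registry(image_ref: str) -> bool:
    """Return True if the reference starts with a registry host (simplified Docker rule)."""
    first, sep, _rest = image_ref.partition("/")
    if not sep:
        # e.g. "ubuntu:18.04": no slash, so Docker Hub is implied.
        return False
    # A first component containing "." or ":" (or equal to "localhost") is a host.
    return "." in first or ":" in first or first == "localhost"


for ref in ("index.docker.io/mateobur/secureclient:latest", "mateobur/secureclient:latest"):
    print(ref, has_explicit_registry(ref))
# index.docker.io/mateobur/secureclient:latest True
# mateobur/secureclient:latest False
```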

Note that some vendor-specific Docker registries may need additional parameters on top of just user/password, see scanning Amazon Elastic Container Registry (ECR) with Anchore, for example.

CI/CD Security: Docker Security Scanning with Jenkins

Jenkins is an open source automation server with a plugin ecosystem that supports the typical tools that are part of your delivery pipelines. Jenkins helps to automate the CI/CD process. Anchore has been designed to plug seamlessly into a CI/CD pipeline: a developer commits code into the source code management system, like Git. This change triggers Jenkins to start a build which creates a container image, etc.

In a typical workflow, this container image is then run through some automated testing. If an image does not pass the Docker security scan (that is, it doesn’t meet the organization’s requirements for security or compliance), it doesn’t make sense to invest the time required to perform automated tests on it. A better approach is to “learn fast” by failing the build and returning the appropriate reports to the developer so they can address the issues.

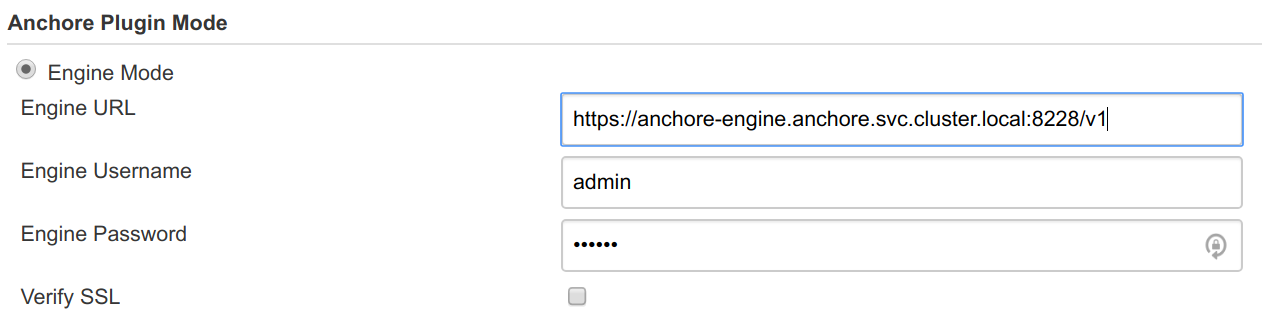

You can use the “Anchore plugin” available in the official plugin list that you can access via the Jenkins interface.

Once you have installed the plugin and configured the connection with the Engine (following the plugin’s instructions), you can include the Anchore evaluation as another (mandatory) step of your pipeline.

This way, your catalog will be automatically up to date and you will be able to detect and retract insecure images before they reach your Docker registry and automated functional tests are run.

From the Manage Jenkins -> Configure System menu, you need to configure the connection with the Engine API endpoint and credentials:

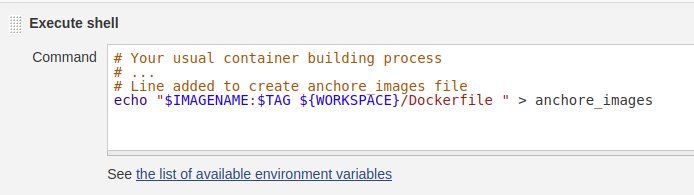

As the last step of your build pipeline, write the image name, tag and (optionally) Dockerfile path to a file named “anchore_images” in the local workspace:
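If your build is scripted, the file can also be generated from code. The sketch below assumes one image per line, optionally followed by a space and the path to its Dockerfile, which is our understanding of the format the Jenkins plugin reads; verify it against the plugin documentation. The registry and image names are hypothetical, and a temporary directory stands in for the Jenkins workspace.

```python
import tempfile
from pathlib import Path
from typing import Iterable, Optional, Tuple


def write_anchore_images(workspace: str, images: Iterable[Tuple[str, Optional[str]]]) -> Path:
    """Write one 'image:tag [dockerfile-path]' entry per line to <workspace>/anchore_images."""
    lines = [f"{image} {dockerfile}" if dockerfile else image for image, dockerfile in images]
    path = Path(workspace) / "anchore_images"
    path.write_text("\n".join(lines) + "\n")
    return path


with tempfile.TemporaryDirectory() as workspace:  # stands in for the Jenkins workspace
    path = write_anchore_images(workspace, [
        ("registry.example.com/team/app:1.0", f"{workspace}/Dockerfile"),
        ("registry.example.com/team/worker:1.0", None),
    ])
    print(path.read_text())
```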

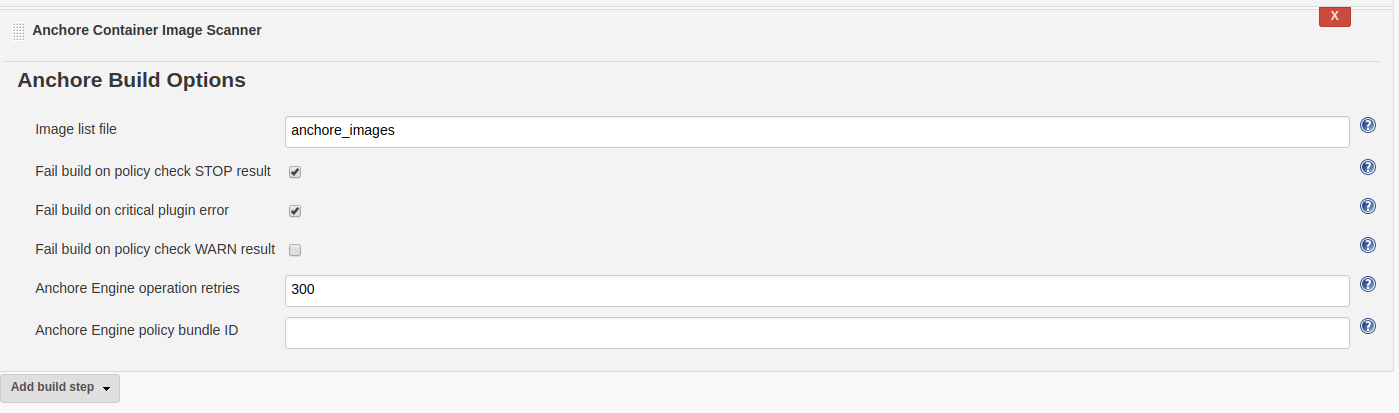

After that, you can invoke the Anchore container image scanner in the next build step:

The build will fail if Anchore’s policy evaluation returns a STOP result for the image. Of course, you can also define in your Anchore policy what triggers a STOP.

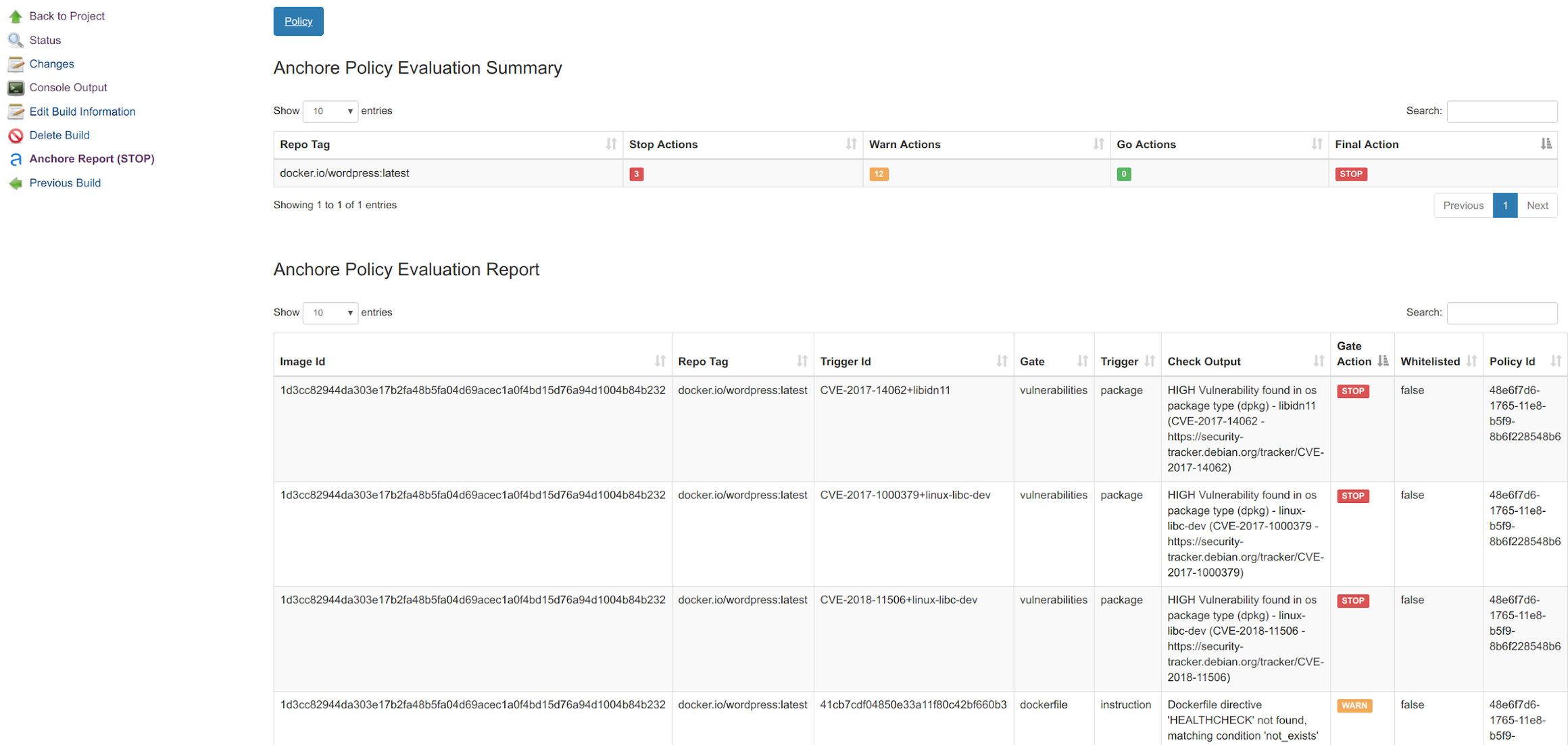

The following is an example of a build stopped by Anchore Engine, with every vulnerability explained and linked to its full description and mitigation procedures:

The results of evaluations like this one are added to your Anchore Engine catalog. You can then use this catalog of approved and rejected Docker images to filter which pods will be accepted by the Kubernetes API, as you will see in the next section, or as an input to Sysdig Falco runtime rules.

Integrating Anchore Engine and Kubernetes for Image Validation

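Kubernetes admission webhooks allow a backend service to make admission decisions, such as whether or not a Pod should be allowed in your cluster. Kubernetes also offers the ImagePolicyWebhook admission controller for image checks; the integration below uses a validating admission webhook (a ValidatingWebhookConfiguration).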

Anchore Engine provides a webhook service specifically designed to enable this feature.
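To make the mechanism concrete, here is a minimal sketch of the decision a validating webhook returns. The API server sends an `AdmissionReview` object whose `request` contains the Pod, and the webhook answers with a `response` carrying the same `uid` and an `allowed` flag. This is not Anchore's implementation: the `rejected_images` set is a hypothetical stand-in for querying Anchore Engine's policy evaluation, and a real webhook must also serve the answer over HTTPS.

```python
from typing import Dict, Set


def review_pod(admission_review: Dict, rejected_images: Set[str]) -> Dict:
    """Answer an AdmissionReview for a Pod, denying it if any image is rejected."""
    request = admission_review["request"]
    spec = request["object"].get("spec", {})
    containers = spec.get("initContainers", []) + spec.get("containers", [])
    denied = [c["image"] for c in containers if c["image"] in rejected_images]
    response = {"uid": request["uid"], "allowed": not denied}
    if denied:
        response["status"] = {"message": "Image failed policy check: " + ", ".join(denied)}
    return {
        # Reply with the same API version the API server sent.
        "apiVersion": admission_review["apiVersion"],
        "kind": "AdmissionReview",
        "response": response,
    }


review = {
    "apiVersion": "admission.k8s.io/v1beta1",
    "kind": "AdmissionReview",
    "request": {
        "uid": "example-uid",
        "object": {"spec": {"containers": [{"name": "web", "image": "wordpress:latest"}]}},
    },
}
print(review_pod(review, {"wordpress:latest"})["response"]["allowed"])  # False
```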

You can read the complete story here, but for the impatient there is also a quick start script.

Assuming that kubectl and helm are available and configured already, run:

```shell
git clone https://github.com/viglesiasce/kubernetes-anchore-image-validator.git
cd kubernetes-anchore-image-validator/
./hack/install.sh
```

After running the install.sh script, you will need to enable the webhook Kubernetes configuration. How-to instructions will be printed in the Helm output:

File: Docker_scan_ValidatingWebhookConfiguration.yaml

```shell
Anchore engine policy validator is now installed.

Create a validating webhook resources to start enforcement:

KUBE_CA=$(kubectl config view --minify=true --flatten -o json | jq '.clusters[0].cluster."certificate-authority-data"' -r)
cat > validating-webook.yaml <<EOF
apiVersion: admissionregistration.k8s.io/v1beta1
kind: ValidatingWebhookConfiguration
metadata:
  name: analysis-anchore-policy-validator.admission.anchore.io
webhooks:
- name: analysis-anchore-policy-validator.admission.anchore.io
  clientConfig:
    service:
      namespace: default
      name: kubernetes
      path: /apis/admission.anchore.io/v1beta1/imagechecks
    caBundle: $KUBE_CA
  rules:
  - operations:
    - CREATE
    apiGroups:
    - ""
    apiVersions:
    - "*"
    resources:
    - pods
  failurePolicy: Fail
EOF
kubectl apply -f validating-webook.yaml
```

A few minutes after enablement, all the pods will be running in the anchore namespace:

```shell
kubectl get pods -n anchore
NAME                                                 READY   STATUS    RESTARTS   AGE
analysis-anchore-engine-core-97dc7ccdb-w9bhb         1/1     Running   0          3h
analysis-anchore-engine-worker-7b5c95b57c-d9dmg      1/1     Running   0          3h
analysis-anchore-policy-validator-54d598ddb7-6p6ts   1/1     Running   0          3h
analysis-postgresql-c7df6d66f-xhnrz                  1/1     Running   0          3h
```

After following the instructions to enable the integration, you will be able to see the new webhook configured:

```shell
kubectl get validatingwebhookconfiguration
NAME                                                     AGE
analysis-anchore-policy-validator.admission.anchore.io   20h
```

Anchore will now analyze the images of every Pod scheduled in Kubernetes, and containers evaluated as non-secure will never reach the running phase, like this:

```text
Events:
  Type     Reason        Age                 From                  Message
  ----     ------        ----                ----                  -------
  Warning  FailedCreate  19s (x13 over 41s)  daemonset-controller  Error creating: admission webhook "analysis-anchore-policy-validator.admission.anchore.io" denied the request: Image failed policy check: wordpress:latest
```

Block Forbidden Docker Images or Images not Scanned

On one side, before deployment, we have image scanning. On the other side, after deployment, we have runtime security. Is there any possible link between the two? Actually, yes: events on each side can be useful to the other.

For example, let’s say you decide to integrate Anchore Engine Docker scanning with Falco runtime security. What if you create a Sysdig Falco rule that triggers an alert whenever any of the images marked as non-secure by Anchore Engine is spawned on your nodes? This can be useful in many cases: for example, if you are not running the Kubernetes webhook, if you are running without Kubernetes or the webhook fails, or if a vulnerability is discovered after the container is already running.

If you already know the user, password and URL to contact the Anchore Engine API, you can use the Sysdig Falco / Anchore integration directly:

```shell
docker run --rm -e ANCHORE_CLI_USER=$ANCHORE_USER \
  -e ANCHORE_CLI_PASS=$ANCHORE_CLI_PASS \
  -e ANCHORE_CLI_URL=http://$HOST:$PORT/v1 \
  sysdig/anchore-falco
```

This will print a rule that you can append directly to your Falco configuration:

```yaml
- macro: anchore_stop_policy_evaluation_containers
  condition: container.image.id in ("52057de6c8d0d0143dfc71fde55e58edaf3ccc5c2212221a614f45283c5ab063", "65bf726222e13b0ceff0bb20bb6f0e99cbf403a7a1f611fdd2aadd0c8919bbcf", "a8a59477268d92f434d86a73b5ea6de9bf7b05d536359413e79da1feb31f87aa", "a5b7afcfdcc878fae7696ea80eecc31114b9c712a1e896cba87a999d255e4a7b", "8753edeb1aa342081179474cfe5449f1b7d101d92d2799a7840da744b9bbb3ca", "857bc7ff918f67a8e74abd01dc02308040f7f7f9b99956a815c5a9cb6393f11f", "8626492fecd368469e92258dfcafe055f636cb9cbc321a5865a98a0a6c99b8dd", "e86d9bb526efa0b0401189d8df6e3856d0320a3d20045c87b4e49c8a8bdb22c1")

- rule: Run Anchore Containers with Stop Policy Evaluation
  desc: Detect containers which does not receive a positive Policy Evaluation from Anchore Engine.
  condition: evt.type=execve and proc.vpid=1 and container and anchore_stop_policy_evaluation_containers
  output: A stop policy evaluation container from anchore has started (%container.info image=%container.image)
  priority: INFO
  tags: [container]
```

You will need to run the integration periodically to re-evaluate the images and update this Falco rule, so that it always lists the Docker images you want to be alerted about if they run in your cluster.
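The regeneration step essentially renders the list of image IDs that received a STOP evaluation into the macro shown above. A minimal sketch of that rendering, assuming you already have the IDs (for example from the Anchore API), could look like this; it is not the code of the sysdig/anchore-falco image. Sorting and de-duplicating the IDs keeps the generated rule stable between runs, so only real changes show up when you diff it.

```python
from typing import Iterable

MACRO_NAME = "anchore_stop_policy_evaluation_containers"


def render_falco_macro(image_ids: Iterable[str]) -> str:
    """Render a Falco macro matching containers started from the given image IDs."""
    ids = sorted(set(image_ids))
    if not ids:
        # We do not assume Falco accepts an empty "in ()" list.
        raise ValueError("no image IDs to render")
    quoted = ", ".join(f'"{image_id}"' for image_id in ids)
    return (
        f"- macro: {MACRO_NAME}\n"
        f"  condition: container.image.id in ({quoted})\n"
    )


# Placeholder IDs for illustration only.
print(render_falco_macro(["example-id-2", "example-id-1", "example-id-2"]))
```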

Conclusions

Here are some key takeaways on image scanning and runtime security:

- While runtime security takes place after the deployment, image scanning happens in your CI/CD pipeline, either before publishing the images or once they are in your registry.

- Although runtime security and image scanning happen at different points in the container lifecycle, there are very interesting links to explore between them, including information about what’s inside the containers and their function.

- There are various open source tools available that provide the functionality you need to analyze, inspect, and support image scanning. Anchore Engine’s flexible user-defined policies, API and performance make it our recommended choice.

Anchore and all the open source projects we mentioned in the first part (Falco, NATS, Kubeless, Helm and, of course, Kubernetes), together with some Python and Golang code, make up what we consider a great open source container security reference stack.

If you want to contribute to this project, there are many ways to do so, including:

- Creating additional documentation on the open source Anchore Engine.

- Extending the library of default Falco rules.

- Contributing more security playbooks such as Kubeless FaaS or any other NATS observers.

- Integrating more tools (PRs are always great!)

Join the discussion on Sysdig’s open source Slack community in #open-source-sysdig, or reach out to us on Twitter at @sysdig and @anchore!