
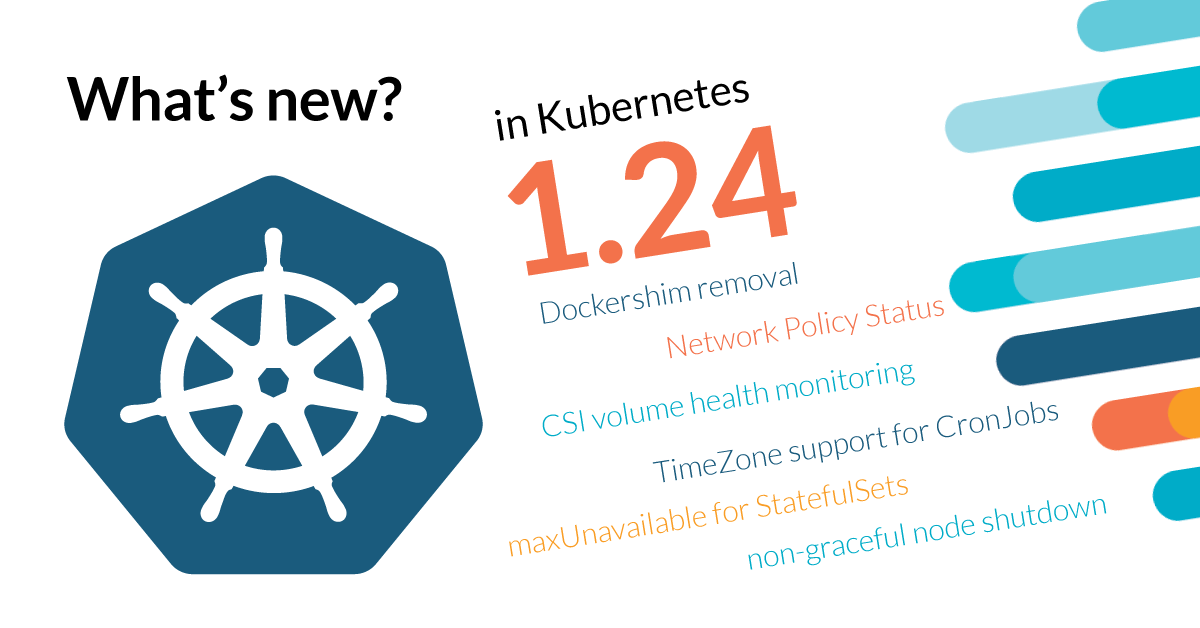

Kubernetes 1.24 is about to be released, and it comes packed with novelties! Where do we begin?

Update: Kubernetes 1.24 release date has been moved to May 3rd (from April 19th).

This release brings 46 enhancements, on par with the 45 in Kubernetes 1.23 and the 56 in Kubernetes 1.22. Of those 46 enhancements, 13 are graduating to Stable, 14 are existing features that keep improving, 13 are completely new, and six are deprecated features.

The elephant in the room in this release is the removal of Dockershim, a necessary step to ensure the future of the Kubernetes project. Watch out for all the deprecations and removals in this version!

The new features included in this version are generally small but have the potential to change how we interact with Kubernetes, like the new Network Policy Status field, the new CSI volume health monitoring, or TimeZone support for CronJobs. Also, other features will make life easier for cluster administrators, like maxUnavailable for StatefulSets or the non-graceful node shutdown.

Finally, the icing on the cake of this release is all the great features that are reaching the GA state. This includes features with a long history, like CSI volume expansion, PodOverhead, storage capacity tracking, and Service Type=LoadBalancer Class, to name a few. We are really hyped about this release!

There is plenty to talk about, so let's get started with what's new in Kubernetes 1.24.

Kubernetes 1.24 – Editor's pick:

These are the features that look most exciting to us in this release (ymmv):

#2221 Dockershim removal

As projects grow, they need to let go of their legacy features to ensure their future. Dockershim was the built-in component that let the kubelet use Docker Engine as its container runtime, but it has been superseded by the better, pluggable Container Runtime Interface (CRI). Now that Dockershim has been removed, many developers and cluster administrators will have to go through an inconvenient, but necessary, migration.

Víctor Jiménez Cerrada – Content Manager Engineer at Sysdig

#1432 CSI volume health monitoring

Being able to load a sidecar that checks for the health of persistent volumes is a welcome addition. Now, cluster administrators will be able to react better and faster to events like a persistent volume being deleted outside of Kubernetes. This will absolutely increase the reliability of Kubernetes clusters.

Vicente J. Jiménez Miras – Security Content Engineer at Sysdig

#2943 Network Policy Status

Yes, please bring more user-friendly features like this one. It is a container security best practice to create network policies that follow a zero-trust approach. However, sometimes you get a difficult-to-debug error and end up opening your policy more than you want. Being able to debug these situations will make Kubernetes clusters more secure.

Miguel Hernández – Security Content Engineer at Sysdig

#2578 Windows operational readiness definition and tooling convergence

Since it was introduced in Kubernetes 1.14, Windows support keeps growing release after release, opening Kubernetes to a wide range of companies that wouldn't have considered it otherwise.

With this standard that Kubernetes 1.24 defines, it will be easier to compare Windows support between Kubernetes vendors. Hopefully this will make adopting Kubernetes in these environments a bit less uncertain.

Daniel Simionato – Security Content Engineer at Sysdig

#108004 kubelet: expose OOM metrics

This is one tiny improvement that doesn't qualify as an enhancement, but it will have significant repercussions. Starting with 1.24, the kubelet offers a new Prometheus metric that counts the OutOfMemory (OOM) events that have occurred in a container. This offers more visibility into a recurring problem in Kubernetes operations: memory limits that don't match the container's actual usage and needs.

With this new metric, SREs can better understand the root cause of the problem and determine whether it's a recurring issue or an edge case. Faster troubleshooting, happier users.

David de Torres – Manager of Engineering at Sysdig

Deprecations

API and feature removals

Several APIs and features have been deprecated or removed in Kubernetes 1.24, including:

- Removed Dockershim.

- Removed the experimental dynamic log sanitization feature.

- Removed the cluster addon for dashboard.

- Removed the insecure serving configuration from the cloud-provider package, which is consumed by cloud-controller-managers.

- Deprecated vSphere releases older than 7.0u2.

- Deprecated the in-tree azure plugin.

- Deprecated the metadata.clusterName field.

- Removed client.authentication.k8s.io/v1alpha1 ExecCredential.

- The node.k8s.io/v1alpha1 RuntimeClass API is no longer served, use v1.

- Removed the tolerate-unready-endpoints annotation in Service, use Service.spec.publishNotReadyAddresses instead.

- Deprecated Service.Spec.LoadBalancerIP.

- Deprecated the CSIStorageCapacity API version v1beta1, use v1.

- Removed VolumeSnapshot v1beta1, use v1.

- Feature gates:
  - Removed ValidateProxyRedirects.
  - Removed StreamingProxyRedirects.

- Kube-apiserver:
  - Deprecated the --master-count flag and the --endpoint-reconciler-type=master-count reconciler, in favor of the lease reconciler.
  - Removed the address flags --address, --insecure-bind-address, --port, and --insecure-port.

- Kubeadm:
  - The UnversionedKubeletConfigMap feature gate is enabled by default.
  - kubeadm.k8s.io/v1beta2 has been deprecated, use v1beta3 for new clusters.
  - Removed the node-role.kubernetes.io/master label.

- Kube-controller-manager:
  - Removed the flags --address and --port.

- Kubelet:
  - Deprecated the flag --pod-infra-container-image.
  - Removed DynamicKubeletConfig.

You can check the full list of changes in the Kubernetes 1.24 release notes. Also, we recommend the Kubernetes Removals and Deprecations In 1.24 article, as well as keeping the deprecated API migration guide close for the future.

#2221 Dockershim removal

Stage: Major Change to Stable

Feature group: node

Dockershim is a component built into the kubelet code base that lets it use Docker Engine as a container runtime. However, with the introduction of the Container Runtime Interface (CRI), it is possible to decouple container runtimes from the kubelet code base.

Moreover, this decoupling is a good practice that simplifies maintenance for both developers and cluster maintainers.

Alternatives exist, so this does not imply losing functionality in Kubernetes. You may find these resources helpful:

- Migrating from dockershim

- Kubernetes is Moving on From Dockershim

- Dockershim removal FAQ

- Is Your Cluster Ready for v1.24?

#281 DynamicKubeletConfig

Stage: Removed

Feature group: node

DynamicKubeletConfig had been in beta since Kubernetes 1.11, but the Kubernetes team decided to deprecate and remove it instead of continuing its development.

#3136 Beta APIs are off by default

Stage: Graduating to Stable

Feature group: architecture

Until now, beta APIs, which are not considered stable, were enabled by default. This had a good side, as it accelerated the adoption of these features. However, it also opened the door to several issues. For example, if a beta API has a bug, it will be present on 90% of the deployed clusters.

Starting with Kubernetes 1.24, new beta APIs will be disabled by default.

This change doesn't affect feature gates.

You may want to check the KEP for further rationale and implementation details.

#1164 Deprecate and remove SelfLink

Stage: Graduating to Stable

Feature group: api-machinery

Feature gate: RemoveSelfLink Default value: true

When this feature is enabled, the .metadata.selfLink field remains part of the Kubernetes API but is always unset.

Read more in our "Kubernetes 1.20 – What's new?" article.

#1753 Kubernetes system components logs sanitization

Stage: Removed

Feature group: instrumentation

Log sanitization was introduced as an alpha feature in Kubernetes 1.20. After evaluating it for some versions, the instrumentation SIG decided that the static analysis that graduated to Stable in Kubernetes 1.23 would be a better approach.

#2845 Deprecate klog specific flags in Kubernetes components

Stage: Graduating to Beta

Feature group: instrumentation

This enhancement is part of a bigger effort to simplify logging in Kubernetes components. The first step is deprecating some klog-specific flags.

Read more in our "Kubernetes 1.23 – What's new?" article.

Kubernetes 1.24 API

#1904 Efficient watch resumption

Stage: Graduating to Stable

Feature group: api-machinery

Feature gate: EfficientWatchResumption Default value: true

From now on, kube-apiserver can initialize its watch cache faster after a reboot.

Read more in our "Kubernetes 1.20 – What's new?" article.

#2885 Beta Graduation Criteria for Field Validation

Stage: Graduating to Beta

Feature group: api-machinery

Feature gate: ServerSideFieldValidation Default value: true

Currently, you can use kubectl --validate=true to make a request fail if it specifies unknown fields on an object. This enhancement summarizes the work to implement that validation on the server side, in kube-apiserver.

Read more in our "Kubernetes 1.23 – What's new?" article.

#2887 openapi enum types to beta

Stage: Graduating to Beta

Feature group: api-machinery

Feature gate: OpenAPIEnum Default value: true

This enhancement improves the Kubernetes OpenAPI generator so it can recognize types marked with +enum and auto-detect the possible values of an enum.

Read more in our "Kubernetes 1.23 – What's new?" article.

#2896 OpenAPI v3

Stage: Graduating to Beta

Feature group: api-machinery

Feature gate: OpenAPIV3 Default value: true

This feature adds support to kube-apiserver to serve Kubernetes types as OpenAPI v3 objects. A new /openapi/v3/apis/{group}/{version} endpoint is available. It serves one schema per group version instead of aggregating everything into a single one.

Read more in our "Kubernetes 1.23 – What's new?" article.

Apps in Kubernetes 1.24

#961 maxUnavailable for StatefulSets

Stage: Alpha

Feature group: apps

Feature gate: MaxUnavailableStatefulSet Default value: false

StatefulSets are meant for stateful applications, for example those that need stable persistent storage, unique network identifiers, or ordered rolling updates, to name a few use cases.

In this last case, when performing a rolling update, the Pods will be deleted and recreated one at a time. That minimizes the risk of losing instances of the application, and maximizes the availability and chances of rolling back if something goes wrong.

The new spec.updateStrategy.rollingUpdate.maxUnavailable field will, from now on, control how many of the application Pods are deleted and recreated at a time, which might speed up the rolling update of an application.

To avoid surprises, remember to check the value of this new parameter before doing a rolling update of a stateful application. Keep in mind that a very high value might turn our rolling update into a recreate one, making us lose the service completely (temporarily, in the best case).
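To make the trade-off concrete, here is a simplified Go model of how Pods would be grouped into update steps. It assumes Pods are replaced from the highest ordinal down, as StatefulSet rolling updates do, and that each step takes down at most maxUnavailable Pods. rollingBatches is a hypothetical helper; the real controller also waits for replaced Pods to become Ready before continuing.

```go
package main

import "fmt"

// rollingBatches is a hypothetical, simplified model of a StatefulSet rolling
// update: Pods are replaced from the highest ordinal down, and at most
// maxUnavailable of them are deleted and recreated in each step.
func rollingBatches(replicas, maxUnavailable int) [][]int {
	if maxUnavailable < 1 {
		maxUnavailable = 1 // behave like the classic one-Pod-at-a-time update
	}
	var batches [][]int
	var current []int
	for ordinal := replicas - 1; ordinal >= 0; ordinal-- {
		current = append(current, ordinal)
		if len(current) == maxUnavailable {
			batches = append(batches, current)
			current = nil
		}
	}
	if len(current) > 0 {
		batches = append(batches, current)
	}
	return batches
}

func main() {
	fmt.Println(rollingBatches(5, 1)) // [[4] [3] [2] [1] [0]]
	fmt.Println(rollingBatches(5, 2)) // [[4 3] [2 1] [0]]
	fmt.Println(rollingBatches(5, 5)) // [[4 3 2 1 0]]: every Pod down at once
}
```

With maxUnavailable equal to the number of replicas, the single batch is exactly the "recreate" situation to watch out for.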

#3140 TimeZone support in CronJob

Stage: Alpha

Feature group: apps

Feature gate: CronJobTimeZone Default value: false

This feature honors the long-awaited request to support time zones in CronJob resources. Until now, the Jobs created by CronJobs were scheduled in a single time zone: the one of the kube-controller-manager process.

Converting the time at which your Jobs need to start into the controller's time zone is a thing of the past. Finding the right time zone might be your new challenge.
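As an illustration of the arithmetic this feature saves you from, the Go sketch below converts a daily 09:00 schedule in an IANA time zone (the kind of value the alpha spec.timeZone field takes, such as Europe/Madrid) into UTC on a winter date and a summer date. runTimeUTC is a hypothetical helper built on the standard library, not controller code.

```go
package main

import (
	"fmt"
	"time"
	_ "time/tzdata" // embeds the IANA database so LoadLocation works without system files
)

// runTimeUTC returns the UTC instant at which a job scheduled for hour:minute
// local time in the given IANA zone starts on a given date.
func runTimeUTC(zone string, year int, month time.Month, day, hour, minute int) (time.Time, error) {
	loc, err := time.LoadLocation(zone)
	if err != nil {
		return time.Time{}, err
	}
	return time.Date(year, month, day, hour, minute, 0, 0, loc).UTC(), nil
}

func main() {
	// A "0 9 * * *" schedule meant for Madrid (UTC+1 in winter, UTC+2 in summer).
	winter, _ := runTimeUTC("Europe/Madrid", 2022, time.January, 10, 9, 0)
	summer, _ := runTimeUTC("Europe/Madrid", 2022, time.May, 3, 9, 0)
	fmt.Println(winter.Format("15:04 MST")) // 08:00 UTC
	fmt.Println(summer.Format("15:04 MST")) // 07:00 UTC
}
```

A controller running in UTC would need two different cron expressions across the year to keep a 09:00 Madrid start, which is precisely what a per-CronJob time zone avoids.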

#2214 Indexed Job semantics in Job API

Stage: Graduating to Stable

Feature group: apps

Feature gate: IndexedJob Default value: true

Indexed Jobs were introduced in Kubernetes 1.21 to make it easier to schedule highly parallelizable Jobs.

This enhancement adds completion indexes into the Pods of a Job with fixed completion count, to support running embarrassingly parallel programs.

Read more in our "Kubernetes 1.22 – What's new?" article.

#2232 batch/v1: add suspend field to Jobs API

Stage: Graduating to Stable

Feature group: apps

Feature gate: SuspendJob Default value: true

Since Kubernetes 1.21, Jobs can be temporarily suspended by setting the .spec.suspend field of the job to true, and resumed later by setting it back to false.

Read more in our "Kubernetes 1.21 – What's new?" article.
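Suspending a Job boils down to flipping that single field, for example with a JSON merge patch. The Go sketch below builds such a patch body with the standard library; jobPatch and jobSpecPatch are hand-written stand-ins for illustration, not the k8s.io/api types.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// jobSpecPatch and jobPatch are hypothetical stand-ins that model only the
// field being changed.
type jobSpecPatch struct {
	Suspend bool `json:"suspend"`
}

type jobPatch struct {
	Spec jobSpecPatch `json:"spec"`
}

// suspendPatch returns a JSON merge patch that suspends (true) or resumes (false) a Job.
func suspendPatch(suspend bool) (string, error) {
	b, err := json.Marshal(jobPatch{Spec: jobSpecPatch{Suspend: suspend}})
	return string(b), err
}

func main() {
	p, _ := suspendPatch(true)
	fmt.Println(p) // {"spec":{"suspend":true}}
}
```

The same body can be passed to kubectl, e.g. kubectl patch job my-job --type=merge -p '{"spec":{"suspend":true}}', where my-job is a placeholder name.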

#2879 Track Ready Pods in Job status

Stage: Graduating to Beta

Feature group: apps

Feature gate: JobReadyPods Default value: true

This enhancement adds the Job.status.ready field that counts the number of Job Pods that have a Ready condition, similar to the existing active, succeeded, and failed fields. This field can reduce the need to listen to Pod updates in order to get a more correct picture of the current status.

Read more in our "Kubernetes 1.23 – What's new?" article.

Kubernetes 1.24 Auth

#2784 CSR Duration

Stage: Graduating to Stable

Feature group: auth

Feature gate: CSRDuration Default value: true

A new ExpirationSeconds field has been added to CertificateSigningRequestSpec, which accepts a minimum value of 600 seconds (10 minutes). This way, clients can define a shorter duration for certificates than the default of one year.
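A client could validate the requested lifetime before submitting the CSR. The Go sketch below applies the 600-second minimum from the text (and the int32 range of the field) and emits only the expirationSeconds part of the spec. csrSpecFragment and expirationFragment are hypothetical names, and a real CertificateSigningRequest also needs request, signerName, and usages.

```go
package main

import (
	"encoding/json"
	"fmt"
	"math"
)

// minExpirationSeconds is the minimum value accepted for spec.expirationSeconds.
const minExpirationSeconds = 600

// csrSpecFragment is a hypothetical, partial model of CertificateSigningRequestSpec.
type csrSpecFragment struct {
	ExpirationSeconds *int32 `json:"expirationSeconds,omitempty"`
}

// expirationFragment validates a requested certificate lifetime (in seconds)
// and returns the matching JSON fragment.
func expirationFragment(seconds int64) (string, error) {
	if seconds < minExpirationSeconds || seconds > math.MaxInt32 {
		return "", fmt.Errorf("expirationSeconds must be between %d and %d, got %d",
			minExpirationSeconds, math.MaxInt32, seconds)
	}
	s := int32(seconds)
	b, err := json.Marshal(csrSpecFragment{ExpirationSeconds: &s})
	return string(b), err
}

func main() {
	day, err := expirationFragment(24 * 60 * 60)
	fmt.Println(day, err) // {"expirationSeconds":86400} <nil>
	_, err = expirationFragment(5 * 60) // five minutes: below the minimum
	fmt.Println(err)
}
```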

Read more in our "Kubernetes 1.22 – What's new?" article.

#2799 Reduction of Secret-based Service Account Tokens

Stage: Graduating to Beta

Feature group: auth

Feature gate: LegacyServiceAccountTokenNoAutoGeneration Default value: true

Now that the TokenRequest API has been stable since Kubernetes 1.22, it is time to do some cleaning and promote the use of this API over the old tokens.

Up until now, Kubernetes automatically created a token Secret when creating a ServiceAccount. That Secret contained the credentials for accessing the API.

Now, API credentials are obtained directly through the TokenRequest API and are mounted into Pods using a projected volume. These tokens are also automatically invalidated when their associated Pod is deleted. You can still create token Secrets manually if you need them.

The features to track and clean up existing tokens will be added in future versions of Kubernetes.

Network in Kubernetes 1.24

#2943 Network Policy Status

Stage: Alpha

Feature group: network

Feature gate: NetworkPolicyStatus Default value: false

Network Policy resources are implemented differently by different CNI providers, which might also implement features in a different order. This can lead to a Network Policy not being honored by the current CNI provider and, worst of all, without the user being notified about it.

The new Status subresource will allow users to receive feedback on whether a NetworkPolicy and its features have been properly parsed, and help them understand why a particular feature is not working.

This will be useful at troubleshooting issues like:

- Port ranges for an Egress Network Policy that might not be implemented by the provider yet.

- Users creating Network Policies with newly announced features that don't seem to work.

- Network Policies developed in staging environments that seem to work differently in production environments, which might be running a slightly older release.

All in all, it's one step forward that will make the troubleshooting of a Kubernetes network issue less painful.

If you want to learn more about this new feature, check out the KEP.

#3070 Reserve Service IP Ranges For Dynamic and Static IP Allocation

Stage: Alpha

Feature group: network

Feature gate: ServiceIPStaticSubrange Default value: false

Kubernetes Services are an abstract way to expose an application running on a set of Pods. Services have a virtual ClusterIP that allows load balancing traffic across the different Pods. This ClusterIP can be assigned:

- Dynamically, the cluster will pick one within the configured Service IP range.

- Statically, the user will set one IP within the configured Service IP range.

Setting a static IP address to a Service might be quite useful but also quite risky. Currently, there isn't a way of knowing in advance whether an IP address has been dynamically assigned to an existing service.

This feature will lower the risk of IP conflicts between Services using static and dynamic IP allocation while remaining backward compatible.

Its implementation splits the Service network, defined by the --service-cluster-ip-range flag that is currently used to allocate IP addresses to Services, into two bands.

The lower band will preferably be used for static IP addresses, while the upper one should be dedicated to dynamically allocated IPs. However, when the upper band is exhausted, dynamic allocation will continue using the lower band.

The formula to calculate these values can be found in the KEP, but we'll summarize it by saying that the static band will contain at least 16 IPs but never more than 256.
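The band arithmetic is easy to sketch. The Go snippet below assumes the KEP formula min(max(16, rangeSize/16), 256), where rangeSize is the number of addresses in --service-cluster-ip-range, and models the allocation preference described above. staticBandSize and pickDynamic are hypothetical names, and pickDynamic takes the first free offset for simplicity, whereas the real allocator need not pick addresses in order.

```go
package main

import (
	"fmt"
	"net/netip"
)

// staticBandSize returns how many addresses at the start of the Service range
// are preferably kept for static ClusterIPs, assuming the formula
// min(max(16, rangeSize/16), 256).
func staticBandSize(serviceCIDR string) (int, error) {
	prefix, err := netip.ParsePrefix(serviceCIDR)
	if err != nil {
		return 0, err
	}
	hostBits := prefix.Addr().BitLen() - prefix.Bits()
	if hostBits > 20 {
		return 256, nil // ranges this large always hit the cap; also avoids overflow for IPv6
	}
	band := (1 << hostBits) / 16
	if band < 16 {
		band = 16
	}
	if band > 256 {
		band = 256
	}
	return band, nil
}

// pickDynamic returns the offset of a free address for a dynamically allocated
// ClusterIP: upper band first, lower (static) band only when the upper one is
// full. used[i] reports whether offset i is taken; -1 means the range is exhausted.
func pickDynamic(used []bool, staticBand int) int {
	for i := staticBand; i < len(used); i++ {
		if !used[i] {
			return i
		}
	}
	for i := 0; i < staticBand && i < len(used); i++ {
		if !used[i] {
			return i
		}
	}
	return -1
}

func main() {
	for _, cidr := range []string{"10.96.0.0/24", "10.96.0.0/22", "10.96.0.0/12"} {
		n, _ := staticBandSize(cidr)
		fmt.Println(cidr, "static band:", n) // 16, 64, and 256 respectively
	}
	fmt.Println(pickDynamic(make([]bool, 32), 16)) // 16: first offset of the upper band
}
```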

#1435 Support of mixed protocols in Services with type=LoadBalancer

Stage: Graduating to Beta

Feature group: network

Feature gate: MixedProtocolLBService Default value: true

This enhancement allows a LoadBalancer Service to serve different protocols under the same port (UDP, TCP). For example, serving both UDP and TCP requests for a DNS or SIP server on the same port.
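In manifest terms, a single Service of type LoadBalancer can now list the same port twice with different protocols. The Go sketch below builds such a spec with hand-written structs (not the k8s.io/api types) and serializes it to JSON; the port names dns-udp and dns-tcp are hypothetical. Whether the resulting cloud load balancer supports mixed protocols still depends on the cloud provider.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// servicePort and serviceSpec are hypothetical, minimal models of the Service API.
type servicePort struct {
	Name     string `json:"name"`
	Protocol string `json:"protocol"`
	Port     int32  `json:"port"`
}

type serviceSpec struct {
	Type  string        `json:"type"`
	Ports []servicePort `json:"ports"`
}

// dnsLoadBalancerSpec exposes port 53 over both UDP and TCP from one LoadBalancer Service.
func dnsLoadBalancerSpec() serviceSpec {
	return serviceSpec{
		Type: "LoadBalancer",
		Ports: []servicePort{
			{Name: "dns-udp", Protocol: "UDP", Port: 53},
			{Name: "dns-tcp", Protocol: "TCP", Port: 53},
		},
	}
}

func main() {
	b, _ := json.Marshal(dnsLoadBalancerSpec())
	// {"type":"LoadBalancer","ports":[{"name":"dns-udp","protocol":"UDP","port":53},{"name":"dns-tcp","protocol":"TCP","port":53}]}
	fmt.Println(string(b))
}
```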

Read more in our "Kubernetes 1.20 – What's new?" article.

#2086 Service internal traffic policy

Stage: Graduating to Beta

Feature group: network

Feature gate: ServiceInternalTrafficPolicy Default value: true

You can now set the spec.internalTrafficPolicy field on Service objects to optimize your cluster traffic:

- With Cluster (the default), the routing will behave as usual, sending traffic to any endpoint of the Service.

- With Local, traffic originating inside the cluster will only be sent to endpoints on the same Kubernetes node. If the node has no local endpoints, the traffic is dropped.

Read more in our "Kubernetes 1.21 – What's new?" article.
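In practice, the policy decides which endpoints may receive traffic that originates inside the cluster. The Go sketch below models that filtering for the two values of spec.internalTrafficPolicy, Cluster and Local; the endpoint type and eligibleEndpoints are hypothetical simplifications of what kube-proxy does.

```go
package main

import "fmt"

// endpoint is a hypothetical, minimal model of a Service endpoint.
type endpoint struct {
	IP   string
	Node string
}

// eligibleEndpoints returns the endpoints that in-cluster traffic originating on
// clientNode may be routed to under the given internal traffic policy.
// With "Local", an empty result means the traffic is dropped.
func eligibleEndpoints(policy, clientNode string, eps []endpoint) []endpoint {
	if policy != "Local" {
		return eps // "Cluster" (the default): any endpoint in the cluster
	}
	var local []endpoint
	for _, ep := range eps {
		if ep.Node == clientNode {
			local = append(local, ep)
		}
	}
	return local
}

func main() {
	eps := []endpoint{{IP: "10.0.1.5", Node: "node-a"}, {IP: "10.0.2.7", Node: "node-b"}}
	fmt.Println(len(eligibleEndpoints("Cluster", "node-a", eps))) // 2
	fmt.Println(eligibleEndpoints("Local", "node-a", eps))        // [{10.0.1.5 node-a}]
	fmt.Println(len(eligibleEndpoints("Local", "node-c", eps)))   // 0
}
```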

Kubernetes 1.24 Nodes

#688 PodOverhead

Stage: Graduating to Stable

Feature group: node

Feature gate: PodOverhead Default value: true

In addition to the requested resources, your pods need extra resources to maintain their runtime environment.

When this feature is enabled, Kubernetes will take this overhead into account when scheduling a pod. The Pod Overhead is calculated and fixed at admission time, and it's associated with the pod's RuntimeClass. See the Pod Overhead documentation for the full details.

Read more in our "Kubernetes 1.16 – What's new?" article.

#2133 Kubelet Credential Provider

Stage: Graduating to Beta

Feature group: node

Feature gate: KubeletCredentialProviders Default value: true

This enhancement replaces in-tree container image registry credential providers with a new mechanism that is external and pluggable.

Read more in our "Kubernetes 1.20 – What's new?" article.

#2712 PriorityClassValueBasedGracefulShutdown

Stage: Graduating to Beta

Feature group: node

Feature gate: GracefulNodeShutdownBasedOnPodPriority Default value: true

From now on, you can give high-priority Pods more time to stop when a node is gracefully shut down. This enhancement makes it possible to give Pods different times to stop, depending on their priority class value.

Read more in our "Kubernetes 1.23 – What's new?" article.

In Kubernetes 1.24, the metrics graceful_shutdown_start_time_seconds and graceful_shutdown_end_time_seconds are available to monitor node shutdowns.
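The per-priority grace periods are set in the kubelet configuration through the shutdownGracePeriodByPodPriority list, where each entry pairs a priority value with shutdownGracePeriodSeconds. The Go sketch below picks the grace period for a given Pod, assuming a Pod falls into the entry with the highest priority value that does not exceed its own (or into the lowest entry if its priority is below all of them). gracePeriodFor and the sample values are illustrative, not kubelet code.

```go
package main

import (
	"fmt"
	"sort"
)

// priorityGrace mirrors one entry of the kubelet's shutdownGracePeriodByPodPriority
// setting (its priority and shutdownGracePeriodSeconds keys).
type priorityGrace struct {
	Priority                   int32
	ShutdownGracePeriodSeconds int64
}

// gracePeriodFor returns the shutdown grace period for a Pod with the given
// priority. Assumption: the Pod falls into the entry with the highest priority
// value that does not exceed its own, or into the lowest entry if it is below all.
func gracePeriodFor(podPriority int32, config []priorityGrace) int64 {
	if len(config) == 0 {
		return 0
	}
	sorted := append([]priorityGrace(nil), config...)
	sort.Slice(sorted, func(i, j int) bool { return sorted[i].Priority < sorted[j].Priority })
	chosen := sorted[0]
	for _, entry := range sorted {
		if entry.Priority <= podPriority {
			chosen = entry
		}
	}
	return chosen.ShutdownGracePeriodSeconds
}

func main() {
	// Illustrative values: the most critical Pods get the most time to stop.
	config := []priorityGrace{
		{Priority: 100000, ShutdownGracePeriodSeconds: 120},
		{Priority: 10000, ShutdownGracePeriodSeconds: 60},
		{Priority: 0, ShutdownGracePeriodSeconds: 30},
	}
	fmt.Println(gracePeriodFor(2000000000, config)) // 120
	fmt.Println(gracePeriodFor(50000, config))      // 60
	fmt.Println(gracePeriodFor(0, config))          // 30
}
```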

#2727 gRPC probes

Stage: Graduating to Beta

Feature group: node

Feature gate: GRPCContainerProbe Default value: true

This enhancement allows configuring gRPC liveness probes for Pods, so the kubelet can natively check the health of applications that speak gRPC (which runs over HTTP/2).

Liveness probes periodically check whether an application is still alive.

In Kubernetes 1.23, support for the gRPC protocol in probes was added as an alpha feature.

Scheduling in Kubernetes 1.24

#3022 minDomains in PodTopologySpread

Stage: Alpha

Feature group: scheduling

Feature gate: MinDomainsInPodTopologySpread Default value: false

When new Pods are allocated to nodes, the topologySpreadConstraints field of the Pod spec, via the maxSkew parameter, defines how uneven the distribution of Pods across topology domains can be. Depending on the number of available resources, it might not be possible to comply with the maxSkew requirement. Using whenUnsatisfiable: DoNotSchedule might then lead the scheduler to refuse to allocate those new Pods, for not having enough available resources.

On the other hand, to avoid wasting resources, the Cluster Autoscaler can scale domains up and down according to their utilization. However, when a domain is scaled down to 0, the number of domains included in the scheduler's calculations might not truthfully reflect the total number of available resources. In other words, a domain that has been scaled down to 0 becomes invisible to the scheduler.

The new minDomains field establishes a minimum number of domains that should count as available, even though they might not exist at the moment of scheduling a new Pod. Therefore, when necessary, the domain will be scaled up and new nodes within that domain will be automatically requested by the Cluster Autoscaler.

Don't hesitate to dig into the KEP of this feature for more details.
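To see why an empty domain matters, the Go sketch below checks a DoNotSchedule constraint. It assumes the KEP semantics in which, when fewer eligible domains exist than minDomains, the global minimum used to compute the skew is taken as 0. canPlace and the zone names are hypothetical.

```go
package main

import "fmt"

// canPlace reports whether adding one matching Pod to domain keeps the spread
// within maxSkew for a DoNotSchedule constraint. counts holds the number of
// matching Pods in each existing domain. If fewer domains than minDomains
// exist, the global minimum is taken as 0, standing in for domains that are
// currently scaled down to zero.
func canPlace(counts map[string]int, domain string, maxSkew, minDomains int) bool {
	globalMin := 0
	if len(counts) >= minDomains {
		first := true
		for _, c := range counts {
			if first || c < globalMin {
				globalMin = c
				first = false
			}
		}
	}
	return counts[domain]+1-globalMin <= maxSkew
}

func main() {
	counts := map[string]int{"zone-a": 2, "zone-b": 2}
	fmt.Println(canPlace(counts, "zone-a", 1, 1)) // true: skew would be 3-2 = 1
	fmt.Println(canPlace(counts, "zone-a", 1, 3)) // false: skew would be 3-0 = 3
}
```

With minDomains set to 3, the Pod stays pending instead of piling into the two existing zones, which is the signal the Cluster Autoscaler can react to by adding a node in a third zone.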

#902 Non-preempting Priority to GA

Stage: Graduating to Stable

Feature group: scheduling

Feature gate: NonPreemptingPriority Default value: true

Currently, PreemptionPolicy defaults to PreemptLowerPriority, which allows high-priority pods to preempt lower-priority pods.

This enhancement adds the PreemptionPolicy: Never option, so Pods can be placed in the scheduling queue ahead of lower-priority pods, but they cannot preempt other pods.
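A minimal Go model of the two policies: both high-priority Pods below sit ahead of the low-priority Pod in the queue, but only the one with the default policy may evict it. The pod type, queueOrder, and mayPreempt are hypothetical simplifications of the scheduler's logic.

```go
package main

import (
	"fmt"
	"sort"
)

// pod is a hypothetical, minimal model of a Pod for scheduling purposes.
type pod struct {
	Name             string
	Priority         int32
	PreemptionPolicy string // "PreemptLowerPriority" (the default) or "Never"
}

// queueOrder returns pending Pod names in the order a priority queue would pop
// them: higher priority first, ties kept in arrival order.
func queueOrder(pending []pod) []string {
	sorted := append([]pod(nil), pending...)
	sort.SliceStable(sorted, func(i, j int) bool { return sorted[i].Priority > sorted[j].Priority })
	names := make([]string, len(sorted))
	for i, p := range sorted {
		names[i] = p.Name
	}
	return names
}

// mayPreempt reports whether preemptor is allowed to evict victim to make room.
func mayPreempt(preemptor, victim pod) bool {
	return preemptor.PreemptionPolicy != "Never" && preemptor.Priority > victim.Priority
}

func main() {
	low := pod{Name: "low", Priority: 10, PreemptionPolicy: "PreemptLowerPriority"}
	batch := pod{Name: "batch", Priority: 1000, PreemptionPolicy: "Never"}
	web := pod{Name: "web", Priority: 1000, PreemptionPolicy: "PreemptLowerPriority"}
	fmt.Println(queueOrder([]pod{low, batch, web})) // [batch web low]
	fmt.Println(mayPreempt(batch, low))             // false
	fmt.Println(mayPreempt(web, low))               // true
}
```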

Read more in our "Kubernetes 1.15 – What's new?" article.

#2249 Pod affinity NamespaceSelector to GA

Stage: Graduating to Stable

Feature group: scheduling

Feature gate: PodAffinityNamespaceSelector Default value: true

By defining pod affinity in a deployment, you can constrain which nodes your pod will be scheduled on, based on the Pods already running there. For example, deploy on nodes that are already running Pods with a given value for an example-label label.

This enhancement adds a namespaceSelector field so you can specify the namespaces by their labels, rather than their names. With this field, you can dynamically define the set of namespaces.
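The namespace matching itself is plain label selection. The Go sketch below models a namespaceSelector that only uses matchLabels; selectNamespaces is a hypothetical helper, and it assumes, as with namespaceSelector: {}, that an empty selector matches every namespace.

```go
package main

import (
	"fmt"
	"sort"
)

// selectNamespaces returns the names of the namespaces whose labels contain every
// key/value pair in matchLabels. An empty matchLabels selects all namespaces.
func selectNamespaces(namespaces map[string]map[string]string, matchLabels map[string]string) []string {
	var selected []string
	for name, labels := range namespaces {
		matches := true
		for key, want := range matchLabels {
			if got, ok := labels[key]; !ok || got != want {
				matches = false
				break
			}
		}
		if matches {
			selected = append(selected, name)
		}
	}
	sort.Strings(selected) // map iteration order is random; sort for stable output
	return selected
}

func main() {
	namespaces := map[string]map[string]string{
		"team-a-dev":  {"team": "a"},
		"team-a-prod": {"team": "a"},
		"team-b":      {"team": "b"},
	}
	fmt.Println(selectNamespaces(namespaces, map[string]string{"team": "a"})) // [team-a-dev team-a-prod]
	fmt.Println(len(selectNamespaces(namespaces, map[string]string{})))       // 3
}
```

Because the selection is recomputed from labels, a new namespace labeled team=a would be included without editing the affinity rule.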

Read more in our "Kubernetes 1.21 – What's new?" article.

Kubernetes 1.24 Storage

#2268 Non-graceful node shutdown

Stage: Alpha

Feature group: storage

Feature gate: NonGracefulFailover Default value: false

When a node is shut down but the shutdown is not detected by the kubelet's Node Shutdown Manager, the pods that are part of a StatefulSet will be stuck in terminating status on the shut-down node and cannot move to a new running node.

This feature addresses node shutdown cases that are not detected properly. In this case, the pods will be forcefully deleted, triggering the deletion of the VolumeAttachments, and new pods will be created on a new running node so that the application can continue to function.

This can also be applied to the case when a node is in a non-recoverable state such as hardware failure or broken OS.

To make this work, the user has to apply the node.kubernetes.io/out-of-service taint after confirming that the node is shut down or in a non-recoverable state. Using the NoExecute effect with this taint, as in node.kubernetes.io/out-of-service=nodeshutdown:NoExecute or node.kubernetes.io/out-of-service=hardwarefailure:NoExecute, will evict the Pods so they can be started on other nodes.

To bring the node back, the user is required to manually remove the out-of-service taint after the pods are moved to a new node.
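To make the taint syntax concrete, the Go sketch below parses key=value:effect strings like the ones above and checks for the out-of-service taint with the NoExecute effect. parseTaint is a hypothetical, simplified parser, and the check assumes the full taint key node.kubernetes.io/out-of-service.

```go
package main

import (
	"fmt"
	"strings"
)

// taint is a hypothetical, minimal model of a node taint.
type taint struct {
	Key, Value, Effect string
}

// parseTaint parses "key=value:effect" (or "key:effect" with no value).
func parseTaint(s string) (taint, error) {
	keyValue, effect, ok := strings.Cut(s, ":")
	if !ok || effect == "" {
		return taint{}, fmt.Errorf("missing effect in %q", s)
	}
	key, value, _ := strings.Cut(keyValue, "=")
	if key == "" {
		return taint{}, fmt.Errorf("missing key in %q", s)
	}
	return taint{Key: key, Value: value, Effect: effect}, nil
}

// triggersNonGracefulFailover reports whether the taint is the out-of-service
// taint with the NoExecute effect.
func triggersNonGracefulFailover(t taint) bool {
	return t.Key == "node.kubernetes.io/out-of-service" && t.Effect == "NoExecute"
}

func main() {
	for _, s := range []string{
		"node.kubernetes.io/out-of-service=nodeshutdown:NoExecute",
		"node.kubernetes.io/out-of-service=nodeshutdown:NoSchedule",
	} {
		t, err := parseTaint(s)
		fmt.Println(s, triggersNonGracefulFailover(t), err)
	}
}
```

On a real cluster, the taint would typically be applied with kubectl taint nodes <node-name> node.kubernetes.io/out-of-service=nodeshutdown:NoExecute.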

#3141 Control volume mode conversion between source and target PVC

Stage: Alpha

Feature group: storage

Feature gate: N/A

Users can leverage the VolumeSnapshot feature, which reached GA in Kubernetes 1.20, to create a PersistentVolumeClaim (PVC) from a previously taken VolumeSnapshot. Unfortunately, there is no validation of the volume mode conversion between the source and the new PVC.

This feature prevents the unauthorized conversion of the volume mode during such an operation. Although there isn't a known CVE in the kernel that would allow a malicious user to exploit it, this feature aims to remove that possibility just in case.

To learn more about the detailed implementation, here is the KEP.

#1432 CSI volume health monitoring

Stage: Alpha

Feature group: storage

Feature gate: CSIVolumeHealth Default value: false

CSI drivers can now load an external controller as a sidecar that will check for volume health, and they can also provide extra information in the NodeGetVolumeStats function that Kubelet already uses to gather information on the volumes.

Read more in our "Kubernetes 1.21 – What's new?" article.

In Kubernetes 1.24, the Volume Health information is exposed as kubelet VolumeStats metrics. The kubelet_volume_stats_health_status_abnormal metric carries a persistentvolumeclaim label and has a value of 1 if the volume is unhealthy, 0 otherwise.
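Since this is a plain gauge, a monitoring query such as kubelet_volume_stats_health_status_abnormal == 1 is enough to alert on it. To show what the kubelet exposes, the Go sketch below scans Prometheus text-format output and returns the affected PVCs. abnormalPVCs is a hypothetical, deliberately naive parser (it assumes label values contain no commas), and the sample data is made up.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

const abnormalMetric = "kubelet_volume_stats_health_status_abnormal"

// abnormalPVCs scans Prometheus text-format output scraped from the kubelet and
// returns the PVCs whose abnormal-health sample is 1.
func abnormalPVCs(metrics string) []string {
	var unhealthy []string
	scanner := bufio.NewScanner(strings.NewReader(metrics))
	for scanner.Scan() {
		line := strings.TrimSpace(scanner.Text())
		if !strings.HasPrefix(line, abnormalMetric+"{") {
			continue // skips # HELP / # TYPE comments and other metrics
		}
		end := strings.LastIndex(line, "}")
		if end < 0 {
			continue
		}
		fields := strings.Fields(line[end+1:]) // sample value, optionally followed by a timestamp
		if len(fields) == 0 || fields[0] != "1" {
			continue
		}
		for _, pair := range strings.Split(line[len(abnormalMetric)+1:end], ",") {
			key, value, ok := strings.Cut(pair, "=")
			if ok && key == "persistentvolumeclaim" {
				unhealthy = append(unhealthy, strings.Trim(value, `"`))
			}
		}
	}
	return unhealthy
}

func main() {
	sample := `# TYPE kubelet_volume_stats_health_status_abnormal gauge
kubelet_volume_stats_health_status_abnormal{namespace="db",persistentvolumeclaim="data-db-0"} 1
kubelet_volume_stats_health_status_abnormal{namespace="db",persistentvolumeclaim="data-db-1"} 0`
	fmt.Println(abnormalPVCs(sample)) // [data-db-0]
}
```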

#284 CSI volume expansion

Stage: Graduating to Stable

Feature group: storage

Feature gate: ExpandPersistentVolumes Default value: true

Feature gate: ExpandInUsePersistentVolumes Default value: true

Feature gate: ExpandCSIVolumes Default value: true

This set of features has been in beta since Kubernetes 1.11, 1.15, and 1.16, respectively. With Kubernetes 1.24, the ability to resize a Persistent Volume is finally considered stable.

#1472 Storage Capacity Tracking

Stage: Graduating to Stable

Feature group: storage

Feature gate: CSIStorageCapacity Default value: true

This enhancement tries to prevent pods from being scheduled on nodes connected to CSI volumes without enough free space available.

Read more in our "Kubernetes 1.19 – What's new?" article.
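Conceptually, the scheduler compares the requested volume size with the capacity that the CSI driver reports per topology segment (published as CSIStorageCapacity objects). The Go sketch below models that filter with plain maps; nodesWithCapacity is hypothetical, and treating a segment with no reported capacity as unsuitable is an assumption of the sketch.

```go
package main

import (
	"fmt"
	"sort"
)

// gi is one gibibyte in bytes.
const gi = int64(1) << 30

// nodesWithCapacity returns the nodes whose topology segment (e.g. a zone)
// reports at least requestBytes of free capacity. capacityBySegment stands in
// for CSIStorageCapacity objects and nodeSegment for node topology labels.
func nodesWithCapacity(requestBytes int64, capacityBySegment map[string]int64, nodeSegment map[string]string) []string {
	var fit []string
	for node, segment := range nodeSegment {
		if free, ok := capacityBySegment[segment]; ok && free >= requestBytes {
			fit = append(fit, node)
		}
	}
	sort.Strings(fit) // map iteration order is random; sort for stable output
	return fit
}

func main() {
	capacity := map[string]int64{"zone-a": 50 * gi, "zone-b": 5 * gi}
	nodes := map[string]string{"node-1": "zone-a", "node-2": "zone-b", "node-3": "zone-c"}
	fmt.Println(nodesWithCapacity(10*gi, capacity, nodes)) // [node-1]
}
```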

#1489 OpenStack in-tree to CSI driver migration

Stage: Graduating to Stable

Feature group: storage

Feature gate: CSIMigrationOpenStack Default value: true

This enhancement summarizes the work to move OpenStack Cinder code out of the main Kubernetes binaries (out-of-tree).

#1490 Azure disk in-tree to CSI driver migration

Stage: Graduating to Stable

Feature group: storage

Feature gate: CSIMigrationAzureDisk Default value: true

This enhancement summarizes the work to move Azure Disk code out of the main Kubernetes binaries (out-of-tree).

Read more in our "Kubernetes 1.19 – What's new?" article.

#1885 Azure file in-tree to CSI driver migration

Stage: Graduating to Beta

Feature group: storage

Feature gate: CSIMigrationAzureFile Default value: true

This enhancement summarizes the work to move Azure File code out of the main Kubernetes binaries (out-of-tree).

Read more in our "Kubernetes 1.21 – What's new?" article.

#1495 Volume populator

Stage: Graduating to Beta

Feature group: storage

Feature gate: AnyVolumeDataSource Default value: true

Introduced in Kubernetes 1.18, this enhancement establishes the foundations that will allow users to create pre-populated volumes. For example, pre-populating a disk for a virtual machine with an OS image, or enabling data backup and restore.

To accomplish this, the current validations on the DataSource field of PersistentVolumeClaims will be lifted, allowing arbitrary objects to be set as values. Implementation details on how to populate volumes are delegated to purpose-built controllers.

#2644 Always honor reclaim policy

Stage: Graduating to Beta

Feature group: storage

Feature gate: HonorPVReclaimPolicy Default value: true

This enhancement fixes an issue where in some Bound Persistent Volume (PV) – Persistent Volume Claim (PVC) pairs, the ordering of PV-PVC deletion determines whether the PV delete reclaim policy is honored.

Read more in our "Kubernetes 1.23 – What's new?" article.

Other enhancements in Kubernetes 1.24

#2551 kubectl return code normalization

Stage: Alpha

Feature group: cli

Feature gate: N/A

More than a feature, this is an attempt to standardize the return codes of the different subcommands kubectl supports and will support. This intends to facilitate the automation of tasks and will provide a better understanding of the result of our commands.

#2590 Add subresource support in kubectl

Stage: Alpha

Feature group: cli

Feature gate: N/A

Some kubectl commands like get, patch, edit, and replace will now contain a new flag --subresource=[subresource-name], which will allow fetching and updating status and scale subresources for all API resources.

You can now stop using complex curl commands to update subresources directly.

#1959 Service Type=LoadBalancer Class

Stage: Graduating to Stable

Feature group: cloud-provider

Feature gate: ServiceLoadBalancerClass Default value: true

This enhancement allows you to leverage multiple Service Type=LoadBalancer implementations in a cluster.

Read more in our "Kubernetes 1.21 – What's new?" article.

#2436 leader migration to GA

Stage: Graduating to Stable

Feature group: cloud-provider

Feature gate: ControllerManagerLeaderMigration Default value: true

As we've been discussing for a while, there is an active effort to move code specific to cloud providers outside of the Kubernetes core code (from in-tree to out-of-tree).

This enhancement establishes a migration process for clusters with strict requirements on control plane availability.

Read more in our "Kubernetes 1.21 – What's new?" article.

#3077 Contextual logging

Stage: Alpha

Feature group: instrumentation

Feature gate: ContextualLogging Default value: false

This enhancement is another piece in the ongoing effort to remove dependencies on klog, the Kubernetes logging library, and its limitations. It builds on top of the Kubernetes 1.19 structured logging efforts.

Contextual logging includes two changes aimed at developers:

- Reversing dependencies, so libraries no longer use the global Logger, but an instance that is passed to them.

- Libraries can use the WithValues and WithName functions to add extra context information to the logger (and log output).

For example, a new logger can be created using WithName:

```go
logger := klog.FromContext(ctx).WithName("foo")
```

When this logger is passed around and used:

```go
logger.Info("Done")
```

It will include "foo" as a prefix.

One relevant use case is unit testing, where devs can provide each test case with its own logger to differentiate their outputs. Now, this can be done without changing the library code.

Also, this dependency inversion paves the way to remove the reliance on klog. The global logger could be replaced by any arbitrary implementation of logr.Logger.
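The dependency inversion itself does not depend on klog. The Go sketch below mimics it with the standard library's log.Logger as a stand-in for logr.Logger: the hypothetical reconcile function logs through the logger it receives, so each test case can pass its own prefixed logger and inspect the output. newTestLogger is also a hypothetical helper.

```go
package main

import (
	"bytes"
	"fmt"
	"log"
	"os"
)

// reconcile is a hypothetical library function: it logs through the logger it
// receives instead of reaching for a package-level global.
func reconcile(logger *log.Logger, item string) {
	logger.Printf("processing %s", item)
	logger.Print("Done")
}

// newTestLogger gives a test case its own buffer and name prefix, similar in
// spirit to calling WithName on a logr.Logger.
func newTestLogger(name string) (*log.Logger, *bytes.Buffer) {
	buf := &bytes.Buffer{}
	return log.New(buf, name+": ", 0), buf
}

func main() {
	reconcile(log.New(os.Stdout, "foo: ", 0), "pod-1") // prints "foo: processing pod-1" then "foo: Done"
	logger, buf := newTestLogger("case-1")
	reconcile(logger, "pod-2")
	fmt.Print(buf.String()) // "case-1: processing pod-2" then "case-1: Done"
}
```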

Find out more interesting use cases in the KEP, and take a look at the upcoming Blog article.

#3031 Signing release artifacts

Stage: Alpha

Feature group: release

Feature gate: N/A

In order to help avoid supply chain attacks, end users should be able to verify the integrity of downloaded resources, like the Kubernetes binaries.

This enhancement introduces a unified way to sign artifacts. It relies on the sigstore project tools, and more specifically cosign. Although it doesn't add new functionality, it will surely help to keep our cluster more protected.

In addition, it is recommended to also implement other measures to avoid risks, like unauthorized access to the signing key or its infrastructure. For example, auditing the key usage publicly using keyless signing.
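The core check behind artifact signing is verifying a signature over the artifact's digest with the signer's public key. The Go sketch below shows only that idea with crypto/ecdsa and SHA-256; it is not cosign, does not involve sigstore or keyless signing, and newKey, signArtifact, and verifyArtifact are hypothetical helpers. In practice, you would verify Kubernetes artifacts with cosign (for example, its verify-blob command) against the published signatures.

```go
package main

import (
	"crypto/ecdsa"
	"crypto/elliptic"
	"crypto/rand"
	"crypto/sha256"
	"fmt"
)

// newKey generates a P-256 key pair standing in for a release signing key.
func newKey() (*ecdsa.PrivateKey, error) {
	return ecdsa.GenerateKey(elliptic.P256(), rand.Reader)
}

// signArtifact signs the SHA-256 digest of an artifact.
func signArtifact(priv *ecdsa.PrivateKey, artifact []byte) ([]byte, error) {
	digest := sha256.Sum256(artifact)
	return ecdsa.SignASN1(rand.Reader, priv, digest[:])
}

// verifyArtifact reports whether sig is a valid signature of the artifact under pub.
func verifyArtifact(pub *ecdsa.PublicKey, artifact, sig []byte) bool {
	digest := sha256.Sum256(artifact)
	return ecdsa.VerifyASN1(pub, digest[:], sig)
}

func main() {
	key, err := newKey()
	if err != nil {
		panic(err)
	}
	binary := []byte("pretend these are the bytes of a downloaded binary")
	sig, err := signArtifact(key, binary)
	if err != nil {
		panic(err)
	}
	fmt.Println(verifyArtifact(&key.PublicKey, binary, sig))                    // true
	fmt.Println(verifyArtifact(&key.PublicKey, []byte("tampered binary"), sig)) // false
}
```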

#2578 Windows operational readiness definition and tooling convergence

Stage: Alpha

Feature group: windows

Feature gate: N/A

Since Kubernetes 1.14, Windows container orchestration has been ready for enterprise adoption. However, Conformance tests haven't been as reliable in Windows as they are in Linux.

For this reason, to evaluate the operational readiness of a Windows Kubernetes cluster, specific tests will be developed covering the following areas:

- Basic Networking

- Basic Service accounts and local storage

- Basic Scheduling

- Basic concurrent functionality

- Windows HostProcess Operational Readiness

- Active Directory

- Network Policies

- Windows Advanced Networking and Service Proxying

- Windows Worker Configuration

This will provide you with tools for actions, like:

- Verifying that the features we rely on in Kubernetes are supported by a specific Windows Kubernetes cluster.

- Evaluating the completeness of the Kubernetes support matrix for Windows clusters.

- Knowing whether our current version of Kubernetes supports a specific Windows feature.

The efforts to create a stable ecosystem for Kubernetes on Windows are creating new opportunities for all those organizations whose applications are Windows-specific. Containers aren't Linux-exclusive anymore.

Learn more about this extensive topic in the KEP.

#2802 Identify Windows pods at API admission level authoritatively

Stage: Graduating to Beta

Feature group: windows

Feature gate: IdentifyPodOS Default value: true

This enhancement adds an OS field to PodSpec, so you can define which operating system a pod should be running on. That way, Kubernetes has better context to manage Pods.

Read more in our "Kubernetes 1.23 – What's new?" article.

That's all for Kubernetes 1.24, folks! Exciting as always; get ready to upgrade your clusters if you are intending to use any of these features.

If you liked this, you might want to check out our previous 'What's new in Kubernetes' editions:

- Kubernetes 1.23 – What's new?

- Kubernetes 1.22 – What's new?

- Kubernetes 1.21 – What's new?

- Kubernetes 1.20 – What's new?

- Kubernetes 1.19 – What's new?

- Kubernetes 1.18 – What's new?

- Kubernetes 1.17 – What's new?

- Kubernetes 1.16 – What's new?

- Kubernetes 1.15 – What's new?

- Kubernetes 1.14 – What's new?

- Kubernetes 1.13 – What's new?

- Kubernetes 1.12 – What's new?

Get involved in the Kubernetes community:

- Visit the project homepage.

- Check out the Kubernetes project on GitHub.

- Get involved with the Kubernetes community.

- Meet the maintainers on the Kubernetes Slack.

- Follow @KubernetesIO on Twitter.

And if you enjoy keeping up to date with the Kubernetes ecosystem, subscribe to our container newsletter, a monthly email with the coolest stuff happening in the cloud-native ecosystem.