
Another outstanding Kubernetes release, this time focused on making the CustomResource a first-class citizen in your cluster, allowing for better extensibility and maintainability. But wait, there is much more! Here is the full list of what's new in Kubernetes 1.15.

Kubernetes 1.15 – Editor's pick:

These are the features that look most exciting to us for this release (YMMV):

- #95 CustomResourceDefinitions

- #383 Redesign event API

- #962 Execution hooks

- #624 Scheduling framework

Kubernetes 1.15 core

#1024 NodeLocal DNSCache

Stage: Graduating to Beta

Feature group: Network

NodeLocal DNSCache improves Cluster DNS performance by running a DNS caching agent on cluster nodes as a DaemonSet, thereby avoiding iptables DNAT rules and connection tracking. The local caching agent will query the kube-dns service for cache misses of cluster hostnames (cluster.local suffix by default).

You can learn more about this beta feature by reading the design notes in its Kubernetes Enhancement Proposal (KEP) document.

#383 Redesign event API

Stage: Alpha

Feature group: Scalability

This effort has two main goals: reduce the performance impact that Events have on the rest of the cluster, and add more structure to the Event object, which is the first and necessary step to make it possible to automate event analysis.

The issues with the current state of the Events API are that they are too spammy, difficult to ingest and analyze, and suffer from several performance problems. Events can overload the API server when something is wrong with the cluster (e.g. a correlated crashloop on many nodes).

This design proposal expands on the current Event API issues and the proposed solutions and improvement efforts.
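One way to picture the "more structure" goal is the Event shape of the `events.k8s.io/v1beta1` API group, where the emitting controller, the action it took and the object concerned are separate fields instead of being folded into a free-form message. The manifest below is an illustrative sketch only: every name, timestamp and value is hypothetical, and the alpha work in 1.15 may change how such objects are produced. That API group also defines a `series` field (a count plus a last-observed time) meant to fold repeated occurrences into a single object, which targets the spam problem; it is left out here for brevity.

```yaml
# Illustrative Event using the structured events.k8s.io/v1beta1 schema.
# All names, timestamps and values are hypothetical.
apiVersion: events.k8s.io/v1beta1
kind: Event
metadata:
  name: my-app.15a8c1d2e3f4a5b6
  namespace: default
eventTime: "2019-06-20T10:00:00.000000Z"        # when the event first occurred (microsecond precision)
reportingController: example.com/my-controller  # which controller emitted the event
reportingInstance: my-controller-0              # which replica of that controller
action: Scale                                   # what the controller did
reason: ScaledUp                                # short, machine-readable reason
regarding:                                      # the object this event is about
  apiVersion: apps/v1
  kind: Deployment
  name: my-app
  namespace: default
note: "Scaled deployment my-app to 3 replicas"  # human-readable detail
type: Normal
```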

#492 Admission webhook

Stage: Beta (with major changes)

Feature group: API

Mutating and validating admission webhooks are becoming more and more mainstream for projects extending the Kubernetes API.

Until now, mutating webhooks were only called once, in alphabetical order. In Kubernetes 1.15 this changes: a webhook can be re-invoked if another webhook later in the chain modifies the same object.

If you enable this feature, it is important to verify that any admission webhook you implement is idempotent. In other words, it can be executed multiple times over the same object without side effects, like adding the same attribute several times.

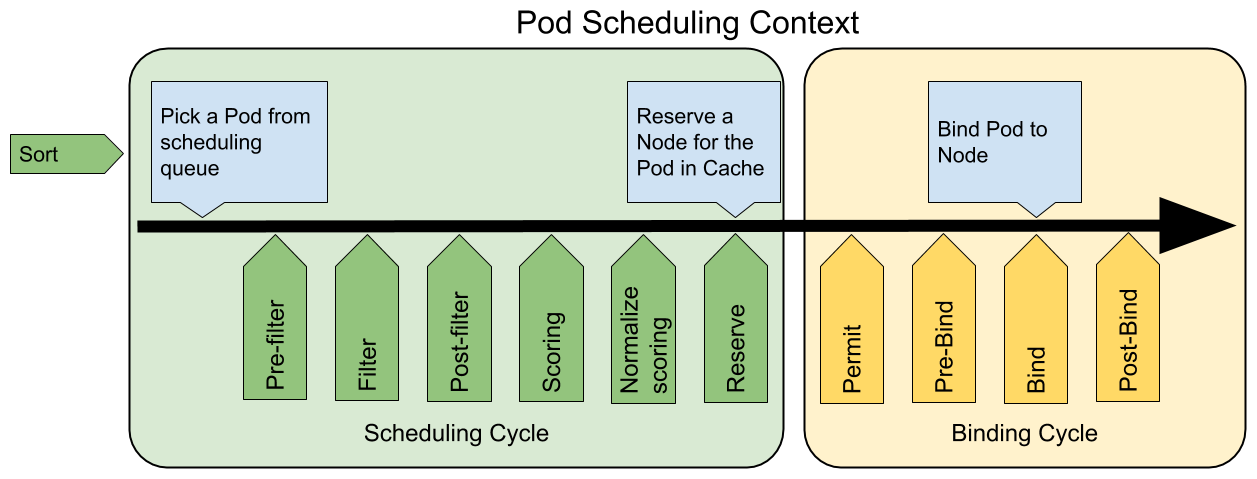
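A minimal sketch, assuming the `admissionregistration.k8s.io/v1beta1` API shipped with 1.15, of how a mutating webhook opts into re-invocation through the `reinvocationPolicy` field. The webhook, service and namespace names are hypothetical, and `caBundle` is left as a comment because its value depends on your certificates.

```yaml
# Sketch: opt a mutating webhook into re-invocation.
# Webhook, service and namespace names are hypothetical.
apiVersion: admissionregistration.k8s.io/v1beta1
kind: MutatingWebhookConfiguration
metadata:
  name: example-mutator
webhooks:
- name: add-default-labels.example.com
  # IfNeeded: call this webhook again if a later webhook modifies the object.
  # The default, Never, keeps the single-call behaviour. The webhook must be idempotent.
  reinvocationPolicy: IfNeeded
  clientConfig:
    service:
      namespace: webhooks
      name: example-mutator
      path: /mutate
    # caBundle: <base64-encoded CA bundle for the webhook's serving certificate>
  rules:
  - operations: ["CREATE", "UPDATE"]
    apiGroups: [""]
    apiVersions: ["v1"]
    resources: ["pods"]
  sideEffects: None
  failurePolicy: Fail
```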

#624 Scheduling framework

Stage: Alpha

Feature group: Scheduling

This feature covers a new pluggable architecture for the Kubernetes 1.15 Scheduler that makes scheduler customizations easier to implement. It adds a new set of "plugin" APIs and entry points to the existing scheduler.

The new scheduling framework exposes a set of extension points along the scheduling context of a Pod, where plugins can hook into the scheduling and binding cycles.

You can read more about this alpha-stage feature by reading the design proposal.

#606 Support 3rd party device monitoring plugins

Stage: Graduating to Beta

Feature group: Node

This feature allows the kubelet to expose container bindings to 3rd party monitoring plugins.

With this implementation, administrators will be able to monitor the custom resource assignment to containers using a 3rd party Device Monitoring Agent (for example, % GPU use per pod). The previous in-tree solution required the kubelet to implement device-specific knowledge.

The kubelet will offer a new gRPC service at /var/lib/kubelet/pod-resources/kubelet.sock that will export all the information about the assignment between containers and devices.
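A monitoring agent typically reaches that socket by mounting the host directory into its pod. The DaemonSet below is a minimal sketch of that wiring only; the agent name and image are hypothetical, and the agent itself would still need to speak the kubelet's pod-resources gRPC API, which is not shown here.

```yaml
# Sketch: run a (hypothetical) device monitoring agent on every node with
# read-only access to the kubelet pod-resources socket directory.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: device-monitor
spec:
  selector:
    matchLabels:
      app: device-monitor
  template:
    metadata:
      labels:
        app: device-monitor
    spec:
      containers:
      - name: agent
        image: example.com/device-monitor:latest   # hypothetical image
        volumeMounts:
        - name: pod-resources
          mountPath: /var/lib/kubelet/pod-resources
          readOnly: true
      volumes:
      - name: pod-resources
        hostPath:
          path: /var/lib/kubelet/pod-resources     # contains kubelet.sock
```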

#757 Pid limiting

Stage: Graduating to Beta

Feature group: Node

We already covered PID limiting in the previous edition of the "What's new in Kubernetes" series.

PIDs are a fundamental resource on any host. Administrators require mechanisms to ensure that user pods cannot induce PID exhaustion that may prevent host daemons (runtime, kubelet, etc.) from running.

This feature allows for the configuration of a kubelet to limit the number of PIDs a given pod can consume. Node-level support for PID limiting no longer requires setting the feature gate SupportNodePidsLimit=true explicitly.

There is a Kubernetes 1.15 blog post covering this feature.
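As a sketch, assuming the kubelet reads its settings from a `KubeletConfiguration` file, the pod-level limit and the node-level PID reservations could be expressed as follows. The numbers are arbitrary examples, not recommendations.

```yaml
# Sketch of a kubelet config file limiting PIDs (values are examples only).
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
# Pod level: maximum number of PIDs any single pod may use.
podPidsLimit: 1024
# Node level: PIDs set aside for system daemons and Kubernetes daemons,
# so user pods cannot exhaust them.
systemReserved:
  pid: "1000"
kubeReserved:
  pid: "1000"
```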

#902 Add non-preempting option to PriorityClasses

Stage: Alpha

Feature group: Scheduling

Kubernetes 1.15 adds the PreemptionPolicy field as an alpha feature.

PreemptionPolicy defaults to PreemptLowerPriority, which will allow pods of that PriorityClass to preempt lower-priority pods (the standard behaviour). Pods with PreemptionPolicy: Never will be placed in the scheduling queue ahead of lower-priority pods, but they cannot preempt other pods.

An example use case is for data science workloads: a user may submit a job that they want to be prioritized above other workloads, but do not wish to discard any existing work by preempting running pods.
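For the data science use case, a sketch might pair a non-preempting PriorityClass with a pod that references it. This assumes the alpha `NonPreemptingPriority` feature gate is enabled; the class name, value and image are hypothetical.

```yaml
# Sketch: high priority in the scheduling queue, but never evicts running pods.
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: high-priority-nonpreempting
value: 1000000
preemptionPolicy: Never      # queued ahead of lower priorities, no preemption
globalDefault: false
description: "Data science jobs: scheduled first, without preempting running pods."
---
apiVersion: v1
kind: Pod
metadata:
  name: training-job
spec:
  priorityClassName: high-priority-nonpreempting
  containers:
  - name: trainer
    image: example.com/trainer:latest   # hypothetical image
```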

#917 Add go module support to k8s.io/kubernetes

Stage: Stable

Feature group: Architecture

Since its creation, Kubernetes has used godep for vendoring all the required libraries.

As the Go ecosystem matured, vendoring became a first-class concept, godep became unmaintained, Kubernetes started using a custom version of godep, other vendoring tools (like glide and dep) became available, and dependency management was ultimately added directly to Go in the form of Go modules.

The plan of record is for go1.13 to enable Go modules by default and deprecate $GOPATH mode. To be ready for that, the code for several Kubernetes components was adjusted for the Kubernetes 1.15 release.

#956 Add Watch bookmarks support

Stage: Alpha

Feature group: API

A given Kubernetes server will only preserve a historical list of changes for a limited time. Clusters using etcd3 preserve changes in the last 5 minutes by default.

The "bookmark" watch event is used as a checkpoint, indicating that all objects up to a given resourceVersion that the client is requesting have already been sent.

For example, if a given Watch is requesting all the events starting with resourceVersion X and the API knows that this Watch is not interested in any of the events up to a much higher version, the API can skip sending all these events using a bookmark, avoiding unnecessary processing on both sides.

This proposal makes restarting watches cheaper from a kube-apiserver performance perspective.
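To make the checkpoint idea concrete, here is a sketch of a bookmark event as it might appear in a Pod watch stream, rendered as YAML for readability (the wire format is JSON). It assumes the alpha `WatchBookmark` feature gate is on and that the client asked for bookmarks with the `allowWatchBookmarks=true` query parameter; the resourceVersion value is made up. The object carries no Pod data, only the version the client can resume from.

```yaml
# Illustrative BOOKMARK watch event (rendered as YAML; resourceVersion is hypothetical).
type: BOOKMARK
object:
  kind: Pod
  apiVersion: v1
  metadata:
    # Everything up to this version has been delivered; a restarted watch
    # can resume from here instead of replaying older changes.
    resourceVersion: "12746"
```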

#962 Execution hooks

Stage: Alpha

Feature group: Storage

ExecutionHook provides a general mechanism for users to trigger hook commands in their containers for their different use cases, such as:

- Application-consistency snapshotting

- Upgrade

- Prepare for some lifecycle event like a database migration

- Reload a config file

- Restart a container

The hook spec has two pieces of information: what commands to execute and where to execute them (pod selector). Here is a HookAction example:

```yaml
apiVersion: apps.k8s.io/v1alpha1
kind: HookAction
metadata:
  name: action-demo
action:
  exec:
    command: ["run_quiesce.sh"]
actionTimeoutSeconds: 10
```

You can read more about this alpha feature in its Kubernetes Enhancement Proposal.

#981 PDB support for custom resources with scale subresource

Stage: Graduating to Beta

Feature group: Apps

Pod Disruption Budget (PDB) is a Kubernetes API that limits the number of pods of a collection that are down simultaneously from voluntary disruptions. PDBs allow a user to specify the allowed disruption through either a minimum available or a maximum unavailable number of pods. With this feature, PDBs can also protect pods managed by custom resources that implement the scale subresource, not only those managed by built-in controllers.

For example, for a stateless frontend:

- Concern: don't reduce serving capacity by more than 10%.

- Solution: use PDB with minAvailable 90%.

Using PDBs, you can allow the operator to manipulate Kubernetes workloads without degrading application availability or performance.
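The stateless frontend case could be sketched as the PDB below. The `app: frontend` label is hypothetical; the selected pods may belong to a Deployment or, with this feature, to a custom resource exposing the scale subresource, which is what lets the disruption controller work out how many pods are expected.

```yaml
# Sketch: keep at least 90% of the frontend pods during voluntary disruptions.
apiVersion: policy/v1beta1
kind: PodDisruptionBudget
metadata:
  name: frontend-pdb
spec:
  minAvailable: "90%"
  selector:
    matchLabels:
      app: frontend   # hypothetical label on the frontend pods
```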

Kubernetes 1.15 custom resources

#95 CustomResourceDefinitions

Stage: Beta (with major changes)

Feature group: API

This feature groups the many modifications and improvements that have been made to CustomResourceDefinitions in this Kubernetes 1.15 release:

- Structural schema using OpenAPI

- CRD pruning

- CRD defaulting

- Webhook conversion moved to beta

- Publishing the CRD OpenAPI schema

#692 Publish CRD OpenAPI schema

Stage: Graduating to Beta

Feature group: API

CustomResourceDefinition (CRD) allows the CRD author to define an OpenAPI v3 schema to enable server-side validation for CustomResources (CR).

Publishing CRD OpenAPI schemas enables client-side validation, schema explanation (for example using kubectl create, apply or explain) and automatic client generation for CRs, so you can easily instrument your API using any supported programming language.

Using the OpenAPI specification will help CRD authors and the Kubernetes API machinery to have a clearer and more precise document format moving forward.
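As a sketch of what gets published, here is a hypothetical `CronTab` CRD (group `stable.example.com`, used only for illustration) whose structural OpenAPI v3 schema includes `description` fields. Assuming the `CustomResourcePublishOpenAPI` feature gate is active (it is on by default as a beta feature), `kubectl explain crontab.spec` should be able to show those descriptions, and clients can validate `CronTab` objects before sending them.

```yaml
# Sketch: a CRD with a structural schema that the API server can publish.
# Group, kind and fields are hypothetical.
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: crontabs.stable.example.com
spec:
  group: stable.example.com
  version: v1
  scope: Namespaced
  names:
    plural: crontabs
    singular: crontab
    kind: CronTab
  validation:
    openAPIV3Schema:
      type: object
      properties:
        spec:
          type: object
          description: Desired state of the CronTab.
          properties:
            cronSpec:
              type: string
              description: Schedule in cron format.
            replicas:
              type: integer
              minimum: 1
              description: Number of pods to run per invocation.
```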

#575 Defaulting and pruning for custom resources

Stage: Alpha

Feature group: API

Two features aiming to facilitate the JSON handling and processing associated with CustomResourceDefinitions.

Pruning: CustomResourceDefinitions traditionally store any (possibly validated) JSON as it is in etcd. Now, if a structural OpenAPI v3 validation schema is defined and preserveUnknownFields is false, unspecified fields on creation and on update are dropped.

```yaml
preserveUnknownFields: false
validation:
  openAPIV3Schema:
    type: object
```

Defaulting: Defaulting is available as alpha since 1.15. It is disabled by default and can be enabled via the CustomResourceDefaulting feature gate. Defaulting also requires a structural schema and pruning.

```yaml
spec:
  type: object
  properties:
    cronSpec:
      type: string
      pattern: '^(\d+|\*)(/\d+)?(\s+(\d+|\*)(/\d+)?){4}$'
      default: "5 0 * * *"
```
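To see both mechanisms together, suppose a hypothetical `CronTab` CRD whose `spec` schema declares `cronSpec` (defaulting to `"5 0 * * *"`) and an `image` string, with `preserveUnknownFields: false` and the `CustomResourceDefaulting` gate enabled. Both the `image` property and the object below are assumptions made for this sketch. The undeclared field would be pruned and the missing `cronSpec` defaulted.

```yaml
# Hypothetical CronTab as submitted by a user.
apiVersion: stable.example.com/v1
kind: CronTab
metadata:
  name: my-new-cron-object
spec:
  image: my-awesome-cron-image
  someRandomField: 42   # not declared in the schema, so pruning drops it
# cronSpec is omitted, so defaulting fills it in. The resulting spec would be:
#   spec:
#     cronSpec: "5 0 * * *"
#     image: my-awesome-cron-image
```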

#598 Webhook conversion for custom resources

Stage: Graduating to Beta

Feature group: API

Different CRD versions can have different schemas. You can now handle on-the-fly conversion between versions by defining and implementing a conversion webhook. This webhook will be called, for example, in the following cases:

- A custom resource is requested in a different version than the stored version.

- A Watch is created in one version but the changed object is stored in another version.

- A custom resource PUT request is in a different version than the storage version.

There is an example implementation of a custom resource conversion webhook server that you can use as a reference.
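A minimal sketch of how a CRD might opt into webhook conversion, assuming the `apiextensions.k8s.io/v1beta1` API of this release. The group, service name, namespace and path are hypothetical. In 1.15 the `Webhook` strategy also requires `preserveUnknownFields: false`, which in turn needs a structural schema, so a small one is included.

```yaml
# Sketch: a CRD serving two versions, converted by a webhook.
# Group, service name, namespace and path are hypothetical.
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: crontabs.stable.example.com
spec:
  group: stable.example.com
  scope: Namespaced
  names:
    plural: crontabs
    singular: crontab
    kind: CronTab
  versions:
  - name: v1beta1
    served: true
    storage: true    # objects are persisted in this version
  - name: v1
    served: true
    storage: false   # requests for v1 go through the conversion webhook
  preserveUnknownFields: false
  validation:
    openAPIV3Schema:
      type: object
      properties:
        spec:
          type: object
          properties:
            cronSpec:
              type: string
            image:
              type: string
  conversion:
    strategy: Webhook
    webhookClientConfig:
      service:
        namespace: default
        name: example-conversion-webhook-server
        path: /crdconvert
      # caBundle: <base64-encoded CA bundle for the webhook's serving certificate>
```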

Configuration management

#515 Kubectl get and describe should work well with extensions

Stage: Graduating to Stable

Feature group: Cli

Now it is possible for third-party API extensions and CRDs to provide custom output for kubectl get and describe. This moves the output printing to the server side, allowing for better extensibility and decoupling the kubectl tool from the specifics of the extension implementation.

You can read the design proposal for this feature and the related server-side get here.
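For CRDs, the columns shown by `kubectl get` can be declared in the CRD itself through `additionalPrinterColumns`, and the API server renders them, so kubectl needs no type-specific code. The sketch below uses a hypothetical `CronTab` CRD; note that in the v1beta1 API the path field is spelled `JSONPath`.

```yaml
# Sketch: server-side columns for `kubectl get crontabs` (hypothetical CRD).
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: crontabs.stable.example.com
spec:
  group: stable.example.com
  version: v1
  scope: Namespaced
  names:
    plural: crontabs
    singular: crontab
    kind: CronTab
  additionalPrinterColumns:
  - name: Spec
    type: string
    description: The cron schedule
    JSONPath: .spec.cronSpec
  - name: Replicas
    type: integer
    JSONPath: .spec.replicas
  - name: Age
    type: date
    JSONPath: .metadata.creationTimestamp
```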

#970 Kubeadm: New v1beta2 config format

Stage: Graduating to Beta

Feature group: Cluster lifecycle

Over time, the number of options to configure the creation of a Kubernetes cluster has greatly increased in the kubeadm config file, while the number of CLI parameters has been kept the same. As a result, the config file is the only way to create a cluster with several specific use cases.

The goal of this feature is to redesign how the config is persisted, improving the current version and providing better support for high availability clusters using substructures instead of a single flat file with all the options.
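A sketch of a kubeadm config file in the v1beta2 format, assuming it is passed with `kubeadm init --config`. It shows node-specific settings (`InitConfiguration`) and cluster-wide settings (`ClusterConfiguration`) living in separate typed documents. Every address, name and the bootstrap token are placeholders.

```yaml
# Sketch: kubeadm v1beta2 config split into typed documents (values are placeholders).
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
bootstrapTokens:
- token: "abcdef.0123456789abcdef"
  ttl: "24h0m0s"
nodeRegistration:
  name: control-plane-1
localAPIEndpoint:
  advertiseAddress: 192.168.0.10
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.15.0
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
```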

#357 Ability to create dynamic HA clusters with kubeadm

Stage: Graduating to Beta

Feature group: Cluster lifecycle

Kubernetes can have more than a single control plane to provide high availability. The kubeadm tool is now able to set up a Kubernetes HA cluster:

- With stacked control plane nodes, where etcd nodes are colocated with control plane nodes

- With external etcd nodes, where etcd runs on separate nodes from the control plane

This feature was introduced as a net new alpha in Kubernetes 1.14. You can read about the motivation, design and use cases covered in this KEP.
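For the external etcd topology, a sketch of the `ClusterConfiguration` given to `kubeadm init --config` on the first control plane node could look like this. The load balancer address, etcd endpoints and certificate paths are placeholders to adapt to your environment.

```yaml
# Sketch: HA control plane behind a load balancer, using an external etcd cluster.
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.15.0
# Stable address (e.g. a load balancer) in front of all control plane nodes.
controlPlaneEndpoint: "lb.example.com:6443"
etcd:
  external:
    endpoints:
    - https://10.0.0.11:2379
    - https://10.0.0.12:2379
    - https://10.0.0.13:2379
    caFile: /etc/kubernetes/pki/etcd/ca.crt
    certFile: /etc/kubernetes/pki/apiserver-etcd-client.crt
    keyFile: /etc/kubernetes/pki/apiserver-etcd-client.key
```

For the stacked topology, the `etcd.external` block is simply left out, and kubeadm runs a local etcd member on each control plane node.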

Cloud providers

#423 Support AWS network load balancer

Stage: Graduating to Beta

Feature group: AWS

You can now use annotations in your Kubernetes 1.15 services to request the new AWS NLB as your LoadBalancer type:

```yaml
metadata:
  name: my-service
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-type: "nlb"
```

Unlike Classic Elastic Load Balancers, Network Load Balancers (NLBs) forward the client's IP through to the node. The AWS network load balancer has been supported in Kubernetes as an alpha feature since v1.9. The code and API have been stabilized, reaching beta stage.
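The annotation snippet is only a fragment of a Service. A complete manifest using it might look like the sketch below; the selector label and port numbers are hypothetical.

```yaml
# Sketch: a LoadBalancer Service backed by an AWS NLB.
apiVersion: v1
kind: Service
metadata:
  name: my-service
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-type: "nlb"
spec:
  type: LoadBalancer
  selector:
    app: my-app          # hypothetical pod label
  ports:
  - protocol: TCP
    port: 80             # port exposed by the load balancer
    targetPort: 8080     # hypothetical container port
```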

#980 Finalizer protection for service LoadBalancers

Stage: Alpha

Feature group: Network

By default, the LoadBalancer resources allocated in a cloud provider should be cleaned up after the related Kubernetes service is deleted. There are, however, various corner cases where cloud resources are orphaned after the associated Service is deleted. Finalizer Protection for Service LoadBalancers was introduced to prevent this from happening.

This finalizer will be attached to any service that has type=LoadBalancer if the cluster has enabled cloud provider integration. Upon the deletion of such a service, the actual deletion of the resource will be blocked until this finalizer is removed.
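As an illustration, assuming the alpha `ServiceLoadBalancerFinalizer` feature gate is enabled, a LoadBalancer Service would end up carrying the finalizer shown below. It is added by the service controller, not written by the user, and removed once the cloud load balancer has been cleaned up. Names and ports are hypothetical.

```yaml
# Illustration: a LoadBalancer Service after the controller added the cleanup finalizer.
apiVersion: v1
kind: Service
metadata:
  name: my-service
  finalizers:
  - service.kubernetes.io/load-balancer-cleanup   # blocks deletion until cloud resources are gone
spec:
  type: LoadBalancer
  selector:
    app: my-app
  ports:
  - port: 80
    targetPort: 8080
```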

Storage

#625 In-tree storage plugin to CSI Driver Migration

Stage: Alpha

Feature group: Storage

Storage plugins were originally in-tree, inside the Kubernetes codebase, increasing the complexity of the base code and hindering extensibility. The target is to move all this code to loadable plugins that can interact with Kubernetes through the Container Storage Interface.

This will reduce the development costs and will make it more modular and extensible, increasing compatibility between different versions of the storage plugin and the Kubernetes code base. You can read more about the ongoing effort to move storage plugins to CSI here.
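Trying the alpha migration means turning on feature gates on the components that handle volumes. The sketch below assumes GCE Persistent Disk volumes and a kubelet configured through a config file; `CSIMigration` and `CSIMigrationGCE` are the gate names we are aware of for this release, but check the feature gate list for your exact version. The matching CSI driver must already be installed, and the same gates need to be enabled on kube-controller-manager as well.

```yaml
# Sketch: kubelet config enabling alpha CSI migration for GCE PD volumes.
# Enable the same gates on kube-controller-manager (e.g. via --feature-gates).
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
featureGates:
  CSIMigration: true      # general switch for in-tree to CSI migration
  CSIMigrationGCE: true   # route GCE PD in-tree volume operations to the CSI driver
```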

#989 Extend allowed PVC DataSources

Stage: Alpha

Feature group: Storage

Using this feature, you can "clone" an existing PV.

Clones are different from Snapshots. A Clone results in a new, duplicate volume being provisioned from an existing volume: it counts against the user's volume quota, it follows the same create flow and validation checks as any other volume provisioning request, and it has the same lifecycle and workflow.

You need to be aware of the following when using this feature:

- Cloning support (VolumePVCDataSource) is only available for CSI drivers.

- Cloning support is only available for dynamic provisioners.

- CSI drivers may or may not have implemented the volume cloning functionality.
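A sketch of a clone request, assuming the alpha `VolumePVCDataSource` feature gate is enabled and the StorageClass is backed by a CSI driver that implements cloning. Claim names, namespace, class and size are hypothetical; the source claim has to be in the same namespace as the new one.

```yaml
# Sketch: provision a new volume as a clone of the existing claim pvc-1.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-1-clone
  namespace: myns
spec:
  storageClassName: csi-cloning   # hypothetical class using a cloning-capable CSI driver
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi               # must be at least the size of the source volume
  dataSource:
    kind: PersistentVolumeClaim
    name: pvc-1                   # source claim, same namespace
```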

#1029 Quotas for ephemeral storage

Stage: Alpha

Feature group: Node

The current quota mechanism relies on periodically walking each ephemeral volume; this method is slow and has high latency. The mechanism proposed in this feature utilizes filesystem project quotas to provide monitoring of resource consumption and, optionally, enforcement of limits.

Its goals are:

- Improve performance of monitoring by using project quotas in a non-enforcing way to collect information about storage utilization of ephemeral volumes.

- Detect storage used by pods that is concealed by deleted files being held open.

This could also provide a way to enforce limits on per-volume storage consumption by using enforced project quotas.
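Enabling the alpha monitoring is a kubelet feature gate plus a filesystem prerequisite. A minimal sketch, assuming the gate name `LocalStorageCapacityIsolationFSQuotaMonitoring` from the upstream documentation and a kubelet root directory on a filesystem with project quotas enabled (for example XFS mounted with `prjquota`); verify both against your version and distribution.

```yaml
# Sketch: kubelet config turning on project-quota based monitoring of
# ephemeral volume usage (requires project quotas on the backing filesystem).
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
featureGates:
  LocalStorageCapacityIsolationFSQuotaMonitoring: true
```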

#531 Add support for online resizing of PVs

Stage: Graduating to Beta

Feature group: Storage

This feature enables users to expand a volume's file system by editing a PVC without having to restart the pod using the PVC. Expanding in-use PVCs is a beta feature and is enabled by default via the ExpandInUsePersistentVolumes feature gate.

File system expansion can be performed:

- When the pod is starting up

- When the pod is running and the underlying file system supports online expansion (XFS, ext3 or ext4).

Read more about this feature in the Kubernetes 1.15 official documentation.
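A sketch of the workflow, with hypothetical names and sizes: the StorageClass has to allow expansion, and growing the volume then comes down to raising the storage request on the existing claim. The provisioner shown is just one example of a volume type that supports resizing.

```yaml
# 1) A StorageClass that allows expansion.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: expandable-ssd
provisioner: kubernetes.io/aws-ebs   # example provisioner whose volumes can be resized
allowVolumeExpansion: true
---
# 2) The claim after editing its request (e.g. from 10Gi to 20Gi). With online
#    expansion and a supported file system, the pod using it can keep running.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: data
spec:
  storageClassName: expandable-ssd
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi
```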

#559 Provide environment variables expansion in sub path mount

Stage: Graduating to Beta

Feature group: Storage

Systems often need to define the mount paths depending on environment variables. The previous workaround was to create a sidecar container with symbolic links. To avoid this boilerplate, Kubernetes introduces the possibility to use environment variables in the sub path, so instead of writing:

```yaml
env:
- name: POD_NAME
  valueFrom:
    fieldRef:
      apiVersion: v1
      fieldPath: metadata.name
...
volumeMounts:
- name: workdir1
  mountPath: /logs
  subPath: $(POD_NAME)
```

You could write:

```yaml
volumeMounts:
- name: workdir1
  mountPath: /logs
  subPathExpr: $(POD_NAME)
```

And that's all, for now! If you enjoy keeping up to date with the Kubernetes ecosystem, subscribe to our container newsletter, a monthly email with the coolest stuff happening in the cloud-native ecosystem.
