Pods stuck in the Pending phase show up in every Kubernetes cluster, whatever its level of maturity.

If you ask any random DevOps engineer using Kubernetes to identify the most common error that haunts their nightmares, a deployment with pending pods is near the top of their list (perhaps second only to CrashLoopBackOff).

Trying to push an update and seeing it stuck can make DevOps engineers nervous. Even when the solution is fairly easy, finding the cause of a pending pod and understanding the changes you need to apply can take time (Kubernetes troubleshooting is rarely trivial).

In this article, we will cast some light on the different situations that cause this issue, allowing DevOps teams to find the solution quickly and, best of all, avoid it as much as possible.

What does Kubernetes Pod pending mean?

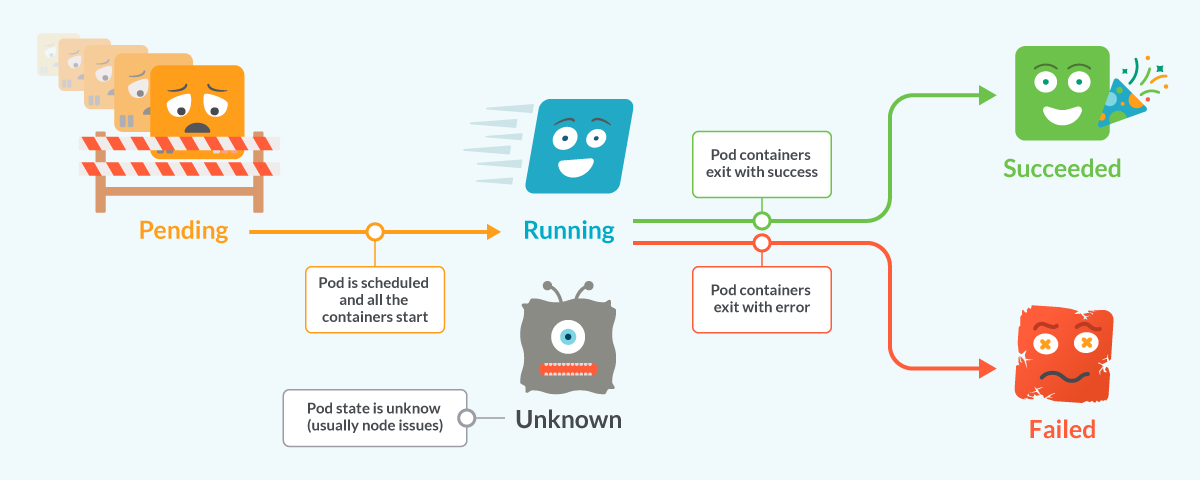

Pods in Kubernetes have a lifecycle composed of several different phases:

- When a pod is created, it starts in the Pending phase.

- Once the pod is scheduled and the containers have started, the pod changes to the Running phase.

Most pods take only seconds to progress from Pending to Running and spend most of their life in the Running phase.

While a pod is in the Pending phase, it has been accepted by the Kubernetes cluster, but one or more of its containers have not been set up and made ready to run. This includes the time a pod spends waiting to be scheduled as well as the time spent downloading container images over the network.

When a pod can’t progress from the Pending to Running phase, the life cycle stops and the pod is held until the problem preventing it from progressing is fixed.

If we list the pods with kubectl, the output shows the pod with a Pending status:

    $ kubectl -n troubleshooting get pods
    NAME                      READY   STATUS    RESTARTS   AGE
    stress-6d6cbc8b9d-s4sbh   0/1     Pending   0          17s

The pod is stuck and won’t run unless we fix the problem.
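When a namespace holds many pods, it can help to filter on the phase programmatically. The following is a minimal sketch, assuming you feed it the parsed output of `kubectl get pods -o json`; the function name `pending_pods` and the sample data (including the `web-5c9f7d-abcde` pod) are made up for illustration.

```python
import json

def pending_pods(pod_list):
    """Return (namespace, name) for every pod whose phase is Pending."""
    result = []
    for pod in pod_list.get("items", []):
        if pod.get("status", {}).get("phase") == "Pending":
            meta = pod.get("metadata", {})
            result.append((meta.get("namespace"), meta.get("name")))
    return result

# Hypothetical, trimmed-down sample of `kubectl get pods -o json` output.
sample = json.loads("""
{"items": [
  {"metadata": {"namespace": "troubleshooting", "name": "stress-6d6cbc8b9d-s4sbh"},
   "status": {"phase": "Pending"}},
  {"metadata": {"namespace": "troubleshooting", "name": "web-5c9f7d-abcde"},
   "status": {"phase": "Running"}}
]}
""")

print(pending_pods(sample))
```

Note that the phase is not always what kubectl prints in the STATUS column: a pod shown as ContainerCreating, for example, is still in the Pending phase.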

Troubleshooting Kubernetes pod pending common causes

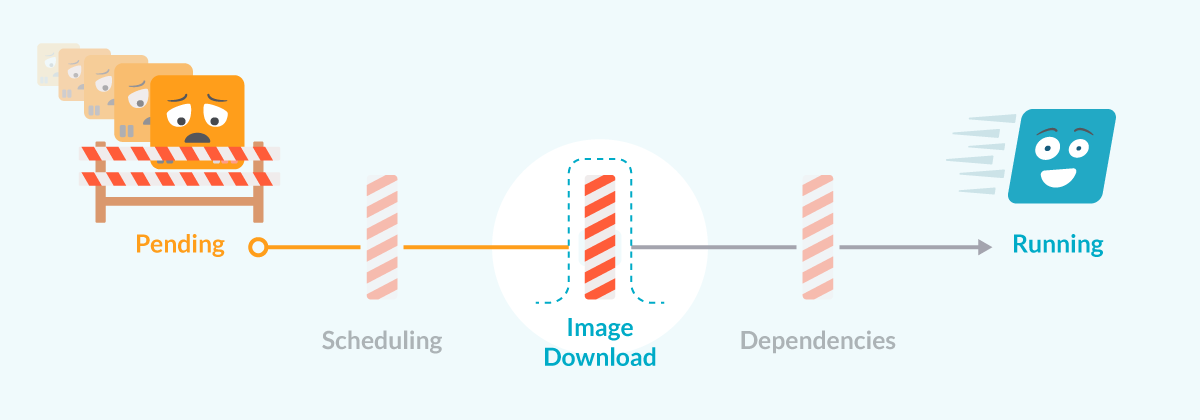

There are several causes that can prevent a pod from running, but we will describe the three main issues:

- Scheduling issues: The pod can’t be scheduled in any Kubernetes node.

- Image issues: There are issues downloading the container images.

- Dependency issues: The pod needs a volume, secret, or config map that isn’t available.

The first one is the most common and the last one is rarely seen. Let’s elaborate on each case.

Kubernetes Pod pending due to scheduling issues

When a pod is created, the first thing that a Kubernetes cluster does is try to schedule the pod to run in one of the nodes. This process is often really fast and the pod is assigned quickly to a node with enough resources to run it.

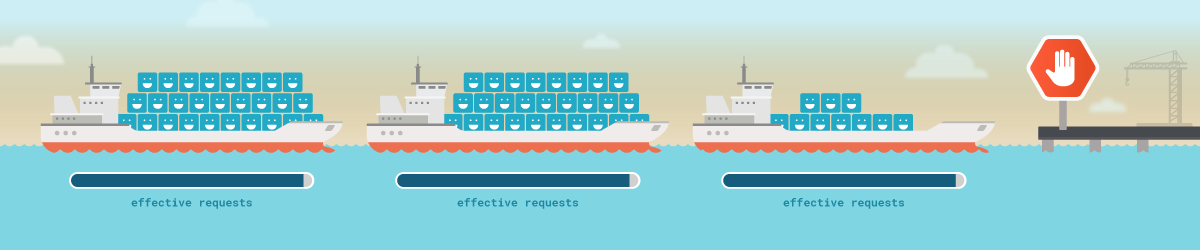

In order to schedule it, the cluster uses the pod’s effective request (find more details in this post about pod eviction). Usually, the pod is assigned to the node with the most unrequested resources and goes on with its happy and wonderful life full of SLO-compliant replies to requests.

But you wouldn’t be reading this article if this process worked every time. There are several factors that could make the cluster unable to allocate the pod.

Let’s review the most common ones.

There aren’t enough resources in any node to allocate the pod

Kubernetes uses requests for scheduling to decide if a pod fits in the node. The real use of resources doesn’t matter, only the resources already requested by other pods.

A pod will be scheduled in a node when the node has enough requestable resources to satisfy the pod’s effective requests for memory and CPU. Of course, the node must not have reached the maximum number of pods it can run.

When no node meets all the requirements of the pod, the pod is held in the Pending phase until some resources are freed.
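The fit check described above can be sketched in a few lines. This is a simplified model and not the scheduler's actual code: the `Node` class, the `fit_failures` function, and all node figures are assumptions for illustration, and the reason strings are only modeled on the messages the scheduler reports. The pod requests (200m CPU, 100000Mi memory) are the ones from the example pod used later in this article.

```python
from dataclasses import dataclass

@dataclass
class Node:
    name: str
    allocatable_cpu_m: int     # allocatable CPU, in millicores
    allocatable_mem_mi: int    # allocatable memory, in MiB
    max_pods: int              # maximum number of pods the node can run
    requested_cpu_m: int = 0   # sum of CPU requests of pods already on the node
    requested_mem_mi: int = 0  # sum of memory requests of pods already on the node
    pod_count: int = 0

def fit_failures(node, cpu_m, mem_mi):
    """Return the reasons why a pod doesn't fit; an empty list means it fits."""
    reasons = []
    # Only requests count here; actual usage on the node is ignored.
    if node.requested_cpu_m + cpu_m > node.allocatable_cpu_m:
        reasons.append("Insufficient cpu")
    if node.requested_mem_mi + mem_mi > node.allocatable_mem_mi:
        reasons.append("Insufficient memory")
    if node.pod_count + 1 > node.max_pods:
        reasons.append("Too many pods")
    return reasons

# Hypothetical node with 4 CPUs and 16000Mi of allocatable memory.
node = Node("worker-1", allocatable_cpu_m=4000, allocatable_mem_mi=16000,
            max_pods=110, requested_cpu_m=1500, requested_mem_mi=6000,
            pod_count=20)

print(fit_failures(node, cpu_m=200, mem_mi=100000))  # ['Insufficient memory']
print(fit_failures(node, cpu_m=200, mem_mi=512))     # []
```

A node that is barely used can still reject a pod if the requests of its current pods add up to most of its allocatable resources.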

Unschedulable nodes

Due to different issues (node pressure) or human actions (node cordoned), a node can change to an unschedulable state. The scheduler won’t place any new pods on these nodes until their state changes.

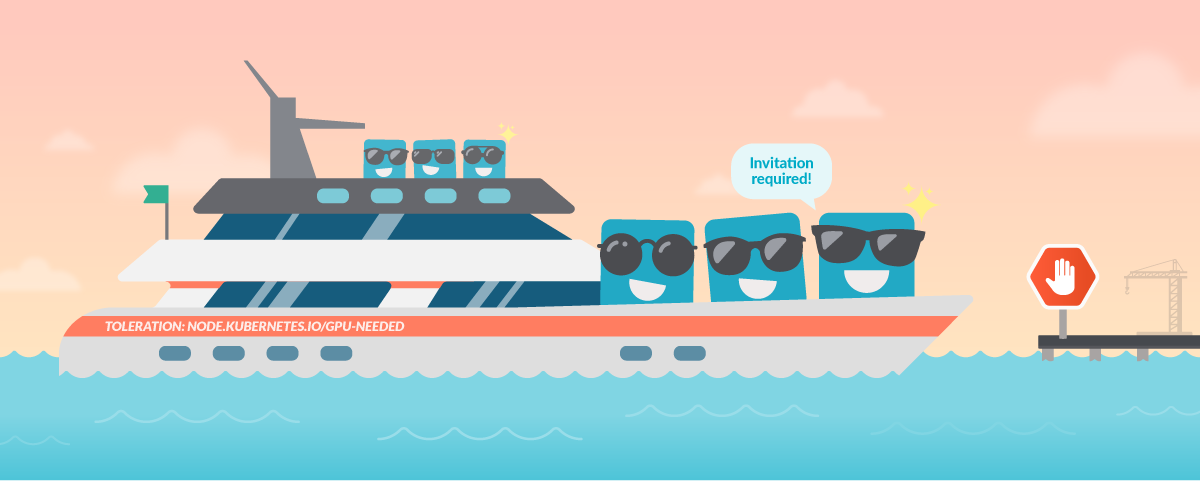

Taints and tolerations

Taints are a Kubernetes mechanism that allows us to limit the pods that can be assigned to different nodes. When a node has a taint, only pods with a matching toleration will be able to run in that node.

This mechanism allows special uses of Kubernetes, like having different types of nodes for different workloads (nodes with GPUs, with different CPU/memory ratios, etc.).
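To make the matching rule concrete, here is a minimal sketch of how a toleration is compared with a taint. It works on plain dictionaries shaped like the `taints` and `tolerations` fields of the node and pod specs; the function names are made up, and the `NoSchedule` effect on the master taint is an assumption (it is the usual effect for that taint, but the effect is not shown in this article's output).

```python
def tolerates(toleration, taint):
    """True if a single toleration matches a single taint."""
    # A toleration without an effect matches every effect.
    if toleration.get("effect") and toleration["effect"] != taint.get("effect"):
        return False
    operator = toleration.get("operator", "Equal")
    key = toleration.get("key")
    if operator == "Exists":
        # An empty key with Exists matches every taint key.
        return not key or key == taint["key"]
    # Equal (the default): key and value must both match.
    return key == taint["key"] and toleration.get("value", "") == taint.get("value", "")

def blocking_taints(taints, tolerations):
    """Return the taints that keep the pod off the node."""
    hard_effects = ("NoSchedule", "NoExecute")  # PreferNoSchedule is only a preference
    return [t for t in taints
            if t.get("effect") in hard_effects
            and not any(tolerates(tol, t) for tol in tolerations)]

# Assumed effect: NoSchedule.
master_taint = {"key": "node-role.kubernetes.io/master", "effect": "NoSchedule"}

# Tolerations that pods commonly carry by default.
default_tolerations = [
    {"key": "node.kubernetes.io/not-ready", "operator": "Exists", "effect": "NoExecute"},
    {"key": "node.kubernetes.io/unreachable", "operator": "Exists", "effect": "NoExecute"},
]
master_toleration = {"key": "node-role.kubernetes.io/master",
                     "operator": "Exists", "effect": "NoSchedule"}

print(blocking_taints([master_taint], default_tolerations))
print(blocking_taints([master_taint], default_tolerations + [master_toleration]))  # []
```

The first call returns the master taint, because none of the default tolerations match it; adding a matching toleration to the pod clears the list.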

Although we describe each reason separately, scheduling problems are often caused by a combination of these issues. Usually, you can’t schedule because some nodes are full and the other nodes are tainted, or maybe one node is unschedulable due to memory pressure.

In order to find out what the scheduling problem is, you need to check the events that the scheduler generates for the pod, which include a detailed description of the reasons that prevent the pod from being assigned to a node. We can see the events with kubectl describe, for example:

    $ kubectl -n troubleshooting describe pod stress-6d6cbc8b9d-s4sbh
    Name:           stress-6d6cbc8b9d-s4sbh
    Namespace:      troubleshooting
    Priority:       0
    Node:           <none>
    Labels:         app=stress
                    pod-template-hash=6d6cbc8b9d
    Annotations:    <none>
    Status:         Pending
    IP:
    IPs:            <none>
    Controlled By:  ReplicaSet/stress-6d6cbc8b9d
    Containers:
      stress:
        Image:      progrium/stress
        Port:       <none>
        Host Port:  <none>
        Args:
          --cpu
          1
          --vm
          2
          --vm-bytes
          150M
        Limits:
          cpu:     300m
          memory:  120000Mi
        Requests:
          cpu:        200m
          memory:     100000Mi
        Environment:  <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-snrww (ro)
    Conditions:
      Type           Status
      PodScheduled   False
    Volumes:
      kube-api-access-snrww:
        Type:                    Projected (a volume that contains injected data from multiple sources)
        TokenExpirationSeconds:  3607
        ConfigMapName:           kube-root-ca.crt
        ConfigMapOptional:       <nil>
        DownwardAPI:             true
    QoS Class:                   Burstable
    Node-Selectors:              <none>
    Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:
      Type     Reason            Age                   From               Message
      ----     ------            ----                  ----               -------
      Warning  FailedScheduling  4m17s (x41 over 34m)  default-scheduler  0/5 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 4 Insufficient memory.

We can see the exact reason in the message:

    0/5 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 4 Insufficient memory.

- One of the nodes is tainted.

- Four of the nodes don’t have enough requestable memory.
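If you collect these events across many pods, splitting the message into per-reason counts makes them easier to aggregate. The sketch below assumes the message has exactly the shape shown in this article; the wording varies between Kubernetes versions, so treat the regular expressions as a starting point. The function name `parse_failed_scheduling` is made up for this example.

```python
import re

def parse_failed_scheduling(message):
    """Split a FailedScheduling message into (available, total, reasons)."""
    header = re.match(r"(\d+)/(\d+) nodes are available: (.*)", message)
    if header is None:
        raise ValueError("unrecognized message format")
    available, total = int(header.group(1)), int(header.group(2))
    details = header.group(3).rstrip(".")
    reasons = {}
    # A new reason starts at ", <count> "; commas inside a reason are kept.
    for part in re.split(r", (?=\d+ )", details):
        count, text = part.split(" ", 1)
        reasons[text] = int(count)
    return available, total, reasons

message = ("0/5 nodes are available: 1 node(s) had taint "
           "{node-role.kubernetes.io/master: }, that the pod didn't tolerate, "
           "4 Insufficient memory.")

available, total, reasons = parse_failed_scheduling(message)
print(available, total)  # 0 5
for text, count in reasons.items():
    print(count, "->", text)
```

For the message above, this yields one node rejected because of the taint and four rejected for insufficient memory, which adds up to the five nodes in the cluster.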

In order to fix this problem, we have two options:

- Reduce the requests in the pod spec (You can find a really good guide on how to rightsize your requests in this article).

- Increase the capacity of the cluster by adding more nodes or increasing the size of every node.

There is another important factor to consider when updating a workload that is already running: the update strategy.

With a rolling update, Kubernetes can allow the workload to create more pods than usual while the update is in progress, keeping old pods for some time while it creates new ones. This means a workload can request more resources than expected for some time. If the cluster doesn’t have enough spare resources, the update will be blocked, leaving some pods pending until the process is unblocked (or the rollback timeout stops the update).
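To estimate how much headroom an update needs, we can compute the extra pods a rolling update may create. The sketch below assumes a Deployment using the RollingUpdate strategy with its `maxSurge` and `maxUnavailable` settings, both of which default to 25%; percentages are rounded up for `maxSurge` and down for `maxUnavailable`. The helper names and the 10-replica workload are made up for the example, while the 200m CPU request comes from the pod shown earlier.

```python
def resolve(value, replicas, round_up):
    """Resolve an absolute number or a percentage string such as '25%'."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = replicas * int(value[:-1])
        # Integer arithmetic avoids floating-point rounding surprises.
        return -(-scaled // 100) if round_up else scaled // 100
    return int(value)

def rollout_bounds(replicas, max_surge="25%", max_unavailable="25%"):
    """Return (max total pods, min available pods) during a rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    return replicas + surge, replicas - unavailable

replicas = 10            # hypothetical workload size
cpu_request_m = 200      # per-pod CPU request, in millicores

max_pods, min_available = rollout_bounds(replicas)
extra_cpu_m = (max_pods - replicas) * cpu_request_m

print(max_pods, min_available)  # 13 8
print(extra_cpu_m)              # 600
```

In this example the cluster needs room for three additional pods (600m of CPU requests) on top of the normal footprint, or some of the new pods will stay pending.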

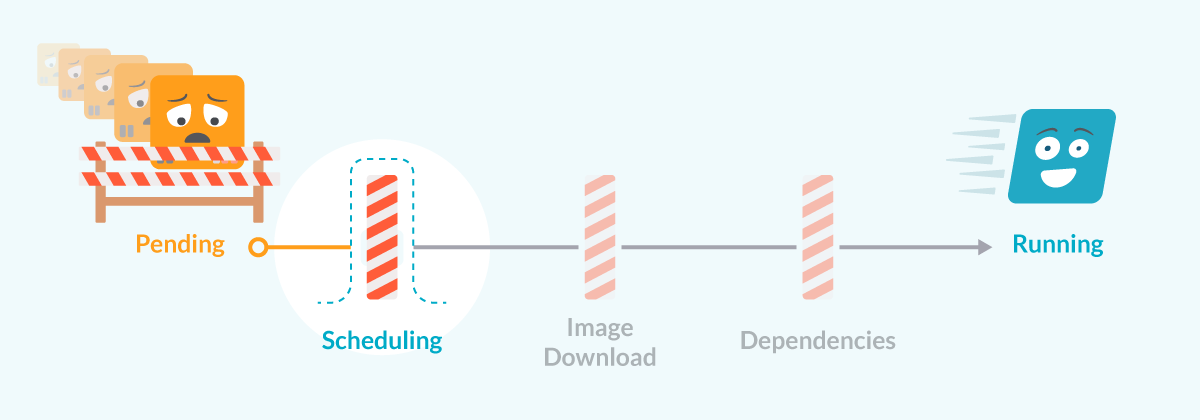

Pod pending due to image issues

Once the pod is assigned to a node, the kubelet will try to start all the containers in the pod spec. In order to do that, it will try to download the image and run it.

There are several errors that can prevent the image from being downloaded:

- Wrong image name.

- Wrong image tag.

- Wrong repository.

- Repository requires authentication.

For more information about image problems, check the article on ErrImagePull and ImagePullBackOff.
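A quick way to confirm that a pending pod is stuck on an image pull is to look at the waiting reason of its containers. The following sketch assumes a pod object parsed from `kubectl get pod <name> -o json`; the function name, the pod `web-0`, and its images are hypothetical.

```python
# Waiting reasons covered in this article.
IMAGE_PULL_REASONS = {"ErrImagePull", "ImagePullBackOff"}

def image_pull_problems(pod):
    """Return (container, image, reason) for containers stuck on an image pull."""
    problems = []
    status = pod.get("status", {})
    statuses = (status.get("initContainerStatuses", [])
                + status.get("containerStatuses", []))
    for container in statuses:
        waiting = container.get("state", {}).get("waiting")
        if waiting and waiting.get("reason") in IMAGE_PULL_REASONS:
            problems.append((container["name"], container.get("image"),
                             waiting["reason"]))
    return problems

# Hypothetical pod with one container that can't pull its image.
pod = {
    "metadata": {"name": "web-0"},
    "status": {
        "phase": "Pending",
        "containerStatuses": [
            {"name": "web", "image": "example.com/web:typo",
             "state": {"waiting": {"reason": "ImagePullBackOff"}}},
            {"name": "sidecar", "image": "example.com/sidecar:1.0",
             "state": {"running": {}}},
        ],
    },
}

print(image_pull_problems(pod))
```

The image name in the result is the first thing to check against the four causes listed above.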

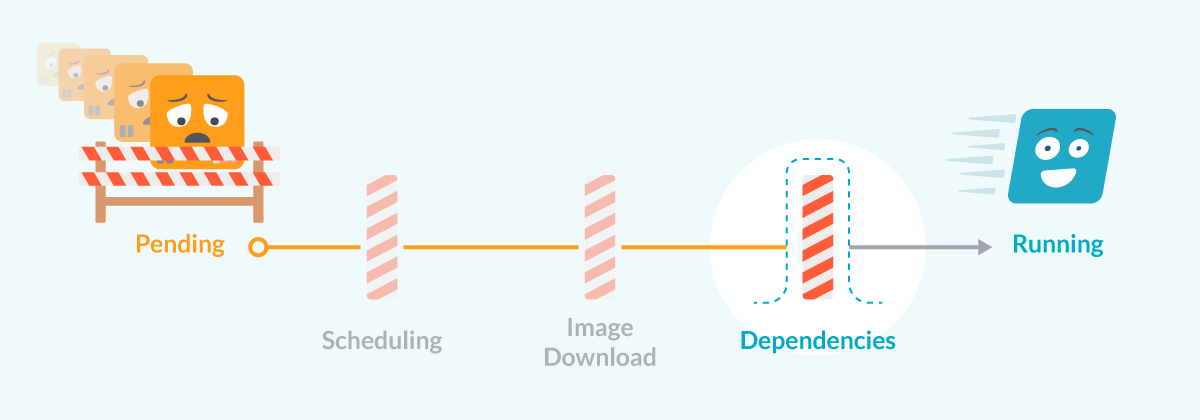

Kubernetes Pod pending due to dependency problems

Before the pod starts, kubelet will try to check all the dependencies with other Kubernetes elements. If one of these dependencies can’t be met, the pod will be kept in a pending state until the dependencies are met.

In this case, kubectl will show the pod like this:

    $ kubectl -n mysql get pods
    NAME      READY   STATUS              RESTARTS   AGE
    mysql-0   0/1     ContainerCreating   0          97s

And in the events, we can see something like this:

    Events:
      Type     Reason       Age                  From               Message
      ----     ------       ----                 ----               -------
      Normal   Scheduled    3m19s                default-scheduler  Successfully assigned mysql/mysql-0 to ip-172-20-38-115.eu-west-1.compute.internal
      Warning  FailedMount  76s                  kubelet            Unable to attach or mount volumes: unmounted volumes=[config], unattached volumes=[kube-api-access-gxjf8 data config]: timed out waiting for the condition
      Warning  FailedMount  71s (x9 over 3m19s)  kubelet            MountVolume.SetUp failed for volume "config" : configmap "mysql" not found

The Message column will provide you with enough information to be able to pinpoint the missing element. The usual causes are:

- A config map or a secret hasn’t been created, or the name provided is incorrect.

- A volume can’t be mounted in the node because it hasn’t been released yet by another node. This happens especially when updating a StatefulSet, because the new pod must mount the same volume as the old pod.
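The first cause can be caught before deploying by comparing the volumes in the pod spec with the config maps and secrets that exist in the namespace. Here is a minimal sketch, assuming the pod is parsed from JSON or YAML and the existing names are passed in as sets; the function name and the `data-mysql-0` claim name are made up, and only volume sources are checked (references from environment variables are out of scope).

```python
def missing_volume_sources(pod, config_maps, secrets):
    """Return (kind, name) for volume sources the pod needs but that don't exist."""
    missing = []
    for volume in pod.get("spec", {}).get("volumes", []):
        config_map = volume.get("configMap")
        # Sources marked optional don't block the pod, so they are skipped.
        if (config_map and not config_map.get("optional")
                and config_map.get("name") not in config_maps):
            missing.append(("ConfigMap", config_map.get("name")))
        secret = volume.get("secret")
        if (secret and not secret.get("optional")
                and secret.get("secretName") not in secrets):
            missing.append(("Secret", secret.get("secretName")))
    return missing

# Trimmed-down, partly hypothetical spec modeled on the mysql-0 pod above.
pod = {
    "metadata": {"namespace": "mysql", "name": "mysql-0"},
    "spec": {
        "volumes": [
            {"name": "config", "configMap": {"name": "mysql"}},
            {"name": "data", "persistentVolumeClaim": {"claimName": "data-mysql-0"}},
        ],
    },
}

# Names that exist in the namespace (assumed for the example).
print(missing_volume_sources(pod, config_maps={"kube-root-ca.crt"}, secrets=set()))
# [('ConfigMap', 'mysql')]
```

An empty result means every required config map and secret referenced by a volume is present; the second cause (a volume still attached to another node) can't be detected this way and still requires checking the events.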

Conclusion

Understanding why a pod is kept in the Pending phase is key to safely deploying and updating workloads in Kubernetes. Being able to quickly locate the issue and let the deployment progress will save you some headaches and reduce downtime.

Monitor Kubernetes and troubleshoot issues up to 10x faster

Sysdig can help you monitor and troubleshoot your Kubernetes cluster with the out-of-the-box dashboards included in Sysdig Monitor. Advisor, a tool integrated in Sysdig Monitor, accelerates troubleshooting of your Kubernetes clusters and their workloads by up to 10x.
