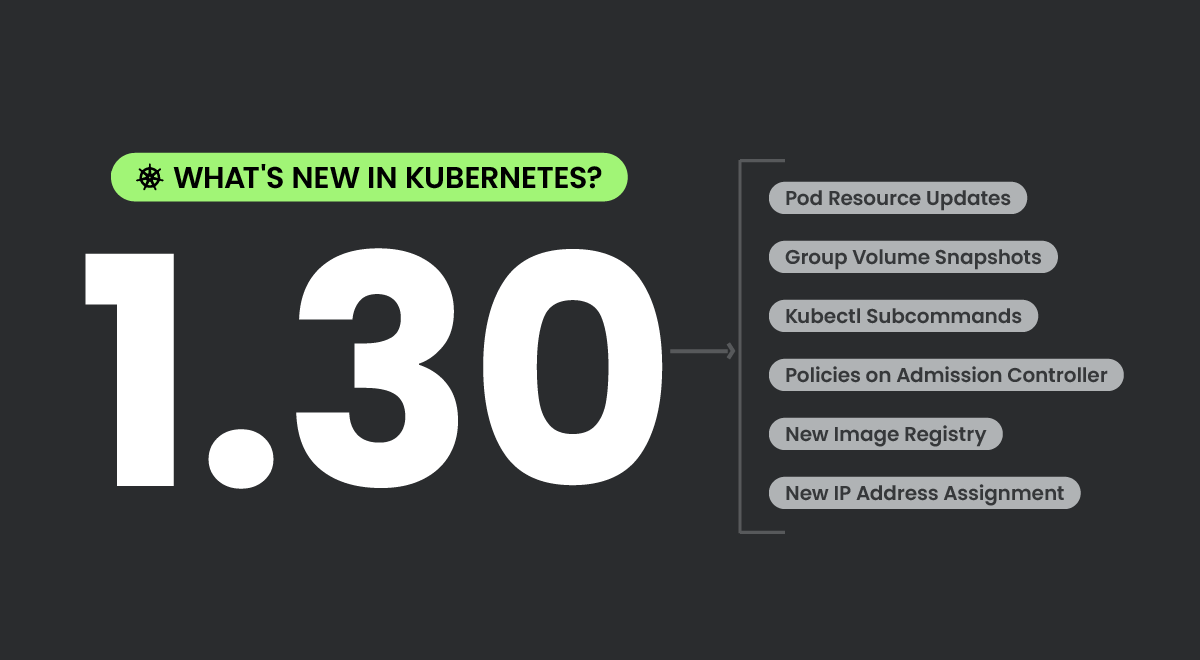

Kubernetes 1.30 is on the horizon, and it's packed with fresh and exciting features! So, what's new in this upcoming release?

Kubernetes 1.30 brings a plethora of enhancements: 58 new and improved features in all. Of these, several are graduating to stable, including the highly anticipated Container Resource Based Pod Autoscaling, which refines the Horizontal Pod Autoscaler by letting it scale on the metrics of individual containers. New alpha features are also making their debut, promising to change how resources are managed and allocated within clusters.

Watch out for major changes such as the introduction of Structured Parameters for Dynamic Resource Allocation, enhancing the previously introduced dynamic resource allocation with a more structured and understandable approach. This ensures that Kubernetes components can make more informed decisions, reducing dependency on third-party drivers.

Further enhancing security, support for User Namespaces in Pods moves to beta. Running pods in their own user namespaces, with customizable UID/GID ranges, offers stronger isolation and better protection against vulnerabilities, bolstering pod security.

There are also numerous quality-of-life improvements that continue the trend of making Kubernetes more user-friendly and efficient, such as updates in pod resource management and network policies.

We are buzzing with excitement for this release! There's plenty to unpack here, so let's dive deeper into what Kubernetes 1.30 has to offer.

Kubernetes 1.30 – Editor's pick

These are the features that look most exciting to us in this release:

#2400 Node Memory Swap Support

This enhancement sees the most significant overhaul, improving system stability by modifying swap memory behavior on Linux nodes to better manage memory usage and system performance. By optimizing how swap memory is handled, Kubernetes can ensure smoother operation of applications under various load conditions, thereby reducing system crashes and enhancing overall reliability.

Nigel Douglas – Sr. Open Source Security Advocate (Falco Security)

#3221 Structured Authorization Configuration

This enhancement also hits beta, streamlining the creation of authorization chains with enhanced capabilities like multiple webhooks and fine-grained control over request validation, all configured through a structured file. By allowing for complex configurations and precise authorization mechanisms, this feature significantly enhances security and administrative efficiency, making it easier for administrators to enforce policy compliance across the cluster.
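To make "authorization chain" concrete, here is a simplified Python model of how a chain of authorizers is consulted: each one answers allow, deny, or no opinion, the first definitive answer wins, and a request nobody approves is rejected. This is a sketch, not the API server's code; the two webhook functions and the request fields are hypothetical stand-ins.

```python
from enum import Enum
from typing import Callable, Dict, List

class Decision(Enum):
    ALLOW = "allow"
    DENY = "deny"
    NO_OPINION = "no-opinion"

# An authorizer is modeled as a function from a request (a plain dict) to a Decision.
Authorizer = Callable[[Dict[str, str]], Decision]

def authorize(chain: List[Authorizer], request: Dict[str, str]) -> Decision:
    """Consult authorizers in order; the first ALLOW or DENY wins."""
    for authorizer in chain:
        decision = authorizer(request)
        if decision is not Decision.NO_OPINION:
            return decision
    # No authorizer approved the request, so it is rejected.
    return Decision.DENY

# Hypothetical stand-ins for two webhooks followed by RBAC.
def deny_secrets_webhook(req: Dict[str, str]) -> Decision:
    return Decision.DENY if req["resource"] == "secrets" else Decision.NO_OPINION

def tenant_webhook(req: Dict[str, str]) -> Decision:
    return Decision.ALLOW if req["user"].startswith("tenant-a:") else Decision.NO_OPINION

def rbac(req: Dict[str, str]) -> Decision:
    return Decision.ALLOW if req["user"] == "admin" else Decision.NO_OPINION

chain = [deny_secrets_webhook, tenant_webhook, rbac]
```

Because the deny webhook sits first, even `admin` cannot read secrets in this chain. In the structured configuration file, each webhook entry can also carry CEL match conditions and a failure policy; in this model those would be extra checks inside the loop before an authorizer is called.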

Mike Coleman – Staff Developer Advocate – Open Source Ecosystem

#3488 CEL for Admission Control

The integration of Common Expression Language (CEL) for admission control introduces a dynamic method to enforce complex, fine-grained policies directly through the Kubernetes API, enhancing both security and governance capabilities. This improvement enables administrators to craft policies that are not only more nuanced but also responsive to the evolving needs of their deployments, thereby ensuring that security measures keep pace with changes without requiring extensive manual updates.

Thomas Labarussias – Sr. Developer Advocate & CNCF Ambassador

Apps in Kubernetes 1.30

#4443 More granular failure reason for Job PodFailurePolicy

Stage: Net New to Alpha

Feature group: sig-apps

Currently, when a PodFailurePolicy rule fails a Job, the Job's failure condition gets the generic "PodFailurePolicy" reason. This enhancement proposes making that reason more specific by adding a customizable Reason field to the PodFailurePolicyRule, so that each rule can record a distinct, machine-readable reason when it fires, subject to character limits. This approach, preferred for its clarity, would let higher-level APIs built on Jobs respond more precisely to failures, particularly by associating them with specific container exit codes.
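A rough Python model of the idea: rules are checked in order against a container's exit code (mirroring the existing `onExitCodes` operator and values), and a matching `FailJob` rule reports its own reason instead of the generic one. The `reason` attribute is a hypothetical field modeled on the proposal as described above, not a confirmed API.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class PodFailurePolicyRule:
    action: str                    # "FailJob", "Ignore", ...
    operator: str                  # "In" or "NotIn", as in onExitCodes
    values: List[int]              # container exit codes to compare against
    reason: Optional[str] = None   # hypothetical per-rule reason from the proposal

def first_matching_rule(rules: List[PodFailurePolicyRule],
                        exit_code: int) -> Optional[PodFailurePolicyRule]:
    """Rules are evaluated in order; the first match decides."""
    for rule in rules:
        listed = exit_code in rule.values
        if (rule.operator == "In" and listed) or (rule.operator == "NotIn" and not listed):
            return rule
    return None

def job_failure_reason(rules: List[PodFailurePolicyRule], exit_code: int) -> Optional[str]:
    """Reason for the Job's failure condition, or None if no FailJob rule matched."""
    rule = first_matching_rule(rules, exit_code)
    if rule is None or rule.action != "FailJob":
        return None
    # Without a custom reason, every rule collapses into the generic one.
    return rule.reason or "PodFailurePolicy"

rules = [
    PodFailurePolicyRule("Ignore", "In", [137]),
    PodFailurePolicyRule("FailJob", "In", [42], reason="InvalidInput"),
    PodFailurePolicyRule("FailJob", "NotIn", [0, 1]),
]
```

With these rules, exit code 42 fails the Job with `InvalidInput`, while any other unexpected code falls back to the generic reason, which is exactly the ambiguity the proposal wants to remove.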

#3017 PodHealthyPolicy for PodDisruptionBudget

Stage: Graduating to Stable

Feature group: sig-apps

Pod Disruption Budgets (PDBs) are utilized for two main reasons: to maintain availability by limiting voluntary disruptions and to prevent data loss by avoiding eviction until critical data replication is complete. However, the current PDB system has limitations. It sometimes prevents eviction of unhealthy pods, which can impede node draining and auto-scaling.

Additionally, the use of PDBs for data safety is not entirely reliable and could be considered a misuse of the API. Despite these issues, the dependency on PDBs for data protection is significant enough that any changes to PDBs must continue to support this requirement, as Kubernetes does not offer alternative solutions for this use case. The goals are to refine PDBs to avoid blocking evictions due to unhealthy pods and to preserve their role in ensuring data safety.
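As we understand it, the API that came out of this KEP is a `spec.unhealthyPodEvictionPolicy` field on the PDB with two values, `IfHealthyBudget` (the default) and `AlwaysAllow`. The function below is a simplified sketch of the resulting eviction decision for a Running pod, not the eviction API's actual code.

```python
def can_evict(pod_ready: bool, policy: str, current_healthy: int,
              desired_healthy: int, disruptions_allowed: int) -> bool:
    """Simplified eviction check for a Running pod guarded by a PDB."""
    if pod_ready:
        # Healthy pods always spend disruption budget.
        return disruptions_allowed > 0
    if policy == "AlwaysAllow":
        # Running-but-not-ready pods can always be evicted, unblocking drains.
        return True
    if policy == "IfHealthyBudget":
        # Default: evict unhealthy pods only while the app is not already disrupted.
        return current_healthy >= desired_healthy
    raise ValueError(f"unknown unhealthyPodEvictionPolicy: {policy!r}")

# minAvailable: 3 with one replica stuck Running but never Ready.
stuck = dict(pod_ready=False, current_healthy=2, desired_healthy=3, disruptions_allowed=0)
print(can_evict(policy="IfHealthyBudget", **stuck))  # False: the node drain is blocked
print(can_evict(policy="AlwaysAllow", **stuck))      # True
```

`AlwaysAllow` addresses the drain and autoscaling problem, while keeping `IfHealthyBudget` as the default preserves the behavior that workloads relying on PDBs for data safety expect.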

#3998 Job Success/completion policy

Stage: Net New to Alpha

Feature group: sig-apps

This Kubernetes 1.30 enhancement offers an extension to the Job API, specifically for Indexed Jobs, allowing them to be declared as successful based on predefined conditions. This change addresses the need in certain batch workloads, like those using MPI or PyTorch, where success is determined by the completion of specific "leader" indexes rather than all indexes.

Currently, a job is only marked as complete if every index succeeds, which is limiting for some applications. By introducing a success policy, which is already implemented in third-party frameworks like the Kubeflow Training Operator, Flux Operator, and JobSet, Kubernetes aims to provide more flexibility. This enhancement would enable the system to terminate any remaining pods once the job meets the criteria specified by the success policy.
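The alpha API, as we understand it, expresses this as `successPolicy.rules`, each with `succeededIndexes` (an index list such as `"0,2-4"`), `succeededCount`, or both; the Job is declared successful once any rule is satisfied. Here is a Python sketch of evaluating a single rule, offered as an illustration rather than the Job controller's implementation.

```python
from typing import Optional, Set

def parse_indexes(spec: str) -> Set[int]:
    """Parse an index list such as "0,2-4" into {0, 2, 3, 4}."""
    result: Set[int] = set()
    for part in spec.split(","):
        if "-" in part:
            first, last = part.split("-")
            result.update(range(int(first), int(last) + 1))
        else:
            result.add(int(part))
    return result

def rule_satisfied(succeeded: Set[int], succeeded_indexes: Optional[str] = None,
                   succeeded_count: Optional[int] = None) -> bool:
    """Evaluate one success-policy rule against the set of succeeded indexes."""
    if succeeded_indexes is None and succeeded_count is None:
        raise ValueError("a rule needs succeededIndexes, succeededCount, or both")
    if succeeded_indexes is None:
        return len(succeeded) >= succeeded_count
    wanted = parse_indexes(succeeded_indexes)
    if succeeded_count is None:
        # Every listed index must have succeeded.
        return wanted <= succeeded
    # At least succeeded_count of the listed indexes must have succeeded.
    return len(wanted & succeeded) >= succeeded_count
```

For an MPI-style Job whose leader runs at index 0, a rule with `succeeded_indexes="0"` is met as soon as the leader finishes, regardless of the workers.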

CLI in Kubernetes 1.30

#4292 Custom profile in kubectl debug

Stage: Net New to Alpha

Feature group: sig-cli

This merged enhancement adds the --custom flag to kubectl debug, letting users customize the debug resources it creates. This enhancement to 'kubectl debug' is set to significantly improve the security posture of operations teams.

Historically, the absence of a shell in base images posed a challenge for real-time debugging, which discouraged some teams from using these secure, minimalistic containers. Now, with the ability to attach data volumes within a debug container, end-users are enabled to perform in-depth analysis and troubleshooting without compromising on security.

This capability promises to make the use of shell-less base images more appealing by simplifying the debugging process.
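The custom profile is, to our understanding, a partial container spec in JSON that kubectl merges into the debug container it creates. The snippet below generates one that mounts an existing pod volume read-only; the volume name `app-data`, the mount path, and the environment variable are placeholders for illustration.

```python
import json

def debug_profile(volume_name: str, mount_path: str) -> str:
    """Return a partial container spec (JSON) for `kubectl debug --custom`."""
    partial_container = {
        # Mount an existing pod volume so its data can be inspected.
        "volumeMounts": [
            {"name": volume_name, "mountPath": mount_path, "readOnly": True}
        ],
        # Placeholder variable; any env entries are merged the same way.
        "env": [{"name": "DEBUG_SESSION", "value": "true"}],
    }
    return json.dumps(partial_container, indent=2)

profile_json = debug_profile("app-data", "/data")
print(profile_json)
```

Saved as `custom-profile.json`, it would be used along the lines of `kubectl debug -it my-pod --image=busybox --custom=custom-profile.json`, where `my-pod` is a placeholder and the volume must already be defined in that pod.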

#2590 Add subresource support to kubectl

Stage: Graduating to Stable

Feature group: sig-cli

The proposal introduces a new --subresource=[subresource-name] flag for the kubectl commands get, patch, edit, and replace.

This enhancement will enable users to access and modify status and scale subresources for all compatible API resources, including both built-in resources and Custom Resource Definitions (CRDs). The output for status subresources will be displayed in a formatted table similar to the main resource.

This feature follows the same API conventions as full resources, allowing expected reconciliation behaviors by controllers. However, if the flag is used on a resource without the specified subresource, a 'NotFound' error message will be returned.
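Under the hood, a subresource is just an extra path segment on the resource's REST URL, the same endpoint controllers use. This small helper, with example resource names, builds those paths to show what a command like `kubectl get deployment nginx --subresource=scale` addresses. It illustrates the URL convention only and is not kubectl code.

```python
from typing import Optional

def subresource_path(group: str, version: str, resource: str, name: str,
                     subresource: str, namespace: Optional[str] = None) -> str:
    """Build the REST path of a subresource such as status or scale."""
    # The core group ("") lives under /api, named groups under /apis.
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    scope = f"/namespaces/{namespace}" if namespace else ""  # cluster-scoped if None
    return f"{prefix}{scope}/{resource}/{name}/{subresource}"

print(subresource_path("apps", "v1", "deployments", "nginx", "scale", "default"))
```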

#3895 Interactive flag added to kubectl delete command

Stage: Graduating to Stable

Feature group: sig-cli

This proposal suggests introducing an interactive mode for the kubectl delete command to enhance safety measures for cluster administrators against accidental deletions of critical resources.

The kubectl delete command is powerful and permanent, presenting risks of unintended consequences from errors such as mistyping or hasty decisions. To address the potential for such mishaps without altering the default behavior due to backward compatibility concerns, the proposal recommends a new interactive (-i) flag.

This flag would prompt users for confirmation before executing the deletion, providing an additional layer of protection and decision-making opportunity to prevent accidental removal of essential resources.
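The safety pattern behind `-i` is simple: show what is about to be removed, then proceed only on an explicit yes. Here is a Python sketch of that pattern; it is not kubectl's implementation, and the prompt wording is our own.

```python
from typing import Callable, List

def confirm_delete(names: List[str], ask: Callable[[str], str] = input) -> List[str]:
    """Return the resources to delete; nothing is deleted without an explicit 'y'."""
    if not names:
        return []
    listing = "\n".join(f"  {name}" for name in names)
    answer = ask(f"You are about to delete:\n{listing}\nDo you want to continue? (y/n) ")
    # Anything but "y" (an empty line, a typo, "yes please") aborts.
    return names if answer.strip().lower() == "y" else []
```

Defaulting to "abort" on any unexpected input is the point: a stray Enter key should never delete anything.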

Instrumentation

#647 API Server tracing

Stage: Graduating to Stable

Feature group: sig-instrumentation

This Kubernetes 1.30 enhancement aims to improve debugging through enhanced tracing in the API Server, utilizing OpenTelemetry libraries for structured, detailed trace data. It seeks to facilitate easier analysis by enabling distributed tracing, which allows for comprehensive insight into requests and context propagation.

The proposal outlines goals to generate and export trace data for requests, alongside propagating context between incoming and outgoing requests, thus enhancing debugging capabilities and enabling plugins like admission webhooks to contribute to trace data for a fuller understanding of request paths.
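Context propagation between incoming and outgoing requests typically rides on the W3C Trace Context `traceparent` header (`version-traceid-parentid-flags`), which is OpenTelemetry's default propagation format. The hand-rolled Python sketch below shows the core rule: keep the caller's trace ID, mint a new span ID. Real code would use the OpenTelemetry SDK rather than this hypothetical helper.

```python
import re
import secrets

# version 00, 32-hex trace id, 16-hex parent (span) id, 2-hex flags
TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")

def child_traceparent(incoming: str) -> str:
    """traceparent for an outgoing call made while handling `incoming`."""
    match = TRACEPARENT.match(incoming)
    if match is None:
        # No valid parent: start a new trace, marked as sampled.
        trace_id, flags = secrets.token_hex(16), "01"
    else:
        trace_id, _parent_id, flags = match.groups()
    # Same trace, new span: downstream work nests under this request.
    span_id = secrets.token_hex(8)
    return f"00-{trace_id}-{span_id}-{flags}"

print(child_traceparent("00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01"))
```

This is what lets an admission webhook's spans appear in the same trace as the API server request that called it.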

#2305 Metric cardinality enforcement

Stage: Graduating to Stable

Feature group: sig-instrumentation

This enhancement addresses the issue of unbounded metric dimensions causing memory problems in instrumented components by introducing a dynamic, runtime-configurable allowlist for metric label values.

Historically, the Kubernetes community has dealt with problematic metrics through various inconsistent approaches, including deleting offending labels or metrics entirely, or defining a retrospective set of acceptable values. These fixes are manual, labor-intensive, and time-consuming, lacking a standardized solution.

This enhancement aims to remedy this by allowing metric dimensions to be bound to a predefined set of values independently of code releases, streamlining the process and preventing memory leaks without necessitating immediate binary releases.
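Here is a minimal Python sketch of a label-value allowlist. In Kubernetes components this is, to our understanding, configured with the `--allow-metric-labels` flag (for example `metric1,label1='v1,v2,v3'`), and values outside the list are recorded under a placeholder, `unexpected`. The class below is hypothetical and only models that behavior.

```python
from collections import Counter
from typing import Set

class AllowlistedCounter:
    """Counter with one label whose values are bounded by a runtime allowlist."""

    def __init__(self, label: str, allowed_values: Set[str]):
        self.label = label
        self.allowed_values = set(allowed_values)
        self.series: Counter = Counter()

    def set_allowlist(self, allowed_values: Set[str]) -> None:
        # Reconfigurable at runtime, without shipping a new binary.
        self.allowed_values = set(allowed_values)

    def inc(self, value: str) -> None:
        # Unlisted values share one placeholder series, capping cardinality.
        key = value if value in self.allowed_values else "unexpected"
        self.series[key] += 1

requests = AllowlistedCounter("code", {"200", "404", "500"})
for code in ["200", "200", "404", "418", "user-1234"]:
    requests.inc(code)
```

However many distinct values arrive, the metric can never hold more than the allowlist size plus one series.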

#3077 Contextual Logging

Stage: Graduating to Beta

Feature group: sig-instrumentation

This contextual logging proposal introduces a shift from using a global logger to passing a logr.Logger instance through functions, either via a context.Context or directly, leveraging the benefits of structured logging. This method allows callers to enrich log messages with key/value pairs, specify names indicating the logging component or operation, and adjust verbosity to control the volume of logs generated by the callee.

The key advantage is that this is achieved without needing to feed extra information to the callee, as the necessary details are encapsulated within the logger instance itself.

Furthermore, it liberates third-party components utilizing Kubernetes packages like client-go from being tethered to the klog logging framework, enabling them to adopt any logr.Logger implementation and configure it to their preferences. For unit testing, this model facilitates isolating log output per test case, enhancing traceability and analysis.

The primary goal is to eliminate klog's direct API calls and its mandatory adoption across packages, empowering function callers with logging control, and minimally impacting public APIs while providing guidance and tools for integrating logging into unit tests.
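In Kubernetes this pattern is Go code built on `logr.Logger` (`WithValues`, `WithName`, `V(level)`). The Python analogue below only illustrates the idea: the caller attaches names and key/value pairs, and the callee logs through whatever logger it was handed. `ContextLogger` is a hypothetical class, not part of any Kubernetes or logr API.

```python
from dataclasses import dataclass, field, replace
from typing import Any, Callable, Dict

@dataclass(frozen=True)
class ContextLogger:
    """Hypothetical Python stand-in for a logr.Logger passed to callees."""
    sink: Callable[[str], None] = print
    name: str = ""
    values: Dict[str, Any] = field(default_factory=dict)
    verbosity: int = 0

    def with_name(self, name: str) -> "ContextLogger":
        return replace(self, name=f"{self.name}/{name}" if self.name else name)

    def with_values(self, **kv: Any) -> "ContextLogger":
        return replace(self, values={**self.values, **kv})

    def info(self, msg: str, v: int = 0, **kv: Any) -> None:
        if v > self.verbosity:
            return  # too verbose for this logger's configured level
        pairs = " ".join(f"{k}={val!r}" for k, val in {**self.values, **kv}.items())
        self.sink(f"{self.name}: {msg} {pairs}".rstrip())

def sync_volumes(logger: ContextLogger) -> None:
    # The callee knows nothing about pods or components; the caller
    # already attached that context to the logger it passed in.
    logger.info("mounting volumes")
    logger.info("checking mount points", v=4, step="verify")

sync_volumes(ContextLogger().with_name("kubelet").with_values(pod="web-0"))
```

Swapping `sink` for a per-test list is the unit-testing benefit described above: each test case captures only its own output.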

Network in Kubernetes 1.30

#3458 Remove transient node predicates from KCCM's service controller

Stage: Graduating to Stable

Feature group: sig-network

To mitigate hasty disconnection of services and to minimize the load on cloud providers' APIs, a new proposal suggests a change in how the Kubernetes cloud controller manager (KCCM) interacts with load balancer node sets.

This enhancement aims to discontinue the practice of immediate node removal when nodes temporarily lose readiness or are being terminated. Instead, by introducing the StableLoadBalancerNodeSet feature gate, it would promote a smoother transition by enabling connection draining, allowing applications to benefit from graceful shutdowns and reducing unnecessary load balancer re-syncs. This change is aimed at enhancing application reliability without overburdening cloud provider systems.
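A simplified Python model of which nodes the service controller hands to the cloud load balancer, with and without a stable node set. The predicates are reduced to the cases discussed above; the exclusion label is the well-known `node.kubernetes.io/exclude-from-external-load-balancers`, and the `Node` class is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Well-known label that opts a node out of external load balancers.
EXCLUDE_LABEL = "node.kubernetes.io/exclude-from-external-load-balancers"

@dataclass
class Node:
    name: str
    ready: bool = True
    being_deleted: bool = False   # e.g. marked for removal by the cluster autoscaler
    labels: Dict[str, str] = field(default_factory=dict)

def load_balancer_nodes(nodes: List[Node], stable_node_set: bool) -> List[str]:
    """Nodes handed to the cloud load balancer (simplified predicates)."""
    selected = []
    for node in nodes:
        if EXCLUDE_LABEL in node.labels:
            continue  # explicit opt-out applies in both modes
        if not stable_node_set and (not node.ready or node.being_deleted):
            continue  # legacy: a transient state triggers an immediate re-sync
        selected.append(node.name)
    return selected

nodes = [
    Node("a"),
    Node("b", ready=False),
    Node("c", being_deleted=True),
    Node("d", labels={EXCLUDE_LABEL: "true"}),
]
```

With the stable set, nodes `b` and `c` stay registered, and the load balancer's own health checks decide when to stop sending them traffic, which is what makes connection draining possible.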

#3836 Ingress Connectivity Reliability Improvement for Kube-Proxy

Stage: Graduating to Beta

Feature group: sig-network

This Kubernetes 1.30 enhancement introduces modifications to the Kubernetes cloud controller manager's service controller, specifically targeting the health checks (HC) used by load balancers. These changes aim to improve how these checks interact with kube-proxy, the service proxy managed by Kubernetes. There are three main improvements:

1) Enabling kube-proxy to support connection draining on terminating nodes by failing its health checks when nodes are marked for deletion, particularly useful during cluster downsizing scenarios;

2) Introducing a new /livez health check path in kube-proxy that maintains traditional health check semantics, allowing uninterrupted service during node terminations;

3) Advocating for standardized health check procedures across cloud providers through a comprehensive guide on Kubernetes' official website.

These updates seek to ensure graceful shutdowns of services and improve overall cloud provider integration with Kubernetes clusters, particularly for services routed through nodes marked for termination.
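The split between the two endpoints can be summarized in a few lines. This is a simplified Python model of the semantics described above (kube-proxy serves its health checks on port 10256 by default); the real checks, such as whether proxy rules were synced recently, are more involved, and both function names are ours.

```python
def healthz(proxy_rules_synced: bool, node_terminating: bool) -> int:
    """HTTP status for /healthz under the proposal: also fails on terminating nodes."""
    if not proxy_rules_synced:
        return 503
    # Failing here tells load balancers to drain this node before it disappears.
    return 503 if node_terminating else 200

def livez(proxy_rules_synced: bool) -> int:
    """HTTP status for /livez: traditional semantics, kube-proxy's own health only."""
    return 200 if proxy_rules_synced else 503
```

A load balancer pointed at `/healthz` drains a node marked for deletion, while a liveness probe pointed at `/livez` does not restart a perfectly healthy kube-proxy on that node.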

#1860 Make Kubernetes aware of the LoadBalancer behavior

Stage: Graduating to Beta

Feature group: sig-network

This enhancement is a modification to the kube-proxy configurations for handling External IPs of LoadBalancer Services. Currently, kube-proxy implementations, including ipvs and iptables, automatically bind External IPs to each node for optimal traffic routing directly to services, bypassing the load balancer. This process, while beneficial in some scenarios, poses problems for certain cloud providers like Scaleway and Tencent Cloud, where such binding disrupts inbound traffic from the load balancer, particularly health checks.

Additionally, features like TLS termination and the PROXY protocol implemented at the load balancer level are bypassed, leading to protocol errors. The enhancement suggests making this binding behavior configurable at the cloud controller level, allowing cloud providers to disable or adjust this default setting to better suit their infrastructure and service features, addressing these issues and potentially offering a more robust solution than current workarounds.
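As we understand the resulting API, a cloud controller signals this through an `ipMode` field on each `status.loadBalancer.ingress` entry of the Service: `VIP` (the historical behavior) or `Proxy`. The Python sketch below shows the decision kube-proxy would make from that field; it is an illustration, not kube-proxy code, and the IP addresses are documentation examples.

```python
from typing import Dict, List

def ips_to_bind(ingress: List[Dict[str, str]]) -> List[str]:
    """Load-balancer IPs that kube-proxy should short-circuit on the node."""
    bound = []
    for entry in ingress:
        ip = entry.get("ip")
        # A missing ipMode behaves like "VIP", the historical behavior.
        if ip and entry.get("ipMode", "VIP") == "VIP":
            bound.append(ip)
    return bound

print(ips_to_bind([
    {"ip": "203.0.113.10"},                     # traffic may bypass the LB
    {"ip": "203.0.113.11", "ipMode": "Proxy"},  # always go through the LB
    {"hostname": "lb.example.com"},             # hostname-only: nothing to bind
]))
```

With `Proxy`, in-cluster traffic to that IP actually reaches the load balancer, so its health checks, TLS termination, and PROXY protocol handling apply.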

Kubernetes 1.30 Nodes

#3960 Introducing Sleep Action for PreStop Hook

Stage: Graduating to Beta

Feature group: sig-node

This Kubernetes 1.30 enhancement introduces a 'sleep' action for the PreStop lifecycle hook, offering a simpler, native option for managing container shutdowns.

Instead of relying on scripts or custom solutions for delaying termination, containers could use this built-in sleep to gracefully wrap up operations, easing transitions in load balancing, and allowing external systems to adjust, thereby boosting Kubernetes applications' reliability and uptime.
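In a manifest, the native hook sits at `lifecycle.preStop.sleep.seconds`. The Python helper below builds the same structure as a dict (the shape you would write in YAML). The container name and image are examples, and the positive-duration check is a conservative assumption of this sketch.

```python
import json
from typing import Any, Dict

def with_prestop_sleep(container: Dict[str, Any], seconds: int) -> Dict[str, Any]:
    """Return a copy of a container spec with a native preStop sleep action."""
    if seconds <= 0:
        # This sketch requires a positive duration.
        raise ValueError("sleep duration must be positive")
    lifecycle = dict(container.get("lifecycle", {}))  # keep e.g. postStart hooks
    lifecycle["preStop"] = {"sleep": {"seconds": seconds}}
    return {**container, "lifecycle": lifecycle}

web = with_prestop_sleep({"name": "web", "image": "nginx:1.25"}, seconds=5)
print(json.dumps(web, indent=2))
```

The sleep runs inside the pod's termination grace period (30 seconds by default), so it should stay well below `terminationGracePeriodSeconds`.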

#2400 Node Memory Swap Support

Stage: Major Change to Beta

Feature group: sig-node

The enhancement integrates swap memory support into Kubernetes, addressing two key user groups: node administrators for performance tuning and application developers requiring swap for their apps.

The focus is to facilitate controlled swap use on a node level, with the kubelet enabling Kubernetes workloads to utilize swap space under specific configurations. The ultimate goal is to enhance Linux node operation with swap, allowing administrators to determine swap usage for workloads, initially not permitting individual workloads to set their own swap limits.
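Under the `LimitedSwap` behavior, as we understand the beta design, only containers in Burstable pods get swap, and each receives a share of the node's swap proportional to its memory request: limit = (container memory request / node memory capacity) × node swap capacity. A worked Python version of that formula, as a simplified sketch:

```python
GiB = 1024 ** 3

def limited_swap_limit(qos_class: str, container_memory_request: int,
                       node_memory_capacity: int, node_swap_capacity: int) -> int:
    """Swap bytes a container may use under LimitedSwap (simplified)."""
    if qos_class != "Burstable":
        # BestEffort and Guaranteed pods get no swap in this model.
        return 0
    # Swap is shared out in proportion to the container's memory request.
    return container_memory_request * node_swap_capacity // node_memory_capacity

# A 2 GiB request on a node with 16 GiB of RAM and 8 GiB of swap.
print(limited_swap_limit("Burstable", 2 * GiB, 16 * GiB, 8 * GiB) // GiB)  # 1
```

Tying the limit to the memory request means administrators control swap at the node level, while workloads cannot set their own swap limits, matching the goal described above.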

#24 AppArmor Support

Stage: Graduating to Stable

Feature group: sig-node

Adding AppArmor support to Kubernetes marks a significant enhancement in the security posture of containerized workloads. AppArmor is a Linux kernel security module that lets system administrators restrict a program's capabilities using profiles attached to specific applications or containers. With AppArmor integrated into Kubernetes, developers can now define these security policies directly in their workload specifications.

The initial implementation of this feature would allow for specifying an AppArmor profile within the Kubernetes API for individual containers or entire pods. This profile, once defined, would be enforced by the container runtime, ensuring that the container's actions are restricted according to the rules defined in the profile. This capability is crucial for running secure and confined applications in a multi-tenant environment, where a compromised container could potentially affect other workloads or the underlying host.
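With the stable API, the profile is set through a `securityContext.appArmorProfile` field at pod or container level, with `type` set to `RuntimeDefault`, `Localhost`, or `Unconfined`, and `localhostProfile` naming a profile for `Localhost`. This Python sketch builds such a Pod as a dict; the profile name `k8s-apparmor-example-deny-write` is an example and would have to be loaded on the node already.

```python
from typing import Any, Dict, Optional

VALID_TYPES = {"RuntimeDefault", "Localhost", "Unconfined"}

def apparmor_profile(profile_type: str, localhost_profile: Optional[str] = None) -> Dict[str, str]:
    """Build an appArmorProfile block, checking the type/profile pairing."""
    if profile_type not in VALID_TYPES:
        raise ValueError(f"unknown AppArmor profile type: {profile_type!r}")
    if (profile_type == "Localhost") != (localhost_profile is not None):
        raise ValueError("localhostProfile is required for, and only valid with, type Localhost")
    profile = {"type": profile_type}
    if localhost_profile is not None:
        profile["localhostProfile"] = localhost_profile
    return profile

pod: Dict[str, Any] = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "hello-apparmor"},
    "spec": {
        # Pod-wide default: the container runtime's default profile.
        "securityContext": {"appArmorProfile": apparmor_profile("RuntimeDefault")},
        "containers": [{
            "name": "hello",
            "image": "busybox:1.36",
            "command": ["sh", "-c", "echo hello && sleep 3600"],
            # Container-level override with a node-local profile (example name).
            "securityContext": {
                "appArmorProfile": apparmor_profile("Localhost", "k8s-apparmor-example-deny-write")
            },
        }],
    },
}
```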

Scheduling

#3633 Introduce MatchLabelKeys to Pod Affinity and Pod Anti Affinity

Stage: Graduating to Beta

Feature group: sig-scheduling

This Kubernetes 1.30 enhancement introduces MatchLabelKeys for PodAffinityTerm to refine PodAffinity and PodAntiAffinity, enabling more precise control over Pod placements during scenarios like rolling upgrades.

By allowing users to specify the scope for evaluating Pod co-existence, it addresses scheduling challenges that arise when new and old Pod versions are present simultaneously, particularly in saturated or idle clusters. This enhancement aims to improve scheduling effectiveness and cluster resource utilization.
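Conceptually, for each key in `matchLabelKeys` the scheduler looks up that key on the incoming pod and adds "key in (value)" to the term's label selector; keys the incoming pod lacks are ignored. A simplified Python sketch of that merge (only `In` requirements are modeled; the label values are examples):

```python
from typing import Dict, List, Tuple

# A selector requirement: (label key, allowed values). Only "In" is modeled.
Requirement = Tuple[str, List[str]]

def effective_selector(match_labels: Dict[str, str], match_label_keys: List[str],
                       incoming_pod_labels: Dict[str, str]) -> List[Requirement]:
    """Selector the scheduler evaluates for one PodAffinityTerm (simplified)."""
    requirements = [(key, [value]) for key, value in match_labels.items()]
    for key in match_label_keys:
        if key in incoming_pod_labels:  # keys the incoming pod lacks are ignored
            requirements.append((key, [incoming_pod_labels[key]]))
    return requirements

def selects(requirements: List[Requirement], labels: Dict[str, str]) -> bool:
    return all(labels.get(key) in values for key, values in requirements)

# During a rolling update, only pods of the same ReplicaSet revision count.
incoming = {"app": "web", "pod-template-hash": "7d9f"}
selector = effective_selector({"app": "web"}, ["pod-template-hash"], incoming)
```

Using `pod-template-hash` as the key means old-revision pods no longer count toward affinity or anti-affinity for new-revision pods, which is the rolling-upgrade problem described above.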

#3902 Decouple TaintManager from NodeLifecycleController

Stage: Graduating to Stable

Feature group: sig-scheduling

This enhancement separates the NodeLifecycleController's duties into two distinct controllers. Currently, the NodeLifecycleController is responsible for both marking unhealthy nodes with NoExecute taints and evicting pods from these tainted nodes.

The proposal introduces a dedicated TaintEvictionController specifically for managing the eviction of pods based on NoExecute taints, while the NodeLifecycleController will continue to focus on applying taints to unhealthy nodes. This separation aims to streamline the codebase, allowing for more straightforward enhancements and the potential development of custom eviction strategies.

The motivation behind this change is to untangle the intertwined functionalities, thus improving the system's maintainability and flexibility in handling node health and pod eviction processes.
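The decision the dedicated eviction controller makes for each pod on a NoExecute-tainted node can be sketched in Python. Toleration matching is reduced to taint keys here; real tolerations also match on operator, value, and effect. For context, Kubernetes adds 300-second tolerations for the not-ready and unreachable taints by default.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Toleration:
    key: str
    toleration_seconds: Optional[int] = None  # None means tolerate indefinitely

def eviction_delay(noexecute_taint_keys: List[str],
                   tolerations: List[Toleration]) -> Optional[int]:
    """Seconds until eviction; None means the pod is never evicted."""
    used: List[Toleration] = []
    for key in noexecute_taint_keys:
        matching = [t for t in tolerations if t.key == key]
        if not matching:
            return 0  # an untolerated NoExecute taint means immediate eviction
        used.extend(matching)
    limits = [t.toleration_seconds for t in used if t.toleration_seconds is not None]
    if not limits:
        return None  # every matching toleration is unbounded
    # The shortest bounded toleration wins; non-positive values mean "now".
    return max(0, min(limits))
```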

#3838 Mutable Pod scheduling directives when gated

Stage: Graduating to Stable

Feature group: sig-scheduling

The enhancement introduced in #3521, PodSchedulingReadiness, aimed at empowering external resource controllers – like extended schedulers or dynamic quota managers – to determine the optimal timing for a pod's eligibility for scheduling by the kube-scheduler.

Building on this foundation, the current enhancement seeks to extend the flexibility by allowing mutability in a pod's scheduling directives, specifically node selector and node affinity, under the condition that such updates further restrict the pod's scheduling options. This capability enables external resource controllers to decide not just the timing of scheduling, but also to influence the specific placement of the pod within the cluster.

This approach fosters a new pattern in Kubernetes scheduling, encouraging the development of lightweight, feature-specific schedulers that complement the core functionality of the kube-scheduler without the need for maintaining custom scheduler binaries. This pattern is particularly advantageous for features that can be implemented without the need for custom scheduler plugins, offering a streamlined way to enhance scheduling capabilities within Kubernetes ecosystems.
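For the node selector, "further restrict" boils down to two checks: the pod must still carry scheduling gates, and existing entries may only be added to, never removed or changed. A Python sketch of that validation (node affinity rules are more involved and are not modeled; the gate name is hypothetical):

```python
from typing import Dict, List

def validate_node_selector_update(scheduling_gates: List[str],
                                  old: Dict[str, str], new: Dict[str, str]) -> None:
    """Raise ValueError if a nodeSelector update is not allowed (simplified)."""
    if old == new:
        return
    if not scheduling_gates:
        raise ValueError("nodeSelector may only change while the pod is scheduling-gated")
    for key, value in old.items():
        if new.get(key) != value:
            # Removing or changing an entry does not strictly narrow placement.
            raise ValueError(f"existing nodeSelector entry {key!r} must be kept as-is")

gates = ["example.com/quota-check"]  # hypothetical gate held by an external controller
# Allowed: the controller pins the pod to a zone before removing its gate.
validate_node_selector_update(gates, {"disktype": "ssd"},
                              {"disktype": "ssd", "topology.kubernetes.io/zone": "us-east-1a"})
```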

Kubernetes 1.30 storage

#3141 Prevent unauthorized volume mode conversion during volume restore

Stage: Graduating to Stable

Feature group: sig-storage

This enhancement addresses a potential security gap in Kubernetes' VolumeSnapshot feature by introducing safeguards against unauthorized changes in volume mode during the creation of a PersistentVolumeClaim (PVC) from a VolumeSnapshot.

It outlines a mechanism to ensure that the original volume mode of the PVC is preserved, preventing exploitation through kernel vulnerabilities, while accommodating legitimate backup and restore processes that may require volume mode conversion for efficiency. This approach aims to enhance security without impeding valid backup and restore workflows.
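As we understand the implementation, the VolumeSnapshotContent records the source volume's mode, and the CSI provisioner refuses to create a PVC with a different `volumeMode` unless the content carries the `snapshot.storage.kubernetes.io/allow-volume-mode-change: "true"` annotation, typically set by a trusted backup tool. The function below is a sketch of that check, not the provisioner's code.

```python
from typing import Dict

# Annotation a trusted party sets on a VolumeSnapshotContent to permit conversion.
ALLOW_MODE_CHANGE = "snapshot.storage.kubernetes.io/allow-volume-mode-change"

def restore_allowed(source_volume_mode: str, requested_volume_mode: str,
                    snapshot_content_annotations: Dict[str, str]) -> bool:
    """Should a PVC with requested_volume_mode be provisioned from this snapshot?"""
    if source_volume_mode == requested_volume_mode:
        return True
    # Filesystem <-> Block conversion is refused unless explicitly allowed.
    return snapshot_content_annotations.get(ALLOW_MODE_CHANGE) == "true"
```

Because the annotation lives on the cluster-scoped VolumeSnapshotContent rather than on the namespaced PVC, an ordinary namespace user cannot grant themselves the conversion.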

#1710 Speed up recursive SELinux label change

Stage: Net New to Beta

Feature group: sig-storage

This enhancement details improvements to SELinux integration with Kubernetes, focusing on enhancing security measures for containers running on Linux systems with SELinux in enforcing mode. The proposal outlined how SELinux prevents escaped container users from accessing host OS resources or other containers by assigning unique SELinux contexts to each container and labeling volume contents accordingly.

The proposal also seeks to refine how Kubernetes handles SELinux contexts, offering the option to either set these manually via PodSpec or allow the container runtime to automatically assign them. Key advancements include the ability to mount volumes with specific SELinux contexts using the -o context= option during the first mount to ensure the correct security labeling, as well as recognizing which volume plugins support SELinux.

The motivation behind these changes includes enhancing performance by avoiding extensive file relabeling, preventing space issues on nearly full volumes, and increasing security, especially for read-only and shared volumes. This approach aims to streamline SELinux policy enforcement across Kubernetes deployments, particularly in securing containerized environments against potential security breaches like CVE-2021-25741.
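The speed-up comes from labeling the whole volume at mount time with `-o context=...` instead of walking every file to relabel it. A Python sketch of building that mount option from a pod's SELinux options; the default user, role, and type are typical container values on Fedora/RHEL-style policies and are assumptions of this example.

```python
from dataclasses import dataclass

@dataclass
class SELinuxOptions:
    user: str = "system_u"          # assumed typical defaults
    role: str = "object_r"
    type: str = "container_file_t"
    level: str = ""                 # e.g. "s0:c123,c456", unique per pod

def selinux_mount_option(opts: SELinuxOptions) -> str:
    """Value for `mount -o` that labels the whole volume at mount time."""
    if not opts.level:
        raise ValueError("a level such as 's0:c123,c456' is required")
    label = f"{opts.user}:{opts.role}:{opts.type}:{opts.level}"
    # The level contains a comma, so the value is quoted; otherwise the
    # mount option parser would split it into two options.
    return f'context="{label}"'

print(selinux_mount_option(SELinuxOptions(level="s0:c123,c456")))
```

Since the kernel applies the label to every file on the volume at mount time, no per-file relabeling is needed, which also sidesteps the space issues on nearly full volumes mentioned above.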

#3756 Robust VolumeManager reconstruction after kubelet restart

Stage: Graduating to Stable

Feature group: sig-storage

This enhancement addresses the issues with kubelet's handling of mounted volumes after a restart, where it currently loses track of volumes for running Pods and attempts to reconstruct this state from the API server and the host OS – a process known to be flawed.

It proposes a reworking of this process, essentially a substantial bugfix that impacts significant portions of kubelet's functionality. Due to the scope of these changes, they will be implemented behind a feature gate, allowing users to revert to the old system if necessary. This initiative builds on the foundations laid in KEP 1790, which previously went alpha in v1.26.

The modifications aim to enhance how kubelet, during startup, can better understand how volumes were previously mounted and assess whether any changes are needed. Additionally, it seeks to address issues like those documented in bug #105536, where volumes fail to be properly cleaned up after a kubelet restart, thus improving the overall robustness of volume management and cleanup.

Other enhancements

#1610 Container Resource based Pod Autoscaling

Stage: Graduating to Stable

Feature group: sig-autoscaling

This enhancement extends the Horizontal Pod Autoscaler (HPA) so that it can scale workloads based on the resource usage of individual containers within a pod. Currently, HPA aggregates resource consumption across all containers, which may not be ideal for complex workloads with containers whose resource usage does not scale uniformly.

With the proposed changes, HPA would have the capability to scale more precisely by assessing the resource demands of each container separately.
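The HPA's documented rule is `desiredReplicas = ceil(currentReplicas × currentUtilization / targetUtilization)`, skipped when the ratio is within a tolerance (0.1 by default). With a `ContainerResource` metric, utilization is computed from a single named container. A simplified Python sketch, with made-up usage numbers:

```python
import math
from typing import Dict, List

def desired_replicas(current_replicas: int, pods: List[Dict[str, Dict[str, float]]],
                     container: str, target_utilization: float,
                     tolerance: float = 0.1) -> int:
    """HPA replica count driven by one container's CPU utilization (simplified)."""
    usage = sum(pod[container]["usage"] for pod in pods)
    request = sum(pod[container]["request"] for pod in pods)
    utilization = 100.0 * usage / request    # percent of that container's requests
    ratio = utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas              # close enough: no change
    return math.ceil(current_replicas * ratio)

# Two pods (CPU in millicores): "app" is busy, the "proxy" sidecar mostly idles.
pods = [
    {"app": {"usage": 900, "request": 1000}, "proxy": {"usage": 100, "request": 1000}},
    {"app": {"usage": 900, "request": 1000}, "proxy": {"usage": 100, "request": 1000}},
]
print(desired_replicas(2, pods, "app", target_utilization=60))  # 3
```

Here the `app` container runs at 90% of its request, so the HPA scales to 3. Averaged over both containers, the same pods would sit at 50% utilization, below the 60% target, and the busy container would never trigger a scale-up.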

#2799 Reduction of Secret-based Service Account Tokens

Stage: Graduating to Stable

Feature group: sig-auth

This improvement outlines measures to minimize the reliance on less secure, secret-based service account tokens following the general availability of BoundServiceAccountTokenVolume in Kubernetes 1.22. With this feature, service account tokens are acquired via the TokenRequest API and stored in a projected volume, making the automatic generation of secret-based tokens unnecessary.

This aims to cease the auto-generation of these tokens and remove any that are unused, while still preserving tokens explicitly requested by users. The suggested approach includes modifying the service account control loop to prevent automatic token creation, promoting the use of the TokenRequest API or manually created tokens, and implementing a purge process for unused auto-generated tokens.
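A hypothetical, simplified model of such a purge loop: auto-generated tokens left unused for a clean-up period are first invalidated, then deleted if they stay invalid for another period, while explicitly created tokens are never touched. The one-year period, the action names, and the use of "last use, or creation date if never used" as `last_activity` are all parameters of this sketch, not confirmed controller behavior.

```python
from datetime import date, timedelta
from typing import Optional

# Hypothetical parameter: how long a token may sit unused before each step.
CLEAN_UP_PERIOD = timedelta(days=365)

def legacy_token_action(auto_generated: bool, last_activity: date,
                        invalid_since: Optional[date], today: date,
                        period: timedelta = CLEAN_UP_PERIOD) -> str:
    """Decide what a cleanup loop does with one secret-based token (simplified)."""
    if not auto_generated:
        return "keep"            # tokens users created explicitly are preserved
    if invalid_since is not None:
        # Already invalidated: delete once it has stayed invalid for another period.
        return "delete" if today - invalid_since >= period else "keep-invalid"
    if today - last_activity >= period:
        return "invalidate"      # unused for too long: stop accepting it first
    return "keep"
```

The two-step design gives owners of a forgotten but still-needed token a grace window to notice failures before anything is deleted.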

#4008 CRD Validation Ratcheting

Stage: Graduating to Beta

Feature group: sig-api-machinery

This proposal focuses on improving the usability of Kubernetes by advocating for the "shift left" of validation logic, moving it from controllers to the frontend when possible. Currently, the process of modifying validation for unchanged fields in a Custom Resource Definition (CRD) is cumbersome, often requiring version increments even for minor validation changes. This complexity hinders the adoption of advanced validation features by both CRD authors and Kubernetes developers, as the risk of disrupting user workflows is high. Such restrictions not only degrade user experience but also impede the progression of Kubernetes itself.

For instance, KEP-3937 suggests introducing declarative validation with new format types, which could disrupt existing workflows. The goals of this enhancement are to eliminate the barriers that prevent CRD authors and Kubernetes from both broadening and tightening value validations without causing significant disruptions. The proposal aimed to automate these enhancements for all CRDs in clusters where the feature is enabled, maintaining performance with minimal overhead and ensuring correctness by preventing invalid values according to the known schema.
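The core ratcheting rule is that, on update, a value that did not change is not re-validated, so tightening a schema does not break objects that already exist. A Python sketch over top-level fields only (the real implementation compares values per schema node, including nested objects and lists); the rules and field names are made up for illustration.

```python
from typing import Any, Callable, Dict, List, Optional

Validator = Callable[[Any], bool]

def ratcheting_errors(old: Optional[Dict[str, Any]], new: Dict[str, Any],
                      rules: Dict[str, Validator]) -> List[str]:
    """Fields that fail validation, skipping values unchanged since `old`."""
    errors = []
    for name, is_valid in rules.items():
        if name not in new:
            continue
        unchanged = old is not None and name in old and old[name] == new[name]
        if unchanged:
            continue  # ratchet: pre-existing values are not re-validated
        if not is_valid(new[name]):
            errors.append(name)
    return errors

# The schema was just tightened to replicas <= 10; this object predates that.
rules = {"replicas": lambda v: v <= 10, "image": lambda v: ":" in v}
old = {"replicas": 20, "image": "app:v1"}
print(ratcheting_errors(old, {"replicas": 20, "image": "app:v2"}, rules))  # []
```

Updating only the image succeeds, but changing `replicas` to another out-of-range value, or creating a new object with `replicas: 20`, is still rejected.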

If you liked this, you might want to check out our previous 'What's new in Kubernetes' editions:

- Kubernetes 1.27 – What's new?

- Kubernetes 1.26 – What's new?

- Kubernetes 1.25 – What's new?

- Kubernetes 1.24 – What's new?

- Kubernetes 1.23 – What's new?

- Kubernetes 1.22 – What's new?

- Kubernetes 1.21 – What's new?

- Kubernetes 1.20 – What's new?

- Kubernetes 1.19 – What's new?

- Kubernetes 1.18 – What's new?

- Kubernetes 1.17 – What's new?

- Kubernetes 1.16 – What's new?

- Kubernetes 1.15 – What's new?

- Kubernetes 1.14 – What's new?

- Kubernetes 1.13 – What's new?

- Kubernetes 1.12 – What's new?

Get involved with the Kubernetes project:

- Visit the project homepage.

- Check out the Kubernetes project on GitHub.

- Get involved with the Kubernetes community.

- Meet the maintainers on the Kubernetes Slack.

- Follow @KubernetesIO on Twitter.
